NVIDIA Jetson Xavier - Deep Learning Accelerator

The NVIDIA Deep Learning Accelerator (NVDLA) is a fixed-function engine that accelerates inference on convolutional neural networks (CNNs). This page describes the software available for working with the Deep Learning Accelerator and how to use it in your own projects. To learn more about the engine, its hardware, and the open-source NVDLA project, refer to the NVDLA Processor wiki page.

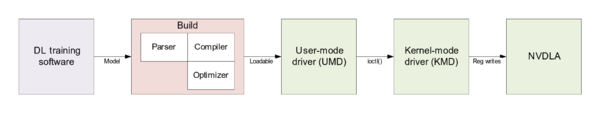

NVDLA software is divided into two groups: the compilation tools (model conversion) and the runtime environment (run-time software that loads and executes networks on NVDLA). The figure above shows the general flow, and each group is described below.

Compilation Tools

Compilation tools include a compiler, parser, and optimizer.

- Parser – Reads a pre-trained Caffe model and creates an intermediate representation to pass to the compiler.

- Compiler – Creates a sequence of hardware layers optimized for an NVDLA configuration.

These steps can be performed offline on a host device, and the resulting loadable model can then be deployed on Xavier's NVDLAs. Knowing the specific NVDLA hardware configuration enables the compiler to further optimize inference. However, the specific NVDLA configuration of the Tegra Xavier is not yet available.

Runtime Environment

The runtime environment involves running a model on compatible NVDLA hardware. It is effectively divided into two layers:

- User Mode Driver – The main interface with user-mode programs. After the neural network is parsed, the compiler compiles it layer by layer and converts it into a file format called an NVDLA Loadable. The user-mode runtime driver loads this loadable and submits inference jobs to the Kernel Mode Driver.

- Kernel Mode Driver – Consists of drivers and firmware that do the work of scheduling layer operations on NVDLA and programming the NVDLA registers to configure each functional block.
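The division of work between the two layers can be pictured with a small toy model. This is only an illustrative sketch, not the NVDLA driver code: the `Loadable`, `UserModeDriver`, and `KernelModeDriver` types and all of their members are hypothetical names invented for this example, and the "register programming" is just a recorded string.

```cpp
#include <cstddef>
#include <string>
#include <utility>
#include <vector>

// Hypothetical stand-in for an NVDLA Loadable: an ordered list of hardware layers.
struct Loadable {
    std::vector<std::string> hardwareLayers;
};

// Toy kernel mode driver: schedules the layers of a job in order and records
// each one, standing in for programming the NVDLA registers.
class KernelModeDriver {
public:
    // Returns the number of layers scheduled for this job.
    std::size_t submit(const Loadable& job) {
        for (const std::string& layer : job.hardwareLayers) {
            programmed_.push_back(layer);
        }
        return job.hardwareLayers.size();
    }

    const std::vector<std::string>& programmed() const { return programmed_; }

private:
    std::vector<std::string> programmed_;
};

// Toy user mode driver: the entry point an application talks to.
class UserModeDriver {
public:
    explicit UserModeDriver(KernelModeDriver& kmd) : kmd_(kmd) {}

    // Takes ownership of a compiled loadable.
    void load(Loadable loadable) {
        loadable_ = std::move(loadable);
        loaded_ = true;
    }

    // Submits the loaded network as one inference job.
    // Returns false if no loadable has been loaded yet.
    bool runInference() {
        if (!loaded_) {
            return false;
        }
        kmd_.submit(loadable_);
        return true;
    }

private:
    KernelModeDriver& kmd_;
    Loadable loadable_;
    bool loaded_ = false;
};
```

In this sketch the application only ever calls `load()` and `runInference()` on the user mode driver; the kernel mode driver is the only component that touches the (simulated) hardware, which mirrors the layering described above.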

Selecting which DLA to use

You can select which of the two DLAs to use with the TensorRT methods IBuilder::setDLACore() (when building an engine) and IRuntime::setDLACore() (when deserializing it).
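A minimal sketch of how the selection might be wrapped is shown here. It assumes a TensorRT release in which both IBuilder and IRuntime expose getNbDLACores() and setDLACore(int); the helper name `selectDlaCore` is hypothetical and not part of TensorRT. The helper is written as a template so that it compiles without the TensorRT headers and works with either interface.

```cpp
#include <stdexcept>

// Hypothetical helper: selects a DLA core on any object that provides
// getNbDLACores() and setDLACore(int), which is the shape of the
// IBuilder and IRuntime interfaces named above.
template <typename BuilderOrRuntime>
void selectDlaCore(BuilderOrRuntime& target, int core) {
    const int available = target.getNbDLACores();
    if (core < 0 || core >= available) {
        // Reject indices outside the range reported by the target.
        throw std::out_of_range("requested DLA core is not available");
    }
    target.setDLACore(core);
}

// Intended use with TensorRT objects (not compiled here; `builder` and
// `runtime` are assumed to be valid IBuilder* and IRuntime* pointers):
//   selectDlaCore(*builder, 0);  // build the engine for DLA core 0
//   selectDlaCore(*runtime, 0);  // deserialize and run it on the same core
```

Checking the index against getNbDLACores() before calling setDLACore() is a design choice of this sketch; it turns an invalid core number into an explicit error rather than relying on how the library handles it.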