User Guide on Controlling the Stitcher


This page provides a basic explanation of the workflow used when working with the stitcher. The stitcher supports the panoramic and the 360 cases, each with its own workflow.

Panoramic workflow

The stitcher plug-in produces panoramic videos with a combined field of view (FOV) of less than 120 degrees. The workflow to obtain panoramic videos is explained in the following sections.

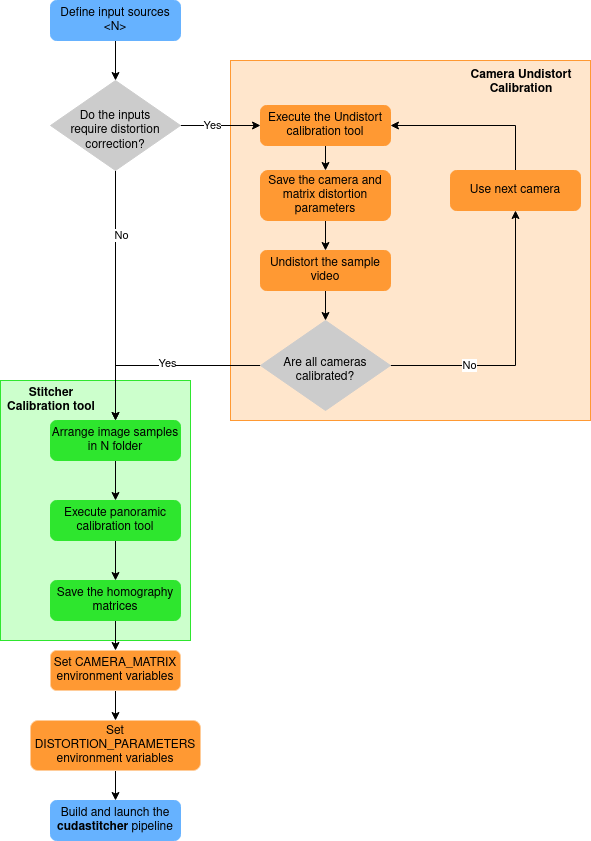

Panoramic workflow diagram

The following diagram provides a visual representation of the workflow needed when using the stitcher, as well as the auxiliary tools required. The workflow overview provides a more detailed written version of the workflow and links to the required wikis.

Panoramic workflow overview

Here are the basic steps and execution order that must be followed to configure the stitcher properly and acquire the parameters for its usage.

- Know your input sources (N).

- Apply distortion correction to the inputs (only if necessary); see CUDA Accelerated GStreamer Camera Undistort Camera Calibration User Guide for more details.

  - Run the calibration tool for each source that requires it.

    - input: Multiple images of a calibration pattern.

    - output: Camera matrix and distortion parameters.

  - Save the camera matrix and distortion parameters for each camera since they will be required to build the pipelines.

  - Repeat until every input has been corrected.

- Calculate all (N-1) homographies between pairs of adjacent images; see Image Stitching Calibration User Guide for more details.

  - Apply an undistort pipeline to the video samples (only if necessary).

  - Arrange the sample images for each camera in folders.

  - Run the calibration tool for camera image samples.

    - input: N-1 folders with image samples from each camera.

    - output: Homography matrices that describe the transformation between input sources.

  - Save the JSON file with the homography matrices; they will be required in the next steps.

- Build and launch the stitcher pipeline; see Image Stitching GStreamer Pipeline Examples.
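To make the homography step more concrete, here is a minimal sketch of what "(N-1) homographies between adjacent images" means in practice. It assumes the sources are indexed in order across the panorama and uses an illustrative matrix; the function names are hypothetical, and the matrix is not the output or file format of the calibration tool.

```python
def adjacent_pairs(n_sources):
    """Return the (N-1) index pairs of neighboring sources: (0, 1), (1, 2), ..."""
    return [(i, i + 1) for i in range(n_sources - 1)]


def apply_homography(h, x, y):
    """Map pixel (x, y) through a 3x3 homography given as nested lists."""
    xp = h[0][0] * x + h[0][1] * y + h[0][2]
    yp = h[1][0] * x + h[1][1] * y + h[1][2]
    w = h[2][0] * x + h[2][1] * y + h[2][2]
    # Divide by the homogeneous coordinate to get back to pixel coordinates.
    return xp / w, yp / w


# Illustrative matrix only (an assumption): a pure 100-pixel horizontal shift.
H_SHIFT = [[1.0, 0.0, 100.0],
           [0.0, 1.0, 0.0],
           [0.0, 0.0, 1.0]]

print(adjacent_pairs(3))                      # [(0, 1), (1, 2)]
print(apply_homography(H_SHIFT, 10.0, 20.0))  # (110.0, 20.0)
```

With three sources there are two adjacent pairs, so two homographies have to be calibrated and saved.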

360 stitching workflow

The stitcher plug-in can produce 360 videos when it is combined with the RidgeRun Image Projector plug-in. The workflow to obtain 360 videos is explained in the following sections.

360 workflow diagram

The following diagram provides a visual representation of the workflow needed when using the stitcher and the projector, as well as the auxiliary tools required. The workflow overview provides a more detailed written version of the workflow and links to the required wikis.

360 workflow overview

RidgeRun's stitcher can create a 360 frame from a group of fisheye cameras. The cameras' combined FOV must cover the full 360° region around them, and the input images must be projected using the equirectangular projection. The RidgeRun Image Projector plug-in provides a GStreamer element that performs the equirectangular projection from an RGBA image.
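As a rough illustration of what an equirectangular image represents, the sketch below maps a viewing direction (longitude, latitude) to pixel coordinates. The axis conventions and the function name are assumptions made for this example; they are not taken from the Image Projector plug-in, which also has to handle the fisheye lens model.

```python
def equirect_pixel(lon_deg, lat_deg, width, height):
    """Map a viewing direction to pixel coordinates in an equirectangular image.

    Assumed convention: longitude in [-180, 180) grows to the right,
    latitude in [-90, 90] grows upward, pixel origin at the top-left corner.
    """
    x = (lon_deg + 180.0) / 360.0 * width
    y = (90.0 - lat_deg) / 180.0 * height
    return x, y


# The direction straight ahead lands in the center of a 3840x1920 frame.
print(equirect_pixel(0.0, 0.0, 3840, 1920))  # (1920.0, 960.0)
```

Because longitude maps linearly to the horizontal axis, the full 360° around the cameras spans the full image width, which is why the combined FOV must cover the whole region.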

The stitcher used to generate 360 frames is the same one used for regular panoramic stitching; the differences are the type of input image and the configuration each case requires. The stitcher can combine any number of inputs; for a 360 solution, however, 2 or 3 fisheye cameras are enough to cover the full 360° area around them. If your setup uses 2 cameras back-to-back, lenses of at least 190 degrees are recommended, even though 180 degrees would be enough to cover the area. The 10 extra degrees give the stitcher a blending zone to work with, which produces smoother transitions between the images. The size of the overlap region depends on your camera array configuration and on the angles of view of the cameras you are using.
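The overlap arithmetic can be sketched as follows. This is a simplified estimate that assumes identical lenses evenly spaced on a horizontal ring and considers only the horizontal plane; the function name is hypothetical.

```python
def overlap_per_seam_deg(n_cameras, lens_fov_deg):
    """Horizontal overlap at each seam, assuming evenly spaced identical cameras."""
    total = n_cameras * lens_fov_deg
    if total < 360.0:
        raise ValueError("the lenses do not cover the full 360 degrees")
    # A ring of N cameras has N seams; the surplus coverage is split among them.
    return (total - 360.0) / n_cameras


print(overlap_per_seam_deg(2, 190.0))  # 10.0
print(overlap_per_seam_deg(2, 180.0))  # 0.0
```

Two back-to-back 190-degree lenses leave a 10-degree blending zone at each seam, while 180-degree lenses cover the area but leave nothing to blend.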

360 Calibration

To work with the stitcher, you need to define a homography configuration, which is simply a description of the relationship and transformation between the images. In the case of fisheye 360 stitching, the projector already performs most of the transformations required to match the images.

For the camera alignment, you need to define the equirectangular properties of each camera, which can be obtained using the Projector Calibrator. The calibration tool will automatically set the roll angle to align the cameras without a horizontal offset, resulting in an identity homography matrix.

One of the challenges of stitching 360 equirectangular videos is the parallax effect: the difference in the apparent position of an object when it is viewed from different points of view and lines of sight. Stitching with no parallax would require all cameras to occupy the same position, which is physically impossible, so perfect stitching cannot be achieved in the real world. You can, however, adjust the projections and the stitching configuration to get a clean blend at a certain depth, that is, at a certain distance from the camera array. Objects closer or farther than that distance will likely still show parallax artifacts. The amount of parallax also depends on the distance between the cameras: small cameras placed close together approximate the ideal setup, in which both cameras occupy the same point, so the effect is small; with bigger cameras placed farther apart, it is more noticeable.
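The dependence of parallax on camera spacing and object distance can be estimated with a simplified geometric model. The sketch below assumes two cameras and an object on the perpendicular bisector of the line joining them; the function names and the example distances are illustrative assumptions, not values from the stitcher.

```python
import math


def subtended_angle_deg(baseline_m, distance_m):
    """Angle between the two cameras' lines of sight to a point at distance_m."""
    return math.degrees(2.0 * math.atan(baseline_m / (2.0 * distance_m)))


def residual_parallax_deg(baseline_m, tuned_distance_m, object_distance_m):
    """Angular mismatch for an object away from the distance the blend was tuned for."""
    return abs(subtended_angle_deg(baseline_m, object_distance_m)
               - subtended_angle_deg(baseline_m, tuned_distance_m))


# Blend tuned for objects 3 m away; compare a 5 cm and a 20 cm camera spacing
# for an object that is only 1 m away.
small = residual_parallax_deg(0.05, 3.0, 1.0)
large = residual_parallax_deg(0.20, 3.0, 1.0)
print(small < large)  # True
```

In this model the mismatch is zero at the tuned distance and grows both with the camera spacing and with how far the object is from that distance.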

Steps

Here are the basic steps and execution order that must be followed to configure the stitcher properly.

- Know your input sources (N) and the cameras' FOV.

- Calculate all (N-1) homographies; see Image Projector Calibration User Guide for more details.

  - Place a sample image from each camera in a folder named after that camera's index.

  - Run the calibration tool for the camera image samples.

    - input: N-1 folders with image samples from each camera.

    - output: Homography matrices that describe the transformation between input sources.

  - Save the JSON file with the homography matrices and camera parameters; they will be required in the next steps.

- Build and launch the stitcher pipeline; see 360 Image GStreamer Pipeline Examples.