V4L2 PCIe - FPGA Image Signal Processing with Xilinx OpenCV and V4L2-FPGA


Introduction to image processing applications using Xilinx FPGAs

In 2017, Xilinx made available a templated library of kernels for accelerating image processing applications on Xilinx FPGAs (xfOpenCV [1]), oriented especially to High-Level Synthesis and SDx applications. This library allows users to create low-latency, high-throughput Image Signal Processing (ISP) pipelines, processing up to 8 pixels per clock for single-channel images. In practical terms, with logic running at 125 MHz, it is possible to sustain 120 fps on a 4K single-channel video sequence, and 30 fps for 32-bit RGBA images.
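The throughput figures above follow from simple arithmetic. An idealized streaming pipeline accepts a fixed number of pixels every clock cycle, so the frame rate is the clock frequency times the pixels per clock, divided by the pixels per frame. The following sketch assumes a 3840x2160 frame and ignores blanking and stalls. `sustained_fps` is a hypothetical helper and is not part of xfOpenCV.

```cpp
#include <cassert>
#include <cstdint>

// Idealized sustained frame rate of a streaming pipeline that accepts
// `pixels_per_clock` pixels on every clock cycle.
// Assumptions: no blanking intervals, no back-pressure stalls.
double sustained_fps(double clock_hz, unsigned pixels_per_clock,
                     std::uint32_t width, std::uint32_t height) {
  assert(pixels_per_clock > 0 && width > 0 && height > 0);
  const double pixels_per_second = clock_hz * pixels_per_clock;
  const double pixels_per_frame = static_cast<double>(width) * height;
  return pixels_per_second / pixels_per_frame;
}
```

For example, `sustained_fps(125e6, 8, 3840, 2160)` gives about 120.6 fps. Under the same model, the 30 fps quoted for 32-bit RGBA corresponds to roughly 2 pixels per clock. That correspondence is our inference from the numbers and is not a figure taken from the xfOpenCV documentation.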

However, interfacing the FPGA with the host computer is not an easy task. RidgeRun offers a wrapper that lets you create your pipeline in a straightforward way, without worrying about the interfacing and the drivers it involves. The wrapper exposes the FPGA input/output ports as V4L2-compliant video devices, ready to use from user-space applications and GStreamer.

In this wiki, we show how to implement a basic image preprocessor interfaced with our V4L2 FPGA wrapper. For more information about the compatible functions, please see List of Xilinx OpenCV functions compatible with V4L2 FPGA.

We kindly invite you to visit our projects: V4L2 FPGA for our wrapper, and FPGA Image Signal Processor for our custom ISP IP cores.

The Preprocessor Design

Generalities

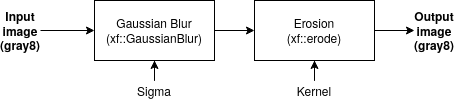

This preprocessor aims to enhance the features detected by a Haar-like cascade classifier implemented in the OpenCV library [2]. It makes the borders and the features that the classifier actually detects more noticeable, which speeds up detection and reduces the time-to-result latency. In this particular case, the preprocessor is composed of three main stages:

1. Histogram Equalization (done in Software)

2. Gaussian Blurring (3x3 kernel)

3. Erosion (5x5 kernel, cross-like kernel)

These stages are connected in cascade: the output of each stage is the input of the next. The incoming image is already in grayscale.

You can find more information in Designing an Embedded Traffic Dynamics Meter: A case study [3].
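Stage 1 runs on the host, so in practice it would typically be `cv::equalizeHist`. As a reference, the following minimal sketch implements textbook CDF-based histogram equalization on an 8-bit grayscale buffer. `equalize_histogram` is a hypothetical name, and its rounding is not guaranteed to match OpenCV's bit for bit.

```cpp
#include <array>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Textbook CDF-based histogram equalization for an 8-bit grayscale image.
// Illustrative software model only; not OpenCV's exact implementation.
std::vector<std::uint8_t> equalize_histogram(const std::vector<std::uint8_t>& img) {
  std::vector<std::uint8_t> out(img.size());
  if (img.empty()) return out;

  // 1. Histogram of the 256 gray levels.
  std::array<std::uint64_t, 256> hist{};
  for (std::uint8_t p : img) ++hist[p];

  // 2. Cumulative distribution function (CDF).
  std::array<std::uint64_t, 256> cdf{};
  std::uint64_t running = 0;
  for (int i = 0; i < 256; ++i) {
    running += hist[i];
    cdf[i] = running;
  }

  // 3. CDF value of the first occupied gray level.
  std::uint64_t cdf_min = 0;
  for (int i = 0; i < 256; ++i) {
    if (cdf[i] != 0) { cdf_min = cdf[i]; break; }
  }

  // 4. Lookup table: stretch the CDF to [0, 255], rounding to nearest.
  const std::uint64_t total = img.size();
  assert(cdf_min > 0 && cdf_min <= total);
  std::array<std::uint8_t, 256> lut{};
  for (int i = 0; i < 256; ++i) {
    if (total == cdf_min) { lut[i] = static_cast<std::uint8_t>(i); continue; }  // flat image
    if (cdf[i] < cdf_min) { lut[i] = 0; continue; }  // level absent from the image
    const std::uint64_t num = cdf[i] - cdf_min;
    const std::uint64_t den = total - cdf_min;
    lut[i] = static_cast<std::uint8_t>((num * 255 + den / 2) / den);
  }

  // 5. Apply the lookup table to every pixel.
  for (std::size_t k = 0; k < img.size(); ++k) out[k] = lut[img[k]];
  return out;
}
```

For the input `{10, 10, 20, 30}`, the sketch maps the three gray levels to 0, 128 and 255, which spreads them across the full 8-bit range.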

xfOpenCV implementation

The implementation in xfOpenCV requires some adjustments to be compatible. The main one is representing matrices as streams, which avoids consuming Block RAM (BRAM).
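A rough sizing sketch shows why streaming saves BRAM. Storing a whole 2048x2048 8-bit frame, the maximum used in this design, takes 4 MiB. A KxK sliding-window filter over a raster-scan stream usually needs only the previous K-1 lines. The following helpers are hypothetical and ignore the small KxK register window. The exact buffering inside each xfOpenCV kernel may differ.

```cpp
#include <cassert>
#include <cstdint>

// Bytes needed to hold a complete frame in on-chip memory.
std::uint64_t frame_buffer_bytes(std::uint64_t width, std::uint64_t height,
                                 std::uint64_t bytes_per_pixel) {
  return width * height * bytes_per_pixel;
}

// Bytes needed by a line buffer for a KxK sliding window over a raster-scan
// stream: only the previous K-1 lines must be kept (window registers ignored).
std::uint64_t line_buffer_bytes(std::uint64_t width, std::uint64_t kernel_size,
                                std::uint64_t bytes_per_pixel) {
  assert(kernel_size >= 1);
  return width * (kernel_size - 1) * bytes_per_pixel;
}
```

Under these assumptions, the 3x3 Gaussian needs about 4 KiB of line buffer and the 5x5 erosion about 8 KiB. That is roughly 500 to 1000 times less than a full frame.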

The points discussed above lead to the following block diagram:

Implementation

The V4L2 FPGA project includes a wrapper which allows you to implement ISP algorithms in C++ (High-Level Synthesis) without worrying about the communication standards, block designs, and drivers. A typical project in V4L2 FPGA looks like:

```cpp
RR_SET_INPUT_PROPERTIES {
  /* Set the input image properties, such as width, height and format */
}

RR_SET_OUTPUT_PROPERTIES {
  /* Set the output image properties, such as width, height and format */
}

RR_MODULE(output_stream, input_stream) {
  /* Your algorithm */
}
```

It is inspired by the SystemC standard, but it simplifies access to the different resources. This keeps your code free of the many directives otherwise needed to define all the external ports.

xfOpenCV implementation

Focusing on the RR_MODULE block, we implement our accelerator by defining a wrapper function called preprocessor_accel. The function is defined outside the RR_MODULE and invoked inside it. It receives the following parameters:

- Input stream (stream_in)

- Output stream (stream_out)

- Image width (width)

- Image height (height)

The implementation looks like this:

```cpp
/* Importing HLS and AP INT */
#include <ap_int.h>
#include <hls_stream.h>

/* Importing the xfOpenCV kernels (xf::Mat is defined in common/xf_common.h) */
#include "common/xf_common.h"
#include "imgproc/xf_erosion.hpp"
#include "imgproc/xf_gaussian_filter.hpp"

/* RR_stream_port and utils::stream::{rraxi2xfMat, xfMat2rraxi} are provided
 * by the V4L2 FPGA project headers (not shown here) */

#define TYPE XF_8UC1          /* 8-bit, single channel */
#define NPC1 XF_NPPC1         /* 1 pixel per clock */
#define HEIGHT_MAX 2048
#define WIDTH_MAX 2048
#define TRANSFER_WIDTH 8
#define FILTER_WIDTH_GAUSS 3
#define FILTER_WIDTH_EROSION 5
#define SIGMA 0.5f            /* Assumed value: not defined in the original listing */
#define EROSION_NITER 1       /* Assumed: a single erosion iteration */

typedef RR_stream_port stream_t;
typedef ap_uint<16> dim_t;

/* 5x5 cross-shaped structuring element */
unsigned char EROSION_KERNEL[FILTER_WIDTH_EROSION * FILTER_WIDTH_EROSION] = {
    0, 0, 1, 0, 0,
    0, 0, 1, 0, 0,
    1, 1, 1, 1, 1,
    0, 0, 1, 0, 0,
    0, 0, 1, 0, 0};

void preprocessor_accel(stream_t& stream_in, stream_t& stream_out, dim_t height,
                        dim_t width) {
  /* Define xf::Mat vars - They are intended to be FIFOs - csim needs these as
   * statics */
  static xf::Mat<TYPE, HEIGHT_MAX, WIDTH_MAX, NPC1> imgInput(height, width);
  static xf::Mat<TYPE, HEIGHT_MAX, WIDTH_MAX, NPC1> imgOutput(height, width);
  static xf::Mat<TYPE, HEIGHT_MAX, WIDTH_MAX, NPC1> fromGauss(height, width);

  /* Internal streams */
#pragma HLS stream variable = imgInput.data dim = 1 depth = 2
#pragma HLS stream variable = imgOutput.data dim = 1 depth = 1
#pragma HLS stream variable = fromGauss.data dim = 1 depth = 1

  /*
   * The basic flow is
   * 1. From AXI Stream to xf::Mat
   * 2. Kernel execution
   * 3. From xf::Mat to AXI Stream
   */
#pragma HLS dataflow
  utils::stream::rraxi2xfMat(stream_in, imgInput, width, height);

  xf::GaussianBlur<FILTER_WIDTH_GAUSS, XF_BORDER_CONSTANT, TYPE, HEIGHT_MAX,
                   WIDTH_MAX, NPC1>(imgInput, fromGauss, SIGMA);

  xf::erode<XF_BORDER_REPLICATE, TYPE, HEIGHT_MAX, WIDTH_MAX, XF_SHAPE_CROSS,
            FILTER_WIDTH_EROSION, FILTER_WIDTH_EROSION, EROSION_NITER, NPC1>(
      fromGauss, imgOutput, EROSION_KERNEL);

  utils::stream::xfMat2rraxi(imgOutput, stream_out, width, height);
}
```

The ISP kernels are instantiated at the bottom of the code above. Two important functions surround them. rraxi2xfMat converts the AXI stream into xf::Mat objects, the xfOpenCV equivalent of OpenCV's cv::Mat, and xfMat2rraxi converts them back.

These functions are already provided in the V4L2 FPGA project. One of the key reasons for using streams instead of a memory-mapped representation is higher throughput. Memory-mapped AXI supports bursts of at most 256 transfers per address request, so large data transfers need many address requests, and each request adds overhead.
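To put the memory-mapped overhead in numbers, the following sketch counts the address requests needed to move a buffer when each AXI4 burst carries at most 256 beats. It assumes aligned, full-length bursts on a data bus that is `bus_bytes` bytes wide. The 1920x1080 frame and 64-bit bus in the example are illustrative choices, and `axi4_bursts_needed` is a hypothetical helper.

```cpp
#include <cassert>
#include <cstdint>

// Number of address requests (bursts) needed to move `bytes` over a
// memory-mapped AXI4 interface with a `bus_bytes`-wide data bus, assuming
// every burst is aligned and uses the maximum length of `max_beats` beats.
std::uint64_t axi4_bursts_needed(std::uint64_t bytes, std::uint64_t bus_bytes,
                                 std::uint64_t max_beats = 256) {
  assert(bus_bytes > 0 && max_beats > 0);
  const std::uint64_t bytes_per_burst = bus_bytes * max_beats;
  return (bytes + bytes_per_burst - 1) / bytes_per_burst;  // ceiling division
}
```

A 1920x1080 8-bit frame over a 64-bit bus needs 1013 bursts, each with its own address phase. An AXI4-Stream transfer has no address channel at all.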

In addition, as a rule for dataflow regions in Vivado HLS (#pragma HLS dataflow), all the stream-related variables are declared static, given their FIFO nature. This lets the synthesizer treat them as accessible beyond the scope of the function, and, as the code comment notes, C simulation needs them as statics too.

The kernels used in this example have OpenCV equivalents (cv::GaussianBlur and cv::erode). For their definitions, see the Xilinx OpenCV Manual [4].
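Because these kernels have OpenCV equivalents, a plain C++ reference model is a convenient way to check the accelerator output during C simulation. The following sketch implements grayscale erosion with an arbitrary KxK structuring element and replicated borders. It mirrors the XF_BORDER_REPLICATE setting and the cross-shaped kernel used above. `erode_ref` is a hypothetical name, and this is not the xfOpenCV implementation.

```cpp
#include <algorithm>
#include <cassert>
#include <cstdint>
#include <vector>

// Software reference for grayscale erosion: each output pixel is the minimum
// of the input pixels covered by the non-zero entries of a KxK structuring
// element. Out-of-range coordinates are clamped (replicated border).
std::vector<std::uint8_t> erode_ref(const std::vector<std::uint8_t>& img,
                                    int width, int height,
                                    const std::vector<std::uint8_t>& kernel,
                                    int k) {
  assert(static_cast<int>(img.size()) == width * height);
  assert(k % 2 == 1 && static_cast<int>(kernel.size()) == k * k);
  const int r = k / 2;
  std::vector<std::uint8_t> out(img.size());
  for (int y = 0; y < height; ++y) {
    for (int x = 0; x < width; ++x) {
      std::uint8_t m = 255;
      for (int ky = 0; ky < k; ++ky) {
        for (int kx = 0; kx < k; ++kx) {
          if (!kernel[ky * k + kx]) continue;  // outside the element's shape
          const int sy = std::max(0, std::min(y + ky - r, height - 1));
          const int sx = std::max(0, std::min(x + kx - r, width - 1));
          m = std::min(m, img[sy * width + sx]);
        }
      }
      out[y * width + x] = m;
    }
  }
  return out;
}
```

With the same 5x5 cross as EROSION_KERNEL, a single dark pixel on a bright background grows into a cross-shaped dark region. This is the expected effect of erosion.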

V4L2 FPGA implementation

After implementing and testing the design in xfOpenCV, it's time to invoke the module from the V4L2 FPGA wrapper. To do so, we copy and paste the basic skeleton provided with the purchase of V4L2 FPGA and modify it according to our needs. Let's focus on the RR_MODULE:

```cpp
/* -- Above, there are the properties declarations for I/O images */

RR_MODULE(output_stream, input_stream) {
  /* Image properties requested by the host computer and parameter
   * verification (format guards, hidden to keep the example simple) */
  uint16_t width = GET_INPUT_WIDTH;
  uint16_t height = GET_INPUT_HEIGHT;

  /* Perform preprocessing: consume input_stream, produce output_stream */
  preprocessor_accel(input_stream, output_stream, height, width);
}
```

This RR_MODULE is basically composed of:

1. Format guards (hidden to keep the example simple)

2. The preprocessor accelerator (declared in a header file that is included in the source code)

With just this basic RR_MODULE block, it is possible to exploit the whole V4L2 FPGA project. Its built-in drivers and communication standards make your application V4L2 compliant and usable by GStreamer and other user-space applications.

Results

The results obtained by this preprocessor algorithm are shown below:

Figure 1. Input image before grayscaling (software side)

Figure 2. Output from OpenCV

Figure 3. Output from FPGA

The FPGA results differ from those of the OpenCV library. The differences come from the logic used in the kernels, which avoid floating-point numbers and use fixed-point arithmetic instead. The Histogram Equalization and the Gaussian Filtering are the stages most affected by this implementation decision.

The differences can be noticed in the following image

Known issues found during this implementation

We found some issues related to the integration of xfOpenCV kernels into the V4L2 FPGA project. We list them below:

1. Numerical representation: because xfOpenCV uses fixed-point numbers instead of floating-point, the final results differ from OpenCV's.

2. New release incompatibilities: V4L2 FPGA is based on Vivado 2018.2. The latest xfOpenCV release is based on Vivado 2019.1, which is not backward compatible. To use the kernels from the library, please use the release for 2018.x.

3. Timing issue in Gaussian Blur: the GaussianBlur implementation in xfOpenCV has known timing issues inside the kernel. We made some fixes to get it working.

4. Kernel incompatibilities: some cores that do not access elements sequentially can be incompatible with AXI Stream, causing the accelerator to hang.
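To illustrate the first issue, the following sketch compares a floating-point filter response with one whose weights are quantized to fixed point. It uses a 1D 3-tap Gaussian, since a 3x3 Gaussian kernel is separable into two such passes. The value sigma = 0.5 and the 8 and 16 fractional-bit formats are illustrative choices and are not xfOpenCV's internal representation. The helper names are hypothetical.

```cpp
#include <cassert>
#include <cmath>
#include <cstdint>

// 3-tap 1D Gaussian weights (center, side), normalized so that they sum to 1.
struct Weights3 { double center; double side; };

Weights3 gaussian3(double sigma) {
  assert(sigma > 0.0);
  const double side = std::exp(-1.0 / (2.0 * sigma * sigma));
  const double sum = 1.0 + 2.0 * side;
  return {1.0 / sum, side / sum};
}

// Floating-point response at one pixel, rounded to the nearest integer.
int blur3_float(int left, int mid, int right, double sigma) {
  const Weights3 w = gaussian3(sigma);
  const double v = w.side * left + w.center * mid + w.side * right;
  return static_cast<int>(std::lround(v));
}

// Same filter with weights quantized to `frac_bits` fractional bits
// (illustrative fixed-point format, not necessarily the one xfOpenCV uses).
int blur3_fixed(int left, int mid, int right, double sigma, int frac_bits) {
  const Weights3 w = gaussian3(sigma);
  const std::int64_t one = std::int64_t{1} << frac_bits;
  const std::int64_t wc = std::llround(w.center * one);
  const std::int64_t ws = std::llround(w.side * one);
  const std::int64_t acc = ws * left + wc * mid + ws * right;
  return static_cast<int>((acc + one / 2) >> frac_bits);  // round half up
}
```

With sigma = 0.5, a pixel of value 255 between two zeros gives 201 in floating point but 200 with 8 fractional bits. The quantized center weight (201/256) is slightly smaller than the exact one, and the three quantized weights sum to 255/256 instead of 1. With 16 fractional bits, the fixed-point result matches the floating-point one.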

References

- [1] Xilinx OpenCV library. Available at: https://github.com/Xilinx/xfopencv/

- [2] Cascade Classifier. OpenCV. Available at: https://docs.opencv.org/3.4/db/d28/tutorial_cascade_classifier.html

- [3] Leon-Vega, L. 2018. Designing an Embedded Traffic Dynamics Meter: A case study. DOI: 10.13140/RG.2.2.17282.43207. Available at: https://www.researchgate.net/publication/337113851_Designing_an_Embedded_Traffic_Dynamics_Meter_A_case_study

- [4] Xilinx OpenCV Manual. Available at: https://www.xilinx.com/support/documentation/sw_manuals/xilinx2018_3/ug1233-xilinx-opencv-user-guide.pdf
