Library Integration for IMU Example Application


This Page is Under Construction...

Introduction

RidgeRun's Video Stabilization Library provides two example applications that serve as in-depth guides on how to use the library. This page explains the expected behavior and operation of these applications. To generate a stabilized video output, each application executes the integration, interpolation, and stabilization algorithms. The main difference is that one example works offline on externally provided sensor data and video footage, while the other works online with live video capture and sensor data acquisition.

Concept: Offline Video Stabilization with Gyroscopic Data

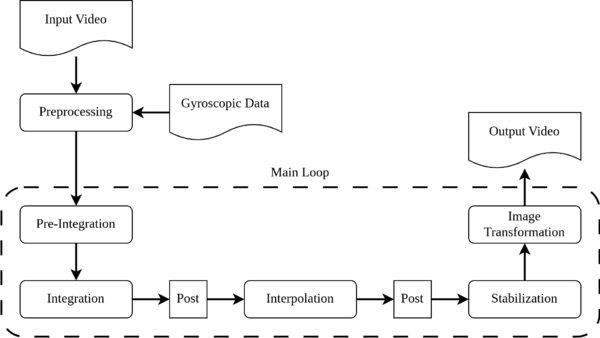

This application consists of two main parts: a preprocessing stage that emulates the inputs available in a real live feed, and the main loop that captures frames and gyroscopic samples, as seen in the diagram above.

Next, a summary of the instances and stages is provided.

Instances

This application uses the following library components:

- An Integrator with Integrator Settings.

- An Interpolator with Interpolator Settings.

- A Stabilizer with Stabilizer parameters.

- A Fish Eye Undistort Instance with Null Parameters.

Preprocessing and Preparation

The input video will be downloaded from a remote link when building the project. At the same time, the gyro data is already included in the artifacts subdirectory for this project as a CSV file. The code will check that it is possible to:

- Open the input video.

- Open the gyro data file.

- Create an "output.mp4" file for the final result.

For this example, the video properties, such as codec and frame rate, are extracted from the input file, although the output resolution is fixed to 640x480.

If the previous steps are successful, the program advances into the main loop, which processes each frame.

Main Operation Loop

This loop emulates how a live video would be processed, taking into account that data is available only from the current and past frames.

Pre-Integration

The number of gyro samples that correspond to each frame is not known in advance. Therefore, the first step is to accumulate samples for the current time interval. If the remaining samples are insufficient to fill the interval, the current frame is treated as the video's last frame.
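The accumulation step can be sketched as follows. This is a minimal Python illustration and not the library's code: the `GyroSample` layout, the `take_interval` helper, and the microsecond units are assumptions made for the example.

```python
from collections import namedtuple

# Hypothetical sample layout: timestamp in microseconds, angular rates in rad/s.
GyroSample = namedtuple("GyroSample", "t_us wx wy wz")

def take_interval(samples, start, t_end_us):
    """Collect samples[start:] whose timestamp is below t_end_us.

    Returns (chunk, next_start, complete). `complete` is False when the
    stream ran out before reaching t_end_us, so the interval was not filled.
    """
    i = start
    while i < len(samples) and samples[i].t_us < t_end_us:
        i += 1
    complete = i < len(samples)  # a sample at or after t_end_us exists
    return samples[start:i], i, complete

# 1 kHz gyro stream covering 50 ms, consumed in 30 fps frame intervals.
samples = [GyroSample(t, 0.0, 0.0, 0.1) for t in range(0, 50_000, 1000)]
chunk, nxt, complete = take_interval(samples, 0, 33_333)
print(len(chunk), complete)
```

When `complete` comes back `False`, a caller following the behavior described above would treat the current frame as the last one.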

Integration

The integrator transforms the samples into their corresponding timestamp-quaternion pair representation in an accumulation buffer.
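To illustrate what integration means here, the sketch below turns angular velocity samples into timestamp-quaternion pairs by composing small rotations. It is a generic textbook scheme written in Python, assuming a constant rate between samples; the function names and the `(w, x, y, z)` layout are hypothetical and do not reflect the library's Integrator API.

```python
import math

def quat_mul(a, b):
    """Hamilton product of quaternions given as (w, x, y, z)."""
    aw, ax, ay, az = a
    bw, bx, by, bz = b
    return (aw*bw - ax*bx - ay*by - az*bz,
            aw*bx + ax*bw + ay*bz - az*by,
            aw*by - ax*bz + ay*bw + az*bx,
            aw*bz + ax*by - ay*bx + az*bw)

def delta_quat(wx, wy, wz, dt):
    """Rotation produced by a constant angular rate (rad/s) held for dt seconds."""
    rate = math.sqrt(wx*wx + wy*wy + wz*wz)
    angle = rate * dt
    if angle < 1e-12:
        return (1.0, 0.0, 0.0, 0.0)
    s = math.sin(angle / 2.0) / rate  # scales the rate vector to sin(angle/2) * axis
    return (math.cos(angle / 2.0), wx*s, wy*s, wz*s)

def integrate(samples, q0=(1.0, 0.0, 0.0, 0.0)):
    """samples: [(t_seconds, wx, wy, wz), ...]. Returns [(t, quaternion), ...]."""
    out, q, prev_t = [], q0, None
    for t, wx, wy, wz in samples:
        if prev_t is not None:
            q = quat_mul(q, delta_quat(wx, wy, wz, t - prev_t))
        out.append((t, q))
        prev_t = t
    return out

# Constant pi/2 rad/s about z for one second, sampled at 100 Hz.
samples = [(k / 100.0, 0.0, 0.0, math.pi / 2) for k in range(101)]
pairs = integrate(samples)
print(pairs[-1])  # final orientation: a quarter turn about z
```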

Post-Integration

After integration, the timestamps are shifted to account for the delay between the frame's capture and the gyro measurement.

Interpolation

The interpolator checks whether the accumulated buffer has enough samples before interpolating. If not, the example returns to the integrator and extends the buffer. Once the interpolation is done, the accumulated buffer is cleared for the next integration.
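A common way to interpolate orientations is spherical linear interpolation (slerp) between the two integrated samples that bracket the frame timestamp. The Python sketch below assumes that approach; the source does not state which method the library's Interpolator uses, and `orientation_at` is a hypothetical helper. Returning `None` stands in for the "not enough samples yet" case described above.

```python
import math
from bisect import bisect_right

def slerp(q0, q1, u):
    """Spherical linear interpolation between unit quaternions (w, x, y, z)."""
    dot = sum(a * b for a, b in zip(q0, q1))
    if dot < 0.0:  # flip one quaternion to follow the shorter arc
        q1 = tuple(-c for c in q1)
        dot = -dot
    if dot > 0.9995:  # nearly identical: normalized linear interpolation
        q = tuple(a + u * (b - a) for a, b in zip(q0, q1))
        n = math.sqrt(sum(c * c for c in q))
        return tuple(c / n for c in q)
    theta = math.acos(dot)
    s0 = math.sin((1.0 - u) * theta) / math.sin(theta)
    s1 = math.sin(u * theta) / math.sin(theta)
    return tuple(s0 * a + s1 * b for a, b in zip(q0, q1))

def orientation_at(buffer, t):
    """buffer: time-sorted [(t, quaternion), ...]. None if t is not covered."""
    if not buffer or t < buffer[0][0] or t > buffer[-1][0]:
        return None  # caller should integrate more samples first
    times = [ts for ts, _ in buffer]
    i = bisect_right(times, t)
    if i == len(buffer):  # t equals the last timestamp
        return buffer[-1][1]
    (t0, q0), (t1, q1) = buffer[i - 1], buffer[i]
    return slerp(q0, q1, (t - t0) / (t1 - t0))

half = math.sqrt(0.5)
buffer = [(0.0, (1.0, 0.0, 0.0, 0.0)), (1.0, (half, 0.0, 0.0, half))]
q = orientation_at(buffer, 0.5)  # halfway through a quarter turn about z
print(q)
```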

Post-Interpolation

An interpolation buffer will take the first sample of the interpolated output, and the previous steps will repeat until there are three samples.

Stabilization

The 3-sized interpolation buffer is used to get a stabilized buffer of the same size. The middle values of the stabilized buffer and the interpolation buffer are used to estimate the correction rotation.
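The sketch below shows one way a correction rotation could be derived from the middle samples of the two buffers. It is written in Python under stated assumptions: the smoothing is a simple exponential filter on quaternions, `alpha` is a hypothetical parameter that need not match the library's smoothing factor, and the multiplication order of the correction is one of several valid conventions. The library's Stabilizer may work differently.

```python
import math

def quat_mul(a, b):
    """Hamilton product of quaternions given as (w, x, y, z)."""
    aw, ax, ay, az = a
    bw, bx, by, bz = b
    return (aw*bw - ax*bx - ay*by - az*bz,
            aw*bx + ax*bw + ay*bz - az*by,
            aw*by - ax*bz + ay*bw + az*bx,
            aw*bz + ax*by - ay*bx + az*bw)

def quat_conj(q):
    """Conjugate, which is the inverse for a unit quaternion."""
    return (q[0], -q[1], -q[2], -q[3])

def slerp(q0, q1, u):
    """Spherical linear interpolation between unit quaternions."""
    dot = sum(a * b for a, b in zip(q0, q1))
    if dot < 0.0:
        q1, dot = tuple(-c for c in q1), -dot
    if dot > 0.9995:  # nearly identical: normalized linear interpolation
        q = tuple(a + u * (b - a) for a, b in zip(q0, q1))
        n = math.sqrt(sum(c * c for c in q))
        return tuple(c / n for c in q)
    theta = math.acos(dot)
    s0 = math.sin((1.0 - u) * theta) / math.sin(theta)
    s1 = math.sin(u * theta) / math.sin(theta)
    return tuple(s0 * a + s1 * b for a, b in zip(q0, q1))

def smooth(measured, alpha):
    """Each output moves a fraction alpha from the previous output toward the new input."""
    out = [measured[0]]
    for q in measured[1:]:
        out.append(slerp(out[-1], q, alpha))
    return out

def correction_rotation(measured, alpha):
    """Rotation that takes the middle measured orientation to its smoothed value."""
    smoothed = smooth(measured, alpha)
    mid = len(measured) // 2
    return quat_mul(smoothed[mid], quat_conj(measured[mid]))

def rot_z(angle):
    return (math.cos(angle / 2), 0.0, 0.0, math.sin(angle / 2))

measured = [rot_z(0.0), rot_z(0.2), rot_z(0.1)]  # a small shake about z
corr = correction_rotation(measured, alpha=0.5)
print(2 * math.atan2(corr[3], corr[0]))  # correction angle about z, in radians
```

In this toy input the middle sample overshoots by 0.2 rad, the smoothed value sits at 0.1 rad, and the correction rotates back by the difference.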

Image Transformation

To correct the image, the input and output frames must be allocated using the image allocator included in the library with the appropriate backend (this example employs OpenCV). An undistort instance then transforms the image using the rotation obtained from the stabilization step. Finally, the frame is written into the output video.

Additional Details

The build system builds this application by default. Make sure to pass -Denable-examples=enabled when configuring the build.

First, download the video and the raw gyro data using:

wget "https://drive.usercontent.google.com/download?id=1uW2sg3E2W2UOF9rjDaHG8eJus7m531_4&export=download&authuser=0&confirm=f" -O test-video-reencoded.mp4

wget "https://drive.usercontent.google.com/download?id=1LXjqut2c8YIiJg66vH_UyYSdP664OErw&export=download&authuser=0&confirm=f" -O raw-gyro-data.csv

To execute, simply run:

BUILDDIR=builddir

./${BUILDDIR}/examples/concept/rvs-complete-offline-concept -f test-video-reencoded.mp4 -g raw-gyro-data.csv -ts 100 -n 500 -b opencv

For more options:

Usage:

rvs-complete-offline-concept [-n NUMBER_OF_FRAMES] [-f INPUT_VIDEO] [-g GYRO_DATA] [-o OUTPUT_VIDEO] [-b BACKEND] [-w WIDTH] [-h HEIGHT] [-s FIELD_OF_VIEW_SCALE] [-l LENS_MODEL] [-c CALIBRATION_FILE.YML] [-z XYZ] [-k0 OFFSET_1] [-k1 OFFSET_1] [-a SMOOTH_FACTOR] [-ts TIMESTEP_SAMPLES] [-us]

Options:

--help: prints this message

-g: raw gyro data in CSV

-f: input video file to stabilise

-o: output video file stabilised. Def: output.mp4

-b: backend. Options: opencv (def), opencl, cuda

-n: number of frames to stabilise

-w: output width (def: 1280)

-h: output height (def: 720)

-s: field of view scale (def: 2.4)

-l: lens model. Options: fisheye (def), brown conrady

-c: calibration file. (def: None)

-z: orientation (def: XYZ)

-ts: timestep samples (def: 1000)

-ff: first frame timestamp in us (def: 0)

-a: smoothing factor (def: 0.209)

-us: use timestamps in us (CSV IMU file)

-k0: IMU offset coefficient 0 (def: -0.02951839)

-k1: IMU offset coefficient 1 (def: 0.04200435)

With these options, it is possible to change the location of the files, select a backend, and modify the output resolution for performance testing. The camera matrix is set by default, and it may not lead to optimal results. Moreover, at higher resolutions, you may need to lower the field of view scale (-s) to zoom in on the image.

If you are looking to compile your application and use our library, you can compile this example using:

RAW_GYRO_FILE=FULL_PATH_TO_RAW_GYRO

VIDEO_FILE=FULL_PATH_TO_VIDEO

g++ -o concept complete-code-example.cpp $(pkg-config rvs --cflags --libs) -DRAW_GYRO_INPUT_FILE=${RAW_GYRO_FILE} -DTEST_VIDEO=${VIDEO_FILE}

Note: Installing the library is a prerequisite.

Concept: Online Video Stabilization with Live Video Capture and Sensor Data Acquisition

This application uses the GStreamer C API directly to provide performant video capture, video writing, and event loop handling. To separate these tasks from the RVS stabilization steps, the application defines two general classes: a GstreamerManager and a StabilizationManager. The following sections describe the processing steps of both classes in more detail.

The GstreamerManager

Here is a diagram that lays out the data flow of the main stages within the GstreamerManager:

The GstreamerManager sets up an input pipeline that captures frames and metadata from the camera and delivers this data to an app sink. The app sink then invokes a callback upon receiving new camera data, which is where the StabilizationManager can work its magic on the camera data to produce a stabilized output. Finally, this stabilized output is pushed onto the app source of an output pipeline, which then saves the output to a file.

The Input Pipeline

In the diagram above, some elements of the input pipeline are omitted in order to focus on the main steps. However, it is important that the input pipeline can use the rrv4l2src element to read camera Start of Frame (SOF) timestamps (more on how to build this element here). The input pipeline can handle both raw and MJPEG input, which it converts to RGBA. Finally, the input pipeline uses a tee to send the data to an app sink and to save the camera capture to an unstabilized output file, an H.264-encoded MP4. This file can be used as a reference to compare against the stabilized output.

OnNewSample Callback

Once new camera data reaches the input pipeline app sink, the app sink emits a new-sample signal, which invokes the OnNewSample callback. The callback receives the GStreamer sample and extracts both the frame buffer and the SOF timestamp from the sample metadata. The GstreamerManager then invokes the StabilizationManager StabilizeFrame method on the frame buffer and SOF timestamp, which results in a stabilized output frame buffer. Finally, the callback packages the output frame buffer into a GStreamer sample and emits the push-sample signal to send this sample to the app source on the output pipeline.

Output Pipeline

This pipeline receives buffers through its app source, sent from the OnNewSample callback. It then saves the buffers to a stabilized output file as an H.264-encoded MP4.

The StabilizationManager

Here is a diagram that illustrates the basic processing stages of the StabilizationManager:

The StabilizationManager runs a thread that focuses on reading from the IMU sensor and pushing the sensor payloads to a queue. In parallel, when the GstreamerManager receives a new frame from the camera, it invokes the StabilizeFrame method of the StabilizationManager. Here is where the RVS library components are used to produce a stabilized output frame.

Sensor Thread

This thread uses an RVS ISensor instance to extract sensor payloads containing gyroscope, accelerometer, and timestamp data. These payloads are pushed to a queue, and the sensor thread then sleeps in order to regulate the sensor sampling rate. If the queue reaches a maximum size, old payloads are dropped. The main loop reads this queue when executing the StabilizeFrame stage and then clears it. The sensor thread is expected to run at a higher frequency than the frame stabilization rate, meaning that for each frame iteration, there should be multiple sensor payloads in the queue.
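The producer/consumer pattern described above can be sketched in Python as follows. `SensorQueue`, `sensor_loop`, and `read_sample` are hypothetical names for illustration only; the real application is built on the RVS ISensor interface, whose API is not shown here.

```python
import threading
import time
from collections import deque

class SensorQueue:
    """Bounded, thread-safe queue that drops the oldest payload when full."""

    def __init__(self, max_size):
        # A deque with maxlen discards the oldest item on overflow.
        self._items = deque(maxlen=max_size)
        self._lock = threading.Lock()

    def push(self, payload):
        with self._lock:
            self._items.append(payload)

    def drain(self):
        """Return every queued payload and leave the queue empty."""
        with self._lock:
            items = list(self._items)
            self._items.clear()
        return items

def sensor_loop(read_sample, queue, period_s, stop_event):
    """Poll read_sample() roughly every period_s seconds until stopped."""
    while not stop_event.is_set():
        queue.push(read_sample())
        time.sleep(period_s)  # regulates the sampling rate

# Overflow behavior: only the three newest payloads survive.
q = SensorQueue(max_size=3)
for i in range(5):
    q.push(i)
print(q.drain())  # [2, 3, 4]
```

In a frame callback, a single `drain()` call would hand over all payloads collected since the previous frame, which matches the read-then-clear behavior described above.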

Stabilize Frame

The StabilizeFrame stage runs the main RVS steps, which are explained in detail here. To quickly summarize, the first step in stabilizing the image is to determine the camera orientation at the time the capture was taken. The IMU gyroscope data is the main way of determining camera orientations, but this data comes in the form of angular velocities. The Integrate step obtains concrete orientations from angular velocity data. The sensor data will probably not synchronize precisely with the moment the camera capture is taken, so the Interpolate step determines the likely camera orientation at capture time from the integrated sensor data; this is where the SOF timestamp is used. Once the orientation of the camera at capture time is determined, the Stabilize step finds the rotation needed to correct the input image movement. Finally, the Undistort step applies corrections to the input image based on the stabilization rotation and the camera calibration matrices in order to produce a stabilized output.

Additional Details

Similar to the offline example application, the build system builds this application by default. Make sure to pass -Denable-examples=enabled when configuring the build. Additionally, this example requires OpenCV, CUDA, and GStreamer support, which are enabled at configuration time with -Denable-opencv=enabled -Denable-cuda=enabled -Denable-gstreamer=enabled.

Here is an example of the usage with every option specified:

rvs-complete-online-concept --help --width 1920 --height 1080 --framerate 30/1 --sensor-name Icm42600 --sensor-device icm42688 --sensor-frequency 200 --sensor-orientation xYz --smoothing-constant 0.3 --field-of-view 1.2 --calibration-file calibration.yml --backend CUDA --unstabilized-output unstabilized_output.mp4 --stabilized-output stabilized_output.mp4 --video-format MJPG --video-device /dev/video0

Here is the complete usage message:

Usage:

rvs-complete-online-concept [--width WIDTH] [--height HEIGHT] [--capture-width CAPT_WIDTH] [--capture-height CAPT_HEIGHT] [--framerate FRAMERATE] [--sensor-name SENSOR_NAME] [--sensor-device SENSOR_DEVICE] [--sensor-frequency SENSOR_FREQUENCY] [--sensor-orientation SENSOR_ORIENTATION] [--smoothing-constant SMOOTHING_CONSTANT] [--field-of-view FIELD_OF_VIEW] [--calibration-file CALIBRATION_FILE] [--backend BACKEND] [--unstabilized-output OUTPUT] [--stabilized-output STABILIZED_OUTPUT] [--video-format VIDEO_FORMAT] [--video-device VIDEO_DEVICE] [--disable-stabilizer] [--enable-log-data] [--frame-time-offset OFFSET_US]

Options:

--help: prints this message

--width: output video width

default: 1920

--height: output video height

default: 1080

--capture-width: input video width

default: 1920

--capture-height: input video height

default: 1080

--framerate: input/output video framerate

default: 30/1

--sensor-name: sensor name to use for IMU data

default: Bmi160

--sensor-device: sensor device to use for IMU data

default: /dev/i2c-7

--sensor-frequency: sensor sampling frequency

default: 300

--sensor-orientation: IMU to camera orientation

default: xzy

--smoothing-constant: stabilization smoothing constant

default: 0.2

--field-of-view: undistort field of view

default: 1.5

--calibration-file: file with camera lens calibration

default: calibration.yml

--backend: undistortion algorithm backend

default: CUDA

--unstabilized-output: path to unstabilized output

default: unstabilized_output.mp4

--stabilized-output: path to stabilized output

default: stabilized_output.mp4

--video-format: format for capture ('MJPG' or 'RAW')

default: MJPG

--video-device: device for capture

default: /dev/video0

--frame-time-offset: offset SoB - SoF in microsecs

default: 0 us

--enable-log-data: saves the timestamps and IMU data

default: disabled.

Saves into imu_log.csv and frame_log.csv

--disable-stabilizer: enables the stabilization output

default: enabled

Setting --video-format to raw, or to anything other than MJPG, makes the online concept use nvarguscamerasrc. This is important when using a camera over MIPI CSI-2 on NVIDIA Jetson. You also need to set --video-device to a value such as 0 instead of /dev/video0, since nvarguscamerasrc uses this value as its sensor-id. For example:

rvs-complete-online-concept --help --width 1920 --height 1080 --framerate 30/1 --sensor-name Icm42600 --sensor-device icm42688 --sensor-frequency 200 --sensor-orientation xYz --smoothing-constant 0.3 --field-of-view 1.2 --calibration-file calibration.yml --backend CUDA --unstabilized-output unstabilized_output.mp4 --stabilized-output stabilized_output.mp4 --video-format raw --video-device 0

The application also accepts --frame-time-offset, which is the offset between the video capture and the IMU data, given in microseconds. The calibration process is given here.