Library Integration for IMU - Preparing the Video


Relevance of Timestamps

The RidgeRun Video Stabilization Library requires both the video frames and the IMU samples to carry timestamps referenced to the same clock. We recommend taking these timestamps from a monotonic clock that NTP cannot modify. On Linux systems, one way to meet these conditions is to read CLOCK_MONOTONIC_RAW with the clock_gettime function.

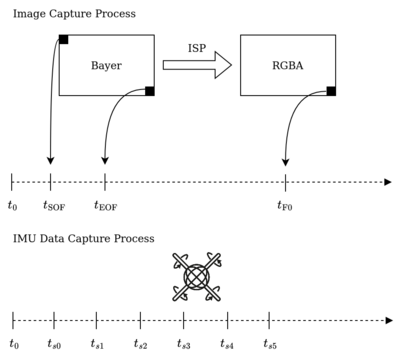

However, the timestamps should refer to the Start of Frame (SOF) and End of Frame (EOF), not to the final time at which the frame is delivered. During image capture, the interval between SOF and EOF is when the sensor actually captures the scene. The final time comes later and does not represent the scene as it was in the real world. The SOF and EOF times can be obtained from the video subsystem through the Video for Linux 2 (V4L2) framework.
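As a minimal sketch of the V4L2 side, the helpers below (hypothetical names, not part of the library) take a buffer dequeued with VIDIOC_DQBUF, convert its timestamp to microseconds, and decode which instant it refers to. A standard V4L2 buffer carries a single timestamp, and the V4L2_BUF_FLAG_TSTAMP_SRC_* flags tell whether it was taken at the end of frame or at the start of exposure, so usually only one of the two times is available per buffer. Also note that timestamps flagged V4L2_BUF_FLAG_TIMESTAMP_MONOTONIC come from CLOCK_MONOTONIC, not CLOCK_MONOTONIC_RAW; if the IMU samples use CLOCK_MONOTONIC_RAW, verify that both sides really share the same clock.

```cpp
#include <sys/time.h>         // struct timeval used by v4l2_buffer
#include <linux/videodev2.h>  // struct v4l2_buffer, V4L2_BUF_FLAG_* (Linux only)
#include <cstdint>

// Which capture instant the driver timestamp refers to.
enum class TimestampSource { kEndOfFrame, kStartOfExposure, kUnknown };

// Converts the driver timestamp of a dequeued buffer to microseconds.
uint64_t BufferTimestampUs(const v4l2_buffer &buf) {
  return static_cast<uint64_t>(buf.timestamp.tv_sec) * 1000000 +
         static_cast<uint64_t>(buf.timestamp.tv_usec);
}

// Decodes the timestamp source bits of the buffer flags.
TimestampSource BufferTimestampSource(const v4l2_buffer &buf) {
  switch (buf.flags & V4L2_BUF_FLAG_TSTAMP_SRC_MASK) {
    case V4L2_BUF_FLAG_TSTAMP_SRC_EOF:
      return TimestampSource::kEndOfFrame;
    case V4L2_BUF_FLAG_TSTAMP_SRC_SOE:
      return TimestampSource::kStartOfExposure;
    default:
      return TimestampSource::kUnknown;
  }
}

// Returns true when the driver stamps buffers with CLOCK_MONOTONIC.
bool IsMonotonic(const v4l2_buffer &buf) {
  return (buf.flags & V4L2_BUF_FLAG_TIMESTAMP_MASK) ==
         V4L2_BUF_FLAG_TIMESTAMP_MONOTONIC;
}
```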

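If both times are available, it is recommended to use the middle of the capture interval. That is, the timestamp assigned to the i-th image frame is computed from its SOF time $t_{\text{SOF},i}$ and its EOF time $t_{\text{EOF},i}$ as

$$t_{\text{frame},i} = \frac{t_{\text{SOF},i} + t_{\text{EOF},i}}{2}$$

If only one of the two times is available, using it directly is still a good approximation.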
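The midpoint rule and its fallback can be sketched in C++ as follows. This is a minimal illustration, assuming both times are already expressed in microseconds of the same clock; FrameTimestampUs is a hypothetical helper, not part of the library API.

```cpp
#include <cstdint>
#include <optional>

// Computes the timestamp assigned to a frame from its SOF and EOF times (us).
// Uses the midpoint when both are known, otherwise whichever one exists.
std::optional<uint64_t> FrameTimestampUs(std::optional<uint64_t> sof_us,
                                         std::optional<uint64_t> eof_us) {
  if (sof_us && eof_us) {
    // sof + (eof - sof) / 2 avoids overflowing sof + eof; assumes eof >= sof.
    return *sof_us + (*eof_us - *sof_us) / 2;
  }
  if (sof_us) return sof_us;
  if (eof_us) return eof_us;
  return std::nullopt;  // Neither time is available.
}
```

Returning std::optional makes the "no timestamp at all" case explicit instead of encoding it as a magic value such as 0.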

How Can I Get the Proper Timestamp?

You can use the following snippet as a reference:

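```cpp
// Header:
#include <time.h>   // clock_gettime, CLOCK_MONOTONIC_RAW
#include <cstdint>  // uint64_t

// Code:
::timespec time;
// Gets the time from the raw monotonic clock and converts it to microseconds (us).
// The casts avoid overflow where time_t is 32 bits wide.
::clock_gettime(CLOCK_MONOTONIC_RAW, &time);
uint64_t timestamp = static_cast<uint64_t>(time.tv_sec) * 1000000 +
                     static_cast<uint64_t>(time.tv_nsec) / 1000;
```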

Why Are Timestamps So Important?

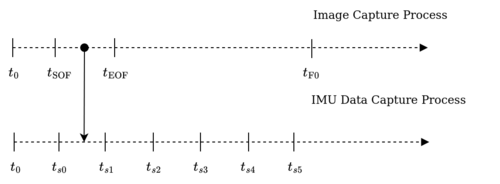

This is because the IMU data is also sampled, that is, discretized in time. Between two consecutive samples, the measured angle can jump from one value to another. For example, assume a first sample with angle $\theta_i = 45^\circ$ and timestamp $t_i$, a second sample with angle $\theta_{i+1} = 55^\circ$ and timestamp $t_{i+1}$, and a frame timestamp $t_{\text{frame},j} \in [t_i, t_{i+1}]$. The frame's angular position is then neither 45 nor 55 degrees, but a value within that range. Therefore, we need to interpolate between the two samples, taking the frame timestamp as the point of interest, to determine the actual angle of the frame.

The figure above illustrates this detail.
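The interpolation step can be sketched as plain linear interpolation between the two IMU samples that bracket the frame timestamp: $\theta = \theta_i + \alpha(\theta_{i+1} - \theta_i)$ with $\alpha = (t_{\text{frame},j} - t_i)/(t_{i+1} - t_i)$. This is an illustrative assumption, not necessarily the scheme the library uses internally (orientation is often interpolated with quaternions instead); AngleSample and InterpolateAngle are hypothetical names.

```cpp
#include <cstdint>
#include <stdexcept>

// Hypothetical IMU sample: an angle in degrees and its timestamp in us.
struct AngleSample {
  double angle_deg;
  uint64_t timestamp_us;
};

// Linearly interpolates the angle at frame_us. Requires a.timestamp_us <
// b.timestamp_us and frame_us inside [a.timestamp_us, b.timestamp_us].
double InterpolateAngle(const AngleSample &a, const AngleSample &b,
                        uint64_t frame_us) {
  if (b.timestamp_us <= a.timestamp_us || frame_us < a.timestamp_us ||
      frame_us > b.timestamp_us) {
    throw std::invalid_argument("frame timestamp outside the sample interval");
  }
  // Fraction of the sample interval elapsed at the frame timestamp, in [0, 1].
  const double alpha = static_cast<double>(frame_us - a.timestamp_us) /
                       static_cast<double>(b.timestamp_us - a.timestamp_us);
  return a.angle_deg + alpha * (b.angle_deg - a.angle_deg);
}
```

For instance, with made-up samples of 45° at 1000 us and 55° at 2000 us, a frame stamped at 1250 us gets an angle of 47.5°.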

Constructing the Image instance

Once the timestamp and the RGBA image buffer are ready, you can encapsulate both in the Image wrapper class provided by the RidgeRun Video Stabilization Library. The following example illustrates how to wrap an image frame:

```cpp
#include <cstdint>
#include <memory>
// Also include the RidgeRun Video Stabilization Library headers that declare
// Image, IImage, RGBA and HostAllocator.

// Assumption: the image and the timestamp are already filled.
// The following variables are just illustrative.
// The timestamp is the midpoint between SOF and EOF from the driver.
uint8_t *image_buffer;
uint64_t image_timestamp;

int width = 1920;
int height = 1080;
int pitch = 1920 * 4;  // Bytes per row: 4 bytes per RGBA pixel

// We assume that the image is in RGBA and uses the HostAllocator
using Allocator = HostAllocator;
using ImageFormat = RGBA<uint8_t>;
using ImageType = Image<ImageFormat, Allocator>;

// The constructor is called directly because std::make_shared cannot
// forward a braced list such as {pitch}.
std::shared_ptr<IImage> image(
    new ImageType(width, height, {pitch}, image_buffer, image_timestamp));

// From now on, you can use the image variable.
```
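The pitch is the number of bytes from the start of one row to the start of the next. For a tightly packed RGBA frame it is simply width × 4, as in the example, but some capture drivers pad each row to an alignment boundary, so the pitch reported by the driver can be larger. A small helper for that case (hypothetical, not part of the library) could look like this:

```cpp
#include <cstddef>

// Returns the row pitch in bytes of a packed image whose rows are padded to a
// multiple of `alignment` bytes. Use alignment = 1 for no padding (must be >= 1).
std::size_t RowPitchBytes(std::size_t width, std::size_t bytes_per_pixel,
                          std::size_t alignment) {
  const std::size_t row_bytes = width * bytes_per_pixel;
  // Round row_bytes up to the next multiple of alignment.
  return (row_bytes + alignment - 1) / alignment * alignment;
}
```

For example, a 1918-pixel-wide RGBA row padded to 64 bytes has a pitch of 7680 bytes rather than 7672.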
