RidgeRun Linux Camera Drivers - Examples - i.MX8

i.MX8 Capture SubSystem

The i.MX 8 series of application processors is a scalable multicore platform offering single, dual, and quad-core options based on the Arm® Cortex® architecture. The i.MX 8M Plus family by NXP is designed specifically for machine learning applications, combining advanced multimedia features with high-performance processing at low power consumption.

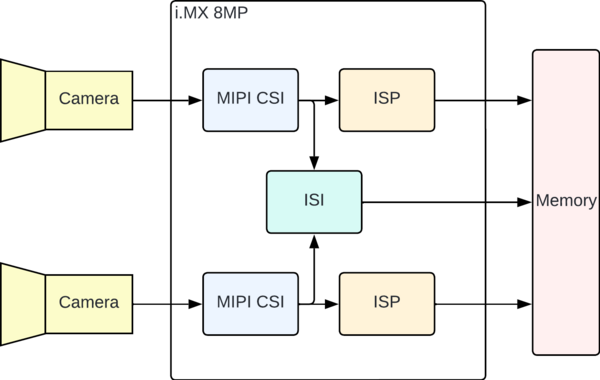

The i.MX 8M Plus Applications Processor features a robust core complex with a quad-core Cortex-A53 cluster, a Cortex-M7 coprocessor, an audio digital signal processor, and accelerators for machine learning and graphics. The figure shows the main components of the capture subsystem for the i.MX8MP SoC; other versions may have different components or a different layout.

- MIPI CSI

The MIPI Camera Serial Interface (MIPI_CSI2) is the camera interface for this chip. It works with the MIPI DPHY module to connect to the host processor. MIPI_CSI2 supports RAW, YUV, and RGB image formats when its output is routed to the ISI or the ISP.

- ISP

The Image Signal Processor (ISP) receives an image from the camera sensor and converts it from raw Bayer to YUV so it can be processed by the chip. The ISP also provides additional processing to improve the image quality.

- ISI

The Image Sensor Interface (ISI) module interfaces with up to two pixel link sources to obtain the image data for processing in its pipeline channels. Each pipeline processes the image lines from a configured source and performs one or more functions that are configured by software.

This module is designed to interface with camera sensors and process image data. It can handle tasks like:

- Downscaling

- Color Space Conversion

- Alpha channel insertion for RGB formats

- Mirroring (horizontal and vertical flips)

- Frame awareness and frame skipping

- Flow control

OpenCV Examples

The following examples show basic Python code that uses OpenCV to capture from either a USB camera or a MIPI CSI camera. To use the code, make sure OpenCV is installed on your i.MX8 board. Use this command to install it:

sudo apt-get install python3-opencv

After the installation completes, run the script (camera_capture.py contains the code shown below):

python3 camera_capture.py
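The MIPI CSI example later in this section passes GStreamer pipelines to OpenCV, so it can help to confirm that the installed OpenCV build includes GStreamer support before running it. The following is a minimal sketch of such a check; the helper name has_gstreamer_support is hypothetical, and the sketch assumes the build summary returned by cv2.getBuildInformation() contains a line of the form "GStreamer: YES (version)" or "GStreamer: NO".

```python
import re


def has_gstreamer_support(build_info: str) -> bool:
    """Return True if the OpenCV build summary reports GStreamer as enabled."""
    # Assumption: the summary has a line such as "GStreamer:   YES (1.20.3)".
    match = re.search(r"^\s*GStreamer:\s*(\S+)", build_info, re.MULTILINE)
    return bool(match) and match.group(1).upper().startswith("YES")


if __name__ == "__main__":
    try:
        import cv2
    except ImportError:
        print("OpenCV (cv2) is not installed.")
    else:
        print("OpenCV version:", cv2.__version__)
        print("GStreamer support:", has_gstreamer_support(cv2.getBuildInformation()))
```

If the check reports no GStreamer support, the USB camera example may still work, but the GStreamer-based capture and encoding pipelines are unlikely to open.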

USB camera

The following Python code shows a basic example using OpenCV to capture from a USB camera:

import sys

import cv2

CAMERA_INDEX = 0          # index of the USB camera to open
TOTAL_NUM_FRAMES = 100    # number of frames to record
FRAMERATE = 30.0          # frame rate written to the output file


def is_camera_available(camera_index=0):
    """Return True if the camera at camera_index can be opened."""
    cap = cv2.VideoCapture(camera_index)
    if not cap.isOpened():
        return False
    cap.release()
    return True


def main():
    if is_camera_available(CAMERA_INDEX):
        print(f"Camera {CAMERA_INDEX} is available.")
    else:
        print(f"Camera {CAMERA_INDEX} is not available.")
        sys.exit(1)

    cap = cv2.VideoCapture(CAMERA_INDEX)
    if not cap.isOpened():
        print("Error: Could not open video stream.")
        sys.exit(1)
    print("Camera opened successfully.")

    # Use the resolution reported by the camera for the output file
    frame_width = int(cap.get(cv2.CAP_PROP_FRAME_WIDTH))
    frame_height = int(cap.get(cv2.CAP_PROP_FRAME_HEIGHT))

    fourcc = cv2.VideoWriter_fourcc(*'mp4v')
    out = cv2.VideoWriter('output.mp4', fourcc, FRAMERATE, (frame_width, frame_height))

    num_frames = 0
    while True:
        ret, frame = cap.read()
        if not ret:
            print("Error: Failed to capture image.")
            break

        out.write(frame)
        num_frames += 1  # count the frame just written
        print(f"Recording: {num_frames} / {TOTAL_NUM_FRAMES}", end='\r')

        if num_frames >= TOTAL_NUM_FRAMES:
            print("\nCapture duration reached, stopping recording.")
            break

    # Release the camera and the VideoWriter
    cap.release()
    out.release()


if __name__ == '__main__':
    main()
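The USB example opens the camera by index, so it is useful to know which video nodes exist on the board before choosing CAMERA_INDEX. The sketch below lists them with the standard library only. The helper name list_video_devices is hypothetical, and the sketch assumes that the OpenCV index N corresponds to /dev/videoN, which is typically the case with the V4L2 backend. Some of the listed nodes may not be capture devices.

```python
import glob
import os
import re


def list_video_devices(dev_dir="/dev"):
    """Return (index, path) pairs for every video<N> node, sorted by index."""
    devices = []
    for path in glob.glob(os.path.join(dev_dir, "video*")):
        # Keep only names of the exact form video<N>
        match = re.fullmatch(r"video(\d+)", os.path.basename(path))
        if match:
            devices.append((int(match.group(1)), path))
    return sorted(devices)


if __name__ == "__main__":
    for index, path in list_video_devices():
        print(f"index {index}: {path}")
```

Each candidate index can then be passed to is_camera_available() from the example above to confirm that OpenCV can actually open it.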

MIPI CSI camera

The following Python code shows a basic example using OpenCV to capture from a MIPI CSI camera:

import sys

import cv2

TOTAL_NUM_FRAMES = 100    # number of frames to record
FRAMERATE = 30.0          # frame rate passed to the VideoWriter

# GStreamer capture pipeline: 1080p@30 from the MIPI CSI camera into OpenCV
PIPELINE = "v4l2src device=/dev/video3 ! video/x-raw,framerate=30/1,width=1920,height=1080,format=BGRx ! videoconvert ! appsink"


def is_camera_available():
    """Return True if the capture pipeline can be opened."""
    cap = cv2.VideoCapture(PIPELINE, cv2.CAP_GSTREAMER)
    if not cap.isOpened():
        return False
    cap.release()
    return True


def main():
    if is_camera_available():
        print("A camera is available.")
    else:
        print("No camera available.")
        sys.exit(1)

    cap = cv2.VideoCapture(PIPELINE, cv2.CAP_GSTREAMER)
    if not cap.isOpened():
        print("Error: Could not open video stream.")
        sys.exit(1)
    print("Camera opened successfully.")

    frame_width = int(cap.get(cv2.CAP_PROP_FRAME_WIDTH))
    frame_height = int(cap.get(cv2.CAP_PROP_FRAME_HEIGHT))

    # GStreamer encoding pipeline: H.264 encode and write to an MP4 file
    encoder = "appsrc ! videoconvert ! video/x-raw,format=I420,width=1920,height=1080,framerate=30/1 ! v4l2h264enc ! h264parse ! qtmux ! filesink location=output.mp4"
    out = cv2.VideoWriter(encoder, cv2.CAP_GSTREAMER, 0, FRAMERATE, (frame_width, frame_height), True)

    num_frames = 0
    while True:
        ret, frame = cap.read()
        if not ret:
            print("Error: Failed to capture image.")
            break

        out.write(frame)
        num_frames += 1  # count the frame just written
        print(f"Recording: {num_frames} / {TOTAL_NUM_FRAMES}", end='\r')

        if num_frames >= TOTAL_NUM_FRAMES:
            print("\nCapture duration reached, stopping recording.")
            break

    # Release the camera and the VideoWriter
    cap.release()
    out.release()


if __name__ == '__main__':
    main()
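The capture and encoding pipelines in the example above hardcode the device, resolution, and frame rate in two separate strings, which must be kept in sync by hand. As a sketch of one way to avoid that, the hypothetical helpers below build both strings from the same parameters. The function names and default values are assumptions chosen to reproduce the strings used in the example; they are not part of OpenCV or GStreamer.

```python
def build_capture_pipeline(device="/dev/video3", width=1920, height=1080,
                           framerate=30, fmt="BGRx"):
    """Build the v4l2src capture pipeline string consumed by cv2.VideoCapture."""
    caps = (f"video/x-raw,framerate={framerate}/1,"
            f"width={width},height={height},format={fmt}")
    return f"v4l2src device={device} ! {caps} ! videoconvert ! appsink"


def build_encoder_pipeline(location="output.mp4", width=1920, height=1080,
                           framerate=30):
    """Build the appsrc H.264 encoding pipeline string for cv2.VideoWriter."""
    caps = (f"video/x-raw,format=I420,width={width},height={height},"
            f"framerate={framerate}/1")
    return (f"appsrc ! videoconvert ! {caps} ! v4l2h264enc ! h264parse ! "
            f"qtmux ! filesink location={location}")


if __name__ == "__main__":
    print(build_capture_pipeline())
    print(build_encoder_pipeline())
```

With the default arguments, the helpers return the same PIPELINE and encoder strings as the example, so they can replace the two constants directly. Whether another resolution or frame rate works depends on what the sensor driver supports.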

GStreamer Examples

The following GStreamer pipelines demonstrate how to capture, display, and encode video from a MIPI CSI camera:

1080p@30 capture and display

gst-launch-1.0 v4l2src device=/dev/video3 ! video/x-raw,width=1920,height=1080,framerate=30/1 ! queue ! videoconvert ! autovideosink

1080p@30 capture and h264 encoding

FILE=filename.mp4
gst-launch-1.0 v4l2src device=/dev/video3 ! video/x-raw,width=1920,height=1080,framerate=30/1 ! queue ! v4l2h264enc ! h264parse ! qtmux ! filesink location=$FILE -e

1080p@30 capture and h265 encoding

FILE=filename.mp4
gst-launch-1.0 v4l2src device=/dev/video3 ! video/x-raw,width=1920,height=1080,framerate=30/1 ! queue ! v4l2h265enc ! h265parse ! qtmux ! filesink location=$FILE -e
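The two encoding pipelines above differ only in the encoder and parser elements. When recordings are launched from a script, the command can be assembled from those parts. The following sketch builds the gst-launch-1.0 argument list in Python; build_record_command and the ENCODERS table are hypothetical names, and the element names are taken from the pipelines above.

```python
import shlex

# Encoder and parser element per codec, as used in the pipelines above
ENCODERS = {
    "h264": ("v4l2h264enc", "h264parse"),
    "h265": ("v4l2h265enc", "h265parse"),
}


def build_record_command(filename, codec="h264", device="/dev/video3",
                         width=1920, height=1080, framerate=30):
    """Return the gst-launch-1.0 argument list for a capture-and-encode run."""
    if codec not in ENCODERS:
        raise ValueError(f"unsupported codec: {codec}")
    encoder, parser = ENCODERS[codec]
    caps = f"video/x-raw,width={width},height={height},framerate={framerate}/1"
    elements = [
        ["v4l2src", f"device={device}"],
        [caps],
        ["queue"],
        [encoder],
        [parser],
        ["qtmux"],
        ["filesink", f"location={filename}"],
    ]
    # -e sends EOS on interrupt so that the MP4 file is finalized
    cmd = ["gst-launch-1.0", "-e"]
    for i, element in enumerate(elements):
        if i:
            cmd.append("!")
        cmd.extend(element)
    return cmd


if __name__ == "__main__":
    # Only print the command here; nothing is executed
    print(shlex.join(build_record_command("filename.mp4", codec="h265")))
```

On the board, the returned list can be passed to subprocess.run(cmd, check=True). Because the arguments are passed as a list rather than through a shell, the "!" separators need no quoting.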

RidgeRun Product Use Cases

RidgeRun provides a suite of tools to enhance your multimedia projects using GStreamer. Below are some practical examples showcasing the capabilities of GstRtspSink and GstSEIMetadata. These pipelines stream video with embedded metadata over RTSP and give an overview of how you can use these tools in your applications. To obtain these or any other product evaluations free of charge, please feel free to contact us.

Use Case Examples

GstRtspSink

- Surveillance Systems: This product can be used to stream video to multiple monitoring stations simultaneously.

- Live Broadcasting: For live events like sports, concerts, or webinars, GstRtspSink can stream the live video feed to multiple viewers.

- Remote Monitoring in Industrial Applications: GstRtspSink enables streaming of video feeds from cameras monitoring the production line or remote sites.

- Video Conferencing: In video conferencing systems, GstRtspSink can be used to stream the video feed from one participant to others.

- Smart City Infrastructure: GstRtspSink can stream video feeds from cameras to control centers, allowing operators to monitor multiple locations effectively.

GstSEIMetadata

- Drone Surveillance: The GstSEIMetadata element allows embedding the location data directly into the H264/H265 video frames.

- Sports Broadcasting: In live sports broadcasts, data such as player statistics, game scores, or timing information can be embedded directly into the video stream using GstSEIMetadata.

- Medical Imaging: The GstSEIMetadata element enables embedding data such as patient ID or procedure details into the video, ensuring that it stays synchronized with the visual information.

- Augmented Reality (AR) Applications: In AR scenarios, additional information like object coordinates, scene descriptions, or environmental data can be embedded into the video stream using GstSEIMetadata.

- Video Analytics: In security or retail environments where video analytics are performed, the GstSEIMetadata element can be used to inject data such as object detection results or motion tracking information into the video stream.

To install the evaluation version, follow this section to install GstRtspSink. To install GstSEIMetadata, follow the same steps with the corresponding .tar file. The following pipeline streams video from a V4L2-compliant camera with embedded SEI metadata over RTSP:

gst-launch-1.0 v4l2src device=/dev/video3 ! video/x-raw,width=1920,height=1080,framerate=15/1,format=YUY2 ! queue ! v4l2h264enc ! queue ! video/x-h264,mapping=/stream1 ! seimetatimestamp ! seiinject ! queue ! rtspsink service=8000

To receive the stream and display the video, use the following pipeline:

gst-launch-1.0 rtspsrc location=rtsp://127.0.0.1:8000/stream1 ! queue ! rtph264depay ! video/x-h264 ! h264parse ! queue max-size-buffers=1 ! h264parse ! v4l2h264dec ! autovideosink

To extract and analyze the embedded SEI metadata from the RTSP stream, use the following pipeline:

GST_DEBUG=*seiextract*:MEMDUMP gst-launch-1.0 rtspsrc location=rtsp://127.0.0.1:8000/stream1 ! queue ! rtph264depay ! video/x-h264 ! h264parse ! seiextract ! fakesink

You should see output messages displaying the transmitted metadata. An example of these messages is shown below:

0:00:04.231346302 2176 0xaaaaf60b8180 MEMDUMP seiextract gstseiextract.c:299:gst_sei_extract_extract_h264_data:<seiextract0> ---------------------------------------------------------------------------
0:00:04.231394805 2176 0xaaaaf60b8180 MEMDUMP seiextract gstseiextract.c:299:gst_sei_extract_extract_h264_data:<seiextract0> The extracted data is:
0:00:04.231430557 2176 0xaaaaf60b8180 MEMDUMP seiextract gstseiextract.c:299:gst_sei_extract_extract_h264_data:<seiextract0> 00000000: 00 67 6e 58 10 02 00 00 .gnX....
0:00:04.231459059 2176 0xaaaaf60b8180 MEMDUMP seiextract gstseiextract.c:299:gst_sei_extract_extract_h264_data:<seiextract0> ---------------------------------------------------------------------------
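To process the metadata in a script rather than read it from the console, the hex dump lines of the debug output can be converted back into raw bytes. The sketch below does this with a regular expression. The helper name extract_payload is hypothetical, and the pattern assumes the dump layout shown in the example above (an 8-digit offset, a colon, then space-separated hex bytes). It does not interpret the payload, and it may misread a line whose ASCII column happens to start with two hex characters followed by a space.

```python
import re

# An 8-digit hex offset, a colon, then space-separated two-digit hex bytes
HEX_LINE = re.compile(r"\b[0-9a-fA-F]{8}:\s+((?:[0-9a-fA-F]{2}\s)+)")


def extract_payload(log_text):
    """Collect the bytes from every hex dump line found in log_text."""
    payload = bytearray()
    for match in HEX_LINE.finditer(log_text):
        # bytes.fromhex skips the whitespace between byte pairs
        payload.extend(bytes.fromhex(match.group(1)))
    return bytes(payload)


if __name__ == "__main__":
    sample = ("0:00:04.231430557 2176 0xaaaaf60b8180 MEMDUMP seiextract "
              "gstseiextract.c:299:gst_sei_extract_extract_h264_data:"
              "<seiextract0> 00000000: 00 67 6e 58 10 02 00 00 .gnX....")
    print(extract_payload(sample).hex(" "))  # prints: 00 67 6e 58 10 02 00 00
```

The debug log can be saved to a file with the GST_DEBUG_FILE environment variable and then passed to extract_payload() as text.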
