GstWebRTC Fundamentals: WebRTC Development Guide


This page gives an overview of the fundamental concepts of WebRTC.

What is WebRTC?

WebRTC is a real-time communication project started by Google in 2011. It defines a series of protocols and APIs to enable peer-to-peer data streaming. WebRTC stands for Web Real-Time Communication, and its specification is still ongoing work, developed as a cooperative effort between the W3C, which defines the APIs, and the IETF, which standardizes the protocols. Developers of the main internet browsers have been working to integrate WebRTC natively. More recently, with the spread of IoT, several third-party solutions have been released to bring WebRTC streaming to devices other than PC browsers, such as embedded devices. For example, a user could stream video from a mobile phone camera directly to a smart TV on the other side of the world using WebRTC.

WebRTC Use Cases

There are many existing streaming solutions for communicating data over the network, yet WebRTC continues to gain popularity. This may be because the WebRTC architecture was engineered from the start to overcome the main limitations of existing protocols. Its most notable features are:

- NAT Traversal: Embedded in the WebRTC protocol are the mechanisms that allow two computers to communicate, regardless of the network topologies they are connected to.

- Security: Unlike other protocols, encryption is mandatory in the WebRTC standard. The data transmitted between the peers is secured using DTLS and transported using SRTP and SCTP.

- Universality: The idea behind WebRTC is to work out of the box on the most popular browsers, without installing external plug-ins, special codecs or additional software.

- Flexibility: While the API and protocols are well defined, the negotiation process between two peers is left open for the application to implement in whatever way fits its needs best.

The following sections describe each of these features in more detail.

ICE and NAT Traversal

Arguably, the main limitation of many existing streaming solutions is the difficulty of transmitting data between endpoints behind different NATs and/or firewalls. This is especially true when the streaming is meant to be peer to peer.

Consider the configuration in Figure 1. Each of the computers trying to communicate is behind a NAT and, most likely, a firewall. The first detail to consider is that the IP address assigned to each PC is different from its public IP address. This public IP is probably shared among multiple computers in that local network. In order for PC 1 to send data to PC 2, it needs a mechanism to translate the public address into the appropriate private one. Similarly, to preserve the security of the connection, PC 2 needs to be certain that PC 1 is who it claims to be, considering that its IP address was also translated. This mechanism is called NAT Traversal.

In addition, and for security reasons, both PCs are protected by a firewall. This restricts incoming connections to certain well-known ports for very specific uses. For example, a gateway may block everything but ports 80 and 22, allowing only HTTP and SSH requests, respectively. A PC in this network cannot receive a stream on a different port and hence cannot receive the stream at all. Of course, the firewall may be configured to accept data on an alternative port, but this poses several problems:

- Requires the users to have some level of technical knowledge

- Requires prior knowledge of the port to use

- May be unacceptable on security-critical networks such as military, financial or medical ones

ICE

To overcome this, WebRTC uses ICE (Interactive Connectivity Establishment) to establish an out-of-the-box peer-to-peer connection. ICE, in simple words, is a mechanism that a pair of hosts may use to perform NAT traversal and establish communication. Using ICE, WebRTC users can communicate directly with other computers without extra configuration or additional steps.

To achieve this, ICE attempts to establish connections using several combinations of IP addresses and ports, called candidates. In the end, ICE will have chosen the candidates that successfully allow communication. In general, the ICE candidates may be broken down into three layers, as shown in Table 1.

| Candidate | Description | Provided by |
|---|---|---|
| Host | Host addresses within the NAT | LAN |
| Reflex | Public addresses as seen by an external host | STUN Server |
| Relay | Public addresses of a relay server as transport channel | STUN/TURN Servers |

The following sections provide further detail for each candidate and associated server.

Host Candidates

The host candidates are the IP addresses and ports of a host within the NAT. These candidates will only be visible to computers connected to the same LAN. Naturally, if the host is connected directly to the WAN, these candidates will be its public addresses and hence directly accessible by other peers.

The host candidates are the first candidates to be gathered and tested by ICE. If they happen to work, they are preferred over the other layers because a direct path keeps latency and overhead in the communication to a minimum.
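The preference order among the three candidate layers of Table 1 can be made concrete with the candidate priority formula recommended by RFC 8445. The following is a minimal sketch, not the GstWebRTC implementation: the `Candidate` class, the `TYPE_PREFERENCE` table and the addresses (taken from documentation-reserved ranges) are illustrative assumptions, and a real ICE agent ranks candidate pairs rather than single candidates.

```python
from dataclasses import dataclass

# Type preferences recommended by RFC 8445 (higher is preferred).
# "srflx" is the standard name for what this page calls reflex candidates.
TYPE_PREFERENCE = {"host": 126, "srflx": 100, "relay": 0}


@dataclass(frozen=True)
class Candidate:
    """Hypothetical container for one ICE candidate."""
    kind: str                      # "host", "srflx" or "relay"
    address: str
    port: int
    component: int = 1             # 1 = RTP, 2 = RTCP
    local_preference: int = 65535  # value suggested for single-interface hosts

    @property
    def priority(self) -> int:
        # priority = 2^24 * type pref + 2^8 * local pref + (256 - component)
        return ((TYPE_PREFERENCE[self.kind] << 24)
                + (self.local_preference << 8)
                + (256 - self.component))


# Illustrative candidates, deliberately listed out of order.
candidates = [
    Candidate("relay", "198.51.100.20", 50000),
    Candidate("host", "192.168.1.10", 40000),
    Candidate("srflx", "203.0.113.7", 40000),
]

# Highest priority first: host, then reflex, then relay.
ordered = sorted(candidates, key=lambda c: c.priority, reverse=True)
for c in ordered:
    print(f"{c.kind:5} {c.address}:{c.port} priority={c.priority}")
```

With these defaults the host candidate gets priority 2130706431, the reflex candidate 1694498815 and the relay candidate 16777215, which matches the order in which ICE prefers the layers.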

Reflex Candidates and STUN Server

If the host candidates of PC1 are not accessible to PC2 (for example, because they are on a private, local network), ICE attempts to use reflex candidates. These are the IP addresses and ports as seen by an external host connected to the public network. To gather these candidates, ICE makes use of a STUN Server.

STUN stands for Session Traversal Utilities for NAT and is a lightweight special service that will return the reflex candidates when requested. A general overview of the STUN server usage is shown in Figure 2. PC1 and PC2 send requests to the STUN server (available in the public network) which, in turn, will return the address and port of the hole that was opened during this request. These holes now serve as an open channel to start data streaming and each host can encode these reflex candidates into the protocol specific payload.
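The request and response described above have a simple binary layout. The following sketch, assuming the RFC 5389 message format and IPv4 only, builds a STUN Binding Request and extracts the reflex address from the XOR-MAPPED-ADDRESS attribute of a response. The function names are hypothetical, and no socket code is included: in practice the request would be sent over UDP to a STUN server of your choice.

```python
import ipaddress
import os
import struct

MAGIC_COOKIE = 0x2112A442     # fixed value defined by RFC 5389
BINDING_REQUEST = 0x0001
BINDING_SUCCESS = 0x0101
XOR_MAPPED_ADDRESS = 0x0020


def build_binding_request(transaction_id=None):
    """Return a 20-byte STUN Binding Request with no attributes."""
    transaction_id = transaction_id or os.urandom(12)
    return struct.pack("!HHI12s", BINDING_REQUEST, 0, MAGIC_COOKIE,
                       transaction_id)


def parse_reflex_candidate(response):
    """Return (ip, port) from an IPv4 XOR-MAPPED-ADDRESS attribute."""
    msg_type, length, cookie = struct.unpack_from("!HHI", response, 0)
    if msg_type != BINDING_SUCCESS or cookie != MAGIC_COOKIE:
        raise ValueError("not a STUN Binding Success Response")
    offset, end = 20, 20 + length
    while offset + 4 <= end:
        attr_type, attr_len = struct.unpack_from("!HH", response, offset)
        value = response[offset + 4:offset + 4 + attr_len]
        if attr_type == XOR_MAPPED_ADDRESS and value[1] == 0x01:  # IPv4
            xport, xaddr = struct.unpack_from("!HI", value, 2)
            # Port and address are XOR-ed with the magic cookie.
            port = xport ^ (MAGIC_COOKIE >> 16)
            addr = ipaddress.IPv4Address(xaddr ^ MAGIC_COOKIE)
            return str(addr), port
        # Attributes are padded to a multiple of 4 bytes.
        offset += 4 + attr_len + (-attr_len % 4)
    raise ValueError("no IPv4 XOR-MAPPED-ADDRESS attribute found")
```

The address returned by `parse_reflex_candidate` is the public side of the hole opened in the NAT, which is what the host then advertises as its reflex candidate.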

Reflex candidates are the second preferred set, since the communication is still performed peer to peer between the hosts. The STUN server only facilitates candidate discovery. However, there are several NAT topologies and STUN does not work with all of them: the server will always attempt to report the candidates, but with some configurations the resulting connection will fail. Table 2 summarizes the most common NAT types and whether the STUN server works with each.

| NAT Type | STUN works | STUN does not work |
|---|---|---|
| Full Cone NAT | X | |
| Address Restricted Cone NAT | X | |
| Port Restricted Cone NAT | X | |
| Symmetric NAT | | X |

Relay Candidates and TURN Server

Finally, if reflex candidates do not allow a successful connection to be established, ICE will make use of relay candidates. These candidates are the addresses and ports of a third-party server that acts as a relay for communication or, in other words, forwards messages between a pair of peers. Such a relay is known as a TURN Server.

TURN stands for Traversal Using Relays around NAT and is basically a publicly available server that relays communication when neither host nor reflex candidates were successful. Because TURN servers are publicly available, they are guaranteed to work (unless NATs were specifically configured to block them). However, the communication is no longer peer to peer and hence the other types of candidates should always be preferred. Figure 3 shows a general overview of the TURN server usage. PC1 and PC2 authenticate with the TURN server (because the data will now go through it) and store the addresses that the server allocated for them. Finally, the peers can communicate through the server.

WebRTC Security

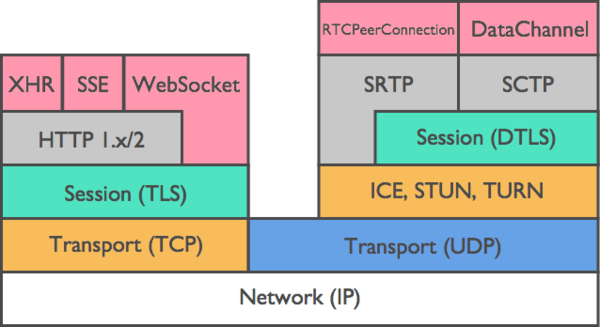

Unlike other streaming protocols, WebRTC forbids streaming data without encryption. As such, security layers are described as part of the standard as exemplified in Figure 4. The stack shows in gray and green the layers related to security and encryption.

SRTP, SRTCP, and SCTP

WebRTC is able to stream audio, video and data. The last one refers to everything that could be transmitted other than the former two, such as chat, file sharing or metadata. Each of these streams is transmitted and secured using different mechanisms.

Media streams (audio and video) are delivered through RTP (Real-time Transport Protocol). This protocol was designed for timely delivery over unreliable channels: it carries sequence numbers and timestamps so that the receiver can reorder packets and tolerate data loss. RTP is usually used in conjunction with RTCP (Real-time Transport Control Protocol), which provides out-of-band statistics, quality-of-service and synchronization data to the participants of the session. These protocols, however, do not specify security considerations. SRTP and SRTCP are RTP and RTCP profiles, respectively, that allow for secure data transmission. Among others, SRTP adds authentication and encryption features to RTP; these features may be disabled if desired, without reverting to plain RTP.

Data streams, on the other hand, are delivered through a different protocol: SCTP. Stream Control Transmission Protocol is a transport-layer protocol sitting next to UDP and TCP. It is message-oriented like UDP and ensures reliable, in-sequence transport of messages with congestion control like TCP; it differs from these in providing multi-homing and redundant paths to increase resilience and reliability. [Wikipedia] In the absence of SCTP support in the kernel, it can be tunneled over UDP.
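To illustrate the information RTP carries for reordering and timing, the following sketch parses the 12-byte fixed header defined in RFC 3550. The `RtpHeader` type, the `parse_rtp_header` function and the hand-built packet are assumptions made for the example; they are not part of GstWebRTC.

```python
import struct
from typing import NamedTuple


class RtpHeader(NamedTuple):
    version: int
    padding: bool
    extension: bool
    csrc_count: int
    marker: bool
    payload_type: int
    sequence_number: int   # lets the receiver reorder and detect loss
    timestamp: int         # sampling instant, in media clock units
    ssrc: int              # identifies the stream source


def parse_rtp_header(packet: bytes) -> RtpHeader:
    """Parse the fixed RTP header (first 12 bytes of the packet)."""
    if len(packet) < 12:
        raise ValueError("RTP packets carry at least a 12-byte header")
    b0, b1, seq, ts, ssrc = struct.unpack_from("!BBHII", packet, 0)
    return RtpHeader(
        version=b0 >> 6,
        padding=bool(b0 & 0x20),
        extension=bool(b0 & 0x10),
        csrc_count=b0 & 0x0F,
        marker=bool(b1 & 0x80),
        payload_type=b1 & 0x7F,
        sequence_number=seq,
        timestamp=ts,
        ssrc=ssrc,
    )


# Hand-built packet: version 2, marker set, dynamic payload type 96.
packet = struct.pack("!BBHII", 0x80, 0x80 | 96, 4711, 90000,
                     0x1234ABCD) + b"payload"
header = parse_rtp_header(packet)
print(header)
```

SRTP keeps this header readable and protects the payload that follows it, which is why the same parsing applies to both plain and secured streams.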

DTLS

Whether it is a media stream through SRTP or a data stream through SCTP, both rely on DTLS. Datagram Transport Layer Security is based on TLS and provides communication security for datagram-based streams. In WebRTC, SCTP data is carried over DTLS, while for media the DTLS handshake supplies the keys used by SRTP. In both cases DTLS preserves the semantics of the underlying protocol while providing authentication, symmetric cryptography, privacy and integrity.
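The authentication provided by DTLS relies on certificates. In WebRTC, each peer normally announces a hash of its certificate in the session description (the `a=fingerprint` attribute of RFC 8122), so the other side can check the certificate presented during the DTLS handshake. The sketch below only shows how such a fingerprint string is formatted; the function name is hypothetical and the input bytes are a stand-in, not a real DER-encoded certificate.

```python
import hashlib


def dtls_fingerprint(certificate_der: bytes) -> str:
    """Format a SHA-256 digest as uppercase, colon-separated hex pairs."""
    digest = hashlib.sha256(certificate_der).hexdigest().upper()
    pairs = [digest[i:i + 2] for i in range(0, len(digest), 2)]
    return "sha-256 " + ":".join(pairs)


# Placeholder bytes used only to exercise the formatting.
placeholder_certificate = b"abc"
fingerprint = dtls_fingerprint(placeholder_certificate)
print("a=fingerprint:" + fingerprint)
```

A receiver would compute the same digest over the certificate it gets in the handshake and reject the connection if the two strings differ.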

WebRTC Application Signaling

As mentioned earlier, one of the main benefits of WebRTC is that, although public APIs and streaming protocols are thoroughly standardized, the initial negotiation and communication establishment is up to the application to implement. This initial handshake should take care of simple tasks, such as letting one peer know when the other is attempting to call, as well as more elaborate ones, like establishing a unique session between two peers and sharing the supported capabilities and ICE candidates of the participants. In the WebRTC context, the part of the application in charge of these tasks is called the Signaler.

Generally speaking, a Signaler should handle, at least, the following topics:

- Media capabilities: Both peers need to agree on the media formats the session will support.

- ICE candidates: Each endpoint needs to know how to deliver the stream to the other endpoint.

- Authentication: Exchange certificates for proper secure communication.

It must be noted that the signaling is not performed over the NAT traversal channels found by ICE. On the contrary, once the ICE candidates are gathered by each peer, they are mutually shared using the Signaler. Therefore, it is up to the application to ensure that this out-of-band communication is performed securely and is accessible to both endpoints. Although a Signaler almost always involves a publicly accessible server, there is no restriction on how it is implemented. In its simplest case it could be a verbal notification from one room to another; more complex cases could implement the signaling via Bluetooth, RF or USB while the streaming is performed securely over the network. Again, the most typical case is a public server with a custom web service exposed for both peers to negotiate. Figure 5 summarizes the interaction of the Signaler in the whole process.
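Since the signaling channel is left to the application, even a very small message broker is enough to illustrate its role. The following is a minimal in-memory sketch, not a real server: the `InMemorySignaler` class, the peer names and the message fields are hypothetical, and the "offer"/"answer" message types follow the usual WebRTC negotiation vocabulary rather than anything mandated for the Signaler itself.

```python
import json
from collections import defaultdict, deque


class InMemorySignaler:
    """Hypothetical signaler that queues JSON messages per recipient."""

    def __init__(self):
        self._mailboxes = defaultdict(deque)

    def send(self, sender, recipient, kind, payload):
        message = json.dumps(
            {"from": sender, "type": kind, "payload": payload})
        self._mailboxes[recipient].append(message)

    def receive(self, recipient):
        """Return and remove the pending messages, oldest first."""
        mailbox = self._mailboxes[recipient]
        messages = [json.loads(m) for m in mailbox]
        mailbox.clear()
        return messages


signaler = InMemorySignaler()

# PC1 shares its media capabilities and one ICE candidate with PC2.
signaler.send("pc1", "pc2", "offer", {"sdp": "<SDP offer from PC1>"})
signaler.send("pc1", "pc2", "candidate",
              {"candidate": "<ICE candidate from PC1>"})
pc2_inbox = signaler.receive("pc2")

# PC2 replies with its own capabilities.
signaler.send("pc2", "pc1", "answer", {"sdp": "<SDP answer from PC2>"})
pc1_inbox = signaler.receive("pc1")

print([m["type"] for m in pc2_inbox], [m["type"] for m in pc1_inbox])
```

In a deployed application the mailboxes would typically live behind a web service reachable by both peers, but the exchanged content stays the same.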

SDP

WebRTC suggests using SDP as the way to exchange information through a Signaler. The Session Description Protocol is a standard, extensible way to describe media formats. This protocol is widely used in several existing streaming solutions such as RTSP or SIP (the latter is not a streaming protocol per se, but a signaling protocol for VoIP).
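An SDP description is plain text made of `key=value` lines, with each `m=` line opening a media section. The sketch below parses a small, hand-written description and extracts its ICE candidates. The sample text, the addresses and the helper names are illustrative assumptions; a description produced by a real WebRTC endpoint contains many more attributes.

```python
SAMPLE_SDP = """
v=0
o=- 4611731400430051336 2 IN IP4 127.0.0.1
s=-
t=0 0
m=video 9 UDP/TLS/RTP/SAVPF 96
c=IN IP4 0.0.0.0
a=rtpmap:96 VP8/90000
a=candidate:1 1 UDP 2130706431 192.168.1.10 40000 typ host
a=candidate:2 1 UDP 1694498815 203.0.113.7 40000 typ srflx
"""


def parse_sdp(text):
    """Split an SDP description into session fields and media sections."""
    session = {"attributes": [], "media": []}
    current = session
    for line in text.strip().splitlines():
        key, _, value = line.strip().partition("=")
        if key == "m":
            kind, port, protocol, *formats = value.split()
            current = {"kind": kind, "port": int(port),
                       "protocol": protocol, "formats": formats,
                       "attributes": []}
            session["media"].append(current)
        elif key == "a":
            current["attributes"].append(value)
        else:
            current[key] = value
    return session


def ice_candidates(media):
    """Return (type, address, port) for each a=candidate attribute."""
    result = []
    for attribute in media["attributes"]:
        if attribute.startswith("candidate:"):
            # foundation component transport priority address port typ type
            fields = attribute[len("candidate:"):].split()
            result.append((fields[7], fields[4], int(fields[5])))
    return result


session = parse_sdp(SAMPLE_SDP)
video = session["media"][0]
print(video["kind"], video["formats"], ice_candidates(video))
```

Both the media capabilities (`a=rtpmap`) and the ICE candidates travel in the same text, which is why a single SDP exchange through the Signaler can cover most of the negotiation.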