GstInference GStreamer pipelines for NVIDIA Xavier

Make sure you also check GstInference's companion project: R2Inference

The following pipelines are deprecated and kept only for reference. If you are using v0.7 or above, see the sample pipelines in the Example Pipelines section.

TensorFlow

Inceptionv4 inference on image file using TensorFlow

- Get the graph used in this example from this link

- You will need an image file showing one of the ImageNet classes

- Pipeline

IMAGE_FILE='cat.jpg'
MODEL_LOCATION='graph_inceptionv4_tensorflow.pb'
INPUT_LAYER='input'
OUTPUT_LAYER='InceptionV4/Logits/Predictions'

GST_DEBUG=*inceptionv4*:6 gst-launch-1.0 \
multifilesrc location=$IMAGE_FILE ! jpegparse ! nvjpegdec ! 'video/x-raw' ! nvvidconv ! 'video/x-raw(memory:NVMM),format=NV12' ! nvvidconv ! queue ! net.sink_model \
inceptionv4 name=net model-location=$MODEL_LOCATION backend=tensorflow backend::input-layer=$INPUT_LAYER backend::output-layer=$OUTPUT_LAYER -v

- Output

0:00:27.311799544 8662 0x5574569c00 LOG inceptionv4 gstinceptionv4.c:199:gst_inceptionv4_preprocess:<net> Preprocess
0:00:27.392320971 8662 0x5574569c00 LOG inceptionv4 gstinceptionv4.c:231:gst_inceptionv4_postprocess:<net> Postprocess
0:00:27.392530965 8662 0x5574569c00 LOG inceptionv4 gstinceptionv4.c:252:gst_inceptionv4_postprocess:<net> Highest probability is label 282 : (0.641151)
0:00:27.392753791 8662 0x5574569c00 LOG inceptionv4 gstinceptionv4.c:199:gst_inceptionv4_preprocess:<net> Preprocess
0:00:27.473382966 8662 0x5574569c00 LOG inceptionv4 gstinceptionv4.c:231:gst_inceptionv4_postprocess:<net> Postprocess
0:00:27.473550590 8662 0x5574569c00 LOG inceptionv4 gstinceptionv4.c:252:gst_inceptionv4_postprocess:<net> Highest probability is label 282 : (0.641151)
0:00:27.473744103 8662 0x5574569c00 LOG inceptionv4 gstinceptionv4.c:199:gst_inceptionv4_preprocess:<net> Preprocess
0:00:27.554464931 8662 0x5574569c00 LOG inceptionv4 gstinceptionv4.c:231:gst_inceptionv4_postprocess:<net> Postprocess
0:00:27.554651980 8662 0x5574569c00 LOG inceptionv4 gstinceptionv4.c:252:gst_inceptionv4_postprocess:<net> Highest probability is label 282 : (0.641151)
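The classification result is only visible inside the debug log, so a small filter can help when you want just the label index and probability. The following is a minimal sketch, not part of GstInference: `parse_top_label` is a hypothetical helper name, and the pattern assumes the message format shown in the output above.

```shell
# Hypothetical helper: read GStreamer debug log text on stdin and print
# "<label> <probability>" for every "Highest probability" message.
parse_top_label() {
  sed -n 's/.*Highest probability is label \([0-9][0-9]*\) : (\([0-9.]*\)).*/\1 \2/p'
}

# Sample line copied from the output above.
sample='0:00:27.392530965 8662 0x5574569c00 LOG inceptionv4 gstinceptionv4.c:252:gst_inceptionv4_postprocess:<net> Highest probability is label 282 : (0.641151)'
result=$(printf '%s\n' "$sample" | parse_top_label)
echo "$result"
```

GStreamer writes debug output to stderr, so with a live pipeline the filter would be attached as `gst-launch-1.0 ... 2>&1 | parse_top_label`. Setting `GST_DEBUG_NO_COLOR=1` keeps color escape codes out of the piped text.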

Inceptionv4 inference on video file using TensorFlow

- Get the graph used in this example from this link

- You will need a video file showing one of the ImageNet classes

- Pipeline

VIDEO_FILE='cat.mp4'
MODEL_LOCATION='graph_inceptionv4_tensorflow.pb'
INPUT_LAYER='input'
OUTPUT_LAYER='InceptionV4/Logits/Predictions'

GST_DEBUG=inceptionv4:6 gst-launch-1.0 \
filesrc location=$VIDEO_FILE ! qtdemux name=demux ! h264parse ! omxh264dec ! nvvidconv ! queue ! net.sink_model \
inceptionv4 name=net model-location=$MODEL_LOCATION backend=tensorflow backend::input-layer=$INPUT_LAYER backend::output-layer=$OUTPUT_LAYER

- Output

0:00:26.066035371 8749 0x5561bb6c00 LOG inceptionv4 gstinceptionv4.c:199:gst_inceptionv4_preprocess:<net> Preprocess
0:00:26.148041538 8749 0x5561bb6c00 LOG inceptionv4 gstinceptionv4.c:231:gst_inceptionv4_postprocess:<net> Postprocess
0:00:26.148233387 8749 0x5561bb6c00 LOG inceptionv4 gstinceptionv4.c:252:gst_inceptionv4_postprocess:<net> Highest probability is label 282 : (0.671327)
0:00:26.148416179 8749 0x5561bb6c00 LOG inceptionv4 gstinceptionv4.c:199:gst_inceptionv4_preprocess:<net> Preprocess
0:00:26.229609892 8749 0x5561bb6c00 LOG inceptionv4 gstinceptionv4.c:231:gst_inceptionv4_postprocess:<net> Postprocess
0:00:26.229837199 8749 0x5561bb6c00 LOG inceptionv4 gstinceptionv4.c:252:gst_inceptionv4_postprocess:<net> Highest probability is label 282 : (0.671591)
0:00:26.230285188 8749 0x5561bb6c00 LOG inceptionv4 gstinceptionv4.c:199:gst_inceptionv4_preprocess:<net> Preprocess
0:00:26.312565831 8749 0x5561bb6c00 LOG inceptionv4 gstinceptionv4.c:231:gst_inceptionv4_postprocess:<net> Postprocess
0:00:26.312736911 8749 0x5561bb6c00 LOG inceptionv4 gstinceptionv4.c:252:gst_inceptionv4_postprocess:<net> Highest probability is label 282 : (0.671140)
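Each frame produces a Preprocess message followed by a Postprocess message, so the gap between their timestamps gives a rough per-frame figure. The sketch below computes that gap in milliseconds. It assumes the gap approximates preprocessing plus the backend inference call, which is an interpretation of the log rather than a documented metric, and `stage_latency_ms` is a hypothetical helper name.

```shell
# Hypothetical helper: print the time in milliseconds between each
# "Preprocess" message and the "Postprocess" message that follows it.
stage_latency_ms() {
  awk '
    # Convert a GStreamer timestamp (H:MM:SS.nnnnnnnnn) to seconds.
    function to_seconds(ts,   p) { split(ts, p, ":"); return p[1] * 3600 + p[2] * 60 + p[3] }
    $NF == "Preprocess"  { start = to_seconds($1) }
    $NF == "Postprocess" { printf "%.1f\n", (to_seconds($1) - start) * 1000 }
  '
}

# First two lines of the output above.
result=$(stage_latency_ms <<'EOF'
0:00:26.066035371 8749 0x5561bb6c00 LOG inceptionv4 gstinceptionv4.c:199:gst_inceptionv4_preprocess:<net> Preprocess
0:00:26.148041538 8749 0x5561bb6c00 LOG inceptionv4 gstinceptionv4.c:231:gst_inceptionv4_postprocess:<net> Postprocess
EOF
)
echo "$result"
```

For this sample the gap is about 82 ms per frame. The helper matches on the last field of each line, so it expects uncolored log text.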

Inceptionv4 inference on camera stream using TensorFlow

- Get the graph used in this example from this link

- You will need a camera compatible with the NVIDIA Libargus API or V4L2.

NVIDIA Camera

- Pipeline

SENSOR_ID=0
MODEL_LOCATION='graph_inceptionv4_tensorflow.pb'
INPUT_LAYER='input'
OUTPUT_LAYER='InceptionV4/Logits/Predictions'

GST_DEBUG=inceptionv4:6 gst-launch-1.0 \
nvarguscamerasrc sensor-id=$SENSOR_ID ! nvvidconv ! queue ! net.sink_model \
inceptionv4 name=net backend=tensorflow model-location=$MODEL_LOCATION backend::input-layer=$INPUT_LAYER backend::output-layer=$OUTPUT_LAYER

- Output

0:00:18.988267527 9466 0x55ab1bdf70 LOG inceptionv4 gstinceptionv4.c:199:gst_inceptionv4_preprocess:<net> Preprocess
0:00:19.070172277 9466 0x55ab1bdf70 LOG inceptionv4 gstinceptionv4.c:231:gst_inceptionv4_postprocess:<net> Postprocess
0:00:19.070390174 9466 0x55ab1bdf70 LOG inceptionv4 gstinceptionv4.c:252:gst_inceptionv4_postprocess:<net> Highest probability is label 572 : (0.236359)
0:00:19.070572038 9466 0x55ab1bdf70 LOG inceptionv4 gstinceptionv4.c:199:gst_inceptionv4_preprocess:<net> Preprocess
0:00:19.151636754 9466 0x55ab1bdf70 LOG inceptionv4 gstinceptionv4.c:231:gst_inceptionv4_postprocess:<net> Postprocess
0:00:19.151839035 9466 0x55ab1bdf70 LOG inceptionv4 gstinceptionv4.c:252:gst_inceptionv4_postprocess:<net> Highest probability is label 572 : (0.219772)
0:00:19.152054147 9466 0x55ab1bdf70 LOG inceptionv4 gstinceptionv4.c:199:gst_inceptionv4_preprocess:<net> Preprocess
0:00:19.233439549 9466 0x55ab1bdf70 LOG inceptionv4 gstinceptionv4.c:231:gst_inceptionv4_postprocess:<net> Postprocess
0:00:19.233609380 9466 0x55ab1bdf70 LOG inceptionv4 gstinceptionv4.c:252:gst_inceptionv4_postprocess:<net> Highest probability is label 572 : (0.232309)

V4L2

- Pipeline

CAMERA='/dev/video1'
MODEL_LOCATION='graph_inceptionv4_tensorflow.pb'
INPUT_LAYER='input'
OUTPUT_LAYER='InceptionV4/Logits/Predictions'

GST_DEBUG=inceptionv4:6 gst-launch-1.0 \
v4l2src device=$CAMERA ! videoconvert ! videoscale ! queue ! net.sink_model \
inceptionv4 name=net model-location=$MODEL_LOCATION backend=tensorflow backend::input-layer=$INPUT_LAYER backend::output-layer=$OUTPUT_LAYER

- Output

0:00:26.011511251 9419 0x55be091a80 LOG inceptionv4 gstinceptionv4.c:199:gst_inceptionv4_preprocess:<net> Preprocess
0:00:26.092995813 9419 0x55be091a80 LOG inceptionv4 gstinceptionv4.c:231:gst_inceptionv4_postprocess:<net> Postprocess
0:00:26.093545274 9419 0x55be091a80 LOG inceptionv4 gstinceptionv4.c:252:gst_inceptionv4_postprocess:<net> Highest probability is label 692 : (0.183870)
0:00:26.111398950 9419 0x55be091a80 LOG inceptionv4 gstinceptionv4.c:199:gst_inceptionv4_preprocess:<net> Preprocess
0:00:26.193210725 9419 0x55be091a80 LOG inceptionv4 gstinceptionv4.c:231:gst_inceptionv4_postprocess:<net> Postprocess
0:00:26.193724089 9419 0x55be091a80 LOG inceptionv4 gstinceptionv4.c:252:gst_inceptionv4_postprocess:<net> Highest probability is label 663 : (0.135397)
0:00:26.212316002 9419 0x55be091a80 LOG inceptionv4 gstinceptionv4.c:199:gst_inceptionv4_preprocess:<net> Preprocess
0:00:26.294116480 9419 0x55be091a80 LOG inceptionv4 gstinceptionv4.c:231:gst_inceptionv4_postprocess:<net> Postprocess
0:00:26.294284935 9419 0x55be091a80 LOG inceptionv4 gstinceptionv4.c:252:gst_inceptionv4_postprocess:<net> Highest probability is label 692 : (0.316769)
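With a live camera the winning label can change from frame to frame, as in the output above (692, 663, 692). One way to summarize a run is to count how often each label wins. This is a sketch under the same log-format assumption as the output above, and `tally_labels` is a hypothetical helper name.

```shell
# Hypothetical helper: print "<label> <count>" lines, most frequent first,
# for all "Highest probability" messages read from stdin.
tally_labels() {
  sed -n 's/.*Highest probability is label \([0-9][0-9]*\) :.*/\1/p' |
    sort | uniq -c | sort -rn | awk '{ print $2, $1 }'
}

# The three classification lines from the output above.
top=$(tally_labels <<'EOF' | head -n 1
0:00:26.093545274 9419 0x55be091a80 LOG inceptionv4 gstinceptionv4.c:252:gst_inceptionv4_postprocess:<net> Highest probability is label 692 : (0.183870)
0:00:26.193724089 9419 0x55be091a80 LOG inceptionv4 gstinceptionv4.c:252:gst_inceptionv4_postprocess:<net> Highest probability is label 663 : (0.135397)
0:00:26.294284935 9419 0x55be091a80 LOG inceptionv4 gstinceptionv4.c:252:gst_inceptionv4_postprocess:<net> Highest probability is label 692 : (0.316769)
EOF
)
echo "$top"
```

Here label 692 wins two of the three frames. Counting wins ignores the probability values, so treat it as a quick sanity check rather than a smoothing method.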

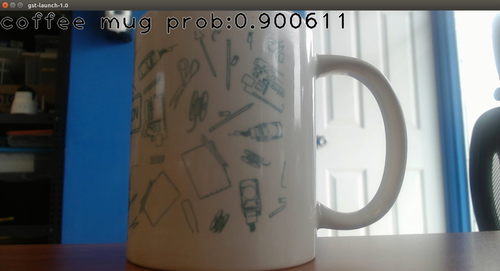

Inceptionv4 visualization with classification overlay using TensorFlow

- Get the graph used in this example from this link

- You will need a camera compatible with the NVIDIA Libargus API or V4L2.

NVIDIA Camera

- Pipeline

SENSOR_ID=0
MODEL_LOCATION='graph_inceptionv4_tensorflow.pb'
INPUT_LAYER='input'
OUTPUT_LAYER='InceptionV4/Logits/Predictions'
LABELS='imagenet_labels.txt'

GST_DEBUG=inceptionv4:6 \
gst-launch-1.0 \
nvarguscamerasrc sensor-id=$SENSOR_ID ! 'video/x-raw(memory:NVMM)' ! tee name=t \
t. ! queue max-size-buffers=1 leaky=downstream ! nvvidconv ! 'video/x-raw,format=(string)RGBA' ! net.sink_model \
t. ! queue max-size-buffers=1 leaky=downstream ! nvvidconv ! 'video/x-raw,format=(string)RGBA' ! net.sink_bypass \
inceptionv4 name=net backend=tensorflow model-location=$MODEL_LOCATION backend::input-layer=$INPUT_LAYER backend::output-layer=$OUTPUT_LAYER \
net.src_bypass ! classificationoverlay labels="$(cat $LABELS)" font-scale=4 thickness=4 ! nvvidconv ! nvoverlaysink sync=false -v

V4L2

- Pipeline

CAMERA='/dev/video1'
MODEL_LOCATION='graph_inceptionv4_tensorflow.pb'
INPUT_LAYER='input'
OUTPUT_LAYER='InceptionV4/Logits/Predictions'
LABELS='imagenet_labels.txt'

gst-launch-1.0 \
v4l2src device=$CAMERA ! "video/x-raw, width=1280, height=720" ! tee name=t \
t. ! videoconvert ! videoscale ! queue ! net.sink_model \
t. ! queue ! net.sink_bypass \
inceptionv4 name=net model-location=$MODEL_LOCATION backend=tensorflow backend::input-layer=$INPUT_LAYER backend::output-layer=$OUTPUT_LAYER \
net.src_bypass ! videoconvert ! classificationoverlay labels="$(cat $LABELS)" font-scale=4 thickness=4 ! videoconvert ! xvimagesink sync=false

- Output

TinyYolov2 inference on image file using TensorFlow

- Get the graph used in this example from this link

- You will need an image file showing one of the TinyYOLO classes

- Pipeline

IMAGE_FILE='cat.jpg'
MODEL_LOCATION='graph_tinyyolov2_tensorflow.pb'
INPUT_LAYER='input/Placeholder'
OUTPUT_LAYER='add_8'

GST_DEBUG=*tinyyolov2*:6 gst-launch-1.0 \
multifilesrc location=$IMAGE_FILE ! jpegparse ! nvjpegdec ! 'video/x-raw' ! nvvidconv ! 'video/x-raw(memory:NVMM),format=NV12' ! nvvidconv ! queue ! net.sink_model \
tinyyolov2 name=net model-location=$MODEL_LOCATION backend=tensorflow backend::input-layer=$INPUT_LAYER backend::output-layer=$OUTPUT_LAYER

- Output

0:00:13.688000146 9079 0x5586b50400 LOG tinyyolov2 gsttinyyolov2.c:479:gst_tinyyolov2_preprocess:<net> Preprocess
0:00:13.718464628 9079 0x5586b50400 LOG tinyyolov2 gsttinyyolov2.c:501:gst_tinyyolov2_postprocess:<net> Postprocess
0:00:13.718745089 9079 0x5586b50400 LOG tinyyolov2 gsttinyyolov2.c:384:print_top_predictions:<net> Box: [class:7, x:25.599654, y:11.992019, width:425.713685, height:450.001330, prob:15.272157]
0:00:13.718885768 9079 0x5586b50400 LOG tinyyolov2 gsttinyyolov2.c:479:gst_tinyyolov2_preprocess:<net> Preprocess
0:00:13.749664857 9079 0x5586b50400 LOG tinyyolov2 gsttinyyolov2.c:501:gst_tinyyolov2_postprocess:<net> Postprocess
0:00:13.749913956 9079 0x5586b50400 LOG tinyyolov2 gsttinyyolov2.c:384:print_top_predictions:<net> Box: [class:7, x:25.599654, y:11.992019, width:425.713685, height:450.001330, prob:15.272157]
0:00:13.750110573 9079 0x5586b50400 LOG tinyyolov2 gsttinyyolov2.c:479:gst_tinyyolov2_preprocess:<net> Preprocess
0:00:13.780493932 9079 0x5586b50400 LOG tinyyolov2 gsttinyyolov2.c:501:gst_tinyyolov2_postprocess:<net> Postprocess
0:00:13.780683893 9079 0x5586b50400 LOG tinyyolov2 gsttinyyolov2.c:384:print_top_predictions:<net> Box: [class:7, x:25.599654, y:11.992019, width:425.713685, height:450.001330, prob:15.272157]
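Detection results arrive as `Box: [...]` messages in the same debug log. The sketch below turns them into comma-separated values (class, x, y, width, height, prob) for further processing. It assumes the field order shown in the output above, and `parse_boxes` is a hypothetical helper name, not a GstInference tool.

```shell
# Hypothetical helper: convert "Box: [...]" log messages read from stdin
# into CSV rows: class,x,y,width,height,prob. x and y may be negative.
parse_boxes() {
  sed -n 's/.*Box: \[class:\([0-9]*\), x:\([-0-9.]*\), y:\([-0-9.]*\), width:\([0-9.]*\), height:\([0-9.]*\), prob:\([0-9.]*\)].*/\1,\2,\3,\4,\5,\6/p'
}

# Sample line copied from the output above.
sample='0:00:13.718745089 9079 0x5586b50400 LOG tinyyolov2 gsttinyyolov2.c:384:print_top_predictions:<net> Box: [class:7, x:25.599654, y:11.992019, width:425.713685, height:450.001330, prob:15.272157]'
row=$(printf '%s\n' "$sample" | parse_boxes)
echo "$row"
```

Lines that do not contain a box message are dropped, so the helper can read the full log stream unfiltered.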

TinyYolov2 inference on video file using TensorFlow

- Get the graph used in this example from this link

- You will need a video file showing one of the TinyYOLO classes

- Pipeline

VIDEO_FILE='cat.mp4'
MODEL_LOCATION='graph_tinyyolov2_tensorflow.pb'
INPUT_LAYER='input/Placeholder'
OUTPUT_LAYER='add_8'

GST_DEBUG=tinyyolov2:6 gst-launch-1.0 \
filesrc location=$VIDEO_FILE ! qtdemux name=demux ! h264parse ! omxh264dec ! nvvidconv ! queue ! net.sink_model \
tinyyolov2 name=net model-location=$MODEL_LOCATION backend=tensorflow backend::input-layer=$INPUT_LAYER backend::output-layer=$OUTPUT_LAYER

- Output

0:00:14.381019732 9117 0x557acd2400 LOG tinyyolov2 gsttinyyolov2.c:479:gst_tinyyolov2_preprocess:<net> Preprocess
0:00:14.411021505 9117 0x557acd2400 LOG tinyyolov2 gsttinyyolov2.c:501:gst_tinyyolov2_postprocess:<net> Postprocess
0:00:14.411177384 9117 0x557acd2400 LOG tinyyolov2 gsttinyyolov2.c:384:print_top_predictions:<net> Box: [class:7, x:-44.591707, y:-5.845335, width:442.388362, height:481.775217, prob:14.093070]
0:00:14.411345232 9117 0x557acd2400 LOG tinyyolov2 gsttinyyolov2.c:479:gst_tinyyolov2_preprocess:<net> Preprocess
0:00:14.442502898 9117 0x557acd2400 LOG tinyyolov2 gsttinyyolov2.c:501:gst_tinyyolov2_postprocess:<net> Postprocess
0:00:14.442725660 9117 0x557acd2400 LOG tinyyolov2 gsttinyyolov2.c:384:print_top_predictions:<net> Box: [class:7, x:-44.701888, y:-5.632816, width:442.592144, height:481.298278, prob:14.067967]
0:00:14.442917957 9117 0x557acd2400 LOG tinyyolov2 gsttinyyolov2.c:479:gst_tinyyolov2_preprocess:<net> Preprocess
0:00:14.473535727 9117 0x557acd2400 LOG tinyyolov2 gsttinyyolov2.c:501:gst_tinyyolov2_postprocess:<net> Postprocess
0:00:14.473714423 9117 0x557acd2400 LOG tinyyolov2 gsttinyyolov2.c:384:print_top_predictions:<net> Box: [class:7, x:-44.265085, y:-5.536356, width:441.748600, height:481.168355, prob:14.094460]

TinyYolov2 inference on camera stream using TensorFlow

- Get the graph used in this example from this link

- You will need a camera compatible with the NVIDIA Libargus API or V4L2.

NVIDIA Camera

- Pipeline

SENSOR_ID=0
MODEL_LOCATION='graph_tinyyolov2_tensorflow.pb'
INPUT_LAYER='input/Placeholder'
OUTPUT_LAYER='add_8'

GST_DEBUG=tinyyolov2:6 gst-launch-1.0 \
nvarguscamerasrc sensor-id=$SENSOR_ID ! nvvidconv ! 'video/x-raw,format=BGRx' ! queue ! net.sink_model \
tinyyolov2 name=net model-location=$MODEL_LOCATION backend=tensorflow backend::input-layer=$INPUT_LAYER backend::output-layer=$OUTPUT_LAYER

- Output

0:00:14.000211619 10096 0x559e4a3ad0 LOG tinyyolov2 gsttinyyolov2.c:479:gst_tinyyolov2_preprocess:<net> Preprocess
0:00:14.030785264 10096 0x559e4a3ad0 LOG tinyyolov2 gsttinyyolov2.c:501:gst_tinyyolov2_postprocess:<net> Postprocess
0:00:14.031252958 10096 0x559e4a3ad0 LOG tinyyolov2 gsttinyyolov2.c:384:print_top_predictions:<net> Box: [class:14, x:254.023574, y:0.996667, width:159.442352, height:348.442927, prob:9.038744]
0:00:14.031711884 10096 0x559e4a3ad0 LOG tinyyolov2 gsttinyyolov2.c:479:gst_tinyyolov2_preprocess:<net> Preprocess
0:00:14.062577890 10096 0x559e4a3ad0 LOG tinyyolov2 gsttinyyolov2.c:501:gst_tinyyolov2_postprocess:<net> Postprocess
0:00:14.062782056 10096 0x559e4a3ad0 LOG tinyyolov2 gsttinyyolov2.c:384:print_top_predictions:<net> Box: [class:14, x:256.071149, y:1.104463, width:156.332928, height:348.299846, prob:8.926831]
0:00:14.062944333 10096 0x559e4a3ad0 LOG tinyyolov2 gsttinyyolov2.c:479:gst_tinyyolov2_preprocess:<net> Preprocess
0:00:14.093773666 10096 0x559e4a3ad0 LOG tinyyolov2 gsttinyyolov2.c:501:gst_tinyyolov2_postprocess:<net> Postprocess
0:00:14.093983657 10096 0x559e4a3ad0 LOG tinyyolov2 gsttinyyolov2.c:384:print_top_predictions:<net> Box: [class:14, x:256.927108, y:1.150354, width:156.535506, height:348.715533, prob:8.894010]

V4L2

- Pipeline

CAMERA='/dev/video1'
MODEL_LOCATION='graph_tinyyolov2_tensorflow.pb'
INPUT_LAYER='input/Placeholder'
OUTPUT_LAYER='add_8'

GST_DEBUG=tinyyolov2:6 gst-launch-1.0 \
v4l2src device=$CAMERA ! videoconvert ! videoscale ! queue ! net.sink_model \
tinyyolov2 name=net model-location=$MODEL_LOCATION backend=tensorflow backend::input-layer=$INPUT_LAYER backend::output-layer=$OUTPUT_LAYER

- Output

0:00:28.565124313 10004 0x557d50b8f0 LOG tinyyolov2 gsttinyyolov2.c:479:gst_tinyyolov2_preprocess:<net> Preprocess
0:00:28.595697567 10004 0x557d50b8f0 LOG tinyyolov2 gsttinyyolov2.c:501:gst_tinyyolov2_postprocess:<net> Postprocess
0:00:28.595854948 10004 0x557d50b8f0 LOG tinyyolov2 gsttinyyolov2.c:384:print_top_predictions:<net> Box: [class:8, x:185.249442, y:65.398277, width:105.441149, height:227.889733, prob:9.066236]
0:00:28.597461562 10004 0x557d50b8f0 LOG tinyyolov2 gsttinyyolov2.c:479:gst_tinyyolov2_preprocess:<net> Preprocess
0:00:28.628034112 10004 0x557d50b8f0 LOG tinyyolov2 gsttinyyolov2.c:501:gst_tinyyolov2_postprocess:<net> Postprocess
0:00:28.628255335 10004 0x557d50b8f0 LOG tinyyolov2 gsttinyyolov2.c:384:print_top_predictions:<net> Box: [class:8, x:186.390381, y:66.198741, width:103.368757, height:226.331411, prob:9.443931]
0:00:28.629607125 10004 0x557d50b8f0 LOG tinyyolov2 gsttinyyolov2.c:479:gst_tinyyolov2_preprocess:<net> Preprocess
0:00:28.660004596 10004 0x557d50b8f0 LOG tinyyolov2 gsttinyyolov2.c:501:gst_tinyyolov2_postprocess:<net> Postprocess
0:00:28.660173338 10004 0x557d50b8f0 LOG tinyyolov2 gsttinyyolov2.c:384:print_top_predictions:<net> Box: [class:8, x:186.278082, y:67.236859, width:103.066607, height:225.127201, prob:8.822085]

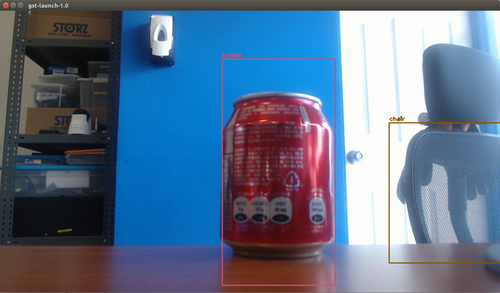

TinyYolov2 visualization with detection overlay using TensorFlow

- Get the graph used in this example from this link

- You will need a camera compatible with the NVIDIA Libargus API or V4L2.

NVIDIA Camera

- Pipeline

SENSOR_ID=0
MODEL_LOCATION='graph_tinyyolov2_tensorflow.pb'
INPUT_LAYER='input/Placeholder'
OUTPUT_LAYER='add_8'
LABELS='labels.txt'

GST_DEBUG=tinyyolov2:6 \
gst-launch-1.0 \
nvarguscamerasrc sensor-id=$SENSOR_ID ! 'video/x-raw(memory:NVMM)' ! tee name=t \
t. ! queue max-size-buffers=1 leaky=downstream ! nvvidconv ! 'video/x-raw,format=(string)RGBA' ! net.sink_model \
t. ! queue max-size-buffers=1 leaky=downstream ! nvvidconv ! 'video/x-raw,format=(string)RGBA' ! net.sink_bypass \
tinyyolov2 name=net backend=tensorflow model-location=$MODEL_LOCATION backend::input-layer=$INPUT_LAYER backend::output-layer=$OUTPUT_LAYER \
net.src_bypass ! detectionoverlay labels="$(cat $LABELS)" font-scale=4 thickness=4 ! nvvidconv ! nvoverlaysink sync=false -v

V4L2

- Pipeline

CAMERA='/dev/video1'
MODEL_LOCATION='graph_tinyyolov2_tensorflow.pb'
INPUT_LAYER='input/Placeholder'
OUTPUT_LAYER='add_8'
LABELS='labels.txt'

gst-launch-1.0 \
v4l2src device=$CAMERA ! "video/x-raw, width=1280, height=720" ! tee name=t \
t. ! videoconvert ! videoscale ! queue ! net.sink_model \
t. ! queue ! net.sink_bypass \
tinyyolov2 name=net model-location=$MODEL_LOCATION backend=tensorflow backend::input-layer=$INPUT_LAYER backend::output-layer=$OUTPUT_LAYER \
net.src_bypass ! videoconvert ! detectionoverlay labels="$(cat $LABELS)" font-scale=1 thickness=2 ! videoconvert ! xvimagesink sync=false

- Output

FaceNet visualization with embedding overlay using TensorFlow

- Get the graph used in this example from this link

- You will need a camera compatible with the NVIDIA Libargus API or V4L2.

- LABELS and EMBEDDINGS files are in $PATH_TO_GST_INFERENCE_ROOT_DIR/tests/examples/embedding/embeddings.
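Because these paths are built from `$PATH_TO_GST_INFERENCE_ROOT_DIR`, the quoting style matters when you assign them to shell variables: single quotes keep the variable name literal, while double quotes expand it. The sketch below shows the difference. The `/opt/gst-inference` location is a made-up value for illustration only.

```shell
# Hypothetical checkout location, used only for this illustration.
PATH_TO_GST_INFERENCE_ROOT_DIR='/opt/gst-inference'

# Single quotes: the variable reference is stored as literal text.
SINGLE='$PATH_TO_GST_INFERENCE_ROOT_DIR/tests/examples/embedding/embeddings/labels.txt'

# Double quotes: the shell substitutes the variable's value.
DOUBLE="$PATH_TO_GST_INFERENCE_ROOT_DIR/tests/examples/embedding/embeddings/labels.txt"

echo "$SINGLE"
echo "$DOUBLE"
```

Only the double-quoted form yields a path that `cat` can open, which is what the `labels="$(cat $LABELS)"` and `embeddings="$(cat $EMBEDDINGS)"` properties rely on.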

NVIDIA Camera

- Pipeline

SENSOR_ID=0
MODEL_LOCATION='graph_facenetv1_tensorflow.pb'
INPUT_LAYER='input'
OUTPUT_LAYER='output'
LABELS="$PATH_TO_GST_INFERENCE_ROOT_DIR/tests/examples/embedding/embeddings/labels.txt"
EMBEDDINGS="$PATH_TO_GST_INFERENCE_ROOT_DIR/tests/examples/embedding/embeddings/embeddings.txt"

gst-launch-1.0 \
nvarguscamerasrc sensor-id=$SENSOR_ID ! 'video/x-raw(memory:NVMM)' ! nvvidconv ! 'video/x-raw,format=BGRx,width=(int)1280,height=(int)720' ! videoconvert ! tee name=t \
t. ! queue ! videoscale ! queue ! net.sink_model \
t. ! queue ! net.sink_bypass \
facenetv1 name=net model-location=$MODEL_LOCATION backend=tensorflow backend::input-layer=$INPUT_LAYER backend::output-layer=$OUTPUT_LAYER \
net.src_bypass ! videoconvert ! embeddingoverlay labels="$(cat $LABELS)" embeddings="$(cat $EMBEDDINGS)" font-scale=4 thickness=4 ! videoconvert ! xvimagesink sync=false

V4L2

- Pipeline

CAMERA='/dev/video1'
MODEL_LOCATION='graph_facenetv1_tensorflow.pb'
INPUT_LAYER='input'
OUTPUT_LAYER='output'
LABELS="$PATH_TO_GST_INFERENCE_ROOT_DIR/tests/examples/embedding/embeddings/labels.txt"
EMBEDDINGS="$PATH_TO_GST_INFERENCE_ROOT_DIR/tests/examples/embedding/embeddings/embeddings.txt"

gst-launch-1.0 \
v4l2src device=$CAMERA ! "video/x-raw, width=1280, height=720" ! tee name=t \
t. ! videoconvert ! videoscale ! queue ! net.sink_model \
t. ! queue ! net.sink_bypass \
facenetv1 name=net model-location=$MODEL_LOCATION backend=tensorflow backend::input-layer=$INPUT_LAYER backend::output-layer=$OUTPUT_LAYER \
net.src_bypass ! videoconvert ! embeddingoverlay labels="$(cat $LABELS)" embeddings="$(cat $EMBEDDINGS)" font-scale=4 thickness=4 ! videoconvert ! xvimagesink sync=false

- Output

TensorFlow-Lite

Inceptionv4 inference on image file using TensorFlow-Lite

- Get the graph used in this example from this link

- You will need an image file showing one of the ImageNet classes

- Pipeline

IMAGE_FILE='cat.jpg'
MODEL_LOCATION='graph_inceptionv4.tflite'
LABELS='labels.txt'

GST_DEBUG=inceptionv4:6 gst-launch-1.0 \
multifilesrc location=$IMAGE_FILE ! jpegparse ! nvjpegdec ! 'video/x-raw' ! nvvidconv ! 'video/x-raw(memory:NVMM),format=NV12' ! nvvidconv ! queue ! net.sink_model \
inceptionv4 name=net model-location=$MODEL_LOCATION backend=tflite labels="$(cat $LABELS)"

- Output

0:02:22.005527960 30355 0x5accf0 LOG inceptionv4 gstinceptionv4.c:200:gst_inceptionv4_preprocess:<net> Preprocess
0:02:22.168796723 30355 0x5accf0 LOG inceptionv4 gstinceptionv4.c:232:gst_inceptionv4_postprocess:<net> Postprocess
0:02:22.168947603 30355 0x5accf0 LOG inceptionv4 gstinceptionv4.c:253:gst_inceptionv4_postprocess:<net> Highest probability is label 282 : (0.627314)
0:02:22.169237202 30355 0x5accf0 LOG inceptionv4 gstinceptionv4.c:200:gst_inceptionv4_preprocess:<net> Preprocess
0:02:22.339393463 30355 0x5accf0 LOG inceptionv4 gstinceptionv4.c:232:gst_inceptionv4_postprocess:<net> Postprocess
0:02:22.339496918 30355 0x5accf0 LOG inceptionv4 gstinceptionv4.c:253:gst_inceptionv4_postprocess:<net> Highest probability is label 282 : (0.627314)
0:02:22.339701878 30355 0x5accf0 LOG inceptionv4 gstinceptionv4.c:200:gst_inceptionv4_preprocess:<net> Preprocess
0:02:22.507804674 30355 0x5accf0 LOG inceptionv4 gstinceptionv4.c:232:gst_inceptionv4_postprocess:<net> Postprocess
0:02:22.507950081 30355 0x5accf0 LOG inceptionv4 gstinceptionv4.c:253:gst_inceptionv4_postprocess:<net> Highest probability is label 282 : (0.627314)
0:02:22.508232128 30355 0x5accf0 LOG inceptionv4 gstinceptionv4.c:200:gst_inceptionv4_preprocess:<net> Preprocess
0:02:22.678740356 30355 0x5accf0 LOG inceptionv4 gstinceptionv4.c:232:gst_inceptionv4_postprocess:<net> Postprocess
0:02:22.678892356 30355 0x5accf0 LOG inceptionv4 gstinceptionv4.c:253:gst_inceptionv4_postprocess:<net> Highest probability is label 282 : (0.627314)
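The TensorFlow-Lite pipelines hand the label list to the element as a string, using the shell's command substitution `$(cat $LABELS)`. Dropping the leading `$` changes the meaning entirely: the element then receives the literal text `(cat labels.txt)` instead of the file contents. The sketch below illustrates the difference with a temporary file. The semicolon-separated content is a made-up placeholder, and the real label file format may differ.

```shell
# Create a throwaway label file; its content is a hypothetical placeholder.
LABELS=$(mktemp)
printf 'cat;dog;bird' > "$LABELS"

# Without the leading "$" this is just a string containing the file name.
literal="(cat $LABELS)"

# With "$( ... )" the shell runs cat and substitutes the file contents.
expanded="$(cat "$LABELS")"

echo "$literal"
echo "$expanded"
rm -f "$LABELS"
```

A quick `echo` of the property value before launching the pipeline is an easy way to confirm the labels were actually read.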

Inceptionv4 inference on video file using TensorFlow-Lite

- Get the graph used in this example from this link

- You will need a video file showing one of the ImageNet classes

- Pipeline

VIDEO_FILE='cat.mp4'
MODEL_LOCATION='graph_inceptionv4.tflite'
LABELS='labels.txt'

GST_DEBUG=inceptionv4:6 gst-launch-1.0 \
filesrc location=$VIDEO_FILE ! qtdemux name=demux ! h264parse ! omxh264dec ! nvvidconv ! queue ! net.sink_model \
inceptionv4 name=net model-location=$MODEL_LOCATION backend=tflite labels="$(cat $LABELS)"

- Output

0:00:11.728307018 30399 0x5ad000 LOG inceptionv4 gstinceptionv4.c:200:gst_inceptionv4_preprocess:<net> Preprocess
0:00:11.892030154 30399 0x5ad000 LOG inceptionv4 gstinceptionv4.c:232:gst_inceptionv4_postprocess:<net> Postprocess
0:00:11.892258185 30399 0x5ad000 LOG inceptionv4 gstinceptionv4.c:253:gst_inceptionv4_postprocess:<net> Highest probability is label 282 : (0.686857)
0:00:11.892556808 30399 0x5ad000 LOG inceptionv4 gstinceptionv4.c:200:gst_inceptionv4_preprocess:<net> Preprocess
0:00:12.065318539 30399 0x5ad000 LOG inceptionv4 gstinceptionv4.c:232:gst_inceptionv4_postprocess:<net> Postprocess
0:00:12.065467786 30399 0x5ad000 LOG inceptionv4 gstinceptionv4.c:253:gst_inceptionv4_postprocess:<net> Highest probability is label 282 : (0.673300)
0:00:12.065759849 30399 0x5ad000 LOG inceptionv4 gstinceptionv4.c:200:gst_inceptionv4_preprocess:<net> Preprocess
0:00:12.247159695 30399 0x5ad000 LOG inceptionv4 gstinceptionv4.c:232:gst_inceptionv4_postprocess:<net> Postprocess
0:00:12.247309295 30399 0x5ad000 LOG inceptionv4 gstinceptionv4.c:253:gst_inceptionv4_postprocess:<net> Highest probability is label 282 : (0.669102)
0:00:12.247612718 30399 0x5ad000 LOG inceptionv4 gstinceptionv4.c:200:gst_inceptionv4_preprocess:<net> Preprocess
0:00:12.419172436 30399 0x5ad000 LOG inceptionv4 gstinceptionv4.c:232:gst_inceptionv4_postprocess:<net> Postprocess
0:00:12.419321396 30399 0x5ad000 LOG inceptionv4 gstinceptionv4.c:253:gst_inceptionv4_postprocess:<net> Highest probability is label 282 : (0.667991)

Inceptionv4 inference on camera stream using TensorFlow-Lite

- Get the graph used in this example from this link

- You will need a camera compatible with the NVIDIA Libargus API or V4L2.

NVIDIA Camera

- Pipeline

SENSOR_ID=0
MODEL_LOCATION='graph_inceptionv4.tflite'
LABELS='labels.txt'

GST_DEBUG=inceptionv4:6 gst-launch-1.0 \
nvarguscamerasrc sensor-id=$SENSOR_ID ! nvvidconv ! queue ! net.sink_model \
inceptionv4 name=net backend=tflite model-location=$MODEL_LOCATION labels="$(cat $LABELS)"

V4L2

- Pipeline

CAMERA='/dev/video0'
MODEL_LOCATION='graph_inceptionv4.tflite'
LABELS='labels.txt'

GST_DEBUG=inceptionv4:6 gst-launch-1.0 \
v4l2src device=$CAMERA ! videoconvert ! videoscale ! queue ! net.sink_model \
inceptionv4 name=net model-location=$MODEL_LOCATION backend=tflite labels="$(cat $LABELS)"

- Output

0:00:12.199657219 4675 0x10ee590 LOG inceptionv4 gstinceptionv4.c:200:gst_inceptionv4_preprocess:<net> Preprocess
0:00:12.365172092 4675 0x10ee590 LOG inceptionv4 gstinceptionv4.c:232:gst_inceptionv4_postprocess:<net> Postprocess
0:00:12.365271548 4675 0x10ee590 LOG inceptionv4 gstinceptionv4.c:253:gst_inceptionv4_postprocess:<net> Highest probability is label 774 : (0.196048)
0:00:12.365421435 4675 0x10ee590 LOG inceptionv4 gstinceptionv4.c:200:gst_inceptionv4_preprocess:<net> Preprocess
0:00:12.530604726 4675 0x10ee590 LOG inceptionv4 gstinceptionv4.c:232:gst_inceptionv4_postprocess:<net> Postprocess
0:00:12.530700501 4675 0x10ee590 LOG inceptionv4 gstinceptionv4.c:253:gst_inceptionv4_postprocess:<net> Highest probability is label 774 : (0.179406)
0:00:12.530848565 4675 0x10ee590 LOG inceptionv4 gstinceptionv4.c:200:gst_inceptionv4_preprocess:<net> Preprocess
0:00:12.697053611 4675 0x10ee590 LOG inceptionv4 gstinceptionv4.c:232:gst_inceptionv4_postprocess:<net> Postprocess
0:00:12.697147818 4675 0x10ee590 LOG inceptionv4 gstinceptionv4.c:253:gst_inceptionv4_postprocess:<net> Highest probability is label 774 : (0.144033)
0:00:12.697295530 4675 0x10ee590 LOG inceptionv4 gstinceptionv4.c:200:gst_inceptionv4_preprocess:<net> Preprocess
0:00:12.862007878 4675 0x10ee590 LOG inceptionv4 gstinceptionv4.c:232:gst_inceptionv4_postprocess:<net> Postprocess
0:00:12.862104134 4675 0x10ee590 LOG inceptionv4 gstinceptionv4.c:253:gst_inceptionv4_postprocess:<net> Highest probability is label 774 : (0.157707)
0:00:12.862252645 4675 0x10ee590 LOG inceptionv4 gstinceptionv4.c:200:gst_inceptionv4_preprocess:<net> Preprocess
0:00:13.027090881 4675 0x10ee590 LOG inceptionv4 gstinceptionv4.c:232:gst_inceptionv4_postprocess:<net> Postprocess
0:00:13.027190273 4675 0x10ee590 LOG inceptionv4 gstinceptionv4.c:253:gst_inceptionv4_postprocess:<net> Highest probability is label 774 : (0.142998)

Inceptionv4 visualization with classification overlay using TensorFlow-Lite

- Get the graph used in this example from this link

- You will need a camera compatible with the NVIDIA Libargus API or V4L2.

NVIDIA Camera

- Pipeline

SENSOR_ID=0
MODEL_LOCATION='graph_inceptionv4.tflite'
LABELS='labels.txt'

gst-launch-1.0 \
nvarguscamerasrc sensor-id=$SENSOR_ID ! 'video/x-raw(memory:NVMM)' ! tee name=t \
t. ! queue max-size-buffers=1 leaky=downstream ! nvvidconv ! 'video/x-raw,format=(string)RGBA' ! net.sink_model \
t. ! queue max-size-buffers=1 leaky=downstream ! nvvidconv ! 'video/x-raw,format=(string)RGBA' ! net.sink_bypass \
inceptionv4 name=net backend=tflite model-location=$MODEL_LOCATION labels="$(cat $LABELS)" \
net.src_bypass ! classificationoverlay labels="$(cat $LABELS)" font-scale=4 thickness=4 ! nvvidconv ! nvoverlaysink sync=false -v

V4L2

- Pipeline

CAMERA='/dev/video1'
MODEL_LOCATION='graph_inceptionv4.tflite'
LABELS='labels.txt'

gst-launch-1.0 \
v4l2src device=$CAMERA ! "video/x-raw, width=1280, height=720" ! tee name=t \
t. ! videoconvert ! videoscale ! queue ! net.sink_model \
t. ! queue ! net.sink_bypass \
inceptionv4 name=net backend=tflite model-location=$MODEL_LOCATION labels="$(cat $LABELS)" \
net.src_bypass ! videoconvert ! classificationoverlay labels="$(cat $LABELS)" font-scale=4 thickness=4 ! videoconvert ! xvimagesink sync=false

- Output

TinyYolov2 inference on image file using TensorFlow-Lite

- Get the graph used in this example from this link

- You will need an image file showing one of the TinyYOLO classes

- Pipeline

IMAGE_FILE='cat.jpg'
MODEL_LOCATION='graph_tinyyolov2.tflite'
LABELS='labels.txt'

GST_DEBUG=tinyyolov2:6 gst-launch-1.0 \
multifilesrc location=$IMAGE_FILE ! jpegparse ! nvjpegdec ! 'video/x-raw' ! nvvidconv ! 'video/x-raw(memory:NVMM),format=NV12' ! nvvidconv ! queue ! net.sink_model \
tinyyolov2 name=net backend=tflite model-location=$MODEL_LOCATION labels="$(cat $LABELS)"

- Output

0:00:07.137677204 30513 0x5accf0 LOG tinyyolov2 gsttinyyolov2.c:479:gst_tinyyolov2_preprocess:<net> Preprocess
0:00:07.266928985 30513 0x5accf0 LOG tinyyolov2 gsttinyyolov2.c:501:gst_tinyyolov2_postprocess:<net> Postprocess
0:00:07.267080761 30513 0x5accf0 LOG tinyyolov2 gsttinyyolov2.c:384:print_top_predictions:<net> Box: [class:7, x:25.820670, y:11.977936, width:425.495203, height:450.224357, prob:15.204609]
0:00:07.267382968 30513 0x5accf0 LOG tinyyolov2 gsttinyyolov2.c:479:gst_tinyyolov2_preprocess:<net> Preprocess
0:00:07.394225925 30513 0x5accf0 LOG tinyyolov2 gsttinyyolov2.c:501:gst_tinyyolov2_postprocess:<net> Postprocess
0:00:07.394431653 30513 0x5accf0 LOG tinyyolov2 gsttinyyolov2.c:384:print_top_predictions:<net> Box: [class:7, x:25.820670, y:11.977936, width:425.495203, height:450.224357, prob:15.204609]
0:00:07.394858915 30513 0x5accf0 LOG tinyyolov2 gsttinyyolov2.c:479:gst_tinyyolov2_preprocess:<net> Preprocess
0:00:07.527547133 30513 0x5accf0 LOG tinyyolov2 gsttinyyolov2.c:501:gst_tinyyolov2_postprocess:<net> Postprocess
0:00:07.527753020 30513 0x5accf0 LOG tinyyolov2 gsttinyyolov2.c:384:print_top_predictions:<net> Box: [class:7, x:25.820670, y:11.977936, width:425.495203, height:450.224357, prob:15.204609]
0:00:07.528080219 30513 0x5accf0 LOG tinyyolov2 gsttinyyolov2.c:479:gst_tinyyolov2_preprocess:<net> Preprocess
0:00:07.662473455 30513 0x5accf0 LOG tinyyolov2 gsttinyyolov2.c:501:gst_tinyyolov2_postprocess:<net> Postprocess
0:00:07.662769998 30513 0x5accf0 LOG tinyyolov2 gsttinyyolov2.c:384:print_top_predictions:<net> Box: [class:7, x:25.820670, y:11.977936, width:425.495203, height:450.224357, prob:15.204609]

TinyYolov2 inference on video file using TensorFlow-Lite

- Get the graph used in this example from this link

- You will need a video file showing one of the TinyYOLO classes

- Pipeline

VIDEO_FILE='cat.mp4'
MODEL_LOCATION='graph_tinyyolov2.tflite'
LABELS='labels.txt'

GST_DEBUG=tinyyolov2:6 gst-launch-1.0 \
filesrc location=$VIDEO_FILE ! qtdemux name=demux ! h264parse ! omxh264dec ! nvvidconv ! queue ! net.sink_model \
tinyyolov2 name=net backend=tflite model-location=$MODEL_LOCATION labels="$(cat $LABELS)"

- Output

0:00:07.245722660 30545 0x5ad000 LOG tinyyolov2 gsttinyyolov2.c:479:gst_tinyyolov2_preprocess:<net> Preprocess
0:00:07.360377432 30545 0x5ad000 LOG tinyyolov2 gsttinyyolov2.c:501:gst_tinyyolov2_postprocess:<net> Postprocess
0:00:07.360586455 30545 0x5ad000 LOG tinyyolov2 gsttinyyolov2.c:384:print_top_predictions:<net> Box: [class:7, x:-46.105452, y:-9.139365, width:445.139551, height:487.967720, prob:14.592537]
0:00:07.360859318 30545 0x5ad000 LOG tinyyolov2 gsttinyyolov2.c:479:gst_tinyyolov2_preprocess:<net> Preprocess
0:00:07.489190714 30545 0x5ad000 LOG tinyyolov2 gsttinyyolov2.c:501:gst_tinyyolov2_postprocess:<net> Postprocess
0:00:07.489382873 30545 0x5ad000 LOG tinyyolov2 gsttinyyolov2.c:384:print_top_predictions:<net> Box: [class:7, x:-46.140270, y:-9.193503, width:445.228762, height:488.028163, prob:14.596972]
0:00:07.489736216 30545 0x5ad000 LOG tinyyolov2 gsttinyyolov2.c:479:gst_tinyyolov2_preprocess:<net> Preprocess
0:00:07.629190069 30545 0x5ad000 LOG tinyyolov2 gsttinyyolov2.c:501:gst_tinyyolov2_postprocess:<net> Postprocess
0:00:07.629379733 30545 0x5ad000 LOG tinyyolov2 gsttinyyolov2.c:384:print_top_predictions:<net> Box: [class:7, x:-46.281640, y:-9.164348, width:445.512899, height:487.908826, prob:14.596945]
0:00:07.629717876 30545 0x5ad000 LOG tinyyolov2 gsttinyyolov2.c:479:gst_tinyyolov2_preprocess:<net> Preprocess
0:00:07.761072493 30545 0x5ad000 LOG tinyyolov2 gsttinyyolov2.c:501:gst_tinyyolov2_postprocess:<net> Postprocess
0:00:07.761271244 30545 0x5ad000 LOG tinyyolov2 gsttinyyolov2.c:384:print_top_predictions:<net> Box: [class:7, x:-46.338202, y:-9.202273, width:445.624841, height:487.954952, prob:14.592540]

TinyYolov2 inference on camera stream using TensorFlow-Lite

- Get the graph used in this example from this link

- You will need a camera compatible with the NVIDIA Libargus API or V4L2.
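
Before launching a camera pipeline, it can help to confirm that the required GStreamer elements are installed and that the V4L2 device node exists. The following is a minimal sketch under stated assumptions: the function names missing_elements and camera_present and the INSPECT override variable are hypothetical helpers introduced here, not part of GstInference. The check itself relies on the --exists option of gst-inspect-1.0.

```shell
# Hypothetical helper: print every element from the argument list that
# gst-inspect-1.0 cannot find. INSPECT is an illustrative override hook
# so that the check can be dry-run without GStreamer installed.
missing_elements() {
  for element in "$@"; do
    "${INSPECT:-gst-inspect-1.0}" --exists "$element" >/dev/null 2>&1 || echo "$element"
  done
}

# Hypothetical helper: succeed when the given path is a character device,
# which is what a V4L2 camera node such as /dev/video1 should be.
camera_present() {
  [ -c "$1" ]
}

# Possible usage:
#   missing_elements tinyyolov2 nvarguscamerasrc v4l2src nvvidconv
#   camera_present /dev/video1 || echo "camera not found"
```

An empty result from missing_elements means every listed element was found.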

NVIDIA Camera

- Pipeline

SENSOR_ID=0 MODEL_LOCATION='graph_tinyyolov2.tflite' LABELS='labels.txt'

GST_DEBUG=tinyyolov2:6 gst-launch-1.0 \
nvarguscamerasrc sensor-id=$SENSOR_ID ! nvvidconv ! 'video/x-raw,format=BGRx' ! queue ! net.sink_model \
tinyyolov2 name=net backend=tflite model-location=$MODEL_LOCATION labels="$(cat $LABELS)"

V4L2

- Pipeline

CAMERA='/dev/video1' MODEL_LOCATION='graph_tinyyolov2.tflite' LABELS='labels.txt'

GST_DEBUG=tinyyolov2:6 gst-launch-1.0 \
v4l2src device=$CAMERA ! videoconvert ! videoscale ! queue ! net.sink_model \
tinyyolov2 name=net model-location=$MODEL_LOCATION backend=tflite labels="$(cat $LABELS)"

- Output

0:00:39.754924355 5030 0x10ee590 LOG tinyyolov2 gsttinyyolov2.c:479:gst_tinyyolov2_preprocess:<net> Preprocess
0:00:39.876816786 5030 0x10ee590 LOG tinyyolov2 gsttinyyolov2.c:501:gst_tinyyolov2_postprocess:<net> Postprocess
0:00:39.876914225 5030 0x10ee590 LOG tinyyolov2 gsttinyyolov2.c:384:print_top_predictions:<net> Box: [class:4, x:147.260736, y:116.184709, width:134.389472, height:245.113627, prob:8.375733]
0:00:39.877085489 5030 0x10ee590 LOG tinyyolov2 gsttinyyolov2.c:479:gst_tinyyolov2_preprocess:<net> Preprocess
0:00:39.999699614 5030 0x10ee590 LOG tinyyolov2 gsttinyyolov2.c:501:gst_tinyyolov2_postprocess:<net> Postprocess
0:00:39.999799198 5030 0x10ee590 LOG tinyyolov2 gsttinyyolov2.c:384:print_top_predictions:<net> Box: [class:4, x:146.957935, y:117.902112, width:134.883825, height:242.143126, prob:7.982772]
0:00:39.999962206 5030 0x10ee590 LOG tinyyolov2 gsttinyyolov2.c:479:gst_tinyyolov2_preprocess:<net> Preprocess
0:00:40.118613969 5030 0x10ee590 LOG tinyyolov2 gsttinyyolov2.c:501:gst_tinyyolov2_postprocess:<net> Postprocess
0:00:40.118712017 5030 0x10ee590 LOG tinyyolov2 gsttinyyolov2.c:384:print_top_predictions:<net> Box: [class:4, x:147.147349, y:116.562615, width:134.469630, height:244.181931, prob:8.139100]
0:00:40.118882641 5030 0x10ee590 LOG tinyyolov2 gsttinyyolov2.c:479:gst_tinyyolov2_preprocess:<net> Preprocess
0:00:40.264861052 5030 0x10ee590 LOG tinyyolov2 gsttinyyolov2.c:501:gst_tinyyolov2_postprocess:<net> Postprocess
0:00:40.264964828 5030 0x10ee590 LOG tinyyolov2 gsttinyyolov2.c:384:print_top_predictions:<net> Box: [class:4, x:146.618516, y:117.162739, width:135.454029, height:243.785573, prob:8.112847]
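
The timestamps in a log like the one above give a rough idea of how long each frame takes: the gap between a Preprocess message and the following Postprocess message approximately covers preprocessing plus inference. The following is a minimal sketch, not part of GstInference; the function name frame_latency_ms is hypothetical, and it assumes one log entry per line with the H:MM:SS.nnnnnnnnn timestamp in the first column.

```shell
# Hypothetical helper: read a GStreamer debug log on stdin and print, for each
# Preprocess/Postprocess pair, the elapsed time between them in milliseconds.
frame_latency_ms() {
  awk '
    # Convert an H:MM:SS.nnnnnnnnn timestamp into seconds.
    function to_seconds(ts,   p) {
      split(ts, p, ":")
      return p[1] * 3600 + p[2] * 60 + p[3]
    }
    # Remember when preprocessing of the current frame started.
    / Preprocess *$/ { start = to_seconds($1); have_start = 1 }
    # Report the elapsed time once the matching Postprocess message appears.
    / Postprocess *$/ {
      if (have_start) {
        printf "%.3f\n", (to_seconds($1) - start) * 1000
        have_start = 0
      }
    }
  '
}
```

For the first frame in the log above, the two timestamps 0:00:39.754924355 and 0:00:39.876816786 are 121.892 ms apart.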

TinyYolov2 visualization with detection overlay using TensorFlow-Lite

- Get the graph used in this example from this link

- You will need a camera compatible with the NVIDIA Libargus API or V4L2.

NVIDIA Camera

- Pipeline

SENSOR_ID=0 MODEL_LOCATION='graph_tinyyolov2.tflite' LABELS='labels.txt'

GST_DEBUG=tinyyolov2:6 \
gst-launch-1.0 \
nvarguscamerasrc sensor-id=$SENSOR_ID ! 'video/x-raw(memory:NVMM)' ! tee name=t \
t. ! queue max-size-buffers=1 leaky=downstream ! nvvidconv ! 'video/x-raw,format=(string)RGBA' ! net.sink_model \
t. ! queue max-size-buffers=1 leaky=downstream ! nvvidconv ! 'video/x-raw,format=(string)RGBA' ! net.sink_bypass \
tinyyolov2 name=net backend=tflite model-location=$MODEL_LOCATION labels="$(cat $LABELS)" \
net.src_bypass ! detectionoverlay labels="$(cat $LABELS)" font-scale=4 thickness=4 ! nvvidconv ! nvoverlaysink sync=false -v

V4L2

- Pipeline

CAMERA='/dev/video1' MODEL_LOCATION='graph_tinyyolov2.tflite' LABELS='labels.txt'

gst-launch-1.0 \
v4l2src device=$CAMERA ! "video/x-raw, width=1280, height=720" ! tee name=t \
t. ! videoconvert ! videoscale ! queue ! net.sink_model \
t. ! queue ! net.sink_bypass \
tinyyolov2 name=net backend=tflite model-location=$MODEL_LOCATION labels="$(cat $LABELS)" \
net.src_bypass ! videoconvert ! detectionoverlay labels="$(cat $LABELS)" font-scale=1 thickness=2 ! videoconvert ! xvimagesink sync=false

- Output