Image Stitching for NVIDIA Jetson/User Guide/Additional Details


Latest revision as of 15:27, 3 October 2024


Additional Details

This page holds some additional notes and useful details to consider when using the stitcher element.

360 Video

For 360 video stitching, the output video file can be displayed as a 360-degree video. The following command writes the spherical tag into the video file:

```bash
exiftool -XMP-GSpherical:Spherical="true" file.mp4
```

With the spherical tag set, video players such as VLC recognize the file and display it as a 360-degree video.
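The command above can be wrapped in a small helper that tags a file and then reads the tag back to confirm it was written. This is a sketch, assuming exiftool is installed and on the PATH. The function name `tag_spherical` is hypothetical, and `-overwrite_original` is added so exiftool does not leave a `file.mp4_original` backup next to the video.

```bash
# Hypothetical helper: writes the GSpherical "Spherical" XMP tag into a video
# file and prints the value read back from that file.
tag_spherical() {
    local file="$1"

    # Fail early if exiftool is not available.
    if ! command -v exiftool >/dev/null 2>&1; then
        echo "exiftool not found" >&2
        return 1
    fi

    # Fail early if the input file does not exist.
    if [ ! -f "$file" ]; then
        echo "no such file: $file" >&2
        return 1
    fi

    # Same tag as the command above; -overwrite_original skips the backup copy.
    exiftool -overwrite_original -XMP-GSpherical:Spherical="true" "$file" || return 1

    # -s3 prints only the tag value, without the tag name.
    exiftool -s3 -XMP-GSpherical:Spherical "$file"
}
```

For example, `tag_spherical file.mp4` tags the file and then prints the stored value, so a missing or empty result points to a failed write.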

Video Cropping

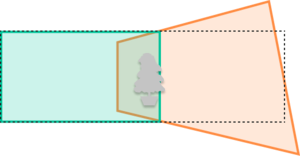

The stitcher element applies multiple transformations to the target sources in order to achieve a seamless match with the target. These transformations can in some cases distort the output resolution and provide an image that is a different size and shape than desired. The image below represents an example of this behavior:

GStreamer's videocrop element can crop the stitcher's output to the expected resolution. It takes four main properties (top, bottom, left, and right), each specifying the number of pixels to remove from the corresponding side.

To determine these values, start from the stitcher's original, uncropped output and, based on its dimensions, subtract the excess from each side.
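As a sketch of where videocrop sits in a pipeline, the example below uses `videotestsrc` as a stand-in for the stitcher's uncropped output, because the stitcher's own properties are outside the scope of this section. The 1920x1200 input, the 1600x1080 target, and the crop values are hypothetical placeholders (including the even left/right split), and `PIPELINE` and `run_crop_preview` are illustrative names. Replace the source with your stitcher pipeline and the values with the ones computed for your setup.

```bash
# Hypothetical uncropped size (stand-in for the stitcher output).
FULL_W=1920
FULL_H=1200

# Hypothetical crop values that reduce 1920x1200 to 1600x1080.
TOP=60
BOTTOM=60
LEFT=160
RIGHT=160

# videotestsrc stands in for the stitcher and stops after 60 frames;
# videocrop removes the given number of pixels from each side.
PIPELINE=(
    videotestsrc num-buffers=60
    '!' "video/x-raw,width=${FULL_W},height=${FULL_H}"
    '!' videocrop "top=${TOP}" "bottom=${BOTTOM}" "left=${LEFT}" "right=${RIGHT}"
    '!' videoconvert
    '!' autovideosink
)

# Runs the pipeline if gst-launch-1.0 is available.
run_crop_preview() {
    if ! command -v gst-launch-1.0 >/dev/null 2>&1; then
        echo "gst-launch-1.0 not found" >&2
        return 1
    fi
    gst-launch-1.0 "${PIPELINE[@]}"
}
```

Calling `run_crop_preview` should display the test pattern cropped to 1600x1080. Keeping the pipeline in a bash array lets each element and property stay a separate argument without extra quoting.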

Placing videocrop after the stitcher in your pipeline reduces the output to only the region you need. The original image can then turn into:

![after videocrop example](Stitcher_videocrop_after.png)

To crop the stitcher's output, add a videocrop element after the stitcher and determine its parameters. First, obtain the following information:

- $width_{full}$: the full width of the image without cropping, that is, the width of the stitcher element's output.

- $height_{full}$: the full height of the image without cropping, that is, the height of the stitcher element's output.

- $width_{crop}$: the cropped width of the image, that is, the desired output width.

- $height_{crop}$: the cropped height of the image, that is, the desired output height.

From these values, the videocrop parameters can be determined:

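- top: $\frac{height_{full}-height_{crop}}{2}$

- bottom: $\frac{height_{full}-height_{crop}}{2}$

- left: the part of $width_{full}-width_{crop}$ to remove from the left side.

- right: the remaining horizontal excess, so that $left + right = width_{full}-width_{crop}$.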

The top and bottom formulas assume that the transformations distort the image symmetrically in the vertical dimension. This is usually the case when the cameras are parallel to one another; however, depending on your use case, you might need to adjust the proportion between top and bottom.

The 50/50 split is usually a good starting point; adjust it from there based on the output.
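The formulas above can be turned into a small helper that prints a videocrop element description. This is a sketch under stated assumptions: the function name `videocrop_params` and its optional percentage arguments are hypothetical. The top share defaults to 50 percent, matching the 50/50 starting point; the left share also defaults to 50 percent purely as an illustrative assumption, so adjust it for your output. Integer division sends any odd remaining pixel to the bottom or right side.

```bash
# Hypothetical helper: prints a videocrop element description from the
# uncropped (full) and desired (crop) dimensions.
# Usage: videocrop_params FULL_W FULL_H CROP_W CROP_H [TOP_PCT] [LEFT_PCT]
#   TOP_PCT  - share of the vertical excess removed from the top (default 50)
#   LEFT_PCT - share of the horizontal excess removed from the left
#              (default 50, an illustrative assumption)
videocrop_params() {
    local full_w=$1 full_h=$2 crop_w=$3 crop_h=$4
    local top_pct=${5:-50} left_pct=${6:-50}

    # Total number of pixels to remove in each dimension.
    local excess_h=$(( full_h - crop_h ))
    local excess_w=$(( full_w - crop_w ))

    if (( excess_h < 0 || excess_w < 0 )); then
        echo "crop size must not exceed the full size" >&2
        return 1
    fi
    if (( top_pct < 0 || top_pct > 100 || left_pct < 0 || left_pct > 100 )); then
        echo "percentages must be between 0 and 100" >&2
        return 1
    fi

    # Split each excess by the chosen percentage; the opposite side gets the
    # rest, so top + bottom and left + right always add up to the excess.
    local top=$(( excess_h * top_pct / 100 ))
    local bottom=$(( excess_h - top ))
    local left=$(( excess_w * left_pct / 100 ))
    local right=$(( excess_w - left ))

    echo "videocrop top=${top} bottom=${bottom} left=${left} right=${right}"
}
```

For example, with made-up dimensions, `videocrop_params 1920 1200 1600 1080` prints `videocrop top=60 bottom=60 left=160 right=160`, and passing `25` as the fifth argument shifts the vertical split to `top=30 bottom=90`. The printed string can be placed right after the stitcher in a gst-launch-1.0 pipeline.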