RidgeRun and Arducam NVIDIA Jetson Demo - Getting Started - Overview

Overview of NVIDIA® Jetson™ Orin NX AI Media Server demo

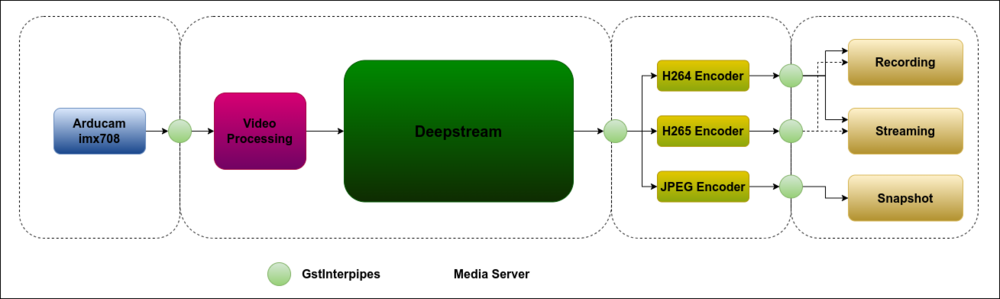

The purpose of the RidgeRun and Arducam NVIDIA Jetson™ Demo is to show how simple the overall design of a complex GStreamer-based application (such as the media server) becomes when using RidgeRun's GstD and GstInterpipe products.

In this case, the controller application for the media server is a Python script, but it could just as well be a Qt GUI or a C/C++ application, among other alternatives, which gives your design a great deal of flexibility. The diagram presents the media server as a set of functional blocks that, when interconnected, provide several flow paths for data (video buffers).

Demo requirements

- Hardware
  - NVIDIA Jetson Orin NX Developer Board
  - Arducam imx708 camera
- Software
  - NVIDIA JetPack 5.1.1
  - NVIDIA DeepStream 6.2
  - IMX708 camera driver
  - Docker container

Demo Script Overview

The following sections show a segmented view of the media-server.py script:

Pipeline Class Definition

In the code snippet shown below, the GstD Python client is imported and the PipelineEntity class is defined, with helper methods that simplify pipeline control in the media server. We also create arrays to group the pipelines: base (capture, processing, DeepStream, and display), encoding, recording, and snapshot. Finally, we create the instance of the GstD client.

#!/usr/bin/env python3

import time
from pygstc.gstc import *

# Create PipelineEntity object to manage each pipeline
class PipelineEntity(object):
    def __init__(self, client, name, description):
        self._name = name
        self._description = description
        self._client = client
        print("Creating pipeline: " + self._name)
        self._client.pipeline_create(self._name, self._description)

    def play(self):
        print("Playing pipeline: " + self._name)
        self._client.pipeline_play(self._name)

    def stop(self):
        print("Stopping pipeline: " + self._name)
        self._client.pipeline_stop(self._name)

    def delete(self):
        print("Deleting pipeline: " + self._name)
        self._client.pipeline_delete(self._name)

    def eos(self):
        print("Sending EOS to pipeline: " + self._name)
        self._client.event_eos(self._name)

    def set_file_location(self, location):
        print("Setting " + self._name + " pipeline recording/snapshot location to " + location)
        filesink_name = "filesink_" + self._name
        self._client.element_set(self._name, filesink_name, 'location', location)

    def listen_to(self, sink):
        print(self._name + " pipeline listening to " + sink)
        self._client.element_set(self._name, self._name + '_src', 'listen-to', sink)

pipelines_base = []
pipelines_video_rec = []
pipelines_video_enc = []
pipelines_snap = []

# Create GstD Python client
client = GstdClient()
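
The helper methods derive element names from the pipeline name: set_file_location targets an element called filesink_<name>, and listen_to targets <name>_src. The following is a minimal sketch of that convention that runs without a GstD daemon. RecordingClient is a hypothetical stand-in (not part of pygstc) that only records the calls it receives, PipelineEntity is a trimmed copy of the class above, and the pipeline description is a shortened placeholder rather than the one used by the demo.

```python
class RecordingClient:
    """Hypothetical stand-in for GstdClient: records calls instead of sending them."""

    def __init__(self):
        self.calls = []

    def pipeline_create(self, name, description):
        self.calls.append(("pipeline_create", name, description))

    def pipeline_play(self, name):
        self.calls.append(("pipeline_play", name))

    def element_set(self, pipeline, element, prop, value):
        self.calls.append(("element_set", pipeline, element, prop, value))


class PipelineEntity:
    """Trimmed copy of the class above, without the print statements."""

    def __init__(self, client, name, description):
        self._name = name
        self._client = client
        self._client.pipeline_create(name, description)

    def play(self):
        self._client.pipeline_play(self._name)

    def set_file_location(self, location):
        # The filesink element must be named "filesink_<pipeline name>"
        self._client.element_set(self._name, "filesink_" + self._name, "location", location)

    def listen_to(self, sink):
        # The interpipesrc element must be named "<pipeline name>_src"
        self._client.element_set(self._name, self._name + "_src", "listen-to", sink)


client = RecordingClient()
# Placeholder description, shortened for illustration only
h264 = PipelineEntity(client, "h264", "interpipesrc name=h264_src listen-to=deep ! fakesink")
h264.listen_to("camera0_rgba_nvmm")
h264.play()

for call in client.calls:
    print(call)
```

A pipeline whose interpipesrc or filesink does not follow this naming convention cannot be controlled through these two helpers.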

Pipelines Creation

Capture Pipelines

In this snippet, we create the capture pipeline and a processing pipeline that converts the camera output to the format/colorspace required at the DeepStream pipeline input: RGBA in NVMM memory in this case. Notice that both pipelines are appended to the pipelines_base array.

The media server expects the imx708 camera to be identified as /dev/video0. This must be validated and changed if necessary for the media server to work properly.

# Create camera pipelines
camera0 = PipelineEntity(client, 'camera0', 'nvarguscamerasrc sensor-id=0 name=video ! \
video/x-raw(memory:NVMM),width=4608,height=2592,framerate=14/1,format=NV12 ! nvvidconv \
! video/x-raw(memory:NVMM),width=1920,height=1080 ! interpipesink name=camera_src0 \
forward-eos=true forward-events=true async=true sync=false')

pipelines_base.append(camera0)

camera0_rgba_nvmm = PipelineEntity(client, 'camera0_rgba_nvmm', 'interpipesrc \
listen-to=camera_src0 ! nvvideoconvert ! \
video/x-raw(memory:NVMM),format=RGBA,width=1920,height=1080 ! queue ! interpipesink \
name=camera0_rgba_nvmm forward-events=true forward-eos=true sync=false \
caps=video/x-raw(memory:NVMM),format=RGBA,width=1920,height=1080,pixel-aspect-ratio=1/1,\
interlace-mode=progressive,framerate=14/1')

pipelines_base.append(camera0_rgba_nvmm)
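
One possible way to validate the /dev/video0 requirement before starting the media server is sketched below. It is not part of media-server.py; the function name is_char_device is hypothetical, and the check only confirms that the path is a character device node (as video capture nodes are on Linux), not that the node belongs to the imx708 sensor.

```python
import os
import stat


def is_char_device(path):
    """Return True if path exists and is a character device node."""
    try:
        return stat.S_ISCHR(os.stat(path).st_mode)
    except OSError:
        # Missing path or no permission to stat it
        return False


if is_char_device("/dev/video0"):
    print("/dev/video0 is present")
else:
    print("/dev/video0 not found: check the camera connection and driver")
```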

DeepStream Pipeline

The snippet below shows the DeepStream pipeline. Notice that this pipeline is also appended to the pipelines_base array.

# Create DeepStream pipeline
deepstream = PipelineEntity(client, 'deepstream', 'interpipesrc listen-to=camera0_rgba_nvmm \
! nvstreammux0.sink_0 nvstreammux name=nvstreammux0 batch-size=2 batched-push-timeout=40000 \
width=1920 height=1080 ! queue ! nvinfer batch-size=2 \
config-file-path=../deepstream-models/dstest1_pgie_config.txt ! \
queue ! nvtracker ll-lib-file=../deepstream-models/libnvds_nvmultiobjecttracker.so \
enable-batch-process=true ! queue ! nvmultistreamtiler width=1920 height=1080 rows=1 \
columns=1 ! nvvideoconvert ! nvdsosd ! queue ! interpipesink name=deep forward-events=true \
forward-eos=true sync=false')

pipelines_base.append(deepstream)

Encoding Pipelines

The pipelines created in the code below encode the input video stream (the DeepStream output) with the H.264, H.265, and JPEG codecs.

The h264 and h265 pipelines are appended to the pipelines_video_enc array while the jpeg pipeline is part of the pipelines_snap array.

# Create encoding pipelines
h264_deep = PipelineEntity(client, 'h264', 'interpipesrc name=h264_src format=time listen-to=deep \
! nvvideoconvert ! nvv4l2h264enc name=encoder maxperf-enable=1 insert-sps-pps=1 \
insert-vui=1 bitrate=10000000 preset-level=1 iframeinterval=30 control-rate=1 idrinterval=30 \
! h264parse ! interpipesink name=h264_sink async=true sync=false forward-eos=true forward-events=true')

pipelines_video_enc.append(h264_deep)

h265 = PipelineEntity(client, 'h265', 'interpipesrc name=h265_src format=time listen-to=deep \
! nvvideoconvert ! nvv4l2h265enc name=encoder maxperf-enable=1 insert-sps-pps=1 \
insert-vui=1 bitrate=10000000 preset-level=1 iframeinterval=30 control-rate=1 idrinterval=30 \
! h265parse ! interpipesink name=h265_sink async=true sync=false forward-eos=true forward-events=true')

pipelines_video_enc.append(h265)

jpeg = PipelineEntity(client, 'jpeg', 'interpipesrc name=jpeg_src format=time listen-to=deep \
! nvvideoconvert ! video/x-raw(memory:NVMM),format=NV12,width=1920,height=1080 ! nvjpegenc ! \
interpipesink name=jpeg forward-events=true forward-eos=true sync=false async=false \
enable-last-sample=false drop=true')

pipelines_snap.append(jpeg)

Video Recording Pipelines

These pipelines are responsible for writing an encoded stream to file.

Notice that these pipelines are appended to the pipelines_video_rec array.

# Create recording pipelines
record_h264 = PipelineEntity(client, 'record_h264', 'interpipesrc format=time \
allow-renegotiation=false listen-to=h264_sink ! h264parse ! matroskamux ! filesink \
name=filesink_record_h264 location=test-h264.mkv')

pipelines_video_rec.append(record_h264)

record_h265 = PipelineEntity(client, 'record_h265', 'interpipesrc format=time \
allow-renegotiation=false listen-to=h265_sink ! h265parse ! matroskamux ! filesink \
name=filesink_record_h265 location=test-h265.mkv')

pipelines_video_rec.append(record_h265)

Image Snapshots Pipeline

The pipeline below saves a jpeg encoded buffer to file. This pipeline is also appended to the pipelines_snap array.

# Create snapshot pipeline
snapshot = PipelineEntity(client, 'snapshot', 'interpipesrc format=time listen-to=jpeg \
num-buffers=1 ! filesink name=filesink_snapshot location=test-snapshot.jpg')

pipelines_snap.append(snapshot)

Video Display Pipeline

The snippet below shows the display pipeline. This pipeline is appended to the pipelines_base array.

# Create display pipeline
display = PipelineEntity(client, 'display', 'interpipesrc listen-to=deep ! \
nvvideoconvert ! nvegltransform ! nveglglessink sync=false')

pipelines_base.append(display)
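
Each interpipesrc is connected to an interpipesink only through the name given in listen-to, so a typo in either name leaves a pipeline without input. The sketch below is one way to list the connections and spot dangling names. It is not part of media-server.py: the descriptions are trimmed to their interpipe elements, the function interpipe_links is hypothetical, and the regular expressions assume that name= immediately follows interpipesink, as it does in the pipelines above.

```python
import re

# Trimmed descriptions: only the interpipe elements of the pipelines above are kept
descriptions = {
    "camera0": "nvarguscamerasrc ! interpipesink name=camera_src0",
    "camera0_rgba_nvmm": "interpipesrc listen-to=camera_src0 ! interpipesink name=camera0_rgba_nvmm",
    "deepstream": "interpipesrc listen-to=camera0_rgba_nvmm ! interpipesink name=deep",
    "h264": "interpipesrc name=h264_src listen-to=deep ! interpipesink name=h264_sink",
    "h265": "interpipesrc name=h265_src listen-to=deep ! interpipesink name=h265_sink",
    "jpeg": "interpipesrc name=jpeg_src listen-to=deep ! interpipesink name=jpeg",
    "record_h264": "interpipesrc listen-to=h264_sink ! filesink name=filesink_record_h264",
    "record_h265": "interpipesrc listen-to=h265_sink ! filesink name=filesink_record_h265",
    "snapshot": "interpipesrc listen-to=jpeg ! filesink name=filesink_snapshot",
    "display": "interpipesrc listen-to=deep ! nveglglessink",
}

SINK_RE = re.compile(r"interpipesink\s+name=(\S+)")
LISTEN_RE = re.compile(r"listen-to=(\S+)")


def interpipe_links(descriptions):
    """Return ({sink name: owning pipeline}, [(listening pipeline, sink name)])."""
    sinks = {}
    links = []
    for pipeline, text in descriptions.items():
        for sink in SINK_RE.findall(text):
            sinks[sink] = pipeline
        for target in LISTEN_RE.findall(text):
            links.append((pipeline, target))
    return sinks, links


sinks, links = interpipe_links(descriptions)
for pipeline, target in links:
    print(sinks.get(target, "?") + " -> " + pipeline + " (via " + target + ")")

# Listeners whose target name does not match any interpipesink
dangling = [(pipeline, target) for pipeline, target in links if target not in sinks]
print("dangling:", dangling)
```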

Pipelines Execution

First, we play all the pipelines in the pipelines_base array. This includes the capture, processing, DeepStream, and display pipelines.

# Play base pipelines
for pipeline in pipelines_base:
    pipeline.play()

time.sleep(10)

Then the main function is defined. This function displays a menu with the following options and requests a selection:

def main():
    while (True):
        print("\nMedia Server Menu\n \
        1) Start recording\n \
        2) Take a snapshot\n \
        3) Stop recording\n \
        4) Exit")

        option = int(input('\nPlease select an option:\n'))
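
Note that int() raises ValueError when the entered text is not a number, in which case the script ends before reaching the cleanup performed by option 4. A minimal sketch of a more tolerant parser follows. The function parse_option is hypothetical and not part of media-server.py; it returns None for anything that is not one of the four menu options, so the caller can treat None like the invalid-option case.

```python
def parse_option(text, valid=(1, 2, 3, 4)):
    """Return the selected option as an int, or None if text is not a valid choice."""
    try:
        option = int(text.strip())
    except ValueError:
        # Non-numeric or empty input
        return None
    return option if option in valid else None


for sample in ["1", " 4 ", "abc", "", "7"]:
    print(repr(sample), "->", parse_option(sample))
```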

If option 1 is selected, we request a name for the recording and set the file locations of the video recordings. Then we play all the recording and encoding pipelines.

        if option == 1:
            rec_name = str(input("Enter the name of the video recording (without extension):\n"))

            # Set locations for video recordings
            for pipeline in pipelines_video_rec:
                pipeline.set_file_location(rec_name + '_' + pipeline._name + '.mkv')

            # Play video recording pipelines
            for pipeline in pipelines_video_rec:
                pipeline.play()

            # Play video encoding pipelines
            for pipeline in pipelines_video_enc:
                pipeline.play()

            time.sleep(20)
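
Because the location is built from the entered name and the pipeline name, one recording request produces one file per recording pipeline. The short sketch below reproduces only the string construction; recording_location is a hypothetical helper and "demo" is an example name, neither of which appears in media-server.py.

```python
def recording_location(rec_name, pipeline_name, extension=".mkv"):
    """Build the file location the same way option 1 does."""
    return rec_name + "_" + pipeline_name + extension


for pipeline_name in ["record_h264", "record_h265"]:
    print(recording_location("demo", pipeline_name))
# prints:
# demo_record_h264.mkv
# demo_record_h265.mkv
```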

If option 2 is selected, we request a name for the snapshot and set its file location. Then we play the pipelines in the pipelines_snap array (the JPEG encoding pipeline and the snapshot pipeline that saves the snapshot to file).

        elif option == 2:
            snap_name = str(input("Enter the name of the snapshot (without extension):\n"))

            # Set location for snapshot
            snapshot.set_file_location(snap_name + '_' + snapshot._name + '.jpeg')

            # Play snapshot pipelines
            for pipeline in pipelines_snap:
                pipeline.play()

            time.sleep(5)

If option 3 is selected, the recording is stopped. An EOS is sent to the encoding pipelines so that the files are closed properly, and then both the recording and the encoding pipelines are stopped. This leaves the pipelines available for recording another video.

        elif option == 3:
            # Send EOS event to encode pipelines for proper closing
            # EOS to recording pipelines
            for pipeline in pipelines_video_enc:
                pipeline.eos()

            # Stop recordings
            for pipeline in pipelines_video_rec:
                pipeline.stop()
            for pipeline in pipelines_video_enc:
                pipeline.stop()

If option 4 is selected, all the pipelines are stopped and deleted and the menu exits. The user can check the recorded videos after closing GstD.

        elif option == 4:
            # Send EOS event to encode pipelines for proper closing
            # EOS to recording pipelines
            for pipeline in pipelines_video_enc:
                pipeline.eos()

            # Stop pipelines
            for pipeline in pipelines_snap:
                pipeline.stop()
            for pipeline in pipelines_video_rec:
                pipeline.stop()
            for pipeline in pipelines_video_enc:
                pipeline.stop()
            for pipeline in pipelines_base:
                pipeline.stop()

            # Delete pipelines
            for pipeline in pipelines_snap:
                pipeline.delete()
            for pipeline in pipelines_video_rec:
                pipeline.delete()
            for pipeline in pipelines_video_enc:
                pipeline.delete()
            for pipeline in pipelines_base:
                pipeline.delete()
            break
        else:
            print('Invalid option')
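
The exit sequence follows a fixed order: EOS to the encoding pipelines, then stop, then delete, with the groups visited as snapshot, recording, encoding, and base. The sketch below expresses that order as a single helper so it can be checked without a running GstD. StubPipeline and shutdown are hypothetical names, not part of media-server.py, and StubPipeline only logs the calls that PipelineEntity would forward to the client.

```python
class StubPipeline:
    """Hypothetical stand-in for PipelineEntity: logs calls instead of contacting GstD."""

    def __init__(self, name, log):
        self._name = name
        self._log = log

    def eos(self):
        self._log.append(("eos", self._name))

    def stop(self):
        self._log.append(("stop", self._name))

    def delete(self):
        self._log.append(("delete", self._name))


def shutdown(eos_group, ordered_groups):
    """Send EOS to eos_group, then stop and delete every group in the given order."""
    for pipeline in eos_group:
        pipeline.eos()
    for group in ordered_groups:
        for pipeline in group:
            pipeline.stop()
    for group in ordered_groups:
        for pipeline in group:
            pipeline.delete()


log = []
pipelines_base = [StubPipeline("camera0", log)]
pipelines_video_enc = [StubPipeline("h264", log)]
pipelines_video_rec = [StubPipeline("record_h264", log)]
pipelines_snap = [StubPipeline("snapshot", log)]

shutdown(pipelines_video_enc,
         [pipelines_snap, pipelines_video_rec, pipelines_video_enc, pipelines_base])

for entry in log:
    print(entry)
```

Passing the groups as one ordered list keeps the stop and delete passes in the same order, which the original code maintains by hand in two sets of loops.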

The main function is called at the end of the file so that the menu runs after the base pipelines have been set to the playing state.

main()