CUDA Memory Management Benchmark multi-threaded

| RidgeRun CUDA Optimisation Guide | |||||

|---|---|---|---|---|---|

| |||||

| GPU Architecture | |||||

|

|

|||||

| Optimisation Workflow | |||||

|

|||||

| Optimisation Recipes | |||||

|

|||||

| Common pitfalls when optimising | |||||

|

|

|||||

| Examples | |||||

|

|

|||||

| Empirical Experiments | |||||

|

|

|||||

| Contact Us |

Introduction

This page is the follow-up of Cuda Memory Benchmark, it adds multithreading to the testing of the different memory management modes. It's reduced to test traditional, managed, page-locked memory with and without copy calls and CUDA mapped. The results show that there is a difference on most cases compared to non-threaded benchmark, and also since the memory footprint was greatly increased, it can be seen that on Jetson targets there is a memory usage reduction when using a memory mode that avoids having two copies. At the end on discrete GPU, on a IO-bonud scenario the pinned memory without the copy performs best and on procesing-boud scenario managed performs best. On the Jetson Nano, managed memory performs best on both scenarios and lastly on Orin AGX the pinned memory without the copy performs best on both as well.

Testing Setup

Memory Management Methods

The program tested had the option to use each of the following memory management configurations:

- Traditional mode, using malloc to reserve the memory on the host, then cudaMalloc to reserve it on the device, and then having to move the data between them with cudaMemcpy. Internally, the driver will allocate a non-pageable memory chunk, to copy the data there and after the copy, finally use the data on the device.

- Managed, using cudaMallocManaged and not having to manually copy the data and handle two different pointers.

- Non paging memory, using cudaMallocHost a chunk of page-locked memory can be reserved that can be used directly by the device since its non-pageable

- Non paging memory with discrete copy, using cudaMallocHost and a discrete call to cudaMemcpy, so it's similar to the traditional model with different pointers one for host and another for the device, but according to the NVIDIA docs on the mallocHost, the calls to cudaMemcpy are accelerated when using this type of memory.

- CUDA mapped, using cudaHostAlloc to reserve memory that is page-locked and directly accessible to the device. There are different flags that can change the properties of the memory, in this case, the flags used were cudaHostAllocMapped and cudaHostAllocWriteCombined.

Platforms

- Discrete GPU: desktop pc with RTX 2070s, using CUDA 12 and Ubuntu 20.04.

- Jetson AGX Orin: using CUDA 11.4 and JP 5.0.2.

- Jetson Nano 4GB devkit: using CUDA 10.2 and JP 4.6.3

Program Structure

The program is divided into three main sections, one where the input memory is filled with data, the kernel worker threads, and the verify. The verify reads all the results and uses assert to verify them. Before every test, 10 iterations of the full process were done to warm up and avoid any initialization time penalty. After that, the average of 100 runs was obtained. Each of the sections can be seen in Figure 1.

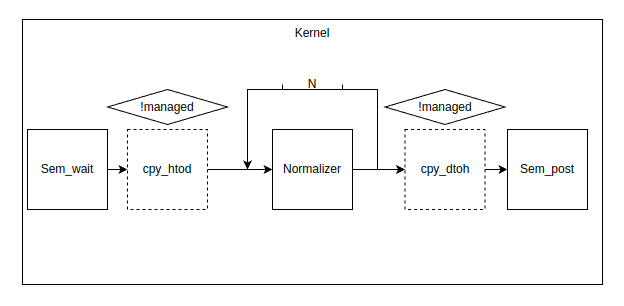

Each kernel block can be seen in Figure 2. Each block has a semaphore to synchronize when there is data available on the input and has another that is raised at the end when the data has been processed. The semaphores are shared between each block, in a chained manner, where the output semaphore is the input of the next block and so on. Also, the kernel block has a condition that checks if the memory mode being used is "managed like" meaning that uses a single pointer to the device and host memory and the driver handles the transfers on the back. If not it calls cudaMemcpy accordingly. And lastly, the kernel itself is called once for the IO bound case and 50 times for the processing bound case.

Used Data

The aim of the tests is to emulate a 4k RGBA frame so the results can be representative of the results on a real world media-handling software. To represent this data the following structure was used:

struct rgba_frame{

float r[SIZE_W*SIZE_H];

float g[SIZE_W*SIZE_H];

float b[SIZE_W*SIZE_H];

float a[SIZE_W*SIZE_H];

};

The macros are SIZE_W=3840 and SIZE_H=2160, the image size of a 4k frame.

Code

The kernel that was tested is:

Normalizer

int blockSize = 256;

int numBlocks = ((SIZE_W*SIZE_H) + blockSize - 1) / blockSize;

__global__

void normalize(int n, float *x, float *y)

{

int index = blockIdx.x * blockDim.x + threadIdx.x;

int stride = blockDim.x * gridDim.x;

for (int i = index; i < n; i += stride)

y[i] = (x[i]*((MAX-MIN)/ABS_MAX))+MIN;

}

void *exec_kernel_cpy(void * arg){

struct sync_struct *args_k = (struct sync_struct *)arg;

rgba_frame *d_frame_in = args_k->d_frame_in;

rgba_frame *d_frame_out = args_k->d_frame_out;

rgba_frame *frame_in = args_k->frame_in;

rgba_frame *frame_out = args_k->frame_out;

for (int i = 0; i < LOOP_CYCLES; i ++){

sem_wait(args_k->lock_in);

if (i > WARM_UP_RUNS){

start_time(args_k);

}

if (d_frame_in != frame_in && mode != 4){

cudaMemcpy(d_frame_in, frame_in, sizeof(struct rgba_frame), cudaMemcpyHostToDevice);

}

for (int j = 0; j < KERNEL_CYCLES; j ++){

normalize<<<numBlocks, blockSize>>>(SIZE_W*SIZE_H, d_frame_in->r, d_frame_out->r);

normalize<<<numBlocks, blockSize>>>(SIZE_W*SIZE_H, d_frame_in->g, d_frame_out->g);

normalize<<<numBlocks, blockSize>>>(SIZE_W*SIZE_H, d_frame_in->b, d_frame_out->b);

normalize<<<numBlocks, blockSize>>>(SIZE_W*SIZE_H, d_frame_in->a, d_frame_out->a);

cudaDeviceSynchronize();

}

if (frame_out != d_frame_out && mode != 4){

cudaMemcpy(frame_out, d_frame_out, sizeof(struct rgba_frame), cudaMemcpyDeviceToHost);

}

if (i > WARM_UP_RUNS){

stop_time(args_k);

}

sem_post(args_k->lock_out);

}

return NULL;

}

This is the kernel that is bound to the worker threads and contains two main cycles, one that is responsible to execute the number of loops to get the average and the inner one that uses the macro KERNEL_CYCLES. The value was changed between 1 and 50 to have an IO-bound case and a processing-bound case, respectively. This can be seen on the figures with the label normalizer 1x and normalizer 50x, respectively.

Apart from this, the code has two sections an initial section and an end section. The initial section takes the array and fills it with 1s. It also contains the cycle responsible for the average.

void *fill_array(void * arg){

struct sync_struct *args_fill = (struct sync_struct *)arg;

for (int i = 0; i < LOOP_CYCLES; i ++){

sem_wait(args_fill->lock_in);

if (i > WARM_UP_RUNS){

start_time(args_fill);

}

for (int j = 0; j < SIZE_W*SIZE_H; j++) {

args_fill->frame_out->r[j] = 1.0f;

}

for (int j = 0; j < SIZE_W*SIZE_H; j++) {

args_fill->frame_out->g[j] = 1.0f;

}

for (int j = 0; j < SIZE_W*SIZE_H; j++) {

args_fill->frame_out->b[j] = 1.0f;

}

for (int j = 0; j < SIZE_W*SIZE_H; j++) {

args_fill->frame_out->a[j] = 1.0f;

}

if (i > WARM_UP_RUNS){

stop_time(args_fill);

}

sem_post(args_fill->lock_out);

}

return NULL;

}

The end section is where the output is read, to force the data to load into host memory, and at the same time, the results are checked to verify that the process is behaving as expected.

void *verify_results(void * arg){

struct sync_struct *args_verf = (struct sync_struct *)arg;

float ref = 1.0f;

for (int i = 0; i < STAGES-1; i++){

ref = (ref*((MAX-MIN)/ABS_MAX))+MIN;

}

for (int i = 0; i < LOOP_CYCLES; i ++){

sem_wait(args_verf->lock_in);

if (i > WARM_UP_RUNS){

start_time(args_verf);

}

for (int j = 0; j < SIZE_W*SIZE_H; j++) {

assert(args_verf->frame_in->r[j] == ref);

}

for (int j = 0; j < SIZE_W*SIZE_H; j++) {

assert(args_verf->frame_in->g[j] == ref);

}

for (int j = 0; j < SIZE_W*SIZE_H; j++) {

assert(args_verf->frame_in->b[j] == ref);

}

for (int j = 0; j < SIZE_W*SIZE_H; j++) {

assert(args_verf->frame_in->a[j] == ref);

}

if (i > WARM_UP_RUNS){

stop_time(args_verf);

}

sem_post(args_verf->lock_out);

}

return NULL;

}

Each section was measured using different timers, and the results of each stage were added to get the total time. As can be seen from the code pieces each worker has a sync_struct associated, this piece most notably, holds the semaphores and the times for each, among other necessary values.

Results

Discrete GPU

Kernel Execution Time

In Figure 3 the fastest mode is CUDA mapped, at 11.85ms on average, followed by pinned memory without copy and managed on the IO-bound case, but on the processing bound case, CUDA mapped and pinned memory without a copy, suffer an increase of around 48 times for both. And in this scenario, the fast mode is managed, and Table 1 sheds some light on the reason.

| Case | Worker | Time avg(ms) |

|---|---|---|

| IO-Bound | W1 | 30.466015 |

| W2 | 0.761422 | |

| W3 | 0.757867 | |

| W4 | 0.757999 | |

| W5 | 29.853945 | |

| Processing-Bound | W1 | 64.937218 |

| W2 | 36.428116 | |

| W3 | 36.336861 | |

| W4 | 29.853945 | |

| W5 | 64.063713 |

It can be seen that in both scenarios the CUDA runtime identifies the chain of buffers and speeds up the data transfers which results in a considerable 40 times, time reduction on the IO-bound case and 2 times, on the Processing-Bound on the inner worker threads. However, it can also be seen that there is a time penalty on the end thread and initial thread as it has to fetch the data from the host memory. No other memory mode showed this behavior.

Full Execution Times

On table 2, it can be seen that the best overall is hostMalloc, however, it's between the variance of the traditional mode. Also the worst overall with a verify time of 58 times more that the best is CUDA mapped.

| Memory mode | Fill time avg(ms) | Verify time avg(ms) |

|---|---|---|

| Traditional | 63.391980 | 51.470143 |

| Managed | 62.733280 | 71.642704 |

| HostMalloc | 61.780409 | 51.822929 |

| HostMalloc W Cpy | 64.2764265 | 51.49177 |

| Pinned | 72.431336 | 3,001.322266 |

When we take the three times and combine them, to get the total execution time, as shown in Figure 4. We see that in the case of the discrete GPU, the best performing for the IO-Bound case is HostMalloc without a discrete copy, and for the Processing-Bound case, the best is Managed memory, since it has that edge on the worker-to-worker transfers.

In general, it seems that in IO-bound cases, it can yield benefits using memory reserved with hostMalloc and not doing the manual copy, but on a processing-bound scenario, the dicrete call to copy is needed. Overall we have slower performance with managed memory and the slowest is with pinned or zero-copy memory.

Jetson Nano

Kernel Execution Time

In Figure 5, we can see that the mode that performs better is CUDA mapped, and the next is pinned memory without a copy, followed by managed, which is the same trend that the dGPU results had on the IO-bound case. But in the Processing-Bound case, the behavior is different, where pinned memory with copy performs the best and it's followed by the same without the copy. Also worth pointing out is that the time difference between kernel workers, is not present on the results. On the Jetson Nano, the results for each kernel are close to each other.

Full Execution Times

In Table 3 it can be seen the increase of almost 6 times, more execution time when using pinned memory and CUDA mapped when reading the results, compared to managed or traditional. And the best performing one being overall the traditional memory model.

| Memory mode | Fill time avg(ms) | Verify time avg(ms) |

|---|---|---|

| Traditional | 352.960114 | 190.085793 |

| Managed | 398.220825 | 241.442940 |

| HostMalloc | 351.453018 | 1,243.674988 |

| HostMalloc W Cpy | 351.932023 | 1,244.144898 |

| Pinned | 351.853775 | 1,243.925659 |

Figure 6, shows that on the Jetson Nano the best on both scenarios is the Managed memory by a margin of almost 150ms on the Procesing-Bound case, and more than 300ms on the IO-Bound case compared to the next in line, the traditional model.

Jetson AGX Orin

Kernel execution time

In kernel execution times, Figure 7, the best overall is the CudaMapped, followed by HostMalloc. Something to point here, there is no difference on the inner threads, same as the Jetson Nano, all of them have very similar times. Those results show the main advantage of using CudaMapped memory, as long as the data is kept on the GPU and doesn't has to come back to the CPU.

Full Execution Times

As for the Jetson AGX Orin, Table 4, shows the overhead that adds the managed memory, and the disadvantage of using CudaMapped when the data has to come back to CPU.

| Memory mode | Fill time avg(ms) | Verify time avg(ms) |

|---|---|---|

| Traditional | 92.576847 | 89.578194 |

| Managed | 132.597275 | 90.108647 |

| HostMalloc | 92.963348 | 89.868675 |

| HostMalloc W Cpy | 92.694069 | 89.474323 |

| Pinned | 92.317101 | 735.748902 |

When looking at the full execution times, Figure 7, the same trend as the non threaded benchmark shows up, where the hostMalloc memory performs the best followed by the HostMalloc with discrete copy.

Resource Usage Jetson

In both Jetson targets, tegrastats was used to monitor the resource utilization, mainly the CPU, GPU usage and the used memory.

- Jetson Nano: Upon inspection, there is virtually no difference from run to run on GPU and CPU usage, however on memory usage, there seems to be a difference when using managed memory, pinned without copy and CUDA mapped compared to traditional and pinned with a discrete copy. With around 600MB more memory in use when using the double-pointer approach. And a difference of around 280MB more memory when using pinned with discrete copy than traditional memory.

- Jetson Orin AGX: In this device the same behavior and memory differences could be observed.

Conclusions

We don't have a definitive management mode that performs best in all cases and all devices, but we can see that in different use cases and devices, one can perform better than the other. However, if we saw different trends compared to the non-threaded benchmark, which shows that there's a difference in memory behavior and management when using a multi-threaded application.

- On a discrete GPU, on a IO-bonud scenario, the pinned memory without the copy performs best and on a procesing-boud scenario, the managed performs best. That later result is different than the result on a non-threaded application, where the pinned with discrete copy performed best.

- On Jetson Nano, on both scenarios use the managed model. This result is different from the non-threaded benchmark since it had the traditional model on the Processing-Bound scenario. In this case, managed is the best for both by a considerable margin.

- On Jetson AGX Orin, on both scenarios use pinned memory without the copy, same trend as the non-threaded benchmark.

For direct inquiries, please refer to the contact information available on our Contact page. Alternatively, you may complete and submit the form provided at the same link. We will respond to your request at our earliest opportunity.

Links to RidgeRun Resources and RidgeRun Artificial Intelligence Solutions can be found in the footer below.