CUDA Memory Management Benchmark

| RidgeRun CUDA Optimisation Guide | |||||

|---|---|---|---|---|---|

| |||||

| GPU Architecture | |||||

|

|

|||||

| Optimisation Workflow | |||||

|

|||||

| Optimisation Recipes | |||||

|

|||||

| Common pitfalls when optimising | |||||

|

|

|||||

| Examples | |||||

|

|

|||||

| Empirical Experiments | |||||

|

|

|||||

| Contact Us |

Introduction

This page presents the execution time results of different CUDA memory management methods on three different CUDA-capable platforms. The objective is to determine if there are performance differences between the methods that a developer can use to control memory transactions using CUDA. For a bit more explanation on the memory management options, check NVIDIA CUDA Memory Management.

The tests presented on this page were executed on a Jetson Nano devkit, a Jetson AGX Orin devkit, and a desktop pc with a discrete GPU, Here the compute capabilities chart. We emulate two scenarios: an IO-bound algorithm and a processing-bound algorithm, using a small program with a simple kernel executed once for the IO-bound scenario and 50 times for the processing-bound scenario. The results show that there are significant performance differences, in some cases, using a memory management method can result in a program execution almost 55 times faster than using another method. We also found differences in results between platforms.

The results show similar outcomes for Jetson AGX Orin and discrete GPU. With cudaMallocHost the best performing on the IO-bound for both targets. But with a processing-bound test, the results for both target change, where the cudaMallocHost management mode performs better on a discrete GPU, as long as there's is a discrete copy but on Orin still the best is the cudaMallocHost. It also shows cudaMallocManaged as the slowest overall for both targets. As for the Jetson Nano the best overall is the cudaMallocManaged in the IO-bound case, otherwise, the best is the traditional memory management.

Testing Setup

Memory Management Methods

The program tested had the option to use each of the following memory management configurations:

- Traditional mode, using malloc to reserve the memory on host, then cudaMalloc to reserve it on the device, and then having to move the data between them with cudaMemcpy. Internally, the driver will allocate a non-pageable memory chunk, to copy the data there and after the copy, finally use the data on the device.

- Managed, using cudaMallocManaged and not having to manually copy the data and handle two different pointers.

- Managed & prefetch, this is one operation that can be done when using managed memory with cudaMemPrefetchAsync, which helps the device to get the data before it's needed.

- Managed & advice, another operation that can be done when using managed memory, using cudaMemAdvise, this hints the driver who is more likely to operate the data. This was divided into two tests, one with the advice target being the CPU and the other the GPU.

- Managed & prefetch & advice, same as the previous two but combined.

- Non paging memory, using cudaMallocHost a chunk of page-locked memory can be reserved that can be used directly by the device since its non-pageable

- Non paging memory with discrete copy, using cudaMallocHost and a discrete call to cudaMemcpy, so its similar to the traditional model with different pointers one for host and another for device, but according to the NVIDIA docs on the mallocHost, the calls to cudaMemcpy are accelerated when using thid type of memory.

- Zero-Copy Memory, using cudaHostAlloc to reserve memory that is page-locked and directly accessible to the device. There are different flags that can change the properties of the memory, in this case, the flags used were cudaHostAllocMapped and cudaHostAllocWriteCombined.

Platforms

- Discrete GPU: desktop pc with RTX 2070s, using CUDA 12 and Ubuntu 20.04.

- Jetson AGX Orin: using CUDA 11.4 and JP 5.0.2.

- Jetson Nano 4GB devkit: using CUDA 10.2 and JP 4.6.3

Program Structure

The program is divided into three sections, one where the input memory is filled with data, the kernel execution, and the verify. The verify reads all the results and uses assert to verify them. Before every test, 10 iterations of the full 3 step process were done to warm up and avoid any initialization time penalty. After that, the average of 100 runs was obtained. Each of the sections can be seen in Figure 1.

Used Data

The aim of the tests is to emulate a 4k RGBA frame so the results can be representative of the results on a real world media-handling software. To represent this data the following structure was used:

struct rgba_frame{

float r[SIZE_W*SIZE_H];

float g[SIZE_W*SIZE_H];

float b[SIZE_W*SIZE_H];

float a[SIZE_W*SIZE_H];

};

The macros are SIZE_W=3840 and SIZE_H=2160, the image size of a 4k frame.

Code

The kernel that was tested is:

Normalizer

int blockSize = 256;

int numBlocks = ((SIZE_W*SIZE_H) + blockSize - 1) / blockSize;

__global__

void normalize(int n, float *x, float *y)

{

int index = blockIdx.x * blockDim.x + threadIdx.x;

int stride = blockDim.x * gridDim.x;

for (int i = index; i < n; i += stride)

y[i] = (x[i]*((MAX-MIN)/ABS_MAX))+MIN;

}

void exec_kernel(rgba_frame *d_frame, rgba_frame *d_out_frame){

for (int i = 0; i < ITERATIONS; i ++){

normalize<<<numBlocks, blockSize>>>(SIZE_W*SIZE_H, d_frame->r, d_out_frame->r);

normalize<<<numBlocks, blockSize>>>(SIZE_W*SIZE_H, d_frame->g, d_out_frame->g);

normalize<<<numBlocks, blockSize>>>(SIZE_W*SIZE_H, d_frame->b, d_out_frame->b);

normalize<<<numBlocks, blockSize>>>(SIZE_W*SIZE_H, d_frame->a, d_out_frame->a);

cudaDeviceSynchronize();

}

}

void start_kernel(){

cudaEventCreate(&estart_kernel);

cudaEventCreate(&estop_kernel);

cudaEventSynchronize(estart_kernel);

cudaEventRecord(estart_kernel);

}

float stop_kernel(){

float time;

cudaEventRecord(estop_kernel);

cudaEventSynchronize(estop_kernel);

cudaEventElapsedTime(&time, estart_kernel, estop_kernel);

cudaEventDestroy(estart_kernel);

cudaEventDestroy(estop_kernel);

return time;

}

void test(){

start_kernel();

exec_kernel(in, out)

stop_kernel();

}

This is a simple kernel that's executed 4 times to process each array that represents a color on the frame struct. A macro was added, ITERATIONS to change the numbers of times the 4 kernels are executed. This is done to increase the compute times, and emulate a processing-bound workload. The value was changed between two values, 1 and 50 to have a IO-bound case and processing-bound case, respectively. This can be seen on the figures with the label normalizer 1x and normalizer 50x, respectively.

Apart from this, the code has two sections an initial section and a end section. The initial section takes the array and fills it with 1s. This is called once after the memory is reserved, right before the exec_kernel method is called.

float fill_array(rgba_frame * in){

float time;

cudaEvent_t start, stop;

cudaEventCreate(&start);

cudaEventCreate(&stop);

cudaEventRecord(start);

for (int i = 0; i < SIZE_W*SIZE_H; i++) {

in->r[i] = 1.0f;

}

for (int i = 0; i < SIZE_W*SIZE_H; i++) {

in->g[i] = 1.0f;

}

for (int i = 0; i < SIZE_W*SIZE_H; i++) {

in->b[i] = 1.0f;

}

for (int i = 0; i < SIZE_W*SIZE_H; i++) {

in->a[i] = 1.0f;

}

cudaEventRecord(stop);

cudaEventSynchronize(stop);

cudaEventElapsedTime(&time, start, stop);

cudaEventDestroy(start);

cudaEventDestroy(stop);

return time;

}

The end section is where the output struct was read inside a cycle with asserts, for each value on the color arrays. This is called once, right after the kernel finishes. The cycle with the asserts also helped to verify the results, to make sure that the kernel was executing properly and processing the full arrays.

float verify_results(rgba_frame * in, float over_ref = 0.0f){

float time;

float ref = over_ref == 0.0f ? (1.0f*((MAX-MIN)/ABS_MAX))+MIN : over_ref;

cudaEvent_t start, stop;

cudaEventCreate(&start);

cudaEventCreate(&stop);

cudaEventRecord(start);

for (int i = 0; i < SIZE_W*SIZE_H; i++) {

assert(in->r[i] == ref);

}

for (int i = 0; i < SIZE_W*SIZE_H; i++) {

assert(in->g[i] == ref);

}

for (int i = 0; i < SIZE_W*SIZE_H; i++) {

assert(in->b[i] == ref);

}

for (int i = 0; i < SIZE_W*SIZE_H; i++) {

assert(in->a[i] == ref);

}

cudaEventRecord(stop);

cudaEventSynchronize(stop);

cudaEventElapsedTime(&time, start, stop);

cudaEventDestroy(start);

cudaEventDestroy(stop);

return time;

}

Each section was measured using different timers, and the results were added to get the total times. This was done to get a better understanding of where the bulk of the time was from, and also to identify if a memory management mode, has an advantage on certain operations.

Results

Discrete GPU

Kernel Execution Time

In Figure 2, we see that the managed has slower times overall, then there's the traditional model, and the fastest is the pinned or hostMalloc but with the copy. Since when using it without, and with a processing-bound case, the time difference is almost 11 times.

Full Execution Times

First, as seen on Table 1, we have the times for the fill and verify operations. In the case of the discrete GPU, we have a time increase of almost 140 times when looking at the pinned or zero-copy memory, compared to the traditional model. If we look at the rest, overall we can see is that the managed results are slower, around twice compared to the others and the one that performs best is the traditional model but the results when using the memory with hostMalloc are pretty close.

| Memory mode | Fill time avg(ms) | Verify time avg(ms) |

|---|---|---|

| Traditional | 61.914536 | 49.771563 |

| Managed | 83.754719 | 69.627804 |

| Managed & prefetch | 83.455475 | 69.727864 |

| Managed & advice as GPU | 83.610943 | 69.602825 |

| Managed & advice as CPU | 84.166066 | 69.229584 |

| Managed & prefetch & advice as GPU | 82.620560 | 69.680351 |

| Managed & prefetch & advice as CPU | 82.900353 | 69.775280 |

| HostMalloc | 61.780409 | 49.442122 |

| HostMalloc W Cpy | 62.273638 | 49.530716 |

| Pinned | 66.052262 | 3,969.123902 |

When we take the three times and combine them, to get the total execution time, as shown in Figure 3. We see that in the case of the discrete GPU, the lowest total time overall goes to malloc host with discrete copy. However, it seems that in an procesing-bound case, the usage of hostMalloc without it yields lower times. The worst performing one is the pinned or zero-copy memory.

In general, it seems that in IO-bound cases, it can yield benefits using memory reserved with hostMalloc and not doing the manual copy, but on a processing-bound scenario, the dicrete call to copy is needed. Overall we have slower performance with managed memory and the slowest is with pinned or zero-copy memory.

Jetson Nano

Kernel Execution Time

For the kernel times, on Figure 4, we have a difference on a performance bound vs IO-bound, were on the first the one that performs best is the memory reserved with hostMalloc with discrete copy, and on the IO-bound case, the managed memory performs notably better that the rest.

In the Jetson Nano, we have a different behavior than a discrete GPU, which is expected since the memory topology is different.

Full Execution Times

On the Jetson Nano we can see that the overall best, Table 2, is the traditional model. Also, it can be seen that there is a time increase from using both modes, mallocHost and pinned, this is different from the discrete GPU, where only the pinned performed poorly.

| Memory mode | Fill time avg(ms) | Verify time avg(ms) |

|---|---|---|

| Traditional | 355.4375 | 181.965027 |

| Managed | 399.8251645 | 231.341667 |

| Managed & prefetch | 400.890045 | 231.1730195 |

| Managed & advice as GPU | 400.677246 | 231.1446 |

| Managed & advice as CPU | 399.785202 | 230.9549945 |

| Managed & prefetch & advice as GPU | 399.7821045 | 230.913635 |

| Managed & prefetch & advice as CPU | 399.8194735 | 232.494896 |

| HostMalloc | 355.0729065 | 1326.459168 |

| HostMalloc W Cpy | 354.795273 | 1328.617737 |

| Pinned | 354.804642 | 1327.90094 |

Figure 5, shows that the Jetson Nano has a different trend, where the managed actually performs well. We have the same behavior as the discrete GPU for the pinned or zero copy. But the hostMalloc performs slowly, compared to the discrete GPU results.

Overall it seems that the managed performs better on Jetson Nano than on the discrete GPU. In this case, it does not make sense to use pinned or zero copy nor memory reserved with hostMalloc. In the IO-bound case, managed memory can perform better than traditional, but on a processing-bound program, the traditional performs best.

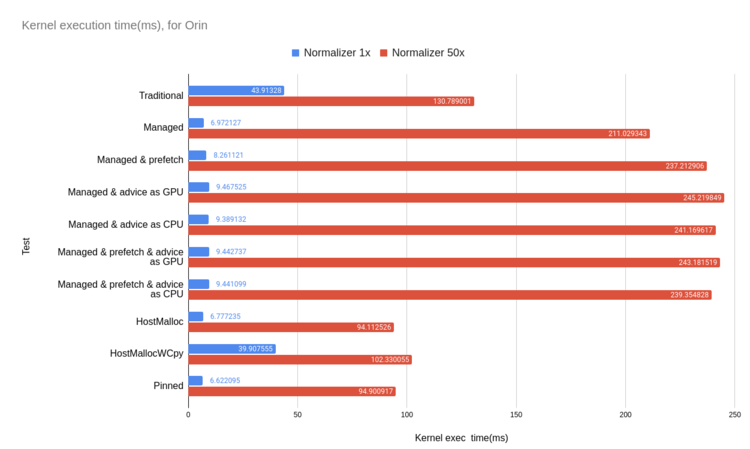

Jetson AGX Orin

Kernel execution time

In kernel execution times, Figure 6, there is a clear time reduction when using hostMalloc, where it performs better than traditional memory management. It can be seen that with managed memory there is a bit more gain to be had at around 2ms less than either of them.

For the Jetson AGX Orin, we have results that look more like the discrete GPU results, but the main difference is that using memory with hostMalloc does achieve better results always.

Full Execution Times

As for the Jetson AGX Orin, Table 3, the results from the fill and verify operations, show that there is a similar trend as the discrete GPU, where the managed performs slower compared to the rest, but as for the pinned, there is not as much time increase compared to the discrete GPU, since its around 3 times more, but still, it performs the worst.

| Memory mode | Fill time avg(ms) | Verify time avg(ms) |

|---|---|---|

| Traditional | 96.3693465 | 93.119007 |

| Managed | 141.320404 | 90.6683695 |

| Managed & prefetch | 140.552361 | 90.991768 |

| Managed & advice as GPU | 141.023903 | 91.376148 |

| Managed & advice as CPU | 141.235008 | 91.276241 |

| Managed & prefetch & advice as GPU | 141.092499 | 91.323822 |

| Managed & prefetch & advice as CPU | 140.9354705 | 91.141693 |

| HostMalloc | 96.887695 | 99.5928345 |

| HostMalloc W Cpy | 96.2297175 | 98.4038125 |

| Pinned | 96.558895 | 792.3588565 |

When looking at the full execution times, Figure 7, there is a different behavior than the Jetson Nano, but it's similar to the discrete GPU. Where the managed performs notably slower overall, and the hostMalloc performs better.

In the case of the Jetson Orin AGX, there is one memory management mode that performs better without regarding if its an IO o processing bound scenario, that being the memory reserved with hostMalloc and without the need of handling the discrete transfers, compared to the discrete GPU.

Resource Usage Jetson

In both Jetson targets, tegrastats was used to monitor the resource utilization, mainly the CPU and GPU usage and the used memory. Upon inspection, there is virtually no difference from run to run. Where the different memory management tests, used the same amount of memory. As for the general system usage, there is also nothing worthy of attention.

Conclusions

We don't have a definitive management mode that performs best in all cases and all devices, but we can see that in different use cases and devices, one can perform better than the other. However, if you are looking for consistency and control, the traditional memory model is the way to go. But if you need to have the best execution times, we have some points that might help:

- On a discrete GPU, use the hostMalloc memory model, but remember to use manual transfers when in a processing bound case.

- On Jetson Nano, on an IO-bound scenario, use managed memory, otherwise, use the traditional memory model.

- On Jetson AGX Orin we do have a one for all, in this case, use hostMalloc, this performs the best regardless, and with the bonus of not having to handle dual pointers for device and host memory.

For direct inquiries, please refer to the contact information available on our Contact page. Alternatively, you may complete and submit the form provided at the same link. We will respond to your request at our earliest opportunity.

Links to RidgeRun Resources and RidgeRun Artificial Intelligence Solutions can be found in the footer below.