Usage and examples

Starting the Demo Application

If this is the first time you run the demo, start at step 1; otherwise, skip steps 1, 2, and 3 and start at step 4.

1. Get the demo source code by cloning the repository:

git clone https://github.com/RidgeRun/smart-seek-360.git

The content of the repository should look as follows:

.
├── config
│   ├── agent
│   │   ├── api_mapping.json
│   │   └── prompt.txt
│   ├── analytics
│   │   └── configuration.json
│   ├── demo-nginx.conf
│   └── vst
│       ├── vst_config.json
│       └── vst_storage.json
├── docker-compose.yaml
└── README.md

2. Update Ingress Configuration

cd smart-seek-360
sudo cp config/demo-nginx.conf /opt/nvidia/jetson/services/ingress/config/

3. Update VST Configuration

cd smart-seek-360
sudo cp config/vst/* /opt/nvidia/jetson/services/vst/config/

4. Launch Platform Services

sudo systemctl start jetson-redis
sudo systemctl start jetson-ingress
sudo systemctl start jetson-vst

5. Add at least one stream to VST. See the VST documentation for instructions.

6. Launch Smart Seek 360

cd smart-seek-360
docker compose up -d
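If the demo does not come up, it may help to confirm that the platform services from step 4 are running. The following is a minimal sketch, not part of the demo repository: the function name inactive_services is hypothetical, and it relies only on the standard systemctl is-active command.

```shell
# List the platform services from step 4 that are not currently active.
# Prints one service name per line; prints nothing if all are running.
# (inactive_services is a hypothetical helper, not shipped with the demo.)
inactive_services() {
    for svc in jetson-redis jetson-ingress jetson-vst; do
        # "is-active --quiet" exits non-zero when the unit is not active
        if ! systemctl is-active --quiet "$svc"; then
            echo "$svc"
        fi
    done
}
```

Calling inactive_services after step 4 should print nothing when all three services are up. The state of the demo containers themselves can be inspected with docker compose ps from the smart-seek-360 directory.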

Demo Usage

If you followed the previous steps to start the application, you should have a stream available at rtsp://BOARD_IP:5021/ptz_out. You can open it from the host computer with the following command:

vlc rtsp://BOARD_IP:5021/ptz_out

Replace BOARD_IP with the actual IP address of the board running the demo.
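To avoid editing the URL by hand each time, the substitution can be wrapped in a small shell helper. This is only a sketch: ptz_stream_url is a hypothetical name, and 192.168.1.50 is a placeholder address, not a value from the demo.

```shell
# Build the RTSP URL of the demo output stream for a given board address.
# (ptz_stream_url is a hypothetical helper, not shipped with the demo.)
ptz_stream_url() {
    # $1: IP address or hostname of the board running the demo
    printf 'rtsp://%s:5021/ptz_out\n' "$1"
}

# Example with a placeholder address:
#   vlc "$(ptz_stream_url 192.168.1.50)"
```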

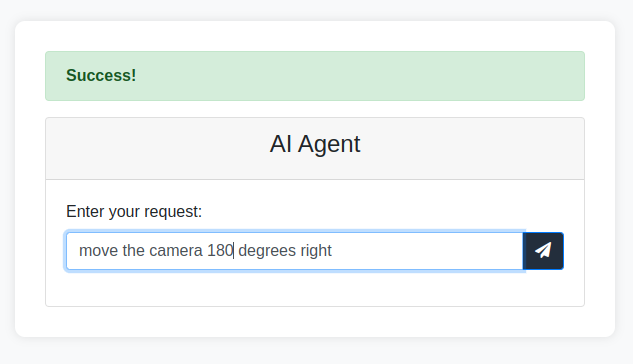

The demo is controlled through the AI-Agent, which comes with a web interface that can be accessed at BOARD_IP:30080/agent.

The page looks as follows:

Through that interface, the application can be controlled using natural language. The two available commands are move camera and find objects.
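Before sending commands, it can be useful to confirm from the host computer that the agent page responds. The sketch below assumes the interface is served over plain HTTP on port 30080, which the text above does not state explicitly; agent_reachable is a hypothetical helper name.

```shell
# Return success if the AI-Agent web interface answers on the given board.
# Assumption: the page is served over plain HTTP.
# (agent_reachable is a hypothetical helper, not shipped with the demo.)
agent_reachable() {
    # $1: IP address or hostname of the board running the demo
    # -f: fail on HTTP errors, -s: silent, -o /dev/null: discard the body
    curl -fs -o /dev/null --max-time 5 "http://$1:30080/agent"
}

# Example with a placeholder address:
#   agent_reachable 192.168.1.50 && echo "agent is up"
```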

Move Camera

The available options are:

- move the camera X degrees left: moves the camera X degrees to the left.

- move the camera X degrees right: moves the camera X degrees to the right.

Find Objects

With this feature, you can instruct the application to look for any object in the input stream. Two actions are then performed:

1. The camera will point to that object once it is found.

2. A clip of the event will be recorded (disabled by default).

Demo in action

The following video shows how to start and run the demo.