Performance of the Holoscan Sensor Bridge


Introduction

To characterize the performance of the Holoscan Sensor Bridge, we quantify the computational resources consumed by the Holoscan software while measuring glass-to-glass latency.

Currently, the Holoscan Sensor Bridge is compatible with the NVIDIA Jetson AGX Orin and the NVIDIA IGX Orin. This section covers the Jetson AGX Orin.

Jetson AGX Orin Performance

Setup

The Holoscan Sensor Bridge is connected as described in Holoscan Sensor Bridge/Hardware Connection, using a 10 Gbit/s Ethernet link.

Initial Clarifications

We run the application as described in Holoscan Sensor Bridge/Running the Demo. We use the unaccelerated version of the IMX274 example[1], because the Jetson AGX Orin does not support DPDK. This version uses UDP over Ethernet for communication between the Holoscan Sensor Bridge and the Jetson. Results may differ substantially on NVIDIA IGX Orin platforms, since they support NVIDIA ConnectX expansion cards for network communication.

The camera is configured as in the example, delivering 60 fps. The display is a Samsung TV with a 60 Hz refresh rate.
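To put the 10 Gbit/s link in perspective, the following Python sketch estimates the raw pixel payload of the camera stream. The 3840×2160 resolution and 10-bit RAW readout are assumptions about the IMX274 example mode, not values stated in this section, and packet overhead is ignored.

```python
# Back-of-the-envelope link budget for the camera stream.
# ASSUMPTIONS (not stated in the text): 3840x2160 sensor mode,
# 10 bits per pixel RAW data, no protocol/packet overhead counted.

WIDTH = 3840          # assumed horizontal resolution (pixels)
HEIGHT = 2160         # assumed vertical resolution (pixels)
BITS_PER_PIXEL = 10   # assumed RAW10 readout
FPS = 60              # frame rate from the example configuration
LINK_GBPS = 10.0      # 10 Gbit/s Ethernet link used in the setup


def payload_gbps(width: int, height: int, bpp: int, fps: int) -> float:
    """Raw pixel payload rate in Gbit/s (headers and padding ignored)."""
    return width * height * bpp * fps / 1e9


def frame_period_ms(fps: int) -> float:
    """Time between consecutive frames in milliseconds."""
    return 1000.0 / fps


if __name__ == "__main__":
    rate = payload_gbps(WIDTH, HEIGHT, BITS_PER_PIXEL, FPS)
    print(f"payload: {rate:.2f} Gbit/s "
          f"({100 * rate / LINK_GBPS:.1f}% of the link)")
    print(f"frame period: {frame_period_ms(FPS):.2f} ms")
```

Under these assumptions the payload is about 4.98 Gbit/s, roughly half of the link capacity, and a new frame arrives every 16.67 ms, matching the 60 Hz display refresh.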

Results

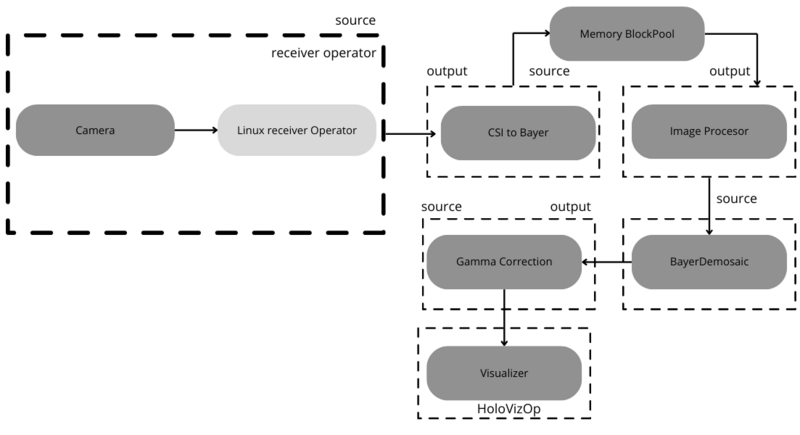

The following results correspond to the baseline pipeline provided by the Holoscan Sensor Bridge framework as an example. This pipeline is illustrated by:

For experimentation, we tested several setups and configurations, abbreviated as follows:

| Abbreviation | Configuration |
|---|---|
| DP | DisplayPort output |
| HDMI | HDMI output |
| JC | Jetson Clocks enabled |
| ED | Exclusive display |
| NED | Non-exclusive display |
| MAXN | MAXN (maximum power) mode |
| CISP-GW | CUDA ISP, GrayWorld algorithm |
| CISP-HS | CUDA ISP, HistogramStretch algorithm |
| DBOnly | Debayer only, without ISP |

Three of these configurations produced the following resource usage:

| Metric | MAXN + JC + ED + DP | MAXN + ED + DP | 15W + ED + DP |
|---|---|---|---|
| GPU usage | 12% | 50% | 73.6% |
| GPU frequency | 1.3 GHz | 306 MHz | 400 MHz |
| GPU memory | 595M | 595M | 565M |
| CPU memory | 99.1M | 96.3M | 101M |
| CPU usage | 3.8% | 5% | 23.5% |
| CPU frequency | 2.2 GHz | 729 MHz | 900 MHz |
| Power | 17 W | 12.9 W | 12.1 W |
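One way to read the resource table is to multiply utilization by clock frequency, which gives a rough estimate of busy cycles per second. The Python sketch below does this with the table values; treating utilization as inversely proportional to clock frequency is an assumption that holds only approximately (memory-bound work, for example, does not scale this way).

```python
# Rough "busy frequency" estimate: utilization x clock frequency.
# ASSUMPTION: for a fixed workload, utilization scales inversely with clock.
# This is only an approximation.

RESULTS = {
    # config: (gpu_util, gpu_mhz, cpu_util, cpu_mhz), copied from the table
    "MAXN + JC + ED + DP": (0.12, 1300, 0.038, 2200),
    "MAXN + ED + DP": (0.50, 306, 0.05, 729),
    "15W + ED + DP": (0.736, 400, 0.235, 900),
}


def busy_mhz(util: float, mhz: float) -> float:
    """Clock cycles per second actually spent busy, in MHz."""
    return util * mhz


if __name__ == "__main__":
    for name, (gu, gf, cu, cf) in RESULTS.items():
        print(f"{name:20s} GPU busy ~{busy_mhz(gu, gf):6.1f} MHz, "
              f"CPU busy ~{busy_mhz(cu, cf):6.1f} MHz")
```

Under this approximation, both MAXN columns come out at roughly the same busy GPU frequency (156 MHz versus 153 MHz), which suggests that the rise from 12% to 50% GPU usage without Jetson Clocks mostly reflects the lower GPU clock rather than additional work.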

A second camera recorded the glass-to-glass latency using video mirroring: the sensor captures a screen showing a running timer, and the second camera records both that timer and its reproduction on the output display. The CPU usage in the table is a percentage of the entire CPU (all cores combined), not of a single core.
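The video-mirroring measurement reduces to simple arithmetic: each photo from the second camera yields a pair of timer readings, and their difference is one latency sample. The Python sketch below uses hypothetical readings and reports the sample standard deviation as a spread measure; the uncertainty values reported in this document may have been derived differently.

```python
import statistics


def glass_to_glass_ms(reference_ms, displayed_ms):
    """Latency per photo: the timer on the reference screen minus the
    (older) timer value visible in the displayed camera feed."""
    if len(reference_ms) != len(displayed_ms):
        raise ValueError("need one displayed reading per reference reading")
    return [r - d for r, d in zip(reference_ms, displayed_ms)]


def summarize(latencies):
    """Mean latency and sample standard deviation, both in ms."""
    return statistics.mean(latencies), statistics.stdev(latencies)


# Hypothetical timer readings (ms) read off three photos of the setup.
reference = [1000.0, 2000.0, 3000.0]
displayed = [958.0, 1960.0, 2957.0]
lat = glass_to_glass_ms(reference, displayed)
mean, spread = summarize(lat)
print(f"latencies={lat} mean={mean:.2f} ms stdev={spread:.2f} ms")
```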

Typical Jetson Camera Subsystem Results

NVIDIA Jetson modules typically include a MIPI capture subsystem equipped with an ISP. To control the ISP and access the camera driver, NVIDIA provides the Argus library, which includes:

- Control for the ISP: auto-exposure, auto-white-balancing, and color correction.

- An interface for obtaining camera buffers in device memory, suited to CUDA-based applications.

- Camera control.

In some applications, the MIPI capture can be extended to support SerDes-based capture, e.g., GMSL3.

MIPI-based Capture

For the MIPI-based capture, the following conditions are considered:

- Maximum Performance Profile: MAXN and Jetson Clocks

- Display Exclusive

- Auto Exposure and Auto White Balancing Enabled

- GStreamer capture

- Using a Jetson AGX Orin (the same platform used in the Holoscan Sensor Bridge tests)

- JetPack 6.2

It uses the following GStreamer pipeline:

```bash
gst-launch-1.0 nvarguscamerasrc ! queue ! nvvidconv ! queue ! nvdrmvideosink
```

This leads to the following results:

| Camera | Mean Latency (ms) | Uncertainty (ms) |
|---|---|---|
| IMX274 | 134.984 | 8.35 |

SerDes-based Capture

For the SerDes capture, the following conditions are considered:

- Maximum Performance Profile: MAXN and Jetson Clocks

- Display Exclusive

- Auto Exposure and Auto White Balancing Enabled

- GStreamer capture

- Using a Jetson AGX Orin

- JetPack 5.x

In GStreamer, the capture pipeline is similar to the following:

```bash
gst-launch-1.0 nvarguscamerasrc ! queue ! nvvidconv ! queue ! nvdrmvideosink
```

It leads to the following results:

| Camera - SerDes | Mean Latency (ms) | Uncertainty (ms) |
|---|---|---|
| IMX542 - MAX96793/MAX96792A (GMSL3) | 185 | 8.35 |

CUDA ISP Results

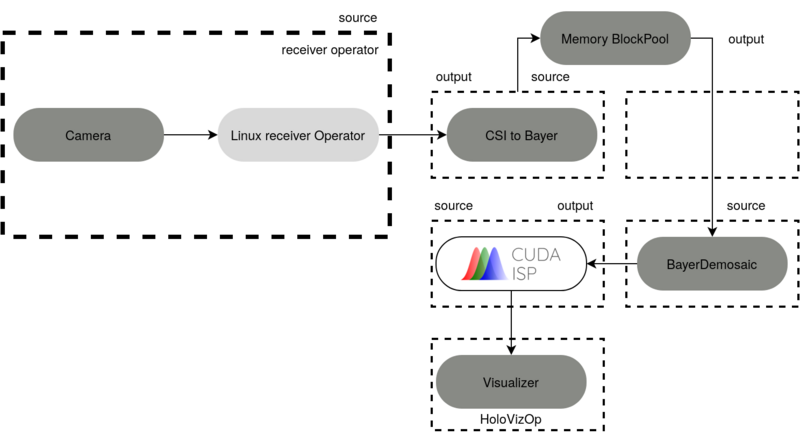

We have optimized our application by integrating CUDA ISP into the Holoscan Sensor Bridge. CUDA ISP integrates an outstanding algorithm for colour correction and auto-white balancing with the RGB space. It adjusts the histograms of each color channel within a confidence interval, leading to a more complete color balancing. Recalling the baseline pipeline, our optimization implies dropping the ISP Processor block and replacing the Gamma Correction block with the CUDA ISP block, leading to the following pipeline:
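The two colour-balancing approaches named in the configuration table (HistogramStretch and GrayWorld) can be sketched on plain Python lists. This is a generic CPU illustration of per-channel histogram stretching with percentile clipping and of the classic Gray World assumption; it is not the CUDA ISP implementation, and the function names and the 1%/99% default percentiles are hypothetical.

```python
def _percentile(sorted_vals, p):
    """Nearest-rank style percentile on an already sorted list (0 <= p <= 1)."""
    idx = round(p * (len(sorted_vals) - 1))
    return sorted_vals[idx]


def histogram_stretch(channel, low_p=0.01, high_p=0.99, max_val=255):
    """Linearly map the [low_p, high_p] percentile range of one channel
    onto [0, max_val], clipping values outside that interval."""
    s = sorted(channel)
    lo, hi = _percentile(s, low_p), _percentile(s, high_p)
    if hi <= lo:  # flat channel: nothing to stretch
        return list(channel)
    scale = max_val / (hi - lo)
    return [min(max_val, max(0, round((v - lo) * scale))) for v in channel]


def stretch_rgb(r, g, b, **kw):
    """Apply the histogram stretch independently to each colour channel."""
    return [histogram_stretch(c, **kw) for c in (r, g, b)]


def gray_world(r, g, b, max_val=255):
    """Gray World white balance: scale each channel so that its mean
    equals the mean of all three channel means."""
    means = [sum(c) / len(c) for c in (r, g, b)]
    gray = sum(means) / 3
    out = []
    for c, m in zip((r, g, b), means):
        gain = gray / m if m > 0 else 1.0
        out.append([min(max_val, round(v * gain)) for v in c])
    return out


if __name__ == "__main__":
    # Hypothetical 3-pixel image with a colour cast, split into channels.
    r, g, b = [120, 130, 140], [60, 70, 80], [90, 100, 110]
    print(gray_world(r, g, b))
    print(stretch_rgb(r, g, b, low_p=0.0, high_p=1.0))
```

Stretching each channel separately both expands contrast and removes colour casts, whereas Gray World only rebalances channel gains; this is why the two variants can behave quite differently in image quality and cost.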

These blocks run concurrently as pipeline stages, so that each stage can work on a different frame. Because every frame still passes through all stages, removing a block shortens the frame processing time (latency), and optimizing any individual block also reduces it. In this case, the CUDA ISP removes one block from the pipeline.
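The effect of removing a block can be illustrated with a toy model in Python: each frame passes through every stage, so per-frame latency is the sum of stage times, while the slowest stage limits the frame rate. The stage names and timings below are hypothetical, and the model ignores queueing and display synchronization.

```python
# Toy model of a pipelined frame processor. Stage names and timings are
# HYPOTHETICAL; they only illustrate why removing a stage lowers latency.


def pipeline_latency_ms(stages):
    """A frame must traverse every stage, so its latency is the sum."""
    return sum(stages.values())


def frame_interval_ms(stages):
    """With all stages working concurrently on different frames,
    the slowest stage limits throughput."""
    return max(stages.values())


baseline = {"receive": 4.0, "isp_processor": 3.0, "demosaic": 2.0,
            "gamma": 2.0, "display": 5.0}
# Drop "isp_processor" and replace "gamma" with a single CUDA ISP stage.
optimized = {"receive": 4.0, "demosaic": 2.0, "cuda_isp": 3.0, "display": 5.0}

if __name__ == "__main__":
    for name, stages in (("baseline", baseline), ("optimized", optimized)):
        print(f"{name}: latency {pipeline_latency_ms(stages):.1f} ms, "
              f"frame interval {frame_interval_ms(stages):.1f} ms")
```

With these made-up numbers, removing a stage lowers latency from 16 ms to 14 ms while the frame interval stays at 5 ms, because the slowest stage is unchanged: pipelining sustains the frame rate, but only fewer or faster stages reduce latency.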

One important consideration is that CUDA ISP does not support RGBA64, which HoloViz needs in this pipeline. The integration therefore adds the necessary conversions from RGBA64 to RGBA32 and back.
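The bit-depth conversion mentioned above can be sketched in Python with the standard `array` module, assuming RGBA64 means four 16-bit channels and RGBA32 four 8-bit channels per pixel. The shift-and-replicate scheme is a common choice but an assumption here; the conversion operators in the actual integration may round differently.

```python
from array import array


def rgba64_to_rgba32(pixels16: array) -> array:
    """Keep the 8 most significant bits of each 16-bit channel value."""
    return array("B", (v >> 8 for v in pixels16))


def rgba32_to_rgba64(pixels8: array) -> array:
    """Expand 8-bit values to 16 bits. Multiplying by 257 replicates the
    byte into both halves, so 0 -> 0 and 255 -> 65535."""
    return array("H", (v * 257 for v in pixels8))


# One RGBA pixel with 16 bits per channel (hypothetical values).
px16 = array("H", [65535, 32768, 256, 65535])
px8 = rgba64_to_rgba32(px16)   # 8 bits per channel, 32 bits per pixel
back = rgba32_to_rgba64(px8)   # the low byte dropped above is not recovered
print(list(px8), list(back))
```

The 8-bit to 16-bit to 8-bit round trip is lossless, while going from 16 bits to 8 bits discards the low byte of each channel.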

The following table shows the results obtained with the Holoscan Sensor Bridge, the NVIDIA Jetson AGX Orin, and the IMX274 imager:

| Configuration | Mean Latency (ms) | Uncertainty (ms) |
|---|---|---|
| Baseline | 41.61 | 8.35 |
| DP+ED+MAXN+JC+CISP-HS | 37.93 | 8.35 |
| DP+ED+MAXN+JC+CISP-GW | 49.76 | 8.35 |
| DP+ED+MAXN+JC+DBOnly | 35.96 | 8.35 |

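These results were obtained with the Jetson in maximum performance mode (MAXN with Jetson Clocks) and the CUDA ISP configured for 24-bit colour depth. With the HistogramStretch algorithm, the optimized pipeline lowers latency from 41.61 ms to 37.93 ms, an 8.8% reduction, whereas the GrayWorld variant raises it to 49.76 ms.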
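The percentages quoted above follow directly from the table. The Python snippet below computes the relative change of each CUDA ISP configuration against the 41.61 ms baseline.

```python
BASELINE_MS = 41.61

# Mean latencies copied from the CUDA ISP results table.
CONFIGS_MS = {
    "DP+ED+MAXN+JC+CISP-HS": 37.93,
    "DP+ED+MAXN+JC+CISP-GW": 49.76,
    "DP+ED+MAXN+JC+DBOnly": 35.96,
}


def relative_change_pct(value_ms: float, baseline_ms: float = BASELINE_MS) -> float:
    """Percentage change versus the baseline: negative means lower latency."""
    return 100.0 * (value_ms - baseline_ms) / baseline_ms


if __name__ == "__main__":
    for name, ms in CONFIGS_MS.items():
        print(f"{name}: {ms:.2f} ms ({relative_change_pct(ms):+.1f}% vs baseline)")
```

It reports about -8.8% for CISP-HS, +19.6% for CISP-GW, and -13.6% for DBOnly.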

Further improvement is possible by offloading image signal processing to the FPGA, which reduces the load on the Jetson. The FPGA can potentially reduce latency thanks to the dataflow execution pattern of FPGA hardware acceleration. Without altering the FPGA design, the minimum latency obtained is 35.96 ms, measured with only a debayer in the image signal processing pipeline; this defines the latency floor for the current design.

Latency results summary

The table below summarizes the latency measured for each configuration tested. In these tests, the Holoscan Sensor Bridge baseline reached less than one third of the latency of the MIPI capture path (41.61 ms versus 134.984 ms), making it a strong option for low-latency applications on NVIDIA Jetson systems.

| Configuration | Mean Latency (ms) | Uncertainty (ms) |
|---|---|---|
| MIPI: IMX274 | 134.984 | 8.35 |
| MIPI-GMSL3: IMX542 with MAX96793/MAX96792A | 185 | 8.35 |
| Holoscan Sensor Bridge: Baseline | 41.61 | 8.35 |
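To relate the summary figures to the 60 fps capture rate, the Python sketch below expresses each latency in frame periods and as a multiple of the Holoscan Sensor Bridge baseline. Keep in mind that the MIPI and GMSL3 results were measured with a GStreamer pipeline, with auto exposure and auto white balancing enabled, and on different JetPack versions, so the ratios are indicative rather than a controlled comparison.

```python
FRAME_PERIOD_MS = 1000.0 / 60  # 60 fps camera and 60 Hz display

# Mean latencies copied from the summary table.
SUMMARY_MS = {
    "MIPI: IMX274": 134.984,
    "MIPI-GMSL3: IMX542": 185.0,
    "Holoscan Sensor Bridge: Baseline": 41.61,
}


def in_frames(latency_ms: float) -> float:
    """Express a latency as a number of 60 Hz frame periods."""
    return latency_ms / FRAME_PERIOD_MS


hsb = SUMMARY_MS["Holoscan Sensor Bridge: Baseline"]

if __name__ == "__main__":
    for name, ms in SUMMARY_MS.items():
        print(f"{name:34s} {ms:8.2f} ms  {in_frames(ms):5.1f} frames  "
              f"{ms / hsb:4.2f}x HSB baseline")
```

The baseline corresponds to about 2.5 frame periods, against roughly 8.1 for the MIPI capture and 11.1 for the GMSL3 capture.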

[1] Holoscan IMX274 Example: https://docs.nvidia.com/holoscan/sensor-bridge/1.0.0/examples.html#imx274-player-example