Exploring NVIDIA Jetson Orin Nano Super Mode performance using Generative AI


NVIDIA Jetson Orin Nano Super Mode

Introduction

RidgeRun is always looking for the newest technologies to improve the service that we offer to our clients and the community by staying ahead of industry trends. In this post, we explore the new NVIDIA Jetson Orin Nano™ Super Developer Kit and its potential by running some of NVIDIA’s Jetson Generative AI examples.

This post explores the Text Generation and Image Generation tutorials from the NVIDIA Jetson AI Lab. You’ll find CPU, GPU and memory performance metrics for the examples running in the 15 watts power mode versus the MAX power mode, that is, the Super Developer Kit mode, which operates at 25 watts.

Furthermore, at the end of this wiki, you can find the instructions on how to enable the Jetson Orin Nano Super Mode Configuration and run the Text Generation with ollama client and Stable Diffusion Image Generation tutorials.

Environment

The tutorials explored in this post are Text Generation and Image Generation. They were executed on a Jetson Orin Nano 8 GB with a 1 TB M.2 NVMe SSD. This Jetson Orin has an Ampere GPU architecture and 6 Arm CPU cores.

The JetPack used is version 6.1, which is the latest JetPack 6 version delivered by NVIDIA as of the date of writing this post and includes the Orin Super Developer Kit support.

The Jetson Orin supports different power modes. Two modes were used for this blog: the 15-Watts mode and the maximum power mode, which has no power budget and is related to the Super Developer Kit release.

Super Developer Kit mode capabilities

The Jetson Orin Nano Super Developer Kit configuration comparison with the original modes can be found in the following table, adapted from [1],[2]:

| | Original NVIDIA Jetson Orin Nano Developer Kit | NVIDIA Jetson Orin Nano Super Developer Kit |
|---|---|---|
| GPU | NVIDIA Ampere architecture, 635 MHz | NVIDIA Ampere architecture, 1,020 MHz |
| AI PERF | 40 INT8 TOPS (Sparse), 20 INT8 TOPS (Dense), 10 FP16 TFLOPs | 67 TOPS (Sparse), 33 TOPS (Dense), 17 FP16 TFLOPs |
| CPU | 6-core Arm Cortex-A78AE v8.2 64-bit CPU, 1.5 GHz | 6-core Arm Cortex-A78AE v8.2 64-bit CPU, 1.7 GHz |
| Memory | 8GB 128-bit LPDDR5, 68 GB/s | 8GB 128-bit LPDDR5, 102 GB/s |
| Module Power | 15W | 15W / 25W |

Table 1. Jetson Orin Nano Super Developer Kit capabilities comparison

Some capabilities can be checked by executing jetson_clocks and tegrastats on the board. The following output is for the Original NVIDIA Jetson Orin Nano Developer Kit:

RAM 5933/7620MB (lfb 21x1MB) SWAP 5/3810MB (cached 0MB) CPU [0%@1497,0%@1497,0%@1497,0%@1497,0%@1497,0%@1497] EMC_FREQ 0%@2133 GR3D_FREQ 0%@[611] NVDEC off NVJPG off NVJPG1 off VIC off OFA off APE 200 cpu@49.906C soc2@48.875C soc0@49.5C gpu@49.468C tj@50.062C soc1@50.062C VDD_IN 5313mW/5313mW VDD_CPU_GPU_CV 943mW/943mW VDD_SOC 1731mW/1731mW

On the other hand, the next execution is for NVIDIA Jetson Orin Nano Super Developer Kit:

RAM 929/7620MB (lfb 3x4MB) SWAP 0/3810MB (cached 0MB) CPU [0%@1728,0%@1728,0%@1728,0%@1728,0%@1728,0%@1728] EMC_FREQ 0%@3199 GR3D_FREQ 0%@[1007] NVDEC off NVJPG off NVJPG1 off VIC off OFA off APE 200 cpu@51.531C soc2@50.5C soc0@50.812C gpu@50.718C tj@51.531C soc1@51.468C VDD_IN 6848mW/6848mW VDD_CPU_GPU_CV 1495mW/1495mW VDD_SOC 2637mW/2637mW

Notice that the CPU frequency increased from approximately 1.5 GHz to 1.7 GHz, a difference of 11.76% relative to the Super mode frequency. The GPU frequency went from 611 MHz to 1007 MHz, a difference of 39.32% on the same basis.
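The percentages quoted above correspond to the frequency difference divided by the Super mode value. The following minimal Python sketch shows that calculation next to the more common convention of dividing by the original (baseline) value, which yields a larger figure for the same measurements. The function names are illustrative only, and the frequencies are copied from the two tegrastats lines above.

```python
def percent_of_new(baseline: float, new: float) -> float:
    """Difference expressed as a percentage of the new (Super mode) value."""
    return (new - baseline) / new * 100.0


def percent_over_baseline(baseline: float, new: float) -> float:
    """Difference expressed as a percentage of the original (baseline) value."""
    return (new - baseline) / baseline * 100.0


# Frequencies in MHz, taken from the tegrastats output shown above.
readings = {
    "CPU": (1497, 1728),
    "GPU": (611, 1007),
}

for name, (original, super_mode) in readings.items():
    print(
        f"{name}: {percent_of_new(original, super_mode):.2f}% of the new value, "
        f"{percent_over_baseline(original, super_mode):.2f}% over the baseline"
    )
```

Using the rounded 1.5 GHz and 1.7 GHz values instead of the exact tegrastats readings reproduces the 11.76% figure for the CPU.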

Results summary

Detailed instructions on how to enable the NVIDIA Jetson Orin Nano Super Developer Kit mode and run the NVIDIA generative AI tutorials can be found at the end of this wiki.

In addition, the performance metrics’ details can be taken from the figures in the next sections, which are summarized in the following bullet points:

- The duration taken for the text generation output when using the Super Developer Kit mode decreased by 26.74%, while the tokens/s increased by 31.38% in comparison with the 15 watts mode.

- The GPU average usage increased by 2.53% when using the MAX power mode, and its frequency during the inference reached a maximum value of 1015 MHz. The CPU and the memory usage decreased by 0.71% and 13.6% on average, respectively, when testing the text generation example.

- The duration taken for the generation of the image when using the Super Developer Kit mode decreased by 34.37%, while the iterations/s increased by 34.63% in comparison with the 15 watts mode.

- For image generation, the GPU average usage increased by 5.07% when using the MAX power mode in comparison with the 15 watts mode, and its frequency during the inference reached a maximum value of 1016 MHz. The CPU usage increased by 1.36% while the memory usage decreased by 0.37% on average.

- Also, by running the bandwidthTest in shmoo mode and taking the Device to Device Bandwidth, the test reported 101.9 GB/s in the Super Developer Kit mode.

Results and Performance

One of the main purposes of this post is to explore the performance that can be achieved for generative models when using the NVIDIA Jetson Orin Nano Super Developer Kit and to compare it with the 15 watts mode. There are plenty of metrics that can be extracted from these executions; the ones measured here are:

- CPU usage

- GPU usage

- RAM usage

- Tokens/s or iterations/s

The first three parameters are taken by parsing the NVIDIA tegrastats utility and plotting the measurements to have a better understanding of resource consumption. The execution time is reported by the application itself.
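As an illustration of that parsing step, here is a minimal Python sketch that extracts RAM, CPU and GPU figures from a single tegrastats line. It is not the script used for the plots in this post; the regular expressions and the `parse_tegrastats` name are assumptions based on the output format shown earlier, and the sample line is a shortened copy of that output.

```python
import re

# Shortened copy of the Super mode tegrastats line shown earlier in this post.
SAMPLE = (
    "RAM 929/7620MB (lfb 3x4MB) SWAP 0/3810MB (cached 0MB) "
    "CPU [0%@1728,0%@1728,0%@1728,0%@1728,0%@1728,0%@1728] "
    "EMC_FREQ 0%@3199 GR3D_FREQ 0%@[1007] NVDEC off"
)


def parse_tegrastats(line: str) -> dict:
    """Return RAM %, mean CPU %, GPU % and GPU MHz from one tegrastats line."""
    ram = re.search(r"RAM (\d+)/(\d+)MB", line)
    cpu = re.search(r"CPU \[([^\]]+)\]", line)
    gpu = re.search(r"GR3D_FREQ (\d+)%(?:@\[?(\d+)\]?)?", line)
    if not (ram and cpu and gpu):
        raise ValueError("unrecognized tegrastats line")

    # Cores that are powered off do not report a load and are skipped here.
    loads = [int(load) for load in re.findall(r"(\d+)%@\d+", cpu.group(1))]
    if not loads:
        raise ValueError("no online CPU cores found")

    used_mb, total_mb = int(ram.group(1)), int(ram.group(2))
    return {
        "ram_pct": 100.0 * used_mb / total_mb,
        "cpu_pct": sum(loads) / len(loads),
        "gpu_pct": float(gpu.group(1)),
        "gpu_mhz": int(gpu.group(2)) if gpu.group(2) else None,
    }


print(parse_tegrastats(SAMPLE))
```

Applying the function to every line of a tegrastats log gives the time series that can then be averaged or plotted.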

Text Generation with ollama client

The text generation model was tested on its capabilities for generating a response on what approach to follow to prevent race conditions in an application. The following prompt was used:

“What approach would you use to detect and prevent race conditions in a multithreaded application?”

Here are some questions that the user can ask the AI model.

15 watts mode results

This request took 49.97 seconds to complete, and the output was generated at a rate of 9.84 tokens/s when using the 15-Watts mode.

This same execution was used to measure performance and profile resource usage for the model on the Jetson. The resources of interest are GPU, CPU and RAM consumption. The following plots show a summary of the performance achieved by the model.

These plots show the impact on CPU, GPU, and memory usage when the model starts; on the GPU, usage reached an average of 93.88% during inference. On the CPU, however, we see a smaller increase with an average usage of 4.45%, with some spikes during inference execution. Regarding memory usage, the average was 86.35% and we see no significant change with a variation of 2% approximately during the inference; this is caused by the model being already loaded beforehand.

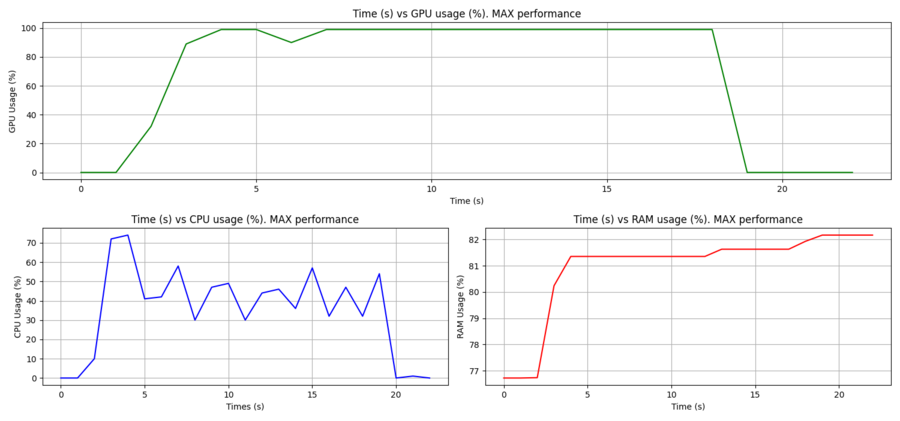

MAX mode results

When using the MAX mode, the request output was generated at the rate of 14.36 tokens/s and took 36.61 seconds to be accomplished.

The resource usage plots that summarize the performance are the following:

The GPU usage reached an average of 96.41% during inference. On the CPU side, we see an average usage of 3.74%. Regarding memory usage, the average was 72.75%, and the usage varied by approximately 1.75% during the inference; this is caused by the model being already loaded beforehand.

We can see that the duration taken for the text generation of the output when using the Super Developer Kit mode decreased by 26.74%, while the tokens/s increased by 31.38% in comparison with the 15 watts mode.

On the resource consumption side, the GPU average usage increased by 2.53% when using the MAX power mode, and the GPU reached a frequency of 1015 MHz. The CPU and the memory usage decreased by 0.71% and 13.6% on average, respectively.

Image Generation

The Image Generation generates an image based on a text input prompt. Here is the prompt used to create the following image and measure resource consumption:

Futuristic city with sunset, high quality, 4K image

15-Watts mode results

For the example executed in this mode, the model was loaded in 12.8 seconds and the image was generated in 16 seconds at a rate of 1.34 iterations/s.

The performance and the resource usage profile for the model were measured during this same execution on the Jetson. The following plots show a summary of the performance achieved in this power mode.

These plots show the CPU, GPU and memory usage percentages when the tutorial is run. The GPU usage reached an average of 93.93% during inference. On the CPU side, the average was 43.94%. The average memory usage was 81.13%, with an increase of 5.2%; this is caused by the model being already loaded beforehand.

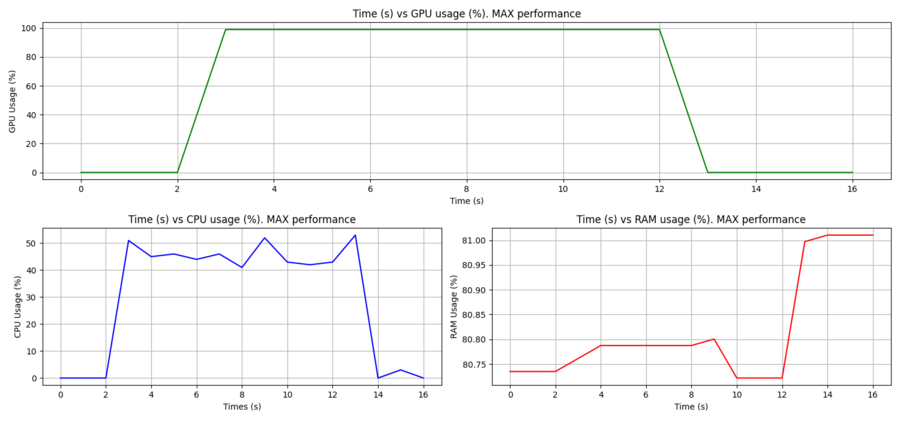

MAX mode results

On the Super Developer Kit mode side, the model was loaded in 9.4 seconds and the image was generated in 10.5 seconds at a rate of 2.05 iterations/s.

The plots that summarize the resource consumption are shown as follows.

The GPU usage reached an average of 99% during inference. On the CPU side, the average was 45.3%. The memory usage increased by approximately 1%, which is caused by the model being already loaded beforehand, while the average usage was 80.76%.

We can see that the duration taken for the generation of the image when using the Super Developer Kit mode decreased by 34.37%, while the iterations/s increased by 34.63% in comparison with the 15 watts mode.

On the resource consumption side, the GPU average usage increased by 5.07% when using the MAX power mode in comparison with the 15 watts mode, and its frequency reached a maximum value of 1016 MHz. The CPU usage increased by 1.36% while the memory usage decreased by 0.37% on average.

Conclusions

With the results generated in this blog, the NVIDIA Jetson Orin Nano Super Developer Kit was enabled with success and some Generative AI examples were executed.

On the performance metrics side, when using the Super Developer Kit mode, the text generation duration and the tokens/s improved by 26.74% and 31.38%, respectively, while the GPU usage increased by 2.53% with a maximum frequency of 1015 MHz reached during the inference. The CPU and memory usage decreased by 0.71% and 13.6% on average, respectively.

For the image generation example in the Super Developer Kit mode, the iterations/s and the generation duration improved by 34.63% and 34.37%, respectively. The GPU average usage increased by 5.07% with a maximum frequency of 1016 MHz reached during the inference. Also, the CPU usage increased by 1.36% while the memory usage decreased by 0.37% on average.

Furthermore, the bandwidthTest was executed in shmoo mode to measure the memory Device to Device Bandwidth. The test reported 101.9 GB/s in the Super Developer Kit mode. In addition to this, the CPU frequency increased from approximately 1.5 GHz to 1.7 GHz, a difference of 11.76% relative to the Super mode frequency. The GPU frequency went from 611 MHz to 1007 MHz, a difference of 39.32% on the same basis.

Finally, at RidgeRun we see an improvement when enabling the NVIDIA Jetson Orin Nano Super Developer Kit Mode and running the Jetson Generative AI examples. There are still a lot more things to explore in depth to adapt this technology to custom applications, but this survey allows us to explore the new NVIDIA Jetson Orin Nano capabilities. Watch out for more blogs on the topic and technical documentation in our developer’s wiki.

Jetson Orin Nano Super mode enabling and Generative AI running instructions

Find below the instructions to enable the Jetson Orin Nano Super mode configuration and the guidance on running the Jetson Generative AI Labs tutorials.

Enable the Super Mode Configuration

Step 1: Download the sources from the SDKManager

Download the sources from the SDKManager. Please, make sure that the configuration shown in the following image is being used:

The user can enable the Additional SDKS fields if they are needed.

Step 2: Define the Environment Variables

Make sure to run the following commands in the terminal that you will use to flash the Orin Nano.

export JETPACK=$HOME/nvidia/nvidia_sdk/JetPack_6.1_Linux_JETSON_ORIN_NANO_8GB_DEVKIT/Linux_for_Tegra

Step 3: Set Board in Recovery Mode

In order to flash the Orin, we must set it in recovery mode so that it can accept the files. The procedure to put the Orin in recovery mode is:

- Turn off the Jetson Orin Nano devkit and disconnect it from the power supply.

- Get the USB-A to USB-C or USB-C to USB-C cable and connect the USB-C end to your Orin Nano and the USB-A or USB-C end to your computer (the computer where you installed Jetpack)

- Connect a jumper between FC-REC pin and any GND pin.

- Connect the power supply.

At this point, the Orin Nano should be in recovery mode. To verify, you can run the following command on your host computer:

lsusb

If the Orin is in recovery mode, you should see a line similar to the following among the command output:

Bus 001 Device 011: ID 0955:7523 NVidia Corp
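If you prefer to script this check, the following Python sketch filters lsusb output for the NVIDIA USB vendor ID (0955) seen in the line above. It is a minimal sketch: the function names are hypothetical, any NVIDIA USB device on the host will match, and the second line of the sample text is a made-up entry added only to show the filtering.

```python
import subprocess

NVIDIA_VENDOR_ID = "0955"  # USB vendor ID shown in the lsusb line above


def find_nvidia_devices(lsusb_output: str) -> list:
    """Return the lsusb lines that belong to the NVIDIA vendor ID."""
    marker = f"ID {NVIDIA_VENDOR_ID}:"
    return [line.strip() for line in lsusb_output.splitlines() if marker in line]


def check_host() -> list:
    """Run lsusb on the host computer and return the matching lines."""
    result = subprocess.run(["lsusb"], capture_output=True, text=True, check=True)
    return find_nvidia_devices(result.stdout)


# Illustrative input; the second line is a made-up non-NVIDIA device.
sample = (
    "Bus 001 Device 011: ID 0955:7523 NVidia Corp\n"
    "Bus 001 Device 002: ID 8087:0a2b Intel Corp."
)
print(find_nvidia_devices(sample))
```

On the host computer, calling `check_host()` runs lsusb directly; an empty list suggests the board is not in recovery mode or the cable is not connected.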

Step 4: Apply binaries

These commands are intended to prepare the files for a correct flash.

cd $JETPACK

sudo ./apply_binaries.sh

sudo ./tools/l4t_flash_prerequisites.sh

Step 5 (Optional): Create a Default User

The default user/password can be configured after flashing if you can connect a display, keyboard, and mouse to the Orin Nano to complete the OEM configuration during the first boot. If you wish to save time and create a default user/password for your Orin before flashing, you can execute the commands below. Make sure to replace <user_name> and <password> with a username and password of your choice.

cd $JETPACK/tools

sudo ./l4t_create_default_user.sh -u <user_name> -p <password>

Step 6: Execute the Flash Script

NVIDIA provides a script for flashing the Orin in the JetPack directory. This script takes two arguments: the target board and the root device.

The following subsections show the command to flash each of the storage options available.

Option #1: NVMe

Execute the following commands:

cd $JETPACK

sudo ./tools/kernel_flash/l4t_initrd_flash.sh --external-device nvme0n1p1 \
  -c tools/kernel_flash/flash_l4t_t234_nvme.xml -p "-c bootloader/generic/cfg/flash_t234_qspi.xml" \
  --showlogs --network usb0 jetson-orin-nano-devkit-super internal

The flashing process will take a while, and should print a success message if the flash is finished successfully. After the flash script finishes successfully, the Orin will boot automatically.

Option #2: SD card

Execute the following commands:

cd $JETPACK

sudo ./tools/kernel_flash/l4t_initrd_flash.sh --external-device mmcblk0p1 \
  -c tools/kernel_flash/flash_l4t_t234_nvme.xml -p "-c bootloader/generic/cfg/flash_t234_qspi.xml" \
  --showlogs --network usb0 jetson-orin-nano-devkit-super internal

The flashing process will take a while and should print a success message if the flash is finished successfully. After the flash script finishes successfully, the Orin will boot automatically.

Option #3: USB Drive

sudo ./tools/kernel_flash/l4t_initrd_flash.sh --external-device sda1 \
  -c tools/kernel_flash/flash_l4t_t234_nvme.xml -p "-c bootloader/generic/cfg/flash_t234_qspi.xml" \
  --showlogs --network usb0 jetson-orin-nano-devkit-super internal

The flashing for any of the methods used above should finish by showing the following messages:

Flash is successful
Reboot device
Cleaning up...

Testing the MaxN mode

A way to confirm that the board was flashed with the super mode is by checking the /etc/nvpmodel.conf file. Based on Supported Modes and Power Efficiency, the MaxN mode is available only when flashing with the `jetson-orin-nano-devkit-super` configurations. This file shows:

POWER_MODEL ID=2 NAME=MAXN
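This check can also be scripted. The Python sketch below collects the power model IDs and names from text in the format shown above and reports whether MAXN is among them. The `power_models` and `has_maxn` names are hypothetical, and the regular expression assumes each entry contains `POWER_MODEL ID=<n> NAME=<name>` as in the line above.

```python
import re

POWER_MODEL_RE = re.compile(r"POWER_MODEL\s+ID=(\d+)\s+NAME=(\S+)")


def power_models(conf_text: str) -> dict:
    """Map each power model ID to its name."""
    return {int(model_id): name for model_id, name in POWER_MODEL_RE.findall(conf_text)}


def has_maxn(conf_text: str) -> bool:
    """Report whether a MAXN power model is defined in the text."""
    return "MAXN" in power_models(conf_text).values()


# Line copied from the output above; on the board, read /etc/nvpmodel.conf instead.
sample = "POWER_MODEL ID=2 NAME=MAXN"
print(power_models(sample), has_maxn(sample))
```

On the Jetson, the text can be obtained with `open("/etc/nvpmodel.conf").read()` and passed to `has_maxn`.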

For changing to the MaxN mode, execute the following command:

sudo /usr/sbin/nvpmodel -m 2

Then reboot the board and check it as:

sudo /usr/sbin/nvpmodel -q
NV Power Mode: MAXN
2

Also, tegrastats can be run and checked as:

sudo tegrastats --readall
RAM 929/7620MB (lfb 3x4MB) SWAP 0/3810MB (cached 0MB) CPU [0%@1728,0%@1728,0%@1728,0%@1728,0%@1728,0%@1728] EMC_FREQ 0%@3199 GR3D_FREQ 0%@[1007] NVDEC off NVJPG off NVJPG1 off VIC off OFA off APE 200 cpu@51.531C soc2@50.5C soc0@50.812C gpu@50.718C tj@51.531C soc1@51.468C VDD_IN 6848mW/6848mW VDD_CPU_GPU_CV 1495mW/1495mW VDD_SOC 2637mW/2637mW

Notice that the reported CPU frequency is approximately 1.7 GHz (1728 MHz) while the GPU frequency is 1007 MHz, in line with the values reported in NVIDIA Jetson Orin Nano Developer Kit Gets a “Super” Boost.

Furthermore, cuda-samples/Samples/1_Utilities/bandwidthTest can be executed to measure the memcopy bandwidth of the GPU and memcpy bandwidth across PCI-e. To execute this, follow the next instructions.

Clone the cuda-samples repository.

git clone https://github.com/NVIDIA/cuda-samples.git

Compile the bandwidthTest sample.

cd cuda-samples/Samples/1_Utilities/bandwidthTest/

make

Maximize Jetson performance by running the jetson_clocks script.

sudo jetson_clocks

Execute the test as follows to get more detailed information regarding the memory bandwidth.

./bandwidthTest --mode=shmoo --device=all

Check the Device to Device Bandwidth. For the next example, we got:

1000000 101.9
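Picking the peak value out of a long shmoo table by eye is error-prone, so here is a minimal Python sketch that finds the fastest row in one section of the bandwidthTest output. It assumes you pass only the text of the Device to Device section, and `peak_bandwidth` is a hypothetical helper name. The sample rows are copied from the output obtained for this post.

```python
import re

# Each table row is "<transfer size in bytes> <bandwidth in GB/s>".
ROW_RE = re.compile(r"\b(\d+)\s+(\d+\.\d+)\b")


def peak_bandwidth(section_text: str) -> tuple:
    """Return (transfer_size_bytes, bandwidth_gb_s) of the fastest row."""
    rows = ROW_RE.findall(section_text)
    if not rows:
        raise ValueError("no bandwidth rows found")
    size, bandwidth = max(rows, key=lambda row: float(row[1]))
    return int(size), float(bandwidth)


# A few Device to Device rows from the run described in this post.
sample = "800000 90.7 900000 97.3 1000000 101.9 2000000 63.2 3000000 69.6"
print(peak_bandwidth(sample))
```

The pattern works whether the rows are on separate lines or on a single line, since it only relies on whitespace between the two columns.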

Find the full output below.


./bandwidthTest --mode=shmoo --device=all [CUDA Bandwidth Test] - Starting... !!!!!Cumulative Bandwidth to be computed from all the devices !!!!!! Running on... Device 0: Orin Shmoo Mode ................................................................................. Host to Device Bandwidth, 1 Device(s) PINNED Memory Transfers Transfer Size (Bytes) Bandwidth(GB/s) 1000 0.8 2000 0.4 3000 0.7 4000 0.9 5000 1.1 6000 1.3 7000 1.5 8000 1.7 9000 1.9 10000 2.0 11000 2.2 12000 2.4 13000 2.5 14000 2.8 15000 2.9 16000 3.1 17000 3.3 18000 3.4 19000 3.6 20000 3.7 22000 4.1 24000 4.4 26000 8.0 28000 8.8 30000 9.4 32000 9.6 34000 10.5 36000 11.1 38000 11.9 40000 12.1 42000 12.8 44000 13.6 46000 14.0 48000 14.7 50000 14.8 60000 16.5 70000 17.2 80000 18.2 90000 19.1 100000 19.9 200000 23.6 300000 25.1 400000 25.8 500000 22.8 600000 23.7 700000 24.4 800000 24.7 900000 25.2 1000000 25.3 2000000 27.0 3000000 27.6 4000000 27.8 5000000 28.0 6000000 28.1 7000000 28.1 8000000 28.3 9000000 28.3 10000000 28.3 11000000 28.3 12000000 28.4 13000000 28.4 14000000 28.4 15000000 28.4 16000000 28.5 18000000 28.5 20000000 28.6 22000000 28.6 24000000 28.6 26000000 28.6 28000000 28.6 30000000 28.6 32000000 28.6 36000000 28.6 40000000 28.6 44000000 28.6 48000000 28.6 52000000 28.7 56000000 28.7 60000000 28.7 64000000 28.7 68000000 28.7 ................................................................................. 
Device to Host Bandwidth, 1 Device(s) PINNED Memory Transfers Transfer Size (Bytes) Bandwidth(GB/s) 1000 0.3 2000 0.7 3000 1.0 4000 1.3 5000 1.7 6000 1.9 7000 2.3 8000 2.7 9000 3.0 10000 3.4 11000 3.6 12000 4.1 13000 4.4 14000 4.7 15000 5.0 16000 5.4 17000 5.8 18000 6.1 19000 6.6 20000 6.9 22000 7.4 24000 8.4 26000 9.0 28000 9.8 30000 10.5 32000 11.3 34000 11.8 36000 12.5 38000 13.4 40000 13.9 42000 14.7 44000 15.5 46000 16.2 48000 17.0 50000 18.0 60000 19.4 70000 20.3 80000 21.7 90000 22.5 100000 23.1 200000 25.7 300000 26.6 400000 27.0 500000 23.4 600000 24.7 700000 24.7 800000 25.7 900000 25.4 1000000 26.9 2000000 26.6 3000000 27.7 4000000 28.0 5000000 28.1 6000000 28.3 7000000 28.2 8000000 28.4 9000000 28.4 10000000 28.4 11000000 28.4 12000000 28.4 13000000 28.5 14000000 28.4 15000000 28.5 16000000 28.5 18000000 28.5 20000000 28.6 22000000 28.6 24000000 28.6 26000000 28.6 28000000 28.6 30000000 28.6 32000000 28.6 36000000 28.6 40000000 28.6 44000000 28.7 48000000 28.7 52000000 28.6 56000000 28.6 60000000 28.7 64000000 28.7 68000000 28.6 ................................................................................. 
Device to Device Bandwidth, 1 Device(s) PINNED Memory Transfers Transfer Size (Bytes) Bandwidth(GB/s) 1000 0.5 2000 0.8 3000 1.1 4000 1.4 5000 1.8 6000 2.1 7000 2.4 8000 2.8 9000 3.2 10000 3.5 11000 3.9 12000 4.3 13000 4.6 14000 5.0 15000 5.4 16000 5.7 17000 6.1 18000 6.3 19000 6.6 20000 7.1 22000 7.9 24000 8.5 26000 9.1 28000 9.8 30000 10.6 32000 11.3 34000 11.9 36000 12.5 38000 13.3 40000 14.0 42000 14.6 44000 15.2 46000 16.2 48000 16.8 50000 17.1 60000 20.2 70000 15.8 80000 17.8 90000 20.3 100000 22.4 200000 38.8 300000 52.5 400000 69.0 500000 71.8 600000 83.6 700000 86.3 800000 90.7 900000 97.3 1000000 101.9 2000000 63.2 3000000 69.6 4000000 72.3 5000000 74.8 6000000 76.0 7000000 77.7 8000000 78.2 9000000 79.3 10000000 79.9 11000000 80.1 12000000 80.5 13000000 80.7 14000000 80.4 15000000 81.1 16000000 81.6 18000000 82.1 20000000 82.1 22000000 82.8 24000000 82.5 26000000 82.9 28000000 83.1 30000000 82.8 32000000 83.5 36000000 83.5 40000000 83.6 44000000 83.7 48000000 83.6 52000000 83.9 56000000 84.0 60000000 84.1 64000000 84.5 68000000 84.1 Result = PASS NOTE: The CUDA Samples are not meant for performance measurements. Results may vary when GPU Boost is enabled.

Set up the Jetson Orin Nano

Follow the next instructions from NVIDIA Jetson AI Lab / Tips—SSD + Docker to set up the Jetson Orin Nano before running some Generative AI examples to measure performance. Below are the instructions for you to follow.

To install the SSD, the following video can be used as a guide: Jetson Orin Nano Tutorial: SSD Install, Boot, and JetPack Setup - Full Guide!

When the SSD is installed and the Jetson is up, the SSD can be verified on PCI bus as a new memory controller:

lspci

The output should look like the following:

0004:01:00.0 Non-Volatile memory controller: Sandisk Corp Device 5019 (rev 01)

Format and set up auto-mount

1. Run the following command to find the SSD device

lsblk

The output should look like the following:

nvme0n1 259:0 0 931,5G 0 disk

In this case, the device is nvme0n1

2. Format the SSD, create a mount point, and mount it to the filesystem.

sudo mkfs.ext4 /dev/nvme0n1

sudo mkdir /ssd

sudo mount /dev/nvme0n1 /ssd

3. To ensure that the mount persists after boot, add an entry to the fstab file:

3.1 Identify the SSD UUID:

lsblk -f

Then, add a new entry to the fstab file:

sudo vi /etc/fstab

Insert UUID value found from lsblk -f. For example:

UUID=d390172d-1e75-43d4-a66f-6c719d102329 /ssd/ ext4 defaults 0 2

Finally, change the ownership of the /ssd directory.

sudo chown ${USER}:${USER} /ssd

Set up Docker

1. Install the nvidia-container package. Jetson Linux (L4T) R36.x (JetPack 6.x) users should follow the next instructions.

sudo apt update

sudo apt install -y nvidia-container curl

curl https://get.docker.com | sh && sudo systemctl --now enable docker

sudo nvidia-ctk runtime configure --runtime=docker

2. Restart the Docker service and run Docker as a non-root user (no sudo).

sudo systemctl restart docker

sudo usermod -aG docker $USER

newgrp docker

3. Add default runtime in /etc/docker/daemon.json.

3.1. First open the daemon.json file with sudo

sudo vi /etc/docker/daemon.json

3.2. Add the "default-runtime": "nvidia" line as:

{

"runtimes": {

"nvidia": {

"path": "nvidia-container-runtime",

"runtimeArgs": []

}

},

"default-runtime": "nvidia"

}

4. Restart docker.

sudo systemctl daemon-reload && sudo systemctl restart docker

Migrate Docker directory to SSD

Use the SSD as an extra storage capacity to hold the storage-demanding Docker directory by following the below instructions.

1. Stop the Docker service.

sudo systemctl stop docker

2. Move the existing Docker directory to the SSD.

sudo du -csh /var/lib/docker/ && \
  sudo mkdir /ssd/docker && \
  sudo rsync -axPS /var/lib/docker/ /ssd/docker/ && \
  sudo du -csh /ssd/docker/

3. Edit /etc/docker/daemon.json with sudo to set the data-root path as follows:

{

"runtimes": {

"nvidia": {

"path": "nvidia-container-runtime",

"runtimeArgs": []

}

},

"default-runtime": "nvidia",

"data-root": "/ssd/docker"

}
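As an alternative to editing the file by hand, the same settings can be produced programmatically. The Python sketch below merges the NVIDIA runtime, the default runtime and the data-root path into an existing configuration dictionary without discarding other keys. `with_nvidia_defaults` is a hypothetical helper name, and the sketch only prints the result, because writing /etc/docker/daemon.json requires root privileges.

```python
import json


def with_nvidia_defaults(config: dict, data_root: str = "/ssd/docker") -> dict:
    """Return a copy of config with the settings described above applied."""
    updated = dict(config)

    # Keep any runtimes that are already registered and add nvidia if missing.
    runtimes = dict(updated.get("runtimes", {}))
    runtimes.setdefault(
        "nvidia", {"path": "nvidia-container-runtime", "runtimeArgs": []}
    )

    updated["runtimes"] = runtimes
    updated["default-runtime"] = "nvidia"
    updated["data-root"] = data_root
    return updated


# Start from an empty configuration; on the board, load the existing file instead.
print(json.dumps(with_nvidia_defaults({}), indent=4))
```

On the Jetson, the starting dictionary would come from `json.load` on the existing /etc/docker/daemon.json, and the printed result can be written back with sudo.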

4. Rename the old Docker data directory.

sudo mv /var/lib/docker /var/lib/docker.old

5. Restart the Docker daemon.

sudo systemctl daemon-reload && \
  sudo systemctl restart docker && \
  sudo journalctl -u docker

Verification

1. Pull the nvcr.io/nvidia/l4t-base:r35.2.1 image.

docker pull nvcr.io/nvidia/l4t-base:r35.2.1

2. When the above command finishes, check the /ssd as follows:

docker info | grep Root
Docker Root Dir: /ssd/docker

sudo ls -l /ssd/docker/
total 44
drwx--x--x 4 root root 4096 Mar 22 11:44 buildkit
drwx--x--- 2 root root 4096 Mar 22 11:44 containers
drwx------ 3 root root 4096 Mar 22 11:44 image
drwxr-x--- 3 root root 4096 Mar 22 11:44 network
drwx--x--- 13 root root 4096 Mar 22 16:20 overlay2
drwx------ 4 root root 4096 Mar 22 11:44 plugins
drwx------ 2 root root 4096 Mar 22 16:19 runtimes
drwx------ 2 root root 4096 Mar 22 11:44 swarm
drwx------ 2 root root 4096 Mar 22 16:20 tmp
drwx------ 2 root root 4096 Mar 22 11:44 trust
drwx-----x 2 root root 4096 Mar 22 16:19 volumes

docker info | grep -e "Runtime" -e "Root"
Runtimes: io.containerd.runtime.v1.linux nvidia runc io.containerd.runc.v2
Default Runtime: nvidia
Docker Root Dir: /ssd/docker

Run Text Generation with ollama client tutorial

Remember to go to the directory allocated to the NVMe and run the following commands.

1. Install Ollama, which officially supports Jetson and CUDA.

curl -fsSL https://ollama.com/install.sh | sh

The installer sets up ollama serve. Check the usage as:

ollama --help
Large language model runner
Usage:
  ollama [flags]
  ollama [command]
Available Commands:
  serve Start ollama
  create Create a model from a Modelfile
  show Show information for a model
  run Run a model
  stop Stop a running model
  pull Pull a model from a registry
  push Push a model to a registry
  list List models
  ps List running models
  cp Copy a model
  rm Remove a model
  help Help about any command
Flags:
  -h, --help help for ollama
  -v, --version Show version information
Use "ollama [command] --help" for more information about a command

2. Then set up a container for running the text generation with ollama client tutorial.

cd /ssd

git clone https://github.com/dusty-nv/jetson-containers

bash jetson-containers/install.sh

The install.sh script installs some Python dependencies for running the Jetson AI Lab docker images. The jetson-containers/build.sh, run and autotag scripts are responsible for finding a compatible docker image based on the example the user wants to run; in this case, the dustynv/ollama:0.5.1-r36.4.0 image.

3. Run the docker container for ollama using jetson-containers as:

jetson-containers run --name ollama $(autotag ollama) ollama run mistral

mistral is a 7B model released by Mistral AI.

After running the above command, the following output should be shown:

jetson-containers run --name ollama $(autotag ollama) ollama run mistral
Namespace(packages=['ollama'], prefer=['local', 'registry', 'build'], disable=[''], user='dustynv', output='/tmp/autotag', quiet=False, verbose=False)
-- L4T_VERSION=36.4.2 JETPACK_VERSION=5.1 CUDA_VERSION=12.6
-- Finding compatible container image for ['ollama']
dustynv/ollama:0.5.1-r36.4.0
V4L2_DEVICES:
+ docker run --runtime nvidia -it --rm --network host --shm-size=8g --volume /tmp/argus_socket:/tmp/argus_socket --volume /etc/enctune.conf:/etc/enctune.conf --volume /etc/nv_tegra_release:/etc/nv_tegra_release --volume /tmp/nv_jetson_model:/tmp/nv_jetson_model --volume /var/run/dbus:/var/run/dbus --volume /var/run/avahi-daemon/socket:/var/run/avahi-daemon/socket --volume /var/run/docker.sock:/var/run/docker.sock --volume /ssd/jetson-containers/data:/data -v /etc/localtime:/etc/localtime:ro -v /etc/timezone:/etc/timezone:ro --device /dev/snd -e PULSE_SERVER=unix:/run/user/1000/pulse/native -v /run/user/1000/pulse:/run/user/1000/pulse --device /dev/bus/usb --device /dev/i2c-0 --device /dev/i2c-1 --device /dev/i2c-2 --device /dev/i2c-4 --device /dev/i2c-5 --device /dev/i2c-7 --device /dev/i2c-9 -v /run/jtop.sock:/run/jtop.sock --name ollama dustynv/ollama:0.5.1-r36.4.0 ollama run mistral
>>> Send a message (/? for help)

The message sent was: What approach would you use to detect and prevent race conditions in a multithreaded application?

4. To check the tokens per second calculation, check the ollama API.

4.1. Run the ollama container as:

jetson-containers run --name ollama $(autotag ollama)

4.2. Do a request to get a response in one reply as:

curl http://localhost:11434/api/generate -d '{

"model": "mistral",

"prompt": "What approach would you use to detect and prevent race conditions in a multithreaded application?",

"stream": false

}'

When the response is generated, check the eval_count and eval_duration parameters, which are the number of tokens in the response and the time in nanoseconds spent generating the response, respectively. To calculate the tokens per second (tokens/s), divide eval_count by eval_duration and multiply by 10^9. Check an example output below.


root@nvidia-desktop:/# curl http://localhost:11434/api/generate -d '{

"model": "mistral",

"prompt": "What approach would you use to detect and prevent race conditions in a multithreaded application?",

"stream": false

}'

{"model":"mistral","created_at":"2024-12-23T22:35:48.572961262Z","response":"1. Use Synchronized Blocks or Methods: In Java, the `synchronized` keyword can be used to ensure that only one thread can access a block of code or a method at a time. This can help avoid race conditions by ensuring exclusive access to shared resources.\n\n2. Atomic Variables: Use atomic variables whenever possible for simple operations on shared data. In Java, the `AtomicInteger`, `AtomicLong`, etc., are examples of atomic variables. They provide thread-safe operations that can be updated without the need for explicit synchronization.\n\n3. Lock Objects: Instead of using the `synchronized` keyword at the method level, you can use custom lock objects. This allows finer-grained control over the locked resources and can help reduce contention.\n\n4. Immutable Objects: Whenever possible, make your shared objects immutable. If an object cannot be modified after it is created, there is no need for synchronization when accessing that object.\n\n5. Use Libraries with Concurrency Support: Consider using libraries designed to handle concurrent programming, such as Java's ExecutorService or ConcurrentHashMap. These libraries provide built-in concurrency support and can help prevent race conditions.\n\n6. Data Race Detection Tools: Use static analysis tools like JThreadScope, Helgrind, or Valgrind's thread tool to detect potential data races in your code. These tools can help find race conditions during development that may be difficult to identify manually.\n\n7. Testing and Design Patterns: Write tests to verify the correctness of your multithreaded application under various scenarios, paying special attention to areas where race conditions might occur. Use design patterns like producer-consumer, barrier, or double-checked locking to manage concurrent access to shared resources in a more structured way.\n\n8. Avoid Shared State: Minimize the use of shared state by using message passing instead. 
This can help reduce the likelihood of race conditions and make your code easier to reason about.\n\n9. Use Wait, Notify, and Condition Variables Correctly: In Java, these constructs can be used to coordinate the actions of threads. Misuse can lead to race conditions; ensure you understand how they work and use them appropriately.\n\n10. Keep Code Simple: Complex code is more likely to contain race conditions. Strive for simplicity in your multithreaded design, and focus on clear and concise logic.","done":true,"done_reason":"stop","context":[3,29473,2592,5199,1450,1136,1706,1066,7473,1072,6065,6709,5099,1065,1032,3299,1140,1691,1054,5761,29572,4,1027,29508,29491,6706,1086,15706,2100,9831,29481,1210,10779,29481,29515,1328,12407,29493,1040,2320,29481,15706,2100,29600,24015,1309,1115,2075,1066,6175,1137,1633,1392,6195,1309,3503,1032,3492,1070,3464,1210,1032,2806,1206,1032,1495,29491,1619,1309,2084,5229,6709,5099,1254,20851,15127,3503,1066,7199,6591,29491,781,781,29518,29491,2562,8180,15562,3329,29515,6706,19859,9693,12094,3340,1122,4356,7701,1124,7199,1946,29491,1328,12407,29493,1040,2320,3935,8180,8164,7619,2320,3935,8180,8236,7619,5113,2831,1228,10022,1070,19859,9693,29491,2074,3852,6195,29501,8016,7701,1137,1309,1115,9225,2439,1040,1695,1122,10397,15709,2605,29491,781,781,29538,29491,18350,5393,29481,29515,8930,1070,2181,1040,2320,29481,15706,2100,29600,24015,1206,1040,2806,2952,29493,1136,1309,1706,3228,5112,7465,29491,1619,6744,1622,1031,29501,1588,2506,3370,1522,1040,12190,6591,1072,1309,2084,8411,1447,2916,29491,781,781,29549,29491,3004,14650,5393,29481,29515,28003,3340,29493,1806,1342,7199,7465,1271,14650,29491,1815,1164,2696,4341,1115,12220,1792,1146,1117,4627,29493,1504,1117,1476,1695,1122,15709,2605,1507,3503,1056,1137,2696,29491,781,781,29550,29491,6706,1161,17932,1163,2093,17482,11499,29515,12540,2181,24901,6450,1066,5037,1147,3790,17060,29493,2027,1158,12407,29510,29481,12431,1039,3096,1210,2093,3790,21120,29491,3725,24901,3852,5197,29501,1030,1147,17482
,2528,1072,1309,2084,6065,6709,5099,29491,781,781,29552,29491,6052,20824,1152,23588,27026,29515,6706,1830,6411,7808,1505,1243,7005,9920,29493,6192,1588,1275,29493,1210,4242,1588,1275,29510,29481,6195,4689,1066,7473,5396,1946,17277,1065,1342,3464,29491,3725,7808,1309,2084,2068,6709,5099,2706,4867,1137,1761,1115,4564,1066,9819,23828,29491,781,781,29555,29491,4503,1056,1072,9416,27340,29481,29515,12786,8847,1066,12398,1040,5482,2235,1070,1342,3299,1140,1691,1054,5761,1684,4886,22909,29493,10728,3609,5269,1066,5788,1738,6709,5099,2427,6032,29491,6706,3389,12301,1505,14644,29501,17893,1031,29493,20412,29493,1210,4347,29501,13181,5112,1056,1066,9362,1147,3790,3503,1066,7199,6591,1065,1032,1448,29197,1837,29491,781,781,29551,29491,27806,2063,2095,4653,29515,3965,1089,1421,1040,1706,1070,7199,2433,1254,2181,3696,10488,4287,29491,1619,1309,2084,8411,1040,25045,1070,6709,5099,1072,1806,1342,3464,7857,1066,3379,1452,29491,781,781,29542,29491,6706,13886,29493,3048,2343,29493,1072,29005,15562,3329,3966,3891,1114,29515,1328,12407,29493,1935,5890,29481,1309,1115,2075,1066,18005,1040,7536,1070,18575,29491,22065,2498,1309,2504,1066,6709,5099,29513,6175,1136,3148,1678,1358,1539,1072,1706,1474,7350,2767,29491,781,781,29508,29502,29491,11107,8364,14656,29515,27735,3464,1117,1448,4685,1066,7769,6709,5099,29491,1430,6784,1122,26001,1065,1342,3299,1140,1691,1054,3389,29493,1072,4000,1124,3849,1072,3846,1632,12176,29491],"total_duration":36658984876,"load_duration":31889330,"prompt_eval_count":24,"prompt_eval_duration":9000000,"eval_count":526,"eval_duration":36616000000}
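The timing fields at the end of the response above can be turned into a throughput figure. Ollama reports its `*_duration` fields in nanoseconds, so the generation rate is `eval_count` divided by `eval_duration` in seconds. The following is a minimal sketch with the values copied from that response; the `summarize` helper and the dictionary name are hypothetical and are not part of the tutorial.

```python
# Timing fields copied from the final JSON object of the response above.
# Ollama reports every *_duration field in nanoseconds.
response_stats = {
    "total_duration": 36658984876,
    "load_duration": 31889330,
    "prompt_eval_count": 24,
    "prompt_eval_duration": 9000000,
    "eval_count": 526,
    "eval_duration": 36616000000,
}

NS_PER_SECOND = 1_000_000_000


def summarize(stats):
    """Convert Ollama timing fields into seconds and tokens per second.

    Hypothetical helper written for illustration only.
    """
    eval_seconds = stats["eval_duration"] / NS_PER_SECOND
    return {
        "total_seconds": stats["total_duration"] / NS_PER_SECOND,
        "load_seconds": stats["load_duration"] / NS_PER_SECOND,
        "generated_tokens": stats["eval_count"],
        "tokens_per_second": stats["eval_count"] / eval_seconds,
    }


summary = summarize(response_stats)
for name, value in summary.items():
    print(f"{name}: {value:.2f}")
```

For this run, the rate works out to roughly 14.4 generated tokens per second (526 tokens in about 36.6 seconds).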

Run Stable Diffusion Image generation tutorial

At the time of writing this wiki page, following the instructions in the Jetson AI Lab for this tutorial caused some issues. As a workaround, run the container as follows:

docker run --runtime nvidia -it --name=text_generation --network host --volume /tmp/argus_socket:/tmp/argus_socket --volume /etc/enctune.conf:/etc/enctune.conf --volume /etc/nv_tegra_release:/etc/nv_tegra_release --volume /tmp/nv_jetson_model:/tmp/nv_jetson_model --volume /home/nvidia/jetson-containers/data:/data --device /dev/snd --device /dev/bus/usb dustynv/text-generation-webui:r36.2.0

Then, follow the instructions for creating images in NVIDIA_Jetson_Generative_AI_Lab_RidgeRun_Exploration#Create_images.

The terminal where the Docker container is running also reports useful information: the model loading time, the parameters of the generated image, the time taken, and the iterations per second achieved while generating it. See the example below.


Model loaded in 9.4s (load weights from disk: 1.8s, create model: 0.8s, apply weights to model: 5.6s, apply half(): 0.1s, load textual inversion embeddings: 0.5s, calculate empty prompt: 0.3s).
Steps: 20, Sampler: DPM++ 2M Karras, CFG scale: 7, Seed: 1017573880, Size: 512x512, Model hash: 6ce0161689, Model: v1-5-pruned-emaonly, Version: v1.7.0
Time taken: 10.5 sec.
A: 2.57 GB, R: 3.37 GB, Sys: 6.4/7.44141 GB (86.0%)
Total progress: 100%|███████████████████████████████████████████████████| 20/20 [00:09<00:00, 2.05it/s]
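To compare runs across power modes, the figures in this terminal output can be extracted programmatically. The sketch below is one possible approach and is not part of the tutorial: it applies regular expressions to the log text shown above (with the progress bar shortened). The `parse_sd_log` helper and the dictionary keys are hypothetical names chosen for illustration.

```python
import re

# Log text copied from the example output above; the progress bar is shortened.
log = (
    "Model loaded in 9.4s (load weights from disk: 1.8s, create model: 0.8s). "
    "Time taken: 10.5 sec. "
    "A: 2.57 GB, R: 3.37 GB, Sys: 6.4/7.44141 GB (86.0%) "
    "Total progress: 100%|███| 20/20 [00:09<00:00, 2.05it/s]"
)


def parse_sd_log(text):
    """Extract timing figures from the web UI terminal output.

    Hypothetical helper; assumes the log layout shown in this section.
    """
    load_s = float(re.search(r"Model loaded in ([\d.]+)s", text).group(1))
    taken_s = float(re.search(r"Time taken: ([\d.]+) sec", text).group(1))
    steps = int(re.search(r"(\d+)/(\d+) \[", text).group(2))
    it_per_s = float(re.search(r"([\d.]+)it/s", text).group(1))
    return {
        "model_load_s": load_s,
        "time_taken_s": taken_s,
        "steps": steps,
        "it_per_s": it_per_s,
        # Time spent in the sampling loop, derived from steps and rate.
        "sampling_s": steps / it_per_s,
    }


metrics = parse_sd_log(log)
for name, value in metrics.items():
    print(f"{name}: {value}")
```

The derived sampling time (20 steps at 2.05 it/s, a little under 10 seconds) is consistent with the `00:09` elapsed time shown in the progress bar.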

References
