What is the PVA?

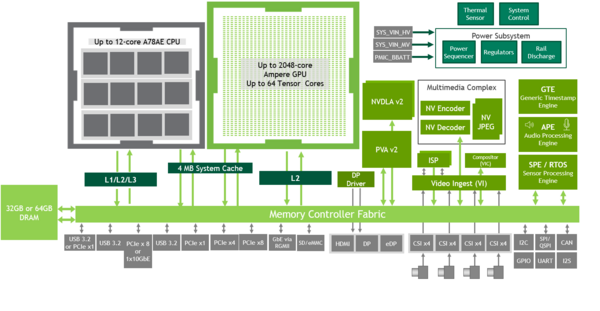

The NVIDIA Jetson Orin series integrates several specialized hardware accelerators designed to optimize performance for AI, machine learning, and multimedia applications. Here's an overview of the processing units and accelerators:

- ARM-based Central Processing Unit (CPU)

- Graphics Processing Unit (GPU)

- Deep Learning Accelerator (DLA)

- Programmable Vision Accelerator (PVA)

- Video Encoder (NVENC) and Video Decoder (NVDEC)

- Optical Flow Accelerator (OFA)

The Programmable Vision Accelerator (PVA) is a hardware accelerator featured in the NVIDIA Jetson Orin NX and AGX modules. Designed to execute computer vision algorithms with high performance and low power consumption, it is an ideal solution for embedded AI applications requiring energy efficiency, low latency, and real-time responsiveness.

PVA Architecture Overview

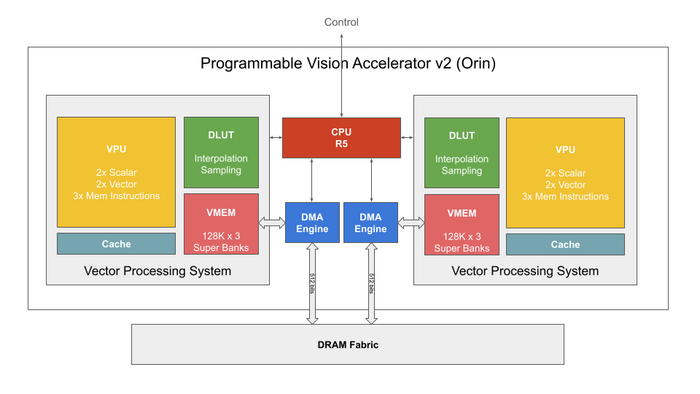

Unlike the general-purpose CPU and GPU, the PVA is a specialized engine tailored for image and vision tasks such as filtering, image pyramids, edge detection, and optical flow. In many edge computing scenarios, especially those involving vision workloads, the PVA can achieve better performance per watt than conventional CPU or GPU solutions.

Each PVA is equipped with two Vector Processing Systems (VPS), each with a VPU capable of issuing 2 scalar, 2 vector, and 3 memory operations simultaneously (7-way VLIW).

The Vector Processing Unit (VPU) is the PVA architecture's primary compute engine. It is a vector SIMD (Single Instruction, Multiple Data) VLIW (Very Long Instruction Word) digital signal processor (DSP) optimized for computer vision workloads.

The Decoupled Lookup Unit (DLUT) is integrated as a specialized hardware component to enhance parallel data processing. It enables high-throughput parallel lookup operations using a single copy of a lookup table, operating independently from the main VPU pipeline.
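To make the idea of a table lookup over many elements concrete, here is a minimal conceptual sketch in plain Python. It is not PVA code and does not model the DLUT hardware; the gamma-correction table, the `apply_lut` helper, and the sample pixel values are all hypothetical, chosen only to show that every element indexes the same single table and that each lookup is independent of the others.

```python
# Conceptual illustration only: plain Python, not PVA or DLUT code.
# The gamma table and helper name are hypothetical examples.

GAMMA = 2.2

# One shared 256-entry table mapping an 8-bit input to an 8-bit output.
LUT = [round(255 * (i / 255) ** (1 / GAMMA)) for i in range(256)]


def apply_lut(pixels, table):
    """Replace each pixel with its table entry.

    Every lookup reads the same table and does not depend on any other
    lookup, which is what makes this pattern easy to run in parallel.
    """
    return [table[p] for p in pixels]


row = [0, 64, 128, 255]
out = apply_lut(row, LUT)
print(out)
```

Because the lookups share no state, hardware can serve many of them at once from one copy of the table instead of duplicating it per lane.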

The VMEM (vector memory) provides local data storage that the VPU can access at high speed. By minimizing latency, it plays a critical role in the execution of image processing and vision algorithms.

The R5 CPU orchestrates each VPU task. It is responsible for configuring the DMA, optionally prefetching VPU programs into the I-cache, and initiating execution by coordinating the VPU-DMA pair.

Two DMA engines manage data movement across the various memory spaces. Each engine can reach transfer rates of up to 15 GB/s under light system load and approximately 10 GB/s under heavy load. The DMA engines work independently of the VPS, so memory transfers can proceed in parallel while the VPS computes.
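For a rough sense of scale, the sketch below estimates how long a single DMA engine would need to move one frame at the two quoted rates. The frame size (1920x1080, 8-bit grayscale), the helper name `transfer_time_us`, and the use of decimal gigabytes (1 GB = 1e9 bytes) are illustrative assumptions, not NVIDIA figures, and the estimate ignores setup and tiling overhead.

```python
# Back-of-the-envelope DMA transfer time for one frame.
# Assumptions (not from NVIDIA): 1920x1080 frame, 1 byte per pixel,
# 1 GB = 1e9 bytes, no setup or tiling overhead.


def transfer_time_us(num_bytes, rate_gb_per_s):
    """Return the ideal transfer time in microseconds at the given rate."""
    return num_bytes / (rate_gb_per_s * 1e9) * 1e6


FRAME_BYTES = 1920 * 1080  # one byte per pixel

light_load_us = transfer_time_us(FRAME_BYTES, 15.0)  # up to 15 GB/s
heavy_load_us = transfer_time_us(FRAME_BYTES, 10.0)  # about 10 GB/s

print(f"light load: {light_load_us:.1f} us")
print(f"heavy load: {heavy_load_us:.1f} us")
```

Under these assumptions the transfer takes roughly 138 microseconds at 15 GB/s and roughly 207 microseconds at 10 GB/s, which is small compared with a 33 ms frame period at 30 frames per second.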

In terms of compute capability, each PVA instance delivers 2048 GMACs (Giga Multiply-Accumulate Operations per Second) for INT8 operations (excluding DLUT usage), and 32 GMACs for FP32 operations [1].
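To put these peak figures in perspective, the following sketch computes the ideal, best-case time to run a small convolution over one frame. The workload (a 5x5 kernel over a 1920x1080 single-channel frame) and the helper name `ideal_time_us` are illustrative assumptions, and the result assumes perfect utilization, which real kernels will not reach.

```python
# Ideal compute time at the quoted peak rates.
# Assumptions (illustrative only): 5x5 convolution over a 1920x1080
# single-channel frame, one MAC per kernel tap, perfect utilization.

INT8_GMACS = 2048.0  # peak INT8 rate per PVA instance, from the text
FP32_GMACS = 32.0    # peak FP32 rate per PVA instance, from the text


def ideal_time_us(macs, gmacs_per_s):
    """Return the best-case execution time in microseconds."""
    return macs / (gmacs_per_s * 1e9) * 1e6


MACS_PER_FRAME = 1920 * 1080 * 5 * 5  # 51,840,000 MACs

int8_us = ideal_time_us(MACS_PER_FRAME, INT8_GMACS)
fp32_us = ideal_time_us(MACS_PER_FRAME, FP32_GMACS)

print(f"INT8: {int8_us:.1f} us")
print(f"FP32: {fp32_us:.1f} us")
```

Under these assumptions the lower bound is about 25 microseconds for INT8 and about 1620 microseconds for FP32, a 64x gap that follows directly from the ratio of the two peak rates.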

What is the PVA for?

The PVA shines in continuous, low-power scenarios, where it can remain active for extended periods without draining power reserves. Typical examples include:

- Surveillance

- Robotics

- Automotive perception systems

More Information

For more details, see the official NVIDIA PVA documentation.