Library Usage


GPU Accelerated Motion Detection provides a C++ library that includes several algorithms for motion detection. The architecture of the library is based on the Abstract Factory pattern, which abstracts the platform specifics.

Setting up the processing pipeline consists of five simple steps, as shown in the following picture:
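As a rough illustration of the Abstract Factory idea mentioned above, the following minimal sketch shows client code that depends only on abstract interfaces while a concrete factory chooses the platform. All names here (IDetector, IFactory, CpuFactory, GpuFactory, describe) are hypothetical and are not part of the rr:: API.

```cpp
#include <cassert>
#include <memory>
#include <string>

// Hypothetical interfaces for illustration only; these are NOT the rr:: classes.
struct IDetector {
  virtual ~IDetector() = default;
  virtual std::string backend() const = 0;
};

struct IFactory {
  virtual ~IFactory() = default;
  virtual std::unique_ptr<IDetector> makeDetector() const = 0;
};

// One concrete product per platform.
struct CpuDetector : IDetector {
  std::string backend() const override { return "cpu"; }
};
struct GpuDetector : IDetector {
  std::string backend() const override { return "gpu"; }
};

// One concrete factory per platform; each one creates the matching product.
struct CpuFactory : IFactory {
  std::unique_ptr<IDetector> makeDetector() const override {
    return std::make_unique<CpuDetector>();
  }
};
struct GpuFactory : IFactory {
  std::unique_ptr<IDetector> makeDetector() const override {
    return std::make_unique<GpuDetector>();
  }
};

// Client code sees only the abstract interfaces, never the platform classes.
std::string describe(const IFactory& factory) {
  std::unique_ptr<IDetector> detector = factory.makeDetector();
  assert(detector != nullptr);
  return detector->backend();
}
```

Switching platforms in this sketch means passing a different factory to describe(); the client function itself does not change.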

To use the library, include the following headers:

#include <rr/common.hpp>     // Datatypes and common classes
#include <rr/frameworks.hpp> // Framework specific objects
#include <rr/platforms.hpp>  // Platform specific objects

Create Factory

The first step is to create the factory that will be used to create algorithms. That can be done as follows:

std::shared_ptr<rr::IMotionFactory> factory = std::make_shared<rr::JetsonMotionDetection>();

This creates a factory that builds algorithms for a Jetson board.

Create Parameters

The parameters for each algorithm are passed using the Params class. We need to create a Params object for each algorithm, and that can be done as follows:

#define ROI_X1 0.25
#define ROI_X2 0.75
#define ROI_Y1 0
#define ROI_Y2 1
#define ROI_MIN_AREA 0.01
#define LEARNING_RATE 0.01
#define FILTER_SIZE 9
#define MIN_THRESHOLD 128
#define MAX_THRESHOLD 255

/*Define ROI*/
rr::Coordinate<float> roi_coord(ROI_X1, ROI_X2, ROI_Y1, ROI_Y2);
rr::ROI<float> roi(roi_coord, ROI_MIN_AREA, "roi");

/*Motion detection (Mog algorithm)*/
std::shared_ptr<rr::MogParams> motion_params = std::make_shared<rr::MogParams>();
motion_params->setLearningRate(LEARNING_RATE);
motion_params->addROI(roi);

/*Denoise algorithm*/
std::shared_ptr<rr::GaussianParams> denoise_params = std::make_shared<rr::GaussianParams>();
denoise_params->setSize(FILTER_SIZE);
denoise_params->setThresholdLimit(MIN_THRESHOLD);
denoise_params->setThresholdMax(MAX_THRESHOLD);
denoise_params->addROI(roi);

/*Blob detection*/
std::shared_ptr<rr::BlobParams> blob_params = std::make_shared<rr::BlobParams>();
blob_params->setMinArea(ROI_MIN_AREA);
blob_params->addROI(roi);

The library provides a Params object for each of the available algorithms. The options are as follows:

- Motion Detection: MogParams

- Denoise: GaussianParams

- Blob Detection: BlobParams

Create Algorithms

Once the parameters have been defined, we can proceed to create the algorithms as follows:

/*Motion Algorithm*/
std::shared_ptr<rr::IMotionDetection> motion_detection =
    factory->getMotionDetector(rr::IMotionDetection::Algorithm::MOG2, motion_params);

/*Denoise algorithm*/
std::shared_ptr<rr::IDenoise> denoise =
    factory->getDenoise(rr::IDenoise::Algorithm::GaussianFilter, denoise_params);

/*Blob detection*/
std::shared_ptr<rr::IBlobDetection> blob =
    factory->getBlobDetector(rr::IBlobDetection::Algorithm::BRTS, blob_params);

The available algorithms for each stage are the following:

- Motion Detection: MOG, MOG2

- Denoise: GaussianFilter

- Blob Detection: BRTS, CONTOURS, HA4

Create Intermediate Frames

Then, to hold the data for the intermediate steps we need to provide buffers. The factory gives you a method for that purpose.

#define WIDTH 640
#define HEIGHT 480

/*Create intermediate frames*/
std::shared_ptr<rr::Frame> mask = factory->getFrame(rr::Resolution(WIDTH, HEIGHT), rr::Format::FORMAT_GREY);
std::shared_ptr<rr::Frame> filtered = factory->getFrame(rr::Resolution(WIDTH, HEIGHT), rr::Format::FORMAT_GREY);

if (!mask || !filtered) {
  std::cout << "Failed to allocate frames" << std::endl;
  return 1;
}

Be careful with the format you use, because each algorithm supports different formats:

- Motion Detection: Input RGBA and GREY, output GREY

- Denoise: Input/Output GREY

- Blob Detection: Input GREY

Process Frames

Once everything is set up, we can start processing frames as follows:

#define CHECK_RET_VALUE(ret) \
  if (ret.IsError()) { \
    std::cout << ret << std::endl; \
    break; \
  }

while (true) {
  /*Get input data using one of the provided ways*/

  /*Apply algorithms*/
  CHECK_RET_VALUE(motion_detection->apply(input, mask, motion_params))
  CHECK_RET_VALUE(denoise->apply(mask, filtered, denoise_params))
  CHECK_RET_VALUE(blob->apply(filtered, motion_list, blob_params))

  /*Check for motion*/
  if (motion_list.size() != 0) {
    /*Do something with motion objects*/
  }
}

The returned motion_list is a vector with Motion objects that contain the coordinates of each moving object.
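Note that CHECK_RET_VALUE expands its argument twice, so a failing call would be evaluated again when it is printed. The sketch below shows one possible variant that stores the result first and still breaks out of the loop on the first error. Status, stage, CHECK_STATUS and runPipeline are hypothetical stand-ins written for this example; only IsError() and stream output are assumed from the macro above.

```cpp
#include <cassert>
#include <iostream>
#include <string>
#include <utility>

// Hypothetical stand-in for the library's return type.
class Status {
 public:
  Status() = default;
  explicit Status(std::string message)
      : error_(true), message_(std::move(message)) {}
  bool IsError() const { return error_; }
  friend std::ostream& operator<<(std::ostream& os, const Status& s) {
    return os << (s.error_ ? s.message_ : std::string("OK"));
  }

 private:
  bool error_ = false;
  std::string message_;
};

// Evaluates the expression once, prints the error and leaves the enclosing loop.
#define CHECK_STATUS(expr)                 \
  {                                        \
    auto&& status_ = (expr);               \
    if (status_.IsError()) {               \
      std::cout << status_ << std::endl;   \
      break;                               \
    }                                      \
  }

// Hypothetical processing stage that can be told to fail.
Status stage(const std::string& name, bool fail) {
  return fail ? Status(name + " failed") : Status();
}

// Runs three stages per frame and stops at the first error.
// Returns how many frames completed all three stages.
int runPipeline(int totalFrames, int failAtFrame) {
  int processed = 0;
  for (int frame = 0; frame < totalFrames; ++frame) {
    CHECK_STATUS(stage("motion", false));
    CHECK_STATUS(stage("denoise", frame == failAtFrame));
    CHECK_STATUS(stage("blob", false));
    ++processed;
  }
  return processed;
}
```

Because the macro relies on break, it only works directly inside a loop body, which matches how the processing loop above uses it.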

To feed the algorithms with input data, you can either create a Frame and fill it yourself or use one of the wrapper classes that the library provides around commonly used data types. Both options are described in the following sections.

Create a Frame and fill it with your data

std::shared_ptr<rr::Frame> input = factory->getFrame(rr::Resolution(WIDTH, HEIGHT), rr::Format::FORMAT_RGBA);

/*Get data pointer. It is an array with a pointer for each plane of the video format*/
std::vector<void*> data = input->getData();

/*Fill data*/
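The "fill data" step depends on the memory layout of the frame. As a minimal sketch, the helper below fills a packed RGBA plane with a single colour. It assumes that plane 0 is host-accessible and holds width * height pixels of 4 bytes each with no row padding; neither assumption is confirmed by the library documentation, and fillRgba is a hypothetical name.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Fills a packed RGBA plane with one colour.
// Assumptions (not confirmed by the library): planes[0] points to host memory
// containing width * height pixels, 4 bytes per pixel, without row padding.
void fillRgba(const std::vector<void*>& planes, std::size_t width,
              std::size_t height, std::uint8_t r, std::uint8_t g,
              std::uint8_t b, std::uint8_t a) {
  assert(!planes.empty() && planes[0] != nullptr);
  auto* pixels = static_cast<std::uint8_t*>(planes[0]);
  for (std::size_t i = 0; i < width * height; ++i) {
    pixels[4 * i + 0] = r;
    pixels[4 * i + 1] = g;
    pixels[4 * i + 2] = b;
    pixels[4 * i + 3] = a;
  }
}
```

With a real frame, the vector returned by getData() would take the place of the planes argument, provided the layout assumptions hold for your platform.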

Use one of the provided wrappers around different data types

For easier usage, we provide wrappers around common data types. The available wrappers are:

- GSTFrame: Wrapper around a GstVideoFrame.

GstVideoFrame videoframe;
std::shared_ptr<rr::Frame> input = std::make_shared<rr::GSTFrame>(&videoframe);

- GSTCudaDataFrame: Wrapper around a GstCudaData from GstCuda

GstCudaData data;
std::shared_ptr<rr::Frame> input = std::make_shared<rr::GSTCudaDataFrame>(&data);

- CVFrame: Wrapper around OpenCV Mat object

cv::Mat matrix;
std::shared_ptr<rr::Frame> input = std::make_shared<rr::CVFrame>(matrix);

- GpuMatFrame: Wrapper around OpenCV GpuMat object

cv::cuda::GpuMat matrix;
std::shared_ptr<rr::Frame> input = std::make_shared<rr::GpuMatFrame>(matrix);

Serialize

Finally, as an optional step, you can serialize the obtained motion objects list using the provided serializer. The following code will return a JSON string with the moving objects:

rr::JsonSerializer<float> serializer;
std::string json_str = serializer.serialize(motion_list);

The generated JSON string will look like this:

{

"ROIs": [{

"motion": [{

"x1": 0,

"x2": 0.048437498509883881,

"y1": 0.25208333134651184,

"y2": 0.34375

}, {

"x1": 0.92343747615814209,

"x2": 0.99843752384185791,

"y1": 0.44374999403953552,

"y2": 0.58541667461395264

}],

"name": "roi",

"x1": 0,

"x2": 1,

"y1": 0,

"y2": 1

}]

}
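The coordinates in this output appear to be normalized to the frame size (values between 0 and 1). Under that assumption, a small helper like the following sketch converts a box to pixel coordinates. NormalizedBox, PixelBox and toPixels are hypothetical names for this example, not library types.

```cpp
#include <cassert>
#include <cmath>

// Hypothetical plain structs for illustration; not the library's Motion type.
struct NormalizedBox {
  double x1, x2, y1, y2;  // assumed to be fractions of the frame size
};
struct PixelBox {
  long x1, x2, y1, y2;
};

// Scales normalized coordinates by the frame size and rounds to whole pixels.
PixelBox toPixels(const NormalizedBox& box, int width, int height) {
  assert(width > 0 && height > 0);
  return PixelBox{std::lround(box.x1 * width), std::lround(box.x2 * width),
                  std::lround(box.y1 * height), std::lround(box.y2 * height)};
}
```

For the first motion object above and a 640x480 frame, this gives x from 0 to 31 and y from 121 to 165.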

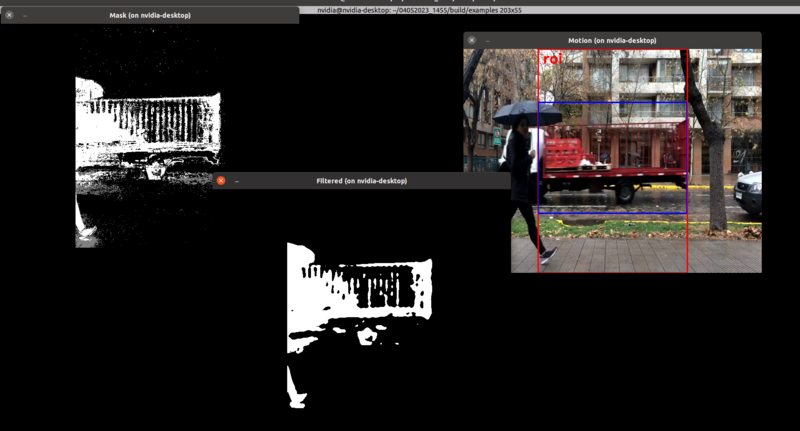

Example application

With the library we include a C++ application showing its usage. It can be found in the repository under examples/cvexample.cpp.

The application shows how to use the minimum area and ROI properties. For this example, the ROI was defined as (x1, x2, y1, y2) = (0.25, 0.75, 0, 1) and the minimum area as 0.01 (1%) of the total area of the image, so any object outside of the defined ROI or with an area smaller than 0.01 will be discarded.

To get it working:
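The filtering rule described above can be sketched as follows. This is an illustration of the idea only, not the library's implementation: Box, area, inside and filterBoxes are hypothetical names, and the sketch assumes that an object counts as inside the ROI only when its box lies entirely within it.

```cpp
#include <cassert>
#include <vector>

// Hypothetical box in normalized coordinates (fractions of the image size).
struct Box {
  float x1, x2, y1, y2;
};

// Area as a fraction of the whole image.
float area(const Box& b) { return (b.x2 - b.x1) * (b.y2 - b.y1); }

// Assumption: "inside" means the box is fully contained in the ROI.
bool inside(const Box& b, const Box& roi) {
  return b.x1 >= roi.x1 && b.x2 <= roi.x2 && b.y1 >= roi.y1 && b.y2 <= roi.y2;
}

// Keeps only boxes that are inside the ROI and at least minArea in size.
std::vector<Box> filterBoxes(const std::vector<Box>& boxes, const Box& roi,
                             float minArea) {
  assert(minArea >= 0.0f);
  std::vector<Box> kept;
  for (const Box& b : boxes) {
    if (inside(b, roi) && area(b) >= minArea) {
      kept.push_back(b);
    }
  }
  return kept;
}
```

With the ROI (0.25, 0.75, 0, 1) and a minimum area of 0.01, a box spanning x 0.3 to 0.5 and y 0.2 to 0.6 is kept, while a box left of the ROI or a 0.05 by 0.05 box is dropped.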

- Build the library following these instructions.

- Install GTK dependencies

sudo apt install libcanberra-gtk-module libcanberra-gtk3-module

- Go to the examples build directory:

cd build/examples

- Execute the application with

./cvexample

You should get an output like this:

The 3 windows show the 3 stages of motion detection, and the color window shows in RED the configured region of interest (ROI) and in BLUE the regions where movement is detected.