DeepStream Reference Designs - Reference Designs - Automatic Parking Lot Vehicle Registration

Introduction

This section explains and demonstrates the components that make up the reference design called "Automatic Parking Lot Vehicle Registration" (APLVR). The application demonstrates how the DeepStream Reference Designs operate by implementing monitoring and surveillance for a parking lot. The parking lot context is an arbitrary example; it serves to show the capabilities of the project and how flexibly the system can be adapted to other applications that require AI processing for computer vision tasks.

System Components

This section explains the main components of the APLVR application. The structure of the system follows the design presented in the High-Level Design section: it reuses the components of the main framework and adds custom modules to meet the business rules required by this application.

Camera Capture

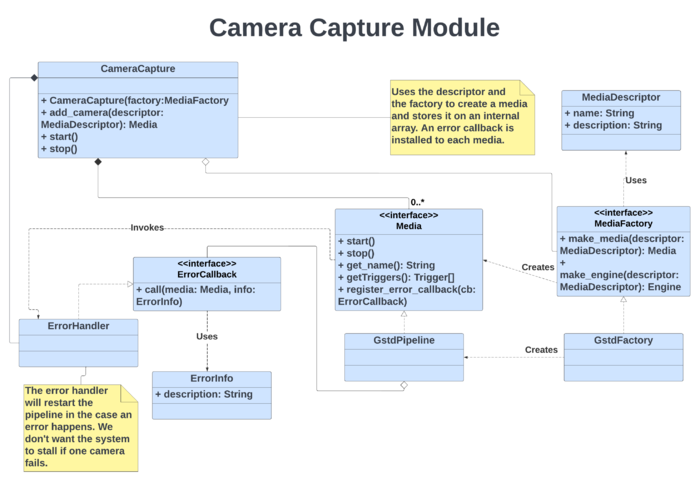

Class Diagram

Below is the class diagram that represents the design of the Camera Capture subsystem for the APLVR application.

Media Entity

To handle media, APLVR relies on the Python API of GStreamer Daemon. This library makes it easy to manipulate the GStreamer pipelines that carry the information received from the system's cameras. The Media and Media Factory entities abstract the methods that create, start, stop, and delete pipeline instances. The parking system uses three cameras that transmit video through the Real-Time Streaming Protocol (RTSP); they cover the entrance, the exit, and the parking sector of the parking lot, respectively. The video frames received from the three cameras are processed simultaneously in the DeepStream pipeline, which carries out the inference process. To do this, the media are described using GstInterpipe, a GStreamer plug-in that allows pipeline buffers and events to flow between two or more independent pipelines.
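The create, start, stop, and delete abstraction described above can be sketched as follows. This is a minimal illustration, not the project's actual code: the `Media` class shape, the `camera_description` helper, and the H.264 depayloader and parser are assumptions. The client is assumed to expose `pipeline_create`, `pipeline_play`, `pipeline_stop`, and `pipeline_delete`, as the `GstdClient` class of the GStreamer Daemon Python API (pygstc) does; a recording stand-in is used here so the sketch runs without a daemon.

```python
class Media:
    """Wraps one named GStreamer Daemon pipeline (hypothetical class)."""

    def __init__(self, client, name, description):
        # `client` is assumed to behave like pygstc's GstdClient.
        self._client = client
        self.name = name
        self.description = description

    def create(self):
        self._client.pipeline_create(self.name, self.description)

    def play(self):
        self._client.pipeline_play(self.name)

    def stop(self):
        self._client.pipeline_stop(self.name)

    def delete(self):
        self._client.pipeline_delete(self.name)


def camera_description(stream_id, url):
    """Build an RTSP capture description that ends in an interpipesink.

    The depayloader and parser assume an H.264 stream (an assumption).
    """
    return (
        f"rtspsrc location={url} ! rtph264depay ! h264parse "
        f"! interpipesink name={stream_id}"
    )


class RecordingClient:
    """Stand-in client that records calls instead of talking to gstd."""

    def __init__(self):
        self.calls = []

    def pipeline_create(self, name, description):
        self.calls.append(("create", name, description))

    def pipeline_play(self, name):
        self.calls.append(("play", name))

    def pipeline_stop(self, name):
        self.calls.append(("stop", name))

    def pipeline_delete(self, name):
        self.calls.append(("delete", name))


client = RecordingClient()
media = Media(
    client,
    "Entrance",
    camera_description("Entrance", "rtsp://192.168.55.100:8554/videoloop"),
)
media.create()
media.play()
media.stop()
media.delete()
print([call[0] for call in client.calls])
```

With a running daemon, `RecordingClient()` would be replaced by a real client instance, and the inference pipeline would read each camera through an `interpipesrc` element whose `listen-to` property names the matching `interpipesink`.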

AI Manager

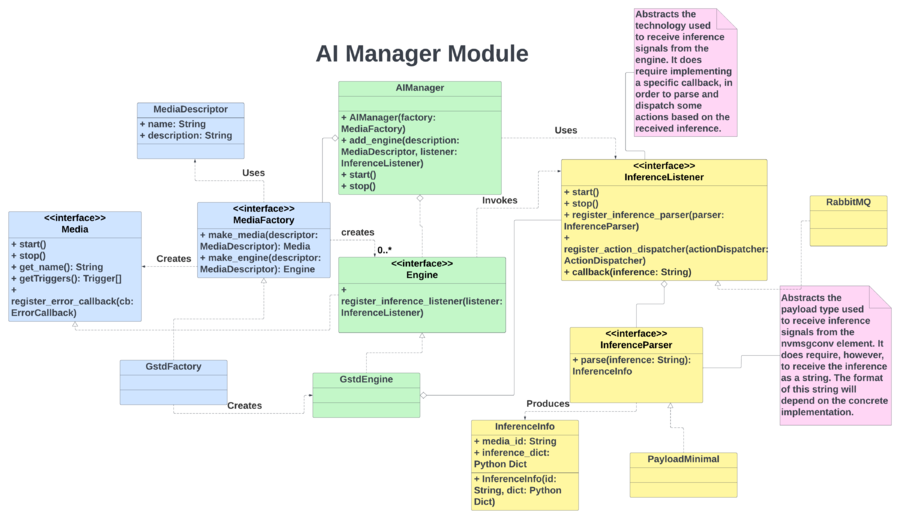

Class Diagram

Below is the class diagram that represents the design of the AI Manager for the APLVR application. As can be seen in the diagram, the AI Manager bases its operation on the entity called engine, which is an extension of the media module used by the Camera Capture subsystem. This extension allows the media entity to handle the inference operations required for the application. Also, the media descriptor for the engine abstracts the components of the DeepStream Pipeline.

DeepStream Models

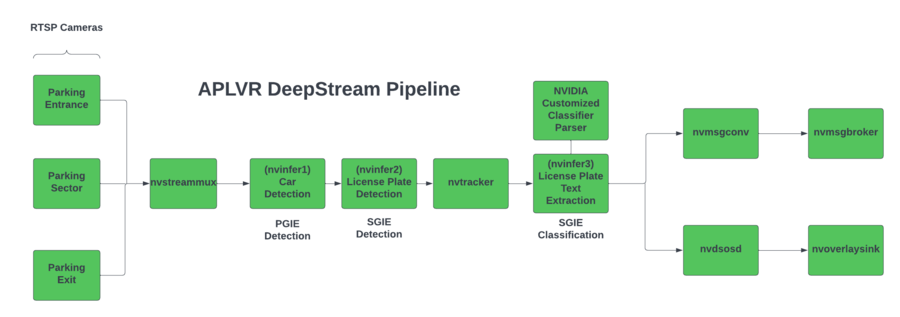

The figure shows the general layout of the DeepStream pipeline used by the APLVR application. For simplicity, some components are omitted to emphasize the most important ones.

As the pipeline shows, the input comes from the three RTSP cameras used by the APLVR parking system. The streams are multiplexed by the nvstreammux plugin, which forms a batch of frames from multiple input sources. The inference process follows, in which APLVR relies on three models to achieve its goal. The models are part of version 3.0 of the TAO Toolkit, a tool that lets you combine pre-trained NVIDIA models with your own data to create custom AI models for computer vision applications. The models shown in the pipeline are:

- Car Detection Model: This model performs the first inference on the data and produces the initial object detections; for APLVR purposes, only the detected vehicles are of interest. It is based on the TrafficCamNet neural network.

- License Plate Detection: Once the vehicles have been detected in the input frames, a vehicle license plate detection is carried out. For this, the LPDNet network is used. In the diagram, you can see that at the output of this model, the information obtained is passed through a tracker. This allows us to assign a unique identifier to each of the detected license plate instances, and then we can perform specific tasks within the context of the problem that APLVR seeks to solve.

- License Plate Recognition: Finally, once license plate detection is finished, this model provides the text of the characters on each detected license plate. It is based on the LPRNet network and is combined with a custom parser, which extracts the text based on the character dictionary included with the configuration files.

Note: The diagram shows the PGIE and SGIE notations for each of the models. These notations stand for "Primary GPU Inference Engine" and "Secondary GPU Inference Engine". Each model must also be given a series of hyperparameters that the neural network uses in its configuration. These configuration parameters are provided in files in txt format and are explained later in the Config Files section.

Once the inference process has been carried out, the information obtained can be displayed graphically. In the case of APLVR, the metadata is sent to the nvmsgconv and nvmsgbroker plugins, which allow us to manipulate the generated information. A more detailed explanation of each component of the APLVR DeepStream pipeline is outside the scope of this wiki; if you are interested, see the Plugin Guide in the DeepStream SDK documentation.

Inference Listener

For the inference listener, the APLVR application uses a DeepStream plugin called Gst-nvmsgbroker. When inserted in the DeepStream pipeline, this element sends the payload messages produced after the inference process to a receiving server over a specified communication protocol, in this case AMQP. On the server side, APLVR deploys a RabbitMQ broker locally, which can be installed via the rabbitmq-server package. On the client side, a Python application implements the APLVR custom inference listener and uses the Pika library to consume the broker's messages asynchronously. To establish communication, the client and the server must use the same broker credentials and configuration parameters, which are set in the configuration files used by the application. For more details about these configuration files, see the APLVR Config Files section.
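As a rough illustration of the client side, the sketch below consumes messages with Pika and forwards each decoded payload to a handler. It is not the project's listener: the function names are hypothetical, and for brevity it uses Pika's blocking adapter rather than an asynchronous one. Pika is imported lazily so that the callback helper can be exercised without the library or a broker.

```python
import json


def make_on_message(handler):
    """Build a Pika-style callback that decodes the JSON body and forwards it."""

    def on_message(channel, method, properties, body):
        handler(json.loads(body))

    return on_message


def consume(url, queue, handler):
    """Block and deliver every message that arrives on `queue` to `handler`."""
    import pika  # lazy import: only needed when a broker is actually used

    connection = pika.BlockingConnection(pika.URLParameters(url))
    channel = connection.channel()
    channel.basic_consume(
        queue=queue,
        on_message_callback=make_on_message(handler),
        auto_ack=True,
    )
    try:
        channel.start_consuming()
    finally:
        if connection.is_open:
            connection.close()


# Exercise the callback alone with a hand-written message body.
received = []
callback = make_on_message(received.append)
callback(None, None, None, b'{"sensorId": "Exit", "objects": []}')
print(received)
```

With a broker running, a call such as `consume("amqp://guest:guest@192.168.55.1:5672/", "ds_queue", print)` would print each payload, assuming the queue is already bound to the exchange used by the pipeline.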

Inference Parser

This custom module parses the information received by the inference listener. The format of the messages transmitted by the message broker is defined by the DeepStream plugin called Gst-nvmsgconv, which converts metadata into message payloads based on a schema. Since the DeepStream 5.0 release, two variations of this schema are supported: full and minimal. APLVR uses the minimal schema, since it includes the most relevant information the application needs to apply its logic. Below is an example of a payload in the minimal schema of this plugin:

{
  "version": "4.0",
  "id": 60,
  "@timestamp": "2022-03-28T23:49:46.377Z",
  "sensorId": "CAMERA_ID",
  "objects": [
    "18446744073709551615|357.758|285.072|411.014|317.846|lpd|#|90MZ205|0.972624",
    "0|579.805|133.696|639.437|244.258|Car",
    "1|212.615|102.074|513.669|400.402|Car"
  ]
}

The inference parser receives this format as a parameter, and after processing it, it returns a dictionary that is stored in an object of type Inference Info, which can be interpreted by any component of the system. Note the difference in the context and format of the stored information, which shows the importance of this module, since it facilitates the interpretation of the inferences received:

{
  "sensorId": "Exit",
  "date": "2022-05-25",
  "time": "22:44:25.019",
  "instances": [
    {
      "id": "10",
      "category": "lpd",
      "bbox": {
        "topleftx": 280.683,
        "toplefty": 155.192,
        "bottomrightx": 379.966,
        "bottomrighty": 206.743
      },
      "extra": [
        "#",
        "P370651",
        "0.979938"
      ]
    },
    {
      "id": "13",
      "category": "car",
      "bbox": {
        "topleftx": 137.766,
        "toplefty": 0.0,
        "bottomrightx": 494.419,
        "bottomrighty": 309.661
      },
      "extra": []
    }
  ]
}
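The conversion between the two formats shown above could look roughly like the following. This is a sketch inferred from the two examples, not the project's parser: the function names are hypothetical, and the field order of each object string (identifier, four bounding box coordinates, label, then any extra fields) is an assumption based on the sample payload.

```python
def parse_object(entry):
    """Split one pipe-separated object string into a structured instance."""
    fields = entry.split("|")
    x1, y1, x2, y2 = (float(value) for value in fields[1:5])
    return {
        "id": fields[0],
        "category": fields[5].lower(),
        "bbox": {
            "topleftx": x1,
            "toplefty": y1,
            "bottomrightx": x2,
            "bottomrighty": y2,
        },
        # Anything after the label (e.g. "#", plate text, confidence).
        "extra": fields[6:],
    }


def parse_payload(payload):
    """Convert a minimal-schema payload into the parsed dictionary layout."""
    date, _, time = payload["@timestamp"].partition("T")
    return {
        "sensorId": payload["sensorId"],
        "date": date,
        "time": time.rstrip("Z"),
        "instances": [parse_object(obj) for obj in payload.get("objects", [])],
    }


sample = {
    "version": "4.0",
    "id": 60,
    "@timestamp": "2022-03-28T23:49:46.377Z",
    "sensorId": "CAMERA_ID",
    "objects": [
        "18446744073709551615|357.758|285.072|411.014|317.846|lpd|#|90MZ205|0.972624",
        "0|579.805|133.696|639.437|244.258|Car",
    ],
}
parsed = parse_payload(sample)
print(parsed["date"], parsed["time"], parsed["instances"][0]["extra"])
```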

Actions

The following actions are available to the user of the APLVR application. Although the actions are specific to the parking lot context, they could be adapted for use in other designs, so these ideas can serve as a basis for different projects.

- Stdout Log: This action prints relevant information about the processed inferences to the system's terminal through the standard output. This information can serve as a runtime log of what is happening in the managed parking lot. An example of the output format is as follows:

A vehicle with license plate: XYZ123 is in sector "Zone A". Status: Parked. 2022-06-02, 20:56:04.143

- Dashboard: The dashboard action is very similar to the Stdout Log in the content and format of the information it handles. The difference is that the information is not printed on the terminal but is sent to a web dashboard through an HTTP request to the API that backs the dashboard. The APLVR application provides an API and a basic design for the web dashboard that an administrator of this parking system would use. The Additional Features section of this wiki has more information about the implementation of this web dashboard.

- Snapshot: This action creates a snapshot of the video that the corresponding media pipeline is transmitting. Like the other actions, it is linked to the camera on which the business rules (policies) are applied, and it runs when those policies are activated under certain conditions. The snapshot is stored as an image file in "jpeg" format with the generic name "snapshot_parkingSector_Date:Time.jpeg". The path where the image is stored can be set as a configuration parameter in the aplvr.yaml file. The following figure shows an example of a snapshot captured during the execution of the application using sample videos of a parking lot. In this case, the snapshot was triggered because a parked vehicle was detected in the area of the camera assigned to the parking spaces.
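The log line and the snapshot file name described in this list can be reproduced with two small helpers. This is only a sketch: the function names and parameters are hypothetical, and the way the sector, date, and time are substituted into the snapshot name is an assumption based on the generic name given above.

```python
import os


def format_log(plate, sector, status, date, time):
    """Build the log line in the format shown for the Stdout Log action."""
    return (
        f'A vehicle with license plate: {plate} is in sector "{sector}". '
        f"Status: {status}. {date}, {time}"
    )


def snapshot_filename(directory, sector, date, time):
    """Build a snapshot path following snapshot_parkingSector_Date:Time.jpeg."""
    return os.path.join(directory, f"snapshot_{sector}_{date}:{time}.jpeg")


print(format_log("XYZ123", "Zone A", "Parked", "2022-06-02", "20:56:04.143"))
print(snapshot_filename("/tmp/", "SectorA", "2022-06-02", "20:56:04.143"))
```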

Important Note: For a more detailed explanation of actions in the DeepStream Reference Designs project, including their class diagrams and code examples, we recommend reading the High-Level Design and Implementing Custom Actions sections.

Policies

The following policies are available to the user of the APLVR application. Given the nature of policies, the examples shown depend on the context of a parking system. APLVR uses a monitoring system with three cameras, which represent the entry, exit, and parking area sectors. So the policies explained below are based on the inferences received through each of these sector cameras, to apply the required business rules.

- Parking Entrance/Exit Policy: This policy determines the exact moment a vehicle enters or leaves the parking lot. Once the policy logic establishes that one of these two conditions is met, one of the previously explained actions can be executed, so the user can see the license plate of the vehicle that entered or left the parking lot, along with the date and time.

- Parking Sector Policy: As its name indicates, this policy verifies the status of the vehicles captured by the camera in the parking area: whether they are parked or have left that area and are about to leave the parking lot. The policy logic is based on timers and filters. The filters validate the accuracy of the information received, while the timers determine when to change state: if no inference is received for a vehicle in the parking sector for a given time, the vehicle is considered to have left that area.
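The combination of a filter and a timer used by the Parking Sector Policy can be illustrated with the sketch below. It is not the project's implementation: the class name, the method names, and the 5-second timeout are hypothetical, and timestamps are passed in explicitly so the behavior is deterministic.

```python
class SectorTracker:
    """Tracks which plates are parked, using a confidence filter and a timeout."""

    def __init__(self, threshold=0.7, timeout_s=5.0):
        self.threshold = threshold    # filter: minimum accepted confidence
        self.timeout_s = timeout_s    # timer: seconds without inferences
        self._last_seen = {}          # plate -> time of last accepted inference

    def observe(self, plate, confidence, now):
        """Record a detection; return "Parked" the first time a plate is accepted."""
        if confidence < self.threshold:
            return None
        is_new = plate not in self._last_seen
        self._last_seen[plate] = now
        return "Parked" if is_new else None

    def expire(self, now):
        """Return the plates not seen for `timeout_s` seconds and forget them."""
        gone = [
            plate
            for plate, seen in self._last_seen.items()
            if now - seen >= self.timeout_s
        ]
        for plate in gone:
            del self._last_seen[plate]
        return gone


tracker = SectorTracker(threshold=0.7, timeout_s=5.0)
events = [
    tracker.observe("XYZ123", 0.50, now=0.0),   # rejected by the filter
    tracker.observe("XYZ123", 0.90, now=1.0),   # first accepted detection
    tracker.observe("XYZ123", 0.95, now=2.0),   # already known, refreshes timer
]
left_early = tracker.expire(now=6.0)            # only 4 s since last detection
left_late = tracker.expire(now=7.0)             # 5 s elapsed, vehicle has left
print(events, left_early, left_late)
```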

Important Note: For a more detailed explanation of policies in the DeepStream Reference Designs project, including their class diagrams and code examples, we recommend reading the High-Level Design and Implementing Custom Policies sections.

Config Files

Configuration files allow you to adjust the required parameters before running the application. Each one serves a specific purpose, depending on the component it references, and they are all located in the project's config files directory. APLVR provides a base configuration, and you are free to change any parameters you consider necessary; make sure, however, that the modified values are correct and will not cause the system to malfunction. If you have questions or problems while experimenting with the configuration parameters, you can contact our support team.

APLVR Config

APLVR provides a general configuration file in YAML format. This file has different sections that are explained below. Initially, we have a DeepStream configuration section, where you can enter as a parameter the path where the configuration files of the models are located. In addition, the platform on which the application will run can be entered as a parameter, where the options are "xavier" and "nano". This way, APLVR knows which model configuration file to use according to the specific hardware platform.

deepstream:
  config_files_path: "/home/nvidia/automatic-vehicle-registration/config_files/"
  jetson_platform: "xavier"

Another section of this configuration file is for GStreamer Daemon (Gstd). The parameters include the IP address and the port where the service will be launched.

gstd:
  ip: "localhost"
  port: 5000

The RabbitMQ section configures all the parameters required by the AMQP protocol to establish communication with the message broker. In this way, APLVR can consume the messages generated by the DeepStream pipeline.

Important Note: These configuration parameters must match the ones entered in the AMQP configuration file.

rabbitmq:
  url: "amqp://guest:guest@192.168.55.1:5672/"
  exchange: "ds_exchange"
  queue: "ds_queue"
  routing_key: "ds_topic"

The next section configures the cameras that send information through RTSP. The label used for this section is "streams", and APLVR uses this information to generate the respective media instances. Each stream has a unique identifier, the URL of the RTSP stream, and the triggers that will be activated on it.

Important Note: The stream identifiers must match the ones set in the Msgconv Config file, which is also in the config files directory.

streams:
  - id: "Entrance"
    url: "rtsp://192.168.55.100:8554/videoloop"
    triggers:
      - "vehicle_dashboard"
      - "vehicle_stdout"
  - id: "Exit"
    url: "rtsp://192.168.55.100:8555/videoloop"
    triggers:
      - "vehicle_snapshot"
      - "vehicle_dashboard"
  - id: "SectorA"
    url: "rtsp://192.168.55.100:8556/videoloop"
    triggers:
      - "vehicle_snapshot"
      - "vehicle_dashboard"

The policies section configures the parameters that the system policies use. The application logic interprets this information to build the respective policies. The threshold value and the categories are compared with the values generated during the inference process, so that the received metadata can be filtered according to the application requirements.

policies:
  - name: "vehicle_policy"
    categories:
      - "lpd"
    threshold: 0.7
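The filtering that these parameters drive might be expressed as in the sketch below. It assumes, based on the parsed inference example in this page, that the confidence is the last element of an instance's "extra" list; the function name is hypothetical and the actual policy logic of the project may differ.

```python
def matches_policy(instance, policy):
    """Return True if the instance has an accepted category and confidence."""
    if instance["category"] not in policy["categories"]:
        return False
    extra = instance.get("extra", [])
    if not extra:
        return False  # no confidence value available
    try:
        confidence = float(extra[-1])
    except ValueError:
        return False
    return confidence >= policy["threshold"]


policy = {"name": "vehicle_policy", "categories": ["lpd"], "threshold": 0.7}
plate = {"id": "10", "category": "lpd", "extra": ["#", "P370651", "0.979938"]}
car = {"id": "13", "category": "car", "extra": []}
print(matches_policy(plate, policy), matches_policy(car, policy))
```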

The actions section defines the actions that APLVR can execute when the assigned policies are activated. For the dashboard action, you must indicate the URL of the API where the web page is hosted, in order to establish communication with it. For the snapshot action, you can indicate the path where the generated image file will be stored.

actions:
  - name: "dashboard"
    url: "http://192.168.55.100:4200/dashboard"
  - name: "stdout"
  - name: "snapshot"
    path: "/tmp/"

Finally, the triggers section configures the system triggers. Each trigger consists of a name, a list of actions, and a list of policies. The trigger name is the one referenced by each configured stream, so that the defined policies are evaluated on that stream and, if they are activated, the associated actions are executed.

triggers:
  - name: "vehicle_dashboard"
    actions:
      - "dashboard"
    policies:
      - "vehicle_policy"
  - name: "vehicle_snapshot"
    actions:
      - "snapshot"
    policies:
      - "vehicle_policy"
  - name: "vehicle_stdout"
    actions:
      - "stdout"
    policies:
      - "vehicle_policy"
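Because streams refer to triggers by name, and triggers refer to actions and policies by name, a typo in one section silently breaks the chain. A check along the following lines can catch that before the application starts. It is a hypothetical helper, not part of APLVR, and it operates on the configuration after it has been loaded into a Python dictionary.

```python
def find_dangling_references(config):
    """List every name that is referenced but not defined in the config."""
    errors = []
    actions = {item["name"] for item in config.get("actions", [])}
    policies = {item["name"] for item in config.get("policies", [])}
    triggers = {item["name"] for item in config.get("triggers", [])}

    for trigger in config.get("triggers", []):
        trigger_name = trigger["name"]
        for name in trigger.get("actions", []):
            if name not in actions:
                errors.append(f"trigger {trigger_name}: unknown action {name}")
        for name in trigger.get("policies", []):
            if name not in policies:
                errors.append(f"trigger {trigger_name}: unknown policy {name}")

    for stream in config.get("streams", []):
        stream_id = stream["id"]
        for name in stream.get("triggers", []):
            if name not in triggers:
                errors.append(f"stream {stream_id}: unknown trigger {name}")
    return errors


config = {
    "streams": [
        {"id": "Entrance", "triggers": ["vehicle_stdout"]},
        {"id": "Exit", "triggers": ["vehicle_snapshot"]},  # not defined below
    ],
    "policies": [{"name": "vehicle_policy", "categories": ["lpd"], "threshold": 0.7}],
    "actions": [{"name": "stdout"}],
    "triggers": [
        {"name": "vehicle_stdout", "actions": ["stdout"], "policies": ["vehicle_policy"]},
    ],
}
problems = find_dangling_references(config)
print(problems)
```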

Msgconv Config

For the purposes of the APLVR application, this file allows providing an identifier to each of the system's input cameras. Remember that this module is in charge of transforming the metadata into the payload messages that the broker will transmit, so with this assigned identifier, it is possible to determine which camera the generated inference comes from. The format of the content of this configuration file is shown below:

[sensor0]
enable=1
type=Camera
id=Entrance

[sensor1]
enable=1
type=Camera
id=Exit

[sensor2]
enable=1
type=Camera
id=SectorA
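Since the stream identifiers in the APLVR configuration must match the sensor identifiers in this file, a quick check such as the following can help. It is a sketch with hypothetical function names, and it assumes the file can be read as INI text with Python's `configparser`.

```python
import configparser


def sensor_ids(msgconv_text):
    """Return the id of every [sensorN] section in the Msgconv config text."""
    parser = configparser.ConfigParser()
    parser.read_string(msgconv_text)
    return [
        parser[section]["id"]
        for section in parser.sections()
        if section.startswith("sensor")
    ]


def missing_sensors(stream_ids, msgconv_text):
    """Return the stream ids that have no matching sensor id."""
    known = set(sensor_ids(msgconv_text))
    return [stream_id for stream_id in stream_ids if stream_id not in known]


MSGCONV = """
[sensor0]
enable=1
type=Camera
id=Entrance

[sensor1]
enable=1
type=Camera
id=Exit
"""

print(sensor_ids(MSGCONV))
print(missing_sensors(["Entrance", "Exit", "SectorA"], MSGCONV))
```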

AMQP Config

This file will be loaded by the nvmsgbroker plugin, and its purpose is to establish the parameters of the AMQP protocol, which allow us to make a connection between the message broker and the application that consumes the messages (in this case APLVR). It is important to note that the configuration parameters of this file must match those shown in the APLVR configuration file, in the RabbitMQ section, since in this way it is possible to make the connection between the producer and consumer that this protocol requires. The format of the content of this configuration file is shown below:

[message-broker]
password = guest
hostname = 192.168.55.1
username = guest
port = 5672
exchange = ds_exchange
topic = ds_topic
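The requirement that this file agree with the RabbitMQ section of the APLVR configuration can be verified mechanically. The sketch below is a hypothetical helper, not part of APLVR. It assumes that the "topic" key here corresponds to "routing_key" in the YAML file, as the sample values suggest, and that both sides name the broker host the same way.

```python
import configparser
from urllib.parse import urlsplit


def amqp_mismatches(rabbitmq, amqp_text):
    """Return the [message-broker] keys that disagree with the rabbitmq section."""
    parser = configparser.ConfigParser()
    parser.read_string(amqp_text)
    broker = parser["message-broker"]

    url = urlsplit(rabbitmq["url"])
    expected = {
        "hostname": url.hostname,
        "port": str(url.port),
        "username": url.username,
        "password": url.password,
        "exchange": rabbitmq["exchange"],
        "topic": rabbitmq["routing_key"],
    }
    return [key for key, value in expected.items() if broker.get(key) != value]


RABBITMQ = {
    "url": "amqp://guest:guest@192.168.55.1:5672/",
    "exchange": "ds_exchange",
    "queue": "ds_queue",
    "routing_key": "ds_topic",
}
AMQP = """
[message-broker]
password = guest
hostname = 192.168.55.1
username = guest
port = 5672
exchange = ds_exchange
topic = ds_topic
"""

print(amqp_mismatches(RABBITMQ, AMQP))
```

An empty list means the producer and the consumer are configured consistently; any returned key names point to the values that need to be aligned.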

Supported Platforms

The APLVR application supports the NVIDIA Jetson Nano and Jetson Xavier NX platforms. This does not prevent the project from being ported to another Jetson platform; it only means that the provided configuration files contain parameters that were tested on those two platforms. As explained previously in this wiki, APLVR relies on three models to carry out the inference process, so configuration files in txt format are included for the TrafficCamNet, LPD, and LPR models. In addition, APLVR provides a label file required by the TrafficCamNet model and a character dictionary that the custom parser uses when extracting the license plate text.

Model Accuracy: The TrafficCamNet and LPD models can be calibrated to run with 8-bit integer precision (INT8). This calibration is enabled by default in the configuration files for the Jetson Xavier NX, since that platform supports it. On the Jetson Nano, which does not, these two models are configured with 16-bit floating-point precision (FP16). According to our tests, license plate detection was more accurate with the INT8-calibrated models on the Jetson Xavier NX than with the FP16 models on the Jetson Nano, although the difference does not affect the general operation of the system. The LPR network is configured with FP16 on both platforms.

Additional Features

Web Dashboard

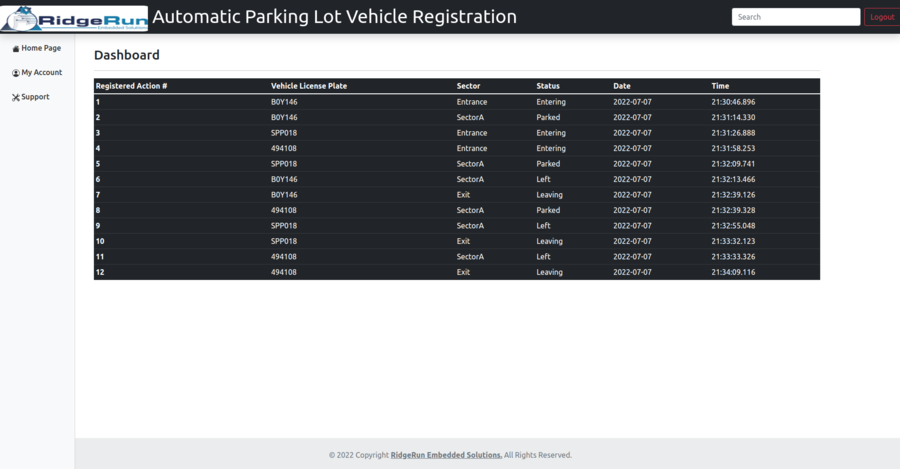

APLVR includes a Web Dashboard that the parking system administrator can use to check, in real time, the logs generated with the status (entering, leaving, parked, etc.) of the vehicles in the parking lot. The dashboard consists of a table that shows, for each registered action, the vehicle license plate, the parking sector (associated camera) where the report was generated, and the status, date, and time of registration. To configure the Web Dashboard, modify the parameters of the dashboard action in the aplvr.yaml configuration file. For instructions on how to run this Web Dashboard, refer to the Evaluating the Project section. The following figure shows an example of what the Web Dashboard looks like, using a sample sequence of vehicles in a parking lot.

Important Note: The Web Dashboard serves as an example of how users can take advantage of the information generated by the inference in their own designs or applications. It is not a web page with full navigation features; it only illustrates the use of the dashboard action.
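To give an idea of what the dashboard action's HTTP request might look like, the sketch below builds (but does not send) a POST request with a JSON body using only the standard library. The JSON field names and the function name are hypothetical; the actual API of the project's dashboard may expect a different payload.

```python
import json
import urllib.request


def build_dashboard_request(url, plate, sector, status, date, time):
    """Build a JSON POST request describing one vehicle event."""
    body = json.dumps(
        {
            "plate": plate,      # hypothetical field names
            "sector": sector,
            "status": status,
            "date": date,
            "time": time,
        }
    ).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


request = build_dashboard_request(
    "http://192.168.55.100:4200/dashboard",
    "XYZ123", "SectorA", "Parked", "2022-06-02", "20:56:04.143",
)
print(request.get_method(), request.full_url)
```

Passing the request to `urllib.request.urlopen` would send it, provided the dashboard API is reachable at the configured URL.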

Automation Script

APLVR provides an automation script that handles the process initialization and termination tasks required by the application. To operate, APLVR requires that the RabbitMQ server is up and that GStreamer Daemon is running. To simplify execution, the script starts these services for you. It also captures any interruption detected during execution so that the processes it started are terminated cleanly. The script is named run_aplvr.sh, and you run it as shown below:

$ sudo ./run_aplvr.sh

It is necessary to execute the script with privileged permissions (sudo command) since it is a requirement of the command necessary to start the RabbitMQ server.

Demonstration