Pruning for AI on FPGA

Introduction

Pruning is a model optimisation technique that removes weights that do not significantly impact the inference result. It reduces both the storage and the computations required for inference. However, these savings are only realised when the pruned model is stored in specialised (sparse) data structures and processed by hardware that can take advantage of them.
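As a minimal sketch of the idea, the snippet below zeroes every weight whose magnitude falls under a threshold. Magnitude thresholding is only one common pruning criterion and is an assumption here, not necessarily the method used elsewhere in this wiki; the names `prune_by_magnitude`, `sparsity` and the threshold value are hypothetical.

```python
def prune_by_magnitude(weights, threshold):
    """Return a copy of `weights` with small-magnitude entries set to zero.

    Assumption: a weight is "insignificant" when |w| < threshold.
    """
    return [[w if abs(w) >= threshold else 0.0 for w in row] for row in weights]


def sparsity(weights):
    """Fraction of entries that are exactly zero."""
    total = sum(len(row) for row in weights)
    zeros = sum(1 for row in weights for w in row if w == 0.0)
    return zeros / total


# Illustrative 3x3 weight matrix (made-up values).
weights = [
    [0.80, -0.02, 0.01],
    [0.03, -0.65, 0.00],
    [-0.01, 0.04, 0.90],
]

pruned = prune_by_magnitude(weights, threshold=0.05)
print(pruned)
print(sparsity(pruned))
```

After pruning, most entries are zero, which is what makes a sparse representation worthwhile: only the surviving weights need to be stored and multiplied.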

This wiki explains sparse data representations, acceleration considerations, and how an FPGA can be used to accelerate sparse operations.

Data Representations

Matrix computations on pruned models rely on sparse matrix representations, which store only the non-zero values together with the rows and columns where they occur. There are multiple ways to encode the rows, columns and values:

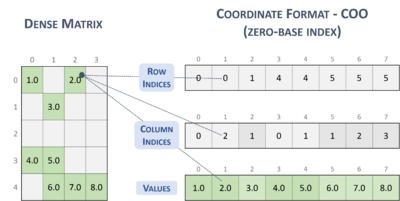

Coordinate (COO)

The COO format encodes a dense matrix as a sparse matrix using three arrays:

- Row indices

- Column indices

- Values

The three arrays have the same length, one entry per stored value, and the same index i selects the row, column and value of a given element in all three.
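The following sketch builds the three COO arrays from a small dense matrix. It is illustrative only: the function name `dense_to_coo` and the sample matrix are hypothetical, and plain Python lists are used instead of any particular sparse library.

```python
def dense_to_coo(dense):
    """Encode a dense matrix (list of rows) as three same-sized COO arrays."""
    row_indices, column_indices, values = [], [], []
    for r, row in enumerate(dense):
        for c, value in enumerate(row):
            if value != 0:  # only non-zero entries are stored
                row_indices.append(r)
                column_indices.append(c)
                values.append(value)
    return row_indices, column_indices, values


# Illustrative 3x4 matrix with four non-zero entries.
dense = [
    [5, 0, 0, 0],
    [0, 0, 3, 0],
    [0, 7, 0, 1],
]

row_indices, column_indices, values = dense_to_coo(dense)
print(row_indices)     # [0, 1, 2, 2]
print(column_indices)  # [0, 2, 1, 3]
print(values)          # [5, 3, 7, 1]
```

Entry i of each array describes the same element: for example, i = 2 gives row 2, column 1 and value 7.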

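To find where the i-th stored element lives in the dense matrix, when that matrix is laid out as a flat row-major array, use the following pseudocode, where leading_dimension is the row stride (the number of columns for a contiguous matrix):

index_1d = row_indices[i] * leading_dimension + column_indices[i]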
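A short sketch of how the index formula above can be used to rebuild the flat, row-major dense array from the COO arrays. The function name `coo_to_flat`, the parameter `num_rows` and the sample data are assumptions made for illustration.

```python
def coo_to_flat(row_indices, column_indices, values, num_rows, leading_dimension):
    """Scatter COO entries into a flat row-major array of zeros."""
    flat = [0] * (num_rows * leading_dimension)
    for i in range(len(values)):
        # Same formula as the pseudocode: row offset plus column offset.
        index_1d = row_indices[i] * leading_dimension + column_indices[i]
        flat[index_1d] = values[i]
    return flat


# COO arrays of an illustrative 3x4 matrix (4 columns, so leading_dimension = 4).
flat = coo_to_flat(
    row_indices=[0, 1, 2, 2],
    column_indices=[0, 2, 1, 3],
    values=[5, 3, 7, 1],
    num_rows=3,
    leading_dimension=4,
)
print(flat)  # [5, 0, 0, 0, 0, 0, 3, 0, 0, 7, 0, 1]
```

The loop touches only the stored entries, so its cost scales with the number of non-zero values rather than with the full matrix size.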

RidgeRun Services

RidgeRun has expertise in offloading processing algorithms using FPGAs, from Image Signal Processing to AI offloading. Our services include:

- Algorithm Acceleration using FPGAs.

- Image Signal Processing IP Cores.

- Linux Device Drivers.

- Low Power AI Acceleration using FPGAs.

- Accelerated C++ Applications.

For these and other services, contact us at https://www.ridgerun.com/contact.