ON Semiconductor AR0231 Linux driver

ON Semiconductor AR0231 image sensor features

The ON Semiconductor AR0231 is an image sensor with the following features:

- Key Technologies:

- Automotive Grade Backside Illuminated Pixel

- LED Flicker Mitigation Mode

- Sensor Fault Detection for ASIL-B Compliance

- Up to 4-exposure HDR at 1928 × 1208 and 30 fps or 3-exposure HDR at 1928 × 1208 and 40 fps

- Latest 3.0 µm Back Side Illuminated (BSI) Pixel with ON Semiconductor DR-Pix™ Technology

- Data Interfaces: up to 4-lane MIPI CSI-2, Parallel, or up to 4-lane High Speed Pixel Interface (HiSPi) Serial Interface (SLVS and HiVCM)

- Advanced HDR with Flexible Exposure

- Ratio Control

- LED Flicker Mitigation (LFM) Mode

- Selectable Automatic or User Controlled Black Level Control

- Frame to Frame Switching among up to 4 Contexts to Enable Multi-function Systems

- Spread-spectrum Input Clock Support

- Multi-Camera Synchronization Support

- Multiple CFA Options

RidgeRun has developed a driver for the Jetson TX2 platform with the following support:

- L4T 28.2.1 and Jetpack 3.3

- V4L2 media controller driver

- Tested resolution 1928 x 1208 @ 30 fps

- Capture with nvcamerasrc using the ISP.

- Tested with the Sekonix SF3326 and SF3325

Enabling the driver

In order to use this driver, you have to patch and compile the kernel source using JetPack:

- Follow the instructions in Compiling_Jetson_TX1/TX2_source_code#Downloading_the_code to get the kernel source code.

- Once you have the source code, apply the patch listed below to add the changes required for the AR0231 camera at the kernel and dtb level.

3.3_ar0231.patch

- Follow the instructions in Compiling_Jetson_TX1/TX2_source_code#Build_Kernel for building the kernel, and then flash the image.

Make sure to enable AR0231 driver support:

make menuconfig

-> Device Drivers

-> Multimedia support

-> Encoders, decoders, sensors, and other helper chips

-> <*> ar0231 camera sensor support

Using the driver

GStreamer examples

Capture

gst-launch-1.0 nvcamerasrc ! "video/x-raw(memory:NVMM),width=(int)1928,height=(int)1208,format=(string)I420,framerate=(fraction)30/1" ! autovideosink

The sensor will capture in the 1928x1208@30 mode:

Setting pipeline to PAUSED ...
Available Sensor modes :
1928 x 1208 FR=30.000000 CF=0x1209208a10 SensorModeType=4 CSIPixelBitDepth=12 DynPixelBitDepth=12
Pipeline is live and does not need PREROLL ...
NvCameraSrc: Trying To Set Default Camera Resolution. Selected sensorModeIndex = 0 WxH = 1928x1208 FrameRate = 30.000000 ...
Setting pipeline to PLAYING ...
New clock: GstSystemClock

Video Encoding

CAPS="video/x-raw(memory:NVMM), width=(int)1928, height=(int)1208, format=(string)I420, framerate=(fraction)30/1"
gst-launch-1.0 nvcamerasrc sensor-id=0 num-buffers=500 ! $CAPS ! omxh264enc ! mpegtsmux ! filesink location=test.ts

The sensor will capture in the 1928x1208@30 mode, and the pipeline will encode the video and save it to the test.ts file.
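When the same capture settings are reused across several pipelines, it can help to build the caps string in one place. The following is a minimal Python sketch and is not part of the driver. The helper names `nvmm_caps` and `encode_command` are hypothetical, and the only GStreamer elements and properties used are those already shown in the pipelines above.

```python
import shlex

def nvmm_caps(width=1928, height=1208, fps=30, fmt="I420"):
    """Build the NVMM caps string used by the pipelines on this page."""
    return (
        "video/x-raw(memory:NVMM), "
        f"width=(int){width}, height=(int){height}, "
        f"format=(string){fmt}, framerate=(fraction){fps}/1"
    )

def encode_command(location, sensor_id=0, num_buffers=500):
    """Return the H.264 encoding pipeline as an argument list (hypothetical helper)."""
    return [
        "gst-launch-1.0", "nvcamerasrc",
        f"sensor-id={sensor_id}", f"num-buffers={num_buffers}",
        "!", nvmm_caps(),
        "!", "omxh264enc", "!", "mpegtsmux",
        "!", "filesink", f"location={location}",
    ]

cmd = encode_command("test.ts")
# Print a shell-quoted form that can be pasted into a terminal on the board.
print(shlex.join(cmd))
```

On the target, the list could be passed to `subprocess.run(cmd, check=True)`, assuming `gst-launch-1.0` is available in the PATH.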

Performance

ARM Load

Tegrastats displays the following output while capturing with the sensor:

RAM 1717/7855MB (lfb 1310x4MB) CPU [2%@2035,off,off,2%@2035,7%@2035,4%@2035] BCPU@46C MCPU@46C GPU@44C PLL@46C Tboard@41C Tdiode@44.5C PMIC@100C thermal@45.2C VDD_IN 3198/3217 VDD_CPU 456/456 VDD_GPU 228/228 VDD_SOC 762/761 VDD_WIFI 38/38 VDD_DDR 806/806
RAM 1717/7855MB (lfb 1310x4MB) CPU [4%@2035,off,off,2%@2035,3%@2035,3%@2035] BCPU@46C MCPU@46C GPU@44C PLL@46C Tboard@41C Tdiode@44.5C PMIC@100C thermal@45.4C VDD_IN 3236/3223 VDD_CPU 456/456 VDD_GPU 228/228 VDD_SOC 686/736 VDD_WIFI 38/38 VDD_DDR 806/806
RAM 1717/7855MB (lfb 1310x4MB) CPU [4%@2035,off,off,1%@2035,5%@2035,5%@2035] BCPU@46C MCPU@46C GPU@44C PLL@46C Tboard@41C Tdiode@44.5C PMIC@100C thermal@45.2C VDD_IN 3236/3226 VDD_CPU 456/456 VDD_GPU 228/228 VDD_SOC 762/742 VDD_WIFI 38/38 VDD_DDR 806/806
RAM 1717/7855MB (lfb 1310x4MB) CPU [6%@2035,off,off,1%@2035,3%@2035,2%@2035] BCPU@46C MCPU@46C GPU@44C PLL@46C Tboard@41C Tdiode@44.5C PMIC@100C thermal@45.2C VDD_IN 3198/3220 VDD_CPU 456/456 VDD_GPU 228/228 VDD_SOC 762/746 VDD_WIFI 38/38 VDD_DDR 806/806
RAM 1717/7855MB (lfb 1310x4MB) CPU [5%@2035,off,off,1%@2035,3%@2035,3%@2035] BCPU@46C MCPU@46C GPU@44C PLL@46C Tboard@41C Tdiode@44.5C PMIC@100C thermal@45.2C VDD_IN 3236/3223 VDD_CPU 456/456 VDD_GPU 228/228 VDD_SOC 762/749 VDD_WIFI 38/38 VDD_DDR 806/806
RAM 1717/7855MB (lfb 1310x4MB) CPU [5%@2035,off,off,1%@2035,2%@2035,2%@2035] BCPU@46C MCPU@46C GPU@44.5C PLL@46C Tboard@41C Tdiode@44.5C PMIC@100C thermal@45.2C VDD_IN 3198/3219 VDD_CPU 456/456 VDD_GPU 228/228 VDD_SOC 762/751 VDD_WIFI 38/38 VDD_DDR 806/806
RAM 1717/7855MB (lfb 1310x4MB) CPU [5%@2035,off,off,1%@2035,4%@2035,2%@2035] BCPU@46C MCPU@46C GPU@44C PLL@46C Tboard@41C Tdiode@44.5C PMIC@100C thermal@45.2C VDD_IN 3198/3217 VDD_CPU 456/456 VDD_GPU 228/228 VDD_SOC 762/752 VDD_WIFI 38/38 VDD_DDR 787/803
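To turn tegrastats samples like these into a single ARM load figure, the per-core percentages in the `CPU [...]` field can be averaged over the cores that are online. The snippet below is a minimal sketch in Python. The function name `parse_cpu_loads` is hypothetical, and it assumes the field layout shown in the output above (`load%@frequency` for online cores, `off` for offline ones).

```python
import re

def parse_cpu_loads(line):
    """Return the load percentage of each online core in one tegrastats line."""
    match = re.search(r"CPU \[([^\]]*)\]", line)
    if match is None:
        return []
    loads = []
    for core in match.group(1).split(","):
        # Online cores look like "2%@2035"; offline cores are reported as "off".
        entry = re.fullmatch(r"(\d+)%@(\d+)", core.strip())
        if entry:
            loads.append(int(entry.group(1)))
    return loads

# First sample from the capture above, truncated after the CPU field.
sample = ("RAM 1717/7855MB (lfb 1310x4MB) "
          "CPU [2%@2035,off,off,2%@2035,7%@2035,4%@2035] BCPU@46C")
loads = parse_cpu_loads(sample)
mean_load = sum(loads) / len(loads)
print(loads, mean_load)
```

For this sample the four online cores average under 4% load, which matches the low CPU usage seen across the whole capture.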

Framerate

Using the following pipeline, we measured the framerate for a single capture with the perf element:

gst-launch-1.0 nvcamerasrc sensor-id=0 ! 'video/x-raw(memory:NVMM), width=(int)1928, height=(int)1208, format=(string)I420, framerate=(fraction)30/1' ! perf ! fakesink

perf: perf0; timestamp: 1:35:55.751602001; bps: 193879.384; mean_bps: 193879.384; fps: 29.994; mean_fps: 29.994
INFO: perf: perf0; timestamp: 1:35:56.761051137; bps: 192104.776; mean_bps: 192992.080; fps: 29.719; mean_fps: 29.856
INFO: perf: perf0; timestamp: 1:35:57.770470774; bps: 192110.390; mean_bps: 192698.183; fps: 29.720; mean_fps: 29.811
INFO: perf: perf0; timestamp: 1:35:58.779928270; bps: 192103.185; mean_bps: 192549.434; fps: 29.719; mean_fps: 29.788
INFO: perf: perf0; timestamp: 1:35:59.789433771; bps: 192094.050; mean_bps: 192458.357; fps: 29.718; mean_fps: 29.774
INFO: perf: perf0; timestamp: 1:36:00.798881673; bps: 192105.011; mean_bps: 192399.466; fps: 29.719; mean_fps: 29.765
INFO: perf: perf0; timestamp: 1:36:01.808314377; bps: 192107.903; mean_bps: 192357.814; fps: 29.720; mean_fps: 29.758
INFO: perf: perf0; timestamp: 1:36:02.817684397; bps: 192119.833; mean_bps: 192328.066; fps: 29.722; mean_fps: 29.754
INFO: perf: perf0; timestamp: 1:36:03.827163605; bps: 192099.053; mean_bps: 192302.621; fps: 29.718; mean_fps: 29.750
INFO: perf: perf0; timestamp: 1:36:04.836652768; bps: 192097.159; mean_bps: 192282.074; fps: 29.718; mean_fps: 29.747

The results show a constant framerate just below 30 fps when using nvcamerasrc and passing frames through the ISP to convert from Bayer to YUV.

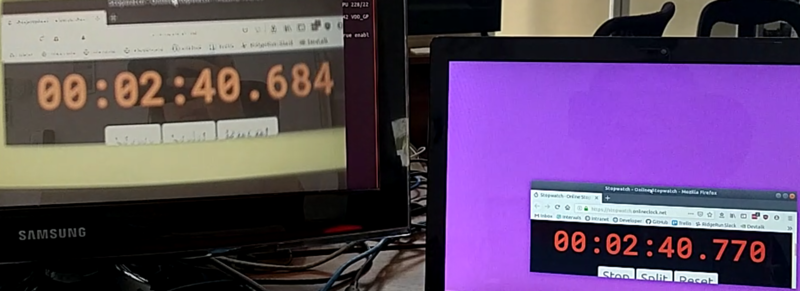
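The perf log can also be summarized offline. As a rough sketch in Python (the helper `fps_samples` is a hypothetical name, and the log format is assumed to be exactly the one printed above), the instantaneous `fps` values can be extracted and averaged like this:

```python
import re

def fps_samples(log_text):
    """Extract the instantaneous 'fps' values, skipping the 'mean_fps' fields."""
    return [float(v) for v in re.findall(r"(?<!mean_)fps: ([0-9.]+)", log_text)]

# First two samples from the perf output above.
log = (
    "perf: perf0; timestamp: 1:35:55.751602001; bps: 193879.384; "
    "mean_bps: 193879.384; fps: 29.994; mean_fps: 29.994\n"
    "INFO: perf: perf0; timestamp: 1:35:56.761051137; bps: 192104.776; "
    "mean_bps: 192992.080; fps: 29.719; mean_fps: 29.856\n"
)
samples = fps_samples(log)
mean_fps = sum(samples) / len(samples)
print(f"samples={samples} mean={mean_fps:.3f} min={min(samples):.3f}")
```

The mean over these two samples agrees with the `mean_fps` value that perf reports on the second line, up to rounding, which is a quick sanity check that the right field is being parsed.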

Latency

The glass-to-glass latency, measured as described in Jetson_glass_to_glass_latency, is about 88 ms with nvcamerasrc.

gst-launch-1.0 nvcamerasrc queue-size=6 sensor-id=0 fpsRange='30 30' ! \
'video/x-raw(memory:NVMM), width=(int)1928, height=(int)1208, format=(string)I420, framerate=(fraction)30/1' ! \
nvoverlaysink sync=true enable-last-sample=false
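To put the latency figure in context, it can be expressed in frame periods at the capture framerate. This is only an illustrative calculation in Python using the two numbers reported on this page (88 ms and 30 fps); the function name `latency_in_frames` is hypothetical.

```python
def latency_in_frames(latency_ms, fps):
    """Convert a latency in milliseconds to a number of frame periods."""
    frame_period_ms = 1000.0 / fps  # about 33.3 ms at 30 fps
    return latency_ms / frame_period_ms

frames = latency_in_frames(88.0, 30.0)
print(f"{frames:.2f} frame periods")
```

At 30 fps, the measured glass-to-glass latency corresponds to between two and three frame periods.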
