User Guide On Homography estimation

Homography

In the context of image stitching, a homography describes the relation between two images: it transforms a target image relative to a reference image. This provides a way to specify the arrangement and adjustments between images for stitching.

These adjustments and transformations are represented in a homography matrix that encodes the mapping between two image planes. For a two-dimensional homography, this is a 3x3 matrix that maps the original coordinates x and y to the new coordinates x' and y'.
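As a sketch, the standard form of a two-dimensional homography in homogeneous coordinates is shown below. The element names h00 to h22 follow the keys printed by the estimation script; the scale factor w is notation introduced for this sketch, and it equals 1 when h20 = h21 = 0 and h22 = 1.

```latex
w \begin{bmatrix} x' \\ y' \\ 1 \end{bmatrix}
=
\begin{bmatrix}
h_{00} & h_{01} & h_{02} \\
h_{10} & h_{11} & h_{12} \\
h_{20} & h_{21} & h_{22}
\end{bmatrix}
\begin{bmatrix} x \\ y \\ 1 \end{bmatrix},
\qquad
w = h_{20}\,x + h_{21}\,y + h_{22}
```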

Homography decomposition

The mapping above can be decomposed into translation, rotation, shear, and scale, each represented by its own transformation matrix.

From that original homography matrix, the translation can be factored out into its own matrix, where $t_x$ and $t_y$ are the x- and y-axis translations, respectively.
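One way to write that factorization, assuming the purely affine case (h20 = h21 = 0 and h22 = 1), is sketched below. The symbols T and A are names chosen for this sketch and may not match the notation of the original figure.

```latex
H =
\underbrace{\begin{bmatrix}
1 & 0 & t_x \\
0 & 1 & t_y \\
0 & 0 & 1
\end{bmatrix}}_{T}
\underbrace{\begin{bmatrix}
h_{00} & h_{01} & 0 \\
h_{10} & h_{11} & 0 \\
0 & 0 & 1
\end{bmatrix}}_{A},
\qquad
t_x = h_{02}, \quad t_y = h_{12}
```

Multiplying T by A places $t_x$ and $t_y$ back in the last column, which is why a pure translation can be written by hand: A is then the identity.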

The remaining reduced matrix can then be decomposed into three more matrices representing rotation, shear, and scale. That process is less intuitive than the previous one, so defining the homography matrix manually is not recommended unless the transformation is only a translation.

Even with simple homographies it is highly recommended to use the included homography estimation tool since it is easier and yields better results.

Homography estimation tool

This section introduces a way to estimate an initial homography matrix that can be used in the cudastitcher element. The method is a Python script that estimates the homography between two images. The script is located in the scripts directory of the rrstitcher project.

Dependencies

- Python 3.6

- OpenCV 4.0 or later.

- NumPy

Installing the dependencies using a virtual environment

The dependencies above can be installed in a Python virtual environment. A virtual environment lets you install Python packages without affecting other environments on your machine. To create a new virtual environment, run the following commands:

ENV_NAME=calibration-env

python3.6 -m venv $ENV_NAME

A new folder named after the value of ENV_NAME will be created. To activate the virtual environment, run the following command:

source $ENV_NAME/bin/activate

Source the virtual environment each time you want to use it. To install the packages in the virtual environment:

pip install numpy

pip install opencv-contrib-python

Script estimation flow

The steps performed by the script are the following:

- Load the images.

- (Optional) Remove the lens distortion from the images.

- Preprocess the images with a Gaussian filter to remove noise.

- Extract the key points and the corresponding descriptors with the SIFT algorithm.

- Find the correspondences among the key points of the two images.

- With the resulting key points, estimate the homography.
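The steps above can be sketched with OpenCV as follows. This is a minimal illustration, not the script's actual code: the function names, the brute-force matcher, Lowe's ratio test, and RANSAC are assumptions chosen to line up with the sigma, matchRatio, and reprojError configuration parameters. The optional undistortion step is omitted, and cv2.SIFT_create assumes OpenCV 4.4 or later.

```python
def ratio_test(knn_matches, match_ratio):
    """Keep a best match only when it clearly beats the runner-up."""
    good = []
    for pair in knn_matches:
        if len(pair) == 2 and pair[0].distance < match_ratio * pair[1].distance:
            good.append(pair[0])
    return good


def estimate_homography(reference_path, target_path, sigma=1.5,
                        match_ratio=0.9, reproj_error=5.0):
    """Return a 3x3 matrix mapping target pixels onto the reference image."""
    # Imported lazily so ratio_test() can be reused without OpenCV installed.
    import cv2
    import numpy as np

    # Load both images in grayscale.
    reference = cv2.imread(reference_path, cv2.IMREAD_GRAYSCALE)
    target = cv2.imread(target_path, cv2.IMREAD_GRAYSCALE)
    if reference is None or target is None:
        raise FileNotFoundError("could not read one of the input images")

    # Gaussian filter; a (0, 0) kernel size is derived from sigma.
    reference = cv2.GaussianBlur(reference, (0, 0), sigma)
    target = cv2.GaussianBlur(target, (0, 0), sigma)

    # Key points and descriptors with SIFT.
    sift = cv2.SIFT_create()
    kp_ref, des_ref = sift.detectAndCompute(reference, None)
    kp_tgt, des_tgt = sift.detectAndCompute(target, None)
    if des_ref is None or des_tgt is None:
        raise RuntimeError("no features found in one of the images")

    # Correspondences: two nearest neighbours per target descriptor.
    matcher = cv2.BFMatcher(cv2.NORM_L2)
    good = ratio_test(matcher.knnMatch(des_tgt, des_ref, k=2), match_ratio)
    if len(good) < 4:
        raise RuntimeError("at least 4 matches are needed for a homography")

    # Homography from the matched key points, with RANSAC outlier rejection.
    src = np.float32([kp_tgt[m.queryIdx].pt for m in good]).reshape(-1, 1, 2)
    dst = np.float32([kp_ref[m.trainIdx].pt for m in good]).reshape(-1, 1, 2)
    homography, _inlier_mask = cv2.findHomography(src, dst, cv2.RANSAC,
                                                  reproj_error)
    return homography
```

The default argument values mirror the example configuration file; in practice they would be read from that file.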

Lens distortion correction

If the camera images need distortion correction, follow the camera calibration process found in CUDA_Accelerated_GStreamer_Camera_Undistort/User_Guide/Camera_Calibration to obtain the camera matrix and distortion parameters.

These parameters are then set in the configuration JSON file.

Script usage

The script has two main operation modes:

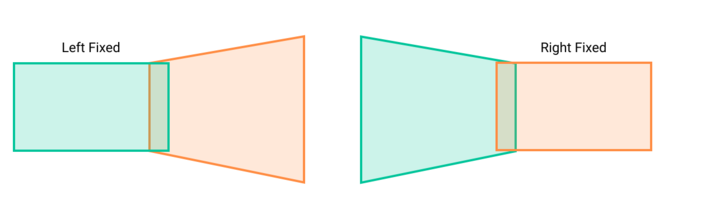

- left_fixed: where the left image is fixed and the right image will be transformed by the homography.

- right_fixed: where the right image is fixed and the left image will be transformed by the homography.

This modes must be specified as the first parameter of the command.

The following image shows the difference between those modes.

Both operation modes can then be used in two ways:

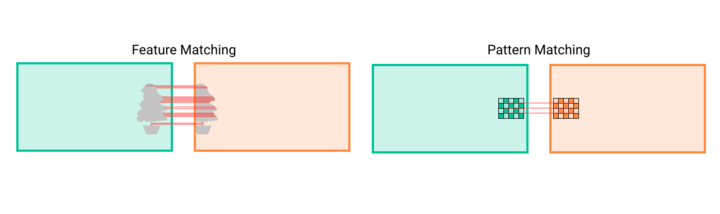

- feature matching: Finds matching features in the overlap between both images and from those matching key points estimates the homography (Default).

- pattern matching: Uses a calibration pattern that needs to be present in both images to estimate the homography (Use

--patternflag).

The default calibration pattern to use can be found in the ![]() GitHub Link.

GitHub Link.

The following image shows the difference between those modes.

- non cropping mode: This mode doesn't affect the homography; it just displays the whole image after applying the homography, without cropping to a defined size. It can be used with the --non_crop flag. This is useful since the stitcher doesn't crop the output, so it allows you to see a more realistic result. If the homography produces an image that is too large, this may slow down the interactive mode.

- corner mode: This mode allows the user to move each corner of the target image individually, or all at once. It is activated by adding the --corners flag. Each corner is selected with numbers from 1 to 4, starting from the top left corner and incrementing clockwise.

- Make sure the window displaying the image is in focus, since that is where the keyboard events are captured.

- Key events:

- 1: select top left corner.

- 2: select top right corner.

- 3: select bottom left corner.

- 4: select bottom right corner.

- h: move left.

- j: move down.

- k: move up.

- l: move right.

- a: move all corners simultaneously.

- f: make the corners move faster when adjusted.

- s: make the corners move slower when adjusted.

- q: exit the program.

- interactive mode: Every mode allows the user to further edit the homography interactively by using the --interactive flag.

- manual mode: In some cases, automatic homography estimation is not very practical or produces unexpected results. In those cases, you can use the --manual flag to modify it according to your needs. This behaves just like the interactive mode, but the images are not initially transformed by the script; they start side by side.
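Regarding the corner mode in the list above: four corner correspondences are enough to determine a homography, which is why moving corners is a convenient way to edit one. The following pure-Python sketch illustrates the idea by solving the resulting 8x8 linear system with h22 fixed to 1. The function names are hypothetical, and this is not necessarily how the script computes the matrix.

```python
def solve_linear(a, b):
    """Solve a x = b by Gauss-Jordan elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("degenerate corner configuration")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(n):
            if r != col:
                factor = m[r][col] / m[col][col]
                m[r] = [v - factor * p for v, p in zip(m[r], m[col])]
    return [m[i][n] / m[i][i] for i in range(n)]


def homography_from_corners(src, dst):
    """Return the 3x3 homography that maps four src points onto four dst points.

    Each correspondence (x, y) -> (u, v) contributes two linear equations
    in the eight unknowns h00..h21, with h22 fixed to 1.
    """
    rows, rhs = [], []
    for (x, y), (u, v) in zip(src, dst):
        rows.append([x, y, 1, 0, 0, 0, -u * x, -u * y])
        rhs.append(u)
        rows.append([0, 0, 0, x, y, 1, -v * x, -v * y])
        rhs.append(v)
    h = solve_linear(rows, rhs) + [1.0]
    return [h[0:3], h[3:6], h[6:9]]
```

For example, shifting all four corners by the same offset yields a pure translation matrix, with the offset in the last column.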

The interactive or manual modes are used as follows:

First, move between the homography elements and select one to edit by pressing [ i ]. Then move left or right to the digit you want to change, and finally change its value with the up and down keys.

- Make sure the window displaying the image is in focus, since that is where the keyboard events are captured.

- Key events:

- h: move left.

- j: move down.

- k: move up.

- l: move right.

- i: enter edit mode.

- w: save homography element changes.

- q: discard homography element changes if editing, exit interactive mode otherwise.

These are the complete options of the script.

python homography_estimation.py --help

usage: ./homography_estimation.py [-h] [--config CONFIG] --reference_image

REFERENCE_IMAGE --target_image TARGET_IMAGE

[--scale_width SCALE_WIDTH]

[--scale_height SCALE_HEIGHT]

[--chessboard_dimensions ROWS COLUMNS]

[--pattern] [--interactive] [--non_crop]

[--manual]

{left_fixed,right_fixed} ...

Tool for estimating the homography between two images.

positional arguments:

{left_fixed,right_fixed}

left_fixed estimates the homography between two images, with the

left one as reference.

right_fixed estimates the homography between two images, with the

right one as reference.

optional arguments:

-h, --help show this help message and exit

--config CONFIG path of the configuration file

--reference_image REFERENCE_IMAGE

path of the reference image

--target_image TARGET_IMAGE

path of the target image

--scale_width SCALE_WIDTH

scale width dimension of the input images

--scale_height SCALE_HEIGHT

scale height dimension of the input images

--chessboard_dimensions ROWS COLUMNS

dimensions of the calibration pattern to look for

--pattern flag to indicate to look for a calibration pattern

--interactive flag to indicate to use interactive mode

--non_crop flag to indicate to crop the output

--manual flag to indicate to use manual editor

--corners flag to indicate to use manual corner editor

Configuration file

The script uses a configuration file to access the values of the different variables needed to set up the algorithm before performing the homography estimation. The configuration file contains the following parameters:

- targetCameraMatrix: Array with the values corresponding to the camera matrix of the target source.

- targetDistortionParameters: Array with the values corresponding to the distortion coefficients of the target source.

- referenceCameraMatrix: Array with the values corresponding to the camera matrix of the reference source.

- referenceDistortionParameters: Array with the values corresponding to the distortion coefficients of the reference source.

- reprojError: Reprojection error for the homography calculation.

- matchRatio: Max distance ratio for a possible match of keypoints.

- sigma: Sigma value for the Gaussian filter.

- overlap: Degrees of overlap between the two images.

- crop: Degrees of the crop to apply in the sides of the image corresponding to the seam.

- fov: Field of view in degrees of the cameras.

- targetUndistort: Bool value to enable the distortion removal of the target source.

- referenceUndistort: Bool value to enable the distortion removal of the reference source.

Example

The following example will estimate the homography of two images, with the left one fixed in multiple modes. In all cases, the following configuration file is used:

// config.json

{

"targetCameraMatrix":[1, 0, 0, 0, 1, 0, 0, 0, 1],

"targetDistortionParameters":[0, 0, 0, 0, 0, 0],

"referenceCameraMatrix":[1, 0, 0, 0, 1, 0, 0, 0, 1],

"referenceDistortionParameters":[0, 0, 0, 0, 0, 0],

"reprojError":5,

"matchRatio":0.9,

"sigma":1.5,

"overlap":23,

"crop":0,

"fov":50,

"targetUndistort":false,

"referenceUndistort":false

}
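A configuration file like the one above can be sanity-checked before running the script. The sketch below is a hypothetical helper, not part of the tool: it checks that every documented parameter is present and reshapes the nine camera matrix values into 3x3 rows, assuming row-major order. Note that the first line of the listing (// config.json) is only a file name label; JSON does not support comments, so it must not appear in the actual file.

```python
import json

REQUIRED_KEYS = (
    "targetCameraMatrix", "targetDistortionParameters",
    "referenceCameraMatrix", "referenceDistortionParameters",
    "reprojError", "matchRatio", "sigma", "overlap", "crop", "fov",
    "targetUndistort", "referenceUndistort",
)


def load_config(path):
    """Load the JSON configuration and return it with 3x3 camera matrices."""
    with open(path) as config_file:
        config = json.load(config_file)

    missing = [key for key in REQUIRED_KEYS if key not in config]
    if missing:
        raise KeyError("missing configuration keys: " + ", ".join(missing))

    for side in ("target", "reference"):
        flat = config[side + "CameraMatrix"]
        if len(flat) != 9:
            raise ValueError(side + "CameraMatrix must have 9 values")
        # Assumption: the nine values are stored in row-major order.
        config[side + "CameraMatrix"] = [flat[0:3], flat[3:6], flat[6:9]]
    return config
```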

Since the left_fixed mode is used, the --reference_image option corresponds to the left image, and the --target_image corresponds to the image that will be transformed (right).

Feature matching mode

The command to perform the estimation is:

python homography_estimation.py left_fixed --config /path/to/config.json --reference_image /path/to/cam1.png --target_image /path/to/cam2.png

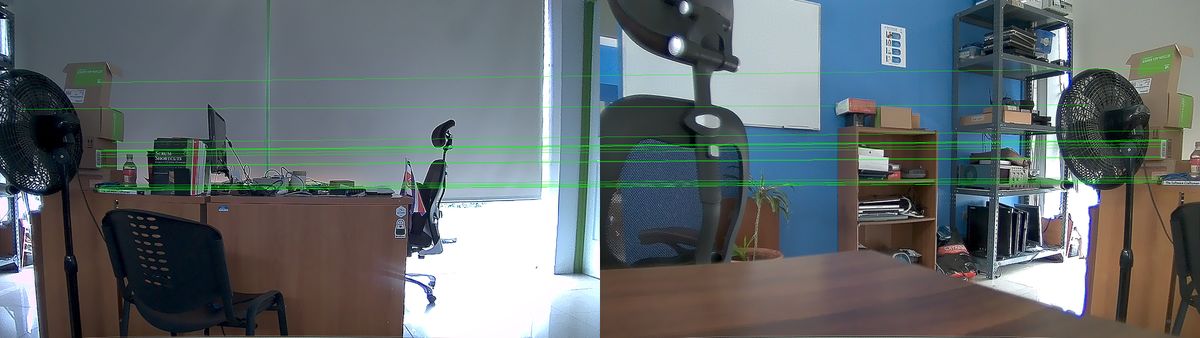

The output will be something like this:

matches: 69

"matrix":{"h00":1.1762953900821504,"h01":0.18783005980813444, "h02":1382.1180307642137, "h10":0.019908986235761796, "h11":1.0951514369488284, "h12":0.6427298229101379, "h20":6.676211791348554e-05, "h21":8.14441129307644e-05, "h22":1.0}

The script also generates some images to evaluate the quality of the homography.
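To make the printed matrix easier to interpret, the sketch below parses an output line of the form shown above and applies the homography to a single pixel. Both function names are hypothetical helpers written for this illustration; they are not part of the script.

```python
import json


def matrix_from_output(line):
    """Turn a printed '"matrix":{...}' line into a 3x3 list of rows."""
    elements = json.loads("{" + line + "}")["matrix"]
    return [[elements["h%d%d" % (row, col)] for col in range(3)]
            for row in range(3)]


def apply_homography(h, x, y):
    """Map the target pixel (x, y) to reference coordinates."""
    # The third row gives the homogeneous scale used for normalization.
    w = h[2][0] * x + h[2][1] * y + h[2][2]
    new_x = (h[0][0] * x + h[0][1] * y + h[0][2]) / w
    new_y = (h[1][0] * x + h[1][1] * y + h[1][2]) / w
    return new_x, new_y
```

Since h22 is 1.0 in the output above, the target's origin (0, 0) maps to (h02, h12), roughly (1382.1, 0.64): the target image is placed about 1382 pixels to the right of the reference origin.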

Pattern matching mode

The command to perform the estimation is:

python homography_estimation.py left_fixed --config /path/to/config.json --reference_image /path/to/cam1.png --target_image /path/to/cam2.png --pattern

The output will be something like this:

"matrix":{"h00":1.0927425025492448, "h01":0.023052134950741838, "h02":1424.5561222498761, "h10":-0.0004828679916927615, "h11":0.9910972565366281, "h12":26.804866722848878, "h20":6.0874737519702665e-05, "h21":-2.0064071552078915e-05, "h22":1.0}

The script also generates some images to evaluate the quality of the homography.

Interactive mode

The command to perform the estimation is:

python homography_estimation.py left_fixed --config /path/to/config.json --reference_image /path/to/cam1.png --target_image /path/to/cam2.png --pattern --interactive

The following GIF demonstrates its usage; click the file if it does not play.