How to use OpenCV CUDA Streams


Introduction to OpenCV CUDA Streams

Dependencies

- G++ Compiler

- CUDA

- OpenCV >= 4.1 (compiled with CUDA support, including the cudawarping module from opencv_contrib)

- NVIDIA Nsight Systems

OpenCV CUDA Streams example

The following example loads a sample input image (1080.jpg) and resizes it to 256x256 in four different CUDA streams, using pinned (page-locked) host memory for the transfers.

Compile the example with:

```bash
g++ testStreams.cpp -o testStreams $(pkg-config --libs --cflags opencv4)
```

testStreams.cpp

```cpp
#include <opencv2/opencv.hpp>
#include <opencv2/core/core.hpp>
#include <opencv2/highgui/highgui.hpp>
#include <opencv2/core/cuda.hpp>
#include <opencv2/cudawarping.hpp> //cv::cuda::resize

#include <vector>
#include <memory>
#include <iostream>

std::shared_ptr<std::vector<cv::Mat>> computeArray(std::shared_ptr<std::vector< cv::cuda::HostMem >> srcMemArray,
                                                   std::shared_ptr<std::vector< cv::cuda::HostMem >> dstMemArray,
                                                   std::shared_ptr<std::vector< cv::cuda::GpuMat >> gpuSrcArray,
                                                   std::shared_ptr<std::vector< cv::cuda::GpuMat >> gpuDstArray,
                                                   std::shared_ptr<std::vector< cv::Mat >> outArray,
                                                   std::shared_ptr<std::vector< cv::cuda::Stream >> streamsArray){
    //Define test target size
    cv::Size rSize(256, 256);

    //Compute for each input image with async calls, one stream per image
    for(size_t i = 0; i < streamsArray->size(); i++){
        //Upload Input Pinned Memory to GPU Mat
        (*gpuSrcArray)[i].upload((*srcMemArray)[i], (*streamsArray)[i]);

        //Use the CUDA Kernel Method
        cv::cuda::resize((*gpuSrcArray)[i], (*gpuDstArray)[i], rSize, 0, 0, cv::INTER_AREA, (*streamsArray)[i]);

        //Download result to Output Pinned Memory
        (*gpuDstArray)[i].download((*dstMemArray)[i], (*streamsArray)[i]);

        //Wrap the output Pinned Memory in a CPU Mat header (no copy);
        //its contents are only valid after the stream completes
        (*outArray)[i] = (*dstMemArray)[i].createMatHeader();
    }

    //All previous calls are non-blocking, therefore
    //wait for each stream's completion
    for(cv::cuda::Stream& stream : *streamsArray){
        stream.waitForCompletion();
    }

    return outArray;
}

int main(int argc, char* argv[]){
    //Load test image
    cv::Mat srcHostImage = cv::imread("1080.jpg");
    if(srcHostImage.empty()){
        std::cerr << "Error: could not read 1080.jpg" << std::endl;
        return 1;
    }

    //Create CUDA Streams Array
    std::shared_ptr<std::vector<cv::cuda::Stream>> streamsArray = std::make_shared<std::vector<cv::cuda::Stream>>();
    cv::cuda::Stream streamA, streamB, streamC, streamD;
    streamsArray->push_back(streamA);
    streamsArray->push_back(streamB);
    streamsArray->push_back(streamC);
    streamsArray->push_back(streamD);

    //Create Pinned Memory (PAGE_LOCKED) arrays
    std::shared_ptr<std::vector<cv::cuda::HostMem>> srcMemArray = std::make_shared<std::vector<cv::cuda::HostMem>>();
    std::shared_ptr<std::vector<cv::cuda::HostMem>> dstMemArray = std::make_shared<std::vector<cv::cuda::HostMem>>();

    //Create GpuMat arrays to use them on OpenCV CUDA Methods
    std::shared_ptr<std::vector<cv::cuda::GpuMat>> gpuSrcArray = std::make_shared<std::vector<cv::cuda::GpuMat>>();
    std::shared_ptr<std::vector<cv::cuda::GpuMat>> gpuDstArray = std::make_shared<std::vector<cv::cuda::GpuMat>>();

    //Create Output array for CPU Mat
    std::shared_ptr<std::vector<cv::Mat>> outArray = std::make_shared<std::vector<cv::Mat>>();

    for(size_t i = 0; i < streamsArray->size(); i++){
        //Initialize the input Pinned Memory with a copy of the input image
        srcMemArray->push_back(cv::cuda::HostMem(srcHostImage, cv::cuda::HostMem::PAGE_LOCKED));
        //Create an empty output Pinned Memory buffer; download() allocates it on first use
        dstMemArray->push_back(cv::cuda::HostMem(cv::cuda::HostMem::PAGE_LOCKED));
        //Empty GPU Mats, allocated on the first upload()/resize() call
        gpuSrcArray->push_back(cv::cuda::GpuMat());
        gpuDstArray->push_back(cv::cuda::GpuMat());
        //Empty CPU Mat, replaced by the header created in computeArray()
        outArray->push_back(cv::Mat());
    }

    //Test the process 20 times
    for(int i = 0; i < 20; i++){
        try{
            std::shared_ptr<std::vector<cv::Mat>> result = computeArray(srcMemArray, dstMemArray, gpuSrcArray, gpuDstArray, outArray, streamsArray);

            //Optional to show the results
            //cv::imshow("Result", (*result)[0]);
            //cv::waitKey(0);
        }
        catch(const cv::Exception& ex){
            std::cout << "Error: " << ex.what() << std::endl;
        }
    }

    return 0;
}
```

Profiling with NVIDIA Nsight

Profile the testStreams program with NVIDIA Nsight Systems:

- Add the command line and working directory

- Select Collect CUDA trace

- Select Collect GPU context switch trace

As seen in the following image:

Click Start to begin profiling. The profiling session must also be stopped manually once the program has finished.

Analyze the output

You will get an output similar to the following:

Information

Each color represents the type of operation being executed at a given point in time:

- Green box: Memory copy operations from Host to Device

- Blue box: Execution of the kernel in the Device

- Red box: Memory copy operations from Device to Host

Understanding CUDA Streams pipelining

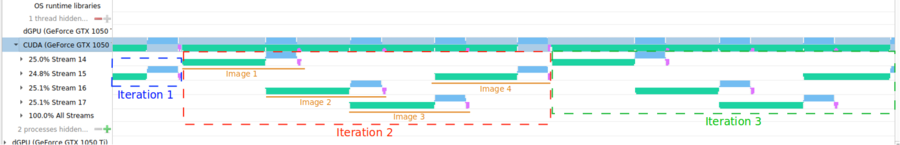

CUDA Streams make it possible to build an execution pipeline: while one stream performs a Host to Device copy, another stream can execute a kernel, and the same applies to Device to Host copies. The pipeline can be analyzed in the following image.

Each iteration of the 20-iteration "for" loop in main() is marked in the image with a box:

- Blue box: Iteration 1

- Red box: Iteration 2

- Green box: Iteration 3

Inside each iteration, the four images are processed in a pipelined fashion, one per stream.
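The benefit of this pipeline can be estimated with a simplified model. The sketch below assumes, as an idealization rather than a description of any specific GPU, that Host to Device copies, kernels, and Device to Host copies each run on their own engine, that each engine executes one operation at a time in issue order, and that each image passes through the three stages in order. Under those assumptions, with n images whose stages each take t, the streamed version finishes in (n + 2) * t instead of 3 * n * t; for the four images of the example that is 6t versus 12t. The names StageTimes, pipelinedMakespan, and serialMakespan are hypothetical. GPUs with a single copy engine cannot overlap the two copy directions, and kernels from different streams may also run concurrently, so real timelines can differ from this model.

```cpp
#include <algorithm>
#include <array>
#include <cstddef>
#include <vector>

// Durations of the three stages of one image, in arbitrary time units.
struct StageTimes {
    double h2d;     // Host to Device copy (upload)
    double kernel;  // resize kernel
    double d2h;     // Device to Host copy (download)
};

// Idealized completion time when every image runs on its own stream and each
// engine (H2D copy, compute, D2H copy) handles one operation at a time in issue order.
double pipelinedMakespan(const std::vector<StageTimes>& images) {
    std::array<double, 3> engineFree{0.0, 0.0, 0.0};  // time each engine becomes idle
    double makespan = 0.0;
    for (const StageTimes& img : images) {
        const std::array<double, 3> d{img.h2d, img.kernel, img.d2h};
        double ready = 0.0;  // time this image's previous stage finished
        for (std::size_t k = 0; k < d.size(); ++k) {
            const double start = std::max(ready, engineFree[k]);
            ready = start + d[k];
            engineFree[k] = ready;
        }
        makespan = std::max(makespan, ready);
    }
    return makespan;
}

// Completion time when all operations are serialized, e.g. on a single stream.
double serialMakespan(const std::vector<StageTimes>& images) {
    double total = 0.0;
    for (const StageTimes& img : images) total += img.h2d + img.kernel + img.d2h;
    return total;
}
```

Plugging stage durations read from the Nsight timeline into these functions gives a rough estimate of the speedup to expect from the streamed version.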

Contact Us

For direct inquiries, please refer to the contact information available on our Contact page. Alternatively, you may complete and submit the form provided at the same link. We will respond to your request at our earliest opportunity.
