GStreamer QT Overlay

GStreamer QT Overlay Overview

GstQtOverlay is a GStreamer plug-in that renders QT graphics on top of a video stream. This rendering occurs directly into the GStreamer video frame buffer, rather than in physical graphics memory, with the resultant video having the QT graphics image merged into the video image. This brings together the best of the two worlds:

- Modularity and extensibility of GStreamer

- Beauty and flexibility of QT

With the RidgeRun GstQtOverlay element, GUIs will be available not only at the video display but also embedded in file recordings and network streaming, for example.

GstQtOverlay makes heavy use of OpenGL in order to achieve high-performance overlay rendering. The pixel blitting and memory transfers are done by GPU and DMA hardware accelerators, allowing real-time operation at HD resolutions. As a consequence, the CPU remains free for other processing.

Graphics are modeled using QT's QML, which enables fast, powerful, and dynamic GUI implementation. QML is loaded at runtime, so there is no need to recompile the GstQtOverlay plug-in in order to change the GUI. Keeping the QML independent of the GStreamer element speeds up development, eases quick prototyping, and reduces time-to-market without impacting performance. A single GstQtOverlay element is able to handle many different GUIs.

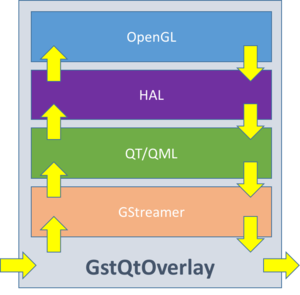

GStreamer QT Overlay Architecture

GstQtOverlay element's architecture is summarized in Figure 1.

- GStreamer

This layer provides the necessary classes to expose GstQtOverlay as a GStreamer element. As such, GstQtOverlay can be linked into any compatible GStreamer pipeline and will participate in the standard pipeline lifecycle. This includes, among others, caps and allocator negotiation and pipeline state changes.

- QT/QML

On top of GStreamer, a QT-powered class is instantiated in order to manage graphic resources in a decoupled way. Here, the QT event loop is specially processed in order to have both GLib's and QT's event sources active. Finally, a QML engine is created to read and render the user's QML file.

- HAL

The Hardware Abstraction Layer (or simply HAL) is a thin layer that acts as an adapter between QT and OpenGL in order to leverage the platform specific GPU utilities and maximize performance. This layer is the only one that is HW dependent and will be conditionally built via configuration parameters. The HAL ensures that data transfers are HW accelerated so that there is no performance penalty.

- OpenGL

The OpenGL layer will render QT content into the image data using the GPU's parallel power. The blitting to merge the QT image onto a video frame is performed into a copy of the frame image so that the integrity of the original data is preserved.

Supported platforms

Currently, the following platforms support an Autotools based standalone GstQtOverlay plug-in installation:

- PC: both x86 and x64.

- NVIDIA Jetson boards: TX1, TX2, AGX Xavier, Nano, Xavier NX.

Also, GstQtOverlay plug-in can be installed using Yocto. Some of the platforms tested with Yocto:

- NXP i.MX6-based boards.

- NXP i.MX8-based boards (coming soon; sponsorship of this effort is welcome).

More platforms are under development. If you wish to sponsor the plug-in for a custom platform or accelerate development for another SoC not listed above, please contact us at support@ridgerun.com.

Evaluating GstQtOverlay

Evaluation Features

| Feature | Professional | Evaluation |

|---|---|---|

| GstQtOverlay Element | Y | Y |

| Unlimited Processing Time | Y | N (1) |

| Source Code | Y | N |

(1) The evaluation version limits processing to a maximum of 10,000 frames.
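To get a feel for what the frame limit means in practice, the available run time can be estimated from the pipeline frame rate. The helper below is only an illustrative sketch: the function name frames_to_seconds is hypothetical and the frame rates are assumed examples, not values taken from the plug-in.

```shell
#!/bin/sh
# Hypothetical helper: estimate how many whole seconds an evaluation
# pipeline can run before it reaches the frame limit.
frames_to_seconds() {
    frames=$1   # total frames allowed by the evaluation build
    fps=$2      # pipeline frame rate, assumed constant
    echo $(( frames / fps ))
}

frames_to_seconds 10000 30   # prints 333
frames_to_seconds 10000 60   # prints 166
```

At 30 fps this gives roughly five and a half minutes of processing per run, which is usually enough to validate a pipeline.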

Requesting the Evaluation Binary

In order to request an evaluation binary for a specific architecture, please contact us providing the following information:

- Platform (e.g., i.MX6, TX2)

- Target Qt version

- Output of gst-launch-1.0 --gst-version

- Output of uname -a
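The requested details can be gathered in one step on the target. This is a minimal sketch, assuming qmake is the tool that reports the Qt version on your system; the function names report_tool and collect_eval_info are hypothetical, and the platform name still has to be added by hand.

```shell
#!/bin/sh
# Sketch: print the version details requested for an evaluation binary.
report_tool() {
    # $1: label, remaining arguments: command to run if it is installed
    label=$1; shift
    if command -v "$1" >/dev/null 2>&1; then
        printf '%s: %s\n' "$label" "$("$@" 2>&1 | head -n 1)"
    else
        printf '%s: %s not found\n' "$label" "$1"
    fi
}

collect_eval_info() {
    report_tool "Kernel" uname -a
    report_tool "GStreamer" gst-launch-1.0 --gst-version
    # Assumption: qmake is available and matches the target Qt installation.
    report_tool "Qt" qmake -query QT_VERSION
}

collect_eval_info
```

Missing tools are reported rather than causing an error, so the output can be pasted into the request as-is.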

Testing the Evaluation Binaries

Installing the Binaries

First, make sure the dependencies are fulfilled:

RidgeRun should have provided you with a .tar package: gst-qt-overlay-1.Y.Z-gst-1.X-P-eval.tar. Extract it and copy the files into your system:

tar -xvf gst-qt-overlay-1.Y.Z-gst-1.X-P-eval.tar

sudo cp -r gst-qt-overlay-1.Y.Z-gst-1.X-P-eval/usr/lib/* /usr/lib/

Test that the plugin is being properly picked up by GStreamer by running:

gst-inspect-1.0 qtoverlay

You should see the inspect output for the evaluation binary.

Testing the Binaries

Please refer to the GStreamer QT Overlay Examples section for example pipelines.

Troubleshooting

The first level of debugging to troubleshoot a failing evaluation binary is to inspect GStreamer debug output.

GST_DEBUG=2 gst-launch-1.0
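When the debug output needs to be sent to support, it can help to capture it in a file. The sketch below relies on the standard GStreamer environment variables GST_DEBUG_FILE and GST_DEBUG_NO_COLOR; the wrapper name run_with_debug_log, the log file name, and the example pipeline are assumptions made for illustration.

```shell
#!/bin/sh
# Sketch: run any command with GStreamer warnings and errors (level 2)
# written to a log file instead of the terminal.
run_with_debug_log() {
    log=$1; shift   # first argument: log file, the rest: command to run
    GST_DEBUG=2 GST_DEBUG_NO_COLOR=1 GST_DEBUG_FILE="$log" "$@"
}

# Example (the pipeline is only an illustration):
# run_with_debug_log qtoverlay-debug.log gst-launch-1.0 videotestsrc ! qtoverlay qml=main.qml ! fakesink
```

Disabling color keeps the log free of terminal escape sequences, which makes it easier to read in an email or ticket.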

If the output doesn't help you figure out the problem, please contact support@ridgerun.com with the output of the GStreamer debug and any additional information you consider useful.

Building GstQtOverlay

GstQtOverlay can be built and installed either stand-alone or through Yocto. This section explains both methods in detail so that you can add GstQtOverlay to your system.

Stand-alone build

GstQtOverlay can be easily built and installed stand-alone using Autotools. This method is recommended for PC-based platforms and NVIDIA Jetson boards.

Dependencies

Before starting with the installation, make sure your system has the following dependencies already installed.

QT5 packages

sudo apt-get install \
  qtdeclarative5-dev \
  qtbase5-dev-tools \
  qml-module-qtquick-dialogs \
  qml-module-qtquick2 \
  qml-module-qtquick-controls \
  qt5-default

OpenGL packages

Note: If you have a platform with an NVIDIA or ATI GPU, the mesa packages could override your drivers.

sudo apt-get install \
  libx11-dev \
  xorg-dev \
  libglu1-mesa-dev \
  freeglut3-dev \
  libglew1.5 \
  libglew1.5-dev \
  libglu1-mesa \
  libgl1-mesa-glx \
  libgl1-mesa-dev

GStreamer packages

sudo apt-get install \
  gstreamer1.0-x \
  libgstreamer1.0-dev \
  libgstreamer-plugins-base1.0-dev

Autotools packages

sudo apt install \
  autotools-dev \
  autoconf

Jetson

For Jetson boards, you may want to have the Jetson Multimedia API installed. It can be installed during the flashing with the SDK Manager or by:

sudo apt install nvidia-l4t-jetson-multimedia-api

Build steps

When you purchase GstQtOverlay, you get access to a GitLab repository containing the source code, which you need in order to build the plug-in and install it alongside your other GStreamer plug-ins. In a temporary path, clone the repository using:

git clone git@gitlab.com:RidgeRun/orders/${CUSTOMER_DIRECTORY}/gst-qt-overlay.git

Where ${CUSTOMER_DIRECTORY} contains the name of your customer directory given after the purchase.

Afterward, find the path where GStreamer looks for plug-ins and libraries (LIBDIR). Use the following table as a reference:

| Platform | LIBDIR path |

|---|---|

| PC 32-bit (x86) | /usr/lib/i386-linux-gnu/ |

| PC 64-bit (x86_64) | /usr/lib/x86_64-linux-gnu/ |

| NVIDIA Jetson | /usr/lib/aarch64-linux-gnu/ |
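The table above can also be expressed as a small lookup keyed on the machine architecture. This is a sketch under assumptions: the function name libdir_for_arch is hypothetical, and matching the i386 to i686 names against the 32-bit row assumes a Debian-style multiarch layout.

```shell
#!/bin/sh
# Sketch: map a machine architecture (as printed by uname -m) to the
# LIBDIR values listed in the table.
libdir_for_arch() {
    case "$1" in
        i386|i486|i586|i686) echo /usr/lib/i386-linux-gnu/ ;;
        x86_64)              echo /usr/lib/x86_64-linux-gnu/ ;;
        aarch64)             echo /usr/lib/aarch64-linux-gnu/ ;;
        *)                   echo "unknown architecture: $1" >&2; return 1 ;;
    esac
}
```

On the build machine it could be used as LIBDIR=$(libdir_for_arch "$(uname -m)"); architectures missing from the table make the function fail instead of guessing a path.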

For x86/64

According to the table, define an environment variable that stores the path for your system. For example, for an x64 PC, it will be:

LIBDIR=/usr/lib/x86_64-linux-gnu/

Then, do the installation (with defaults) using:

cd gst-qt-overlay

./autogen.sh

./configure --libdir $LIBDIR

make

sudo make install

For NVIDIA Jetson

Adjust the LIBDIR according to the table:

LIBDIR=/usr/lib/aarch64-linux-gnu/

For Jetson, you may want to enable the NVMM support:

export CXXFLAGS=-I/usr/src/jetson_multimedia_api/include/

cd gst-qt-overlay

./autogen.sh

./configure --libdir $LIBDIR --with-platform=jetson

make

sudo make install

Testing the installation

Finally, verify the plug-in installation by running:

gst-inspect-1.0 qtoverlay

It should show:

Factory Details:
  Rank                     none (0)
  Long-name                Qt Overlay
  Klass                    Generic
  Description              Overlays a Qt interface over a video stream
  Author                   <http://www.ridgerun.com>

Plugin Details:
  Name                     qtoverlay
  Description              Overlay a Qt interface over a video stream.
  ...

It can happen that the qtoverlay element reports that no display was found and gets banned (blacklisted) by GStreamer. You can fix this by clearing the GStreamer registry cache, which resets the ban list, and adjusting the display server settings:

1. Remove from the ban list:

rm ~/.cache/gstreamer-1.0/*.bin

2. Adjust the display server according to the Running without a graphics server section.

Build using Yocto

RidgeRun offers a Yocto layer containing commonly used RidgeRun packages. You can download this layer from the Meta-Ridgerun Repository.

It contains a recipe to build GstQtOverlay, but you also need to purchase GstQtOverlay with full source code from the RidgeRun Online Store.

Dependencies

For building GstQtOverlay using Yocto, make sure you have installed the following packages on your target platform:

- gstreamer1.0

- gstreamer1.0-plugins-base

- gstreamer1.0-plugins-bad

- qtbase

- qtbase-native

- qtdeclarative

- imx-gpu-viv

- libffi

- gstreamer1.0-plugins-imx

You will also require some extra environment variables before running a GStreamer pipeline with GstQtOverlay. Please, refer to GStreamer QT Overlay Examples to see how it works.

Build steps

Adding meta-ridgerun to your Yocto build

First, copy meta-ridgerun into your Yocto sources directory, where $YOCTO_DIRECTORY is the location of your Yocto project:

cp -r meta-ridgerun $YOCTO_DIRECTORY/sources/

Then add the RidgeRun meta-layer to your bblayers.conf file. First, go to the build configuration directory:

cd $YOCTO_DIRECTORY/build/conf/

Then open the bblayers.conf file and add the RidgeRun meta-layer path $YOCTO_DIRECTORY/sources/meta-ridgerun to BBLAYERS.

Building GstQtOverlay

Once you have access to the repository, open gst-qt-overlay_xxx.bb in $YOCTO_DIRECTORY/sources/meta-ridgerun/recipes-multimedia/gstreamer/. Make sure to edit gst-qt-overlay_xxx.bb according to the plug-in version you purchased.

Then, modify the following line in SRC_URI with the correct GstQtOverlay URL by replacing ${CUSTOMER_DIRECTORY} with your own.

git://git@gitlab.com/RidgeRun/orders/${CUSTOMER_DIRECTORY}/gst-qt-overlay.git;protocol=ssh;branch=${SRCBRANCH};name=base \

Also make sure you have added your SSH key to your GitLab account, since the recipe fetches the repository over SSH.

To add the SSH key, go to Settings -> SSH Keys and paste your public key. If you do not have an SSH key yet, you can generate one and print its public part using:

ssh-keygen -t rsa -C "your_email@example.com"

cat ~/.ssh/id_rsa.pub

Finally, build the recipe:

bitbake gst-qt-overlay

Known Issues

- SSH Issue

If the list of known hosts on your PC does not include the GitLab host key, the GstQtOverlay recipe will fail with fetch errors.

An easy way to add the key is to clone the repository manually once from GitLab; SSH will then ask whether you want to add the host to your list of known hosts.

Example:

git clone git@gitlab.com:RidgeRun/orders/${CUSTOMER_DIRECTORY}/gst-qt-overlay.git

- No element imxg2dvideotransform

This may happen when testing the example pipelines.

Add imx-gpu-g2d to DEPENDS in the gstreamer1.0-plugins-imx recipe.

- glTexDirectInvalidateVIV not declared in scope

There is an imx-gpu-viv*.bbappend file in meta-ridgerun/recipes-graphics/imx-gpu-viv/. You need to rename it so that it matches the imx-gpu-viv recipe in your build (usually found at meta-freescale/recipes-graphics/imx-gpu-viv/). For example, you might rename the file to: imx-gpu-viv_6.2.4.p1.8-aarch32.bbappend

- This plugin does not support createPlatformOpenGLContext!

You probably need to use a DISTRO or image that has neither X11 nor Wayland, so that EGL can be properly built. For example, for i.MX6 platforms like nitrogen6x you can use this distro: DISTRO=fsl-imx-fb. This guide may also be useful to properly add Qt with EGL support: https://doc.qt.io/QtForDeviceCreation/qtee-meta-qt5.html

- QFontDatabase: Cannot find font directory /usr/lib/fonts

Make sure you have a font package installed in your Yocto image, for example ttf-dejavu-sans.

Interacting with the GUI

Supported Capabilities

Currently, the GstQtOverlay element supports the following formats at the Sink and Source pads respectively. More formats may be supported in the future, subject to platform capabilities.

Input

- RGBA.

- YUV. (Jetson Only)

Output

- RGBA.

- YUV. (Jetson Only)

GStreamer Properties

GStreamer properties are used to customize the QT image overlay and to interact with QML.

qml property

The qml property specifies the location of the QML source file to use. This path can be either relative or absolute and must point to a valid/existing file at the moment the pipeline starts up. Some examples include:

gst-launch-1.0 videotestsrc ! qtoverlay qml=/mnt/gui/sources/main.qml ! videoconvert ! autovideosink

gst-launch-1.0 videotestsrc ! qtoverlay qml=../../sources/main.qml ! videoconvert ! autovideosink

If not specified, the element will attempt to load main.qml from the current working directory.

qml-attribute property

QML attributes may be configured at runtime using the qml-attribute property. The property syntax is as follows:

<item name>.<attribute name>:<value>

Item name refers to the QML objectName attribute of an item, rather than its ID. The following QML contains an item named labelMain:

Label {

text: "Way cool QT imaging with GStreamer!"

font.pixelSize: 22

font.italic: true

color: "steelblue"

objectName: "labelMain"

}

For example, to modify the text attribute of a label named labelMain so that it displays "GStreamer with QT imaging is way cool!", you would run:

gstd-client element_set qtoverlay qml-attribute "labelMain.text:GStreamer with QT imaging is way cool!"

The qml-attribute will recursively traverse the objects tree to find all the items in the hierarchy with the given objectName.

The objectName attribute is not required to be unique, so multiple items can be modified at once by assigning them the same objectName.
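When qml-attribute values are generated from a script, composing the string in one place avoids mistakes with the separators. The function below is a minimal sketch of the syntax described above; the name qml_attribute is hypothetical and is not part of the plug-in or of gstd.

```shell
#!/bin/sh
# Sketch: compose a qml-attribute value as <item name>.<attribute name>:<value>.
qml_attribute() {
    # $1: objectName of the item, $2: attribute name, $3: new value
    printf '%s.%s:%s\n' "$1" "$2" "$3"
}

qml_attribute labelMain text "GStreamer with QT imaging is way cool!"
# prints: labelMain.text:GStreamer with QT imaging is way cool!
```

The resulting string can be passed, quoted, as the property value in the gstd-client command shown earlier.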

qml-action property

Note: NOT IMPLEMENTED YET

External events can also trigger actions in the QML by using the qml-action property. The syntax is similar:

<item name>.<method name>()

Again, the qml-action will recursively traverse the objects tree to find all the items in the hierarchy with the given objectName.

For example, consider the following QML snippet:

import QtQuick 2.0

Item {

objectName: "i1"

function sayHi() {

console.log("Hello World!")

}

}

To invoke sayHi you would run:

gstd-client element_set qtoverlay qml-action "i1.sayHi()"

At this time, passing parameters to the invoked functions is not supported. Instead, first set an attribute value, then invoke the function which can access the attribute. There are future plans to support function parameters via Variants or some similar mechanism.

GStreamer QT Overlay Examples

Here are some examples to run and test GstQtOverlay:

PC examples

1. Display a text overlay

For this example, create a file named: "main.qml" with the following content:

import QtQuick 2.0

Item {

id: root

transform: Rotation { origin.x: root.width/2; origin.y: root.height/2; axis { x: 1; y: 0; z: 0 } angle: 180 }

Text {

text: "Way cool QT imaging with GStreamer!"

font.pointSize: 30

color: "Black"

objectName: "labelMain"

}

}

gst-launch-1.0 videotestsrc ! qtoverlay qml=main.qml ! videoconvert ! ximagesink

2. Animated gif overlay

For this example, create a file named: "animation.qml" with the following content:

import QtQuick 2.0

Item {

id: root

transform: Rotation { origin.x: root.width/2; origin.y: root.height/2; axis { x: 1; y: 0; z: 0 } angle: 180 }

Rectangle {

width: animation.width; height: animation.height + 8

AnimatedImage { id: animation; source: "animation.gif" }

Rectangle {

property int frames: animation.frameCount

width: 4; height: 8

x: (animation.width - width) * animation.currentFrame / frames

y: animation.height

color: "red"

}

}

}

Then generate or download an animation ".gif" file and rename it as "animation.gif". This file will be used as the animation to be displayed from the QML file.

gst-launch-1.0 videotestsrc ! "video/x-raw, width=1280, height=720" ! qtoverlay qml=animation.qml ! videoconvert ! ximagesink

3. Using GstQtOverlay within a GStreamer application

This use case applies when the pipeline runs inside a GStreamer application that needs to interact with the QML nodes to change them dynamically. To do so, use the qml-attribute property.

1. Supposing you have the following QML file:

import QtQuick 2.0

Item {

id: root

transform: Rotation { origin.x: root.width/2; origin.y: root.height/2; axis { x: 1; y: 0; z: 0 } angle: 180 }

Text {

text: "Way cool QT imaging with GStreamer!"

font.pointSize: 30

color: "Black"

objectName: "labelMain"

}

}

Notice that the objectName is set to "labelMain". It identifies the node within the QML file. Since qml-attribute modifies every item that shares an objectName, keep the name unique within the file when you want to target a single node.

2. You can create a QtOverlay element with the following instructions:

GstElement *overlay;

const gchar *factory = "qtoverlay";

overlay = gst_element_factory_make (factory, "overlay");

In the factory make call, you pass the element (factory) name, as reported by gst-inspect-1.0, and the name of the instance within the pipeline, respectively. After that, you can add it to a GstBin or a pipeline. This example can be easily applied to the NVIDIA Jetson platform; the differences lie in the pipeline description within the application.

3. To dynamically change the QML node "labelMain", use the qml-attribute property. Recall the syntax:

<item name>.<attribute name>:<value>

For changing the text of the node, it would be:

"labelMain.text:GStreamer with QT imaging is way cool!"

Programmatically in the GStreamer application:

g_object_set (overlay, "qml-attribute", "labelMain.text:GStreamer with QT imaging is way cool!", NULL);

4. Stream video over network from RTSP source

Let's assume:

- SOURCE_ADRESS=rtsp://10.251.101.176:5004/test (origin RTSP video)

- DEST_ADRESS=10.251.101.57 (destination computer/board)

- PORT=5004 (source/destination port)

On destination computer/board:

gst-launch-1.0 udpsrc buffer-size=57600 caps="application/x-rtp\,\ media\=\(string\)video\,\ clock-rate\=\(int\)90000\,\ encoding-name\=\(string\)JPEG\,\ a-framerate\=\(string\)9.000000\,\ payload\=\(int\)26\,\ ssrc\=\(uint\)1563094421\,\ timestamp-offset\=\(uint\)3492706426\,\ seqnum-offset\=\(uint\)11616" ! rtpjpegdepay ! jpegparse ! avdec_mjpeg ! videoconvert ! xvimagesink -v sync=false async=false

NVIDIA Jetson examples

You can run all the examples presented for a PC-based platform without issues in an NVIDIA Jetson. Nevertheless, you can use NVMM support for Jetson, which can be even faster since you reduce the number of memory copies from userspace to device memory and vice-versa.

Depending on your setup (whether you have a display connected or not), please, take into account configuring the display server by setting the variables presented in Running without a graphics server.

Display a text overlay

Take the file main.qml used in the PC example.

On Jetson, you can use the nvoverlaysink element, which removes a memory copy (from NVMM to userspace, and a videoconvert in CPU):

gst-launch-1.0 nvarguscamerasrc ! nvvidconv ! qtoverlay qml=main.qml ! nvoverlaysink sync=false

To use nvarguscamerasrc, your Jetson must have a camera connected.

Note: For JetPack 4.5, you must add a color space conversion before the nvoverlaysink element.

gst-launch-1.0 nvarguscamerasrc ! nvvidconv ! qtoverlay qml=main.qml ! nvvidconv ! "video/x-raw(memory:NVMM),format=I420" ! nvoverlaysink sync=false

The nvvidconv elements convert to and from RGBA, which is required for QT compatibility.

You can still use NVMM when the pipeline contains user-space elements, like videoconvert or videotestsrc:

gst-launch-1.0 videotestsrc ! nvvidconv ! 'video/x-raw(memory:NVMM)' ! qtoverlay qml=main.qml ! nvvidconv ! xvimagesink sync=false

gst-launch-1.0 videotestsrc ! nvvidconv ! 'video/x-raw(memory:NVMM)' ! qtoverlay qml=main.qml ! nvoverlaysink sync=false

Animated gif overlay

Take the file animation.qml used in the PC example.

Run the example shown above using NVMM:

gst-launch-1.0 nvarguscamerasrc ! nvvidconv ! qtoverlay qml=animation.qml ! nvoverlaysink

You can use the other pipelines shown in other examples. The only change is the qml property of qtoverlay.

Note: For JetPack 4.5, you must add a color space conversion before the nvoverlaysink element.

gst-launch-1.0 nvarguscamerasrc ! nvvidconv ! qtoverlay qml=animation.qml ! nvvidconv ! "video/x-raw(memory:NVMM),format=I420" ! nvoverlaysink

NvArgus camera to display

Take the file main.qml used in the PC example.

This example manages the memory throughout the pipeline in NVMM format, never bringing the data to userspace.

gst-launch-1.0 nvarguscamerasrc ! nvvidconv ! qtoverlay qml=main.qml ! nvoverlaysink

Note: For JetPack 4.5, you must add a color space conversion before the nvoverlaysink element.

gst-launch-1.0 nvarguscamerasrc ! nvvidconv ! qtoverlay qml=main.qml ! nvvidconv ! "video/x-raw(memory:NVMM),format=I420" ! nvoverlaysink

If you want to use X for displaying:

gst-launch-1.0 nvarguscamerasrc ! nvvidconv ! qtoverlay qml=main.qml ! nvvidconv ! nvvidconv ! ximagesink sync=false

The first nvvidconv converts from any format to RGBA. The two nvvidconv elements at the end convert from RGBA to a format accepted by ximagesink and transfer the data from NVMM memory to user-space memory.

V4L2 camera to display

Take the file main.qml used in the PC example.

Except for v4l2src, this example manages the memory in NVMM format, never bringing the data to userspace.

gst-launch-1.0 v4l2src ! nvvidconv ! qtoverlay qml=main.qml ! nvoverlaysink

Capturing and saving into a file

A physical display is not needed to use the GstQtOverlay plug-in. However, the display environment must still be set so that the Qt engine has a reference to the available GPU resources, especially for EGL.

You can even have pipelines with multiple paths. The following example uses a 4K camera as the capture device:

With NVMM support:

gst-launch-1.0 nvarguscamerasrc \
  ! nvvidconv \
  ! qtoverlay qml=main.qml \
  ! nvvidconv \
  ! nvv4l2h264enc maxperf-enable=1 \
  ! h264parse \
  ! qtmux ! filesink location=test.mp4 -e

Some important aspects of the NVMM support observed from the pipeline shown above:

1. Each QtOverlay element should have a fresh NVMM buffer since it works in-place. It is achieved by using an nvvidconv element.

2. The storage can represent a bottleneck. It is recommended to handle the file dumping on a fast storage unit such as SSD or RAM disk.

Without NVMM

The following pipeline uses qtoverlay in Non-NVMM mode. In this case, take into account the video conversion and the encoding, which are done in the CPU. Besides, there is a need for video conversion from RGBA because of QT compatibility.

gst-launch-1.0 nvarguscamerasrc \
  ! nvvidconv \
  ! qtoverlay qml=main.qml \
  ! videoconvert \
  ! x264enc \
  ! h264parse \
  ! qtmux ! filesink location=test.mp4 -e

For an even simpler case:

gst-launch-1.0 videotestsrc \
  ! qtoverlay qml=main.qml \
  ! videoconvert \
  ! x264enc \
  ! h264parse \
  ! qtmux ! filesink location=test.mp4 -e

The pipelines shown above were tested on a Jetson Nano, performing faster than 30 fps.

4. Stream video over network from RTSP source

Let's assume:

- SOURCE_ADRESS=rtsp://10.251.101.176:5004/test (origin RTSP video)

- DEST_ADRESS=10.251.101.57 (destination computer/board)

- PORT=5004 (source/destination port)

On an NVIDIA Jetson board:

gst-launch-1.0 rtspsrc location=$SOURCE_ADRESS ! rtph264depay ! h264parse ! omxh264dec ! nvvidconv ! video/x-raw ! qtoverlay qml=main.qml ! videoconvert ! video/x-raw,format=I420 ! queue ! jpegenc ! rtpjpegpay ! udpsink host=$DEST_ADRESS port=$PORT sync=false enable-last-sample=false max-lateness=00000000 -v

IMX6 examples

Before running the examples below, make sure you have set up the environment correctly.

export QT_EGLFS_IMX6_NO_FB_MULTI_BUFFER=1

export QT_QPA_PLATFORM=eglfs

export DISPLAY=:0.0

Now, you are able to run the following examples:

Display a text overlay

gst-launch-1.0 videotestsrc is-live=true ! 'video/x-raw, width=640, height=480, framerate=30/1' ! queue ! imxg2dvideotransform ! \
  queue ! qtoverlay qml=/main.qml ! queue ! imxeglvivsink qos=false sync=false enable-last-sample=false

Saving a video with a text overlay

gst-launch-1.0 videotestsrc is-live=true ! 'video/x-raw, width=640, height=480, framerate=30/1' ! queue ! imxg2dvideotransform ! \
  queue ! qtoverlay qml=/main.qml ! queue ! imxipuvideotransform input-crop=false ! \
  capsfilter caps=video/x-raw,width=640,height=480,format=NV12 ! imxvpuenc_h264 bitrate=4000 gop-size=15 idr-interval=15 ! \
  capsfilter caps=video/x-h264 ! mpegtsmux alignment=7 ! queue ! filesink location=test.mp4 -e

Network streaming with a text overlay

Send stream to a host with IP address $IP

On the IMX6: video source

gst-launch-1.0 videotestsrc is-live=true ! 'video/x-raw, width=640, height=480, framerate=30/1' ! queue ! imxg2dvideotransform ! \
  queue ! qtoverlay qml=/main.qml ! queue ! imxipuvideotransform input-crop=false ! \
  capsfilter caps=video/x-raw,width=640,height=480,format=NV12 ! imxvpuenc_h264 bitrate=4000 gop-size=15 idr-interval=15 ! \
  capsfilter caps=video/x-h264 ! mpegtsmux alignment=7 ! queue ! udpsink async=false sync=false host=$IP port=5012

On the host: video receiver

gst-launch-1.0 udpsrc address=$IP port=5012 ! queue ! tsdemux ! identity single-segment=true ! queue ! decodebin ! \
  queue ! fpsdisplaysink sync=false -v

IMX8 examples

Before running the examples below, make sure you have set up the environment correctly.

export QT_QPA_PLATFORM=wayland

export DISPLAY=:0.0

Now, you are able to run the following examples:

Save a video overlay using a video test source

gst-launch-1.0 videotestsrc is-live=1 ! "video/x-raw,width=1920,height=1080,format=RGBA" ! qtoverlay qml=main.qml ! vpuenc_h264 ! avimux ! filesink location=video.avi -e

Save a video overlay using a camera source

gst-launch-1.0 v4l2src device=/dev/video1 ! imxvideoconvert_g2d ! "video/x-raw,format=RGBA" ! qtoverlay qml=gst-libs/gst/qt/main.qml ! perf ! vpuenc_h264 ! avimux ! filesink location=video.avi -e

Performance

To measure the performance, we have used our GStreamer Perf Tool gst-perf.

Jetson Xavier NX

For testing purposes, take into account the following points:

- Maximum performance mode enabled: all cores, and Jetson clocks enabled

- Jetpack 4.4 (4.2.1 or earlier is not recommended)

- Base installation

- The GstQtOverlay is surrounded by queues to measure the actual capability of GstQtOverlay

For measuring and contrasting the performances with and without NVMM support, we used the following pipelines:

NVMM:

gst-launch-1.0 videotestsrc pattern=black ! 'video/x-raw, width=1920, height=1080' ! queue ! nvvidconv ! queue ! 'video/x-raw(memory:NVMM)' ! qtoverlay qml=gst-libs/gst/qt/main.qml ! perf ! queue ! nvvidconv ! 'video/x-raw' ! fakesink sync=false

No NVMM

gst-launch-1.0 videotestsrc pattern=black ! 'video/x-raw, width=1920, height=1080' ! queue ! qtoverlay qml=gst-libs/gst/qt/main.qml ! perf ! queue ! fakesink sync=false

The results obtained:

| Measurement | Jetson NX |

|---|---|

| No NVMM | 190 fps |

| NVMM | 265 fps |
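To relate these frame rates to a per-frame processing time, the rate can be inverted. The snippet below is only an illustrative calculation on the two figures in the table; the function name frame_time_ms is hypothetical.

```shell
#!/bin/sh
# Sketch: convert a measured frame rate into a per-frame time in milliseconds.
frame_time_ms() {
    awk -v fps="$1" 'BEGIN { printf "%.2f\n", 1000 / fps }'
}

frame_time_ms 190   # No NVMM: prints 5.26
frame_time_ms 265   # NVMM:    prints 3.77
```

Both values are well below the 33.3 ms available per frame at 30 fps, which leaves headroom for the rest of the pipeline.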

With the addition of native UYVY and NV12 support for NVMM memory, we compared, for each format, converting through nvvidconv against using the native support. The following pipelines were used:

NVMM using nvvidconv:

gst-launch-1.0 videotestsrc pattern=black ! 'video/x-raw, width=1920, height=1080, format=RGBA' ! queue ! nvvidconv ! queue ! 'video/x-raw(memory:NVMM), format=RGBA' ! qtoverlay qml=gst-libs/gst/qt/main.qml ! perf ! queue ! nvvidconv ! 'video/x-raw' ! fakesink sync=false

NVMM with native UYVY and NV12 support:

gst-launch-1.0 videotestsrc pattern=black ! 'video/x-raw, width=1920, height=1080, format=NV12' ! queue ! nvvidconv ! queue ! 'video/x-raw(memory:NVMM), format=NV12' ! qtoverlay qml=gst-libs/gst/qt/main.qml ! perf ! queue ! nvvidconv ! 'video/x-raw' ! fakesink sync=false

The results obtained:

| Measurement | RGBA | UYVY | NV12 |

|---|---|---|---|

| NVMM with nvvidconv | 227 fps | 200 fps | 177 fps |

| NVMM with native formats | 227 fps | 172 fps | 177 fps |

CPU usage

Taking the following pipelines as reference:

No NVMM

gst-launch-1.0 videotestsrc is-live=true ! 'video/x-raw, width=1920, height=1080' ! qtoverlay qml=gst-libs/gst/qt/main.qml ! 'video/x-raw, width=1920, height=1080' ! fakesink

NVMM:

gst-launch-1.0 videotestsrc is-live=true ! 'video/x-raw, width=1920, height=1080' ! nvvidconv ! 'video/x-raw(memory:NVMM), width=1920, height=1080' ! qtoverlay qml=gst-libs/gst/qt/main.qml ! nvvidconv ! 'video/x-raw, width=1920, height=1080' ! fakesink sync=false

Results

| Measurement | No NVMM | NVMM |

|---|---|---|

| GstQtOverlay | 16.5% | 7.3% |

| Rest of pipeline | 14% | 27.8% |

| Total | 30.5% | 35.1% |

The total consumption of the pipeline is higher in the NVMM case since there are more elements. The GstQtOverlay consumes less CPU in NVMM mode.

Jetson Nano

For testing purposes, take into account the following points:

- Maximum performance mode enabled: all cores, and Jetson clocks enabled

- Jetpack 4.5 (4.2.1 or earlier is not recommended)

- Base installation

- The GstQtOverlay is surrounded by queues to measure the actual capability of GstQtOverlay

For measuring and contrasting the performances with and without NVMM support for every format, we used the following pipelines:

NVMM using nvvidconv:

gst-launch-1.0 videotestsrc pattern=black ! 'video/x-raw, width=1920, height=1080, format=NV12' ! queue ! nvvidconv ! queue ! 'video/x-raw(memory:NVMM), format=RGBA' ! qtoverlay qml=gst-libs/gst/qt/main.qml ! perf ! queue ! nvvidconv ! 'video/x-raw' ! fakesink sync=false

NVMM with native UYVY and NV12 support:

gst-launch-1.0 videotestsrc pattern=black ! 'video/x-raw, width=1920, height=1080, format=NV12' ! queue ! nvvidconv ! queue ! 'video/x-raw(memory:NVMM), format=NV12' ! qtoverlay qml=gst-libs/gst/qt/main.qml ! perf ! queue ! nvvidconv ! 'video/x-raw' ! fakesink sync=false

No NVMM

gst-launch-1.0 videotestsrc pattern=black ! 'video/x-raw, width=1920, height=1080' ! queue ! qtoverlay qml=gst-libs/gst/qt/main.qml ! perf ! queue ! fakesink sync=false

The results obtained:

| Measurement | RGBA | UYVY | NV12 |

|---|---|---|---|

| No NVMM | 57 fps | N/A | N/A |

| NVMM with nvvidconv | 121 fps | 67 fps | 61 fps |

| NVMM with native formats | 123 fps | 67 fps | 61 fps |

Although using the native formats may not provide large performance gains here, since nvvidconv is still used to upload to NVMM memory, it allows connecting directly to cameras that output NV12 in NVMM memory, such as through the nvarguscamerasrc element.

CPU usage

Taking the following pipelines as reference:

No NVMM

gst-launch-1.0 videotestsrc is-live=true ! 'video/x-raw, width=1920, height=1080' ! qtoverlay qml=gst-libs/gst/qt/main.qml ! perf print-cpu-load=true ! 'video/x-raw, width=1920, height=1080' ! fakesink

NVMM:

gst-launch-1.0 videotestsrc is-live=true ! 'video/x-raw, width=1920, height=1080' ! nvvidconv ! 'video/x-raw(memory:NVMM), width=1920, height=1080' ! qtoverlay qml=gst-libs/gst/qt/main.qml ! perf print-cpu-load=true ! nvvidconv ! 'video/x-raw, width=1920, height=1080' ! fakesink sync=false

Results

| Measurement | No NVMM | NVMM |

|---|---|---|

| GstQtOverlay | 2% | 2% |

| Rest of pipeline | 17% | 13% |

| Total | 19% | 15% |

Running Without a Graphics Server

Many embedded systems run without a graphics server. The most common graphics servers would be X11 (Xorg) or Wayland (Weston). QtOverlay may run without a graphics server by using purely EGL.

To run without a graphics server set the following variables:

export QT_QPA_EGLFS_INTEGRATION=none

export QT_QPA_PLATFORM=eglfs

For example, the following pipeline will run in a Jetson platform without any graphics server running:

QT_QPA_EGLFS_INTEGRATION=none QT_QPA_PLATFORM=eglfs GST_DEBUG=2 gst-launch-1.0 videotestsrc ! nvvidconv ! qtoverlay qml=main.qml ! nvvidconv ! video/x-raw\(memory:NVMM\),format=I420 ! nvoverlaysink
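In a launch script shared between headless and desktop setups, the variables above could be exported only when no graphics server appears to be running. This is a sketch under a stated assumption: treating empty DISPLAY and WAYLAND_DISPLAY variables as "no graphics server" is a heuristic chosen for illustration, and the function name setup_headless_qt is hypothetical.

```shell
#!/bin/sh
# Sketch: export the EGL-only Qt settings when no graphics server is detected.
setup_headless_qt() {
    # Assumed heuristic: X11 sessions set DISPLAY, Wayland sessions set
    # WAYLAND_DISPLAY. If both are empty, fall back to pure EGL.
    if [ -z "${DISPLAY:-}" ] && [ -z "${WAYLAND_DISPLAY:-}" ]; then
        export QT_QPA_EGLFS_INTEGRATION=none
        export QT_QPA_PLATFORM=eglfs
    fi
}

# Example: call setup_headless_qt before launching the pipeline shown above.
```

If either variable is set, the function leaves the environment untouched, so an existing X11 or Wayland configuration keeps working.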

GstQtOverlay FAQ

- Where can I get GstQtOverlay?

You can purchase it at the RidgeRun Online Store. Please refer to the Contact Us section of this wiki to reach out to RidgeRun if you have any questions.

- Is the source code delivered with the purchase?

Yes. After the purchase, the complete source code is delivered.

- Can I use external events to interact with my GUI?

Yes. See GStreamer Properties.

- Am I limited to a single QML?

No. As with any regular QML-powered application, events can trigger the loading of other QML files. This allows the user to build state-pattern-like apps with ease.

- Can I use the mouse and/or keyboard events?

Not yet. At least not out-of-the-box.

- Is QML enough for my GUI?

Yes (most likely). QML support is great: there is a lot of documentation and many examples available. QML is competitive with HTML5 in cross-platform app development.

- Can I create my own custom QML components?

Yes. See the QT QML module guide.

- Can I use animations?

Yes.

- Does my HW support GstQtOverlay?

Check Supported platforms.

- Do I need a graphics server running?

No, see Running Without a Graphics Server.

Troubleshooting

1. Memory leak when using GStreamer Daemon together with GstQtOverlay

The Qt library requests thread contexts from GLib that may interfere with the ones requested by Gstd. If you notice a memory leak, run gstd with the following environment variable prepended:

QT_NO_GLIB=1 gstd

Known issues

1. NVMM support on Jetson is recommended for Jetpack 4.4 or later.

2. NVMM support does not support splitmux, since it is not capable of allocating NVMM buffers.

For direct inquiries, please refer to the contact information available on our Contact page. Alternatively, you may complete and submit the form provided at the same link. We will respond to your request at our earliest opportunity.