GStreamer Buffer Synchronization: Basics and foundation

Sensor Synchronization Foundations

Sensor synchronization is the process of aligning the data streams from multiple sensors (such as LiDARs, radars, and cameras) so that their outputs correspond to the same real-world event or moment in time. This alignment is essential for accurate sensor fusion because it lets the perception system correlate information correctly across modalities. Synchronization can be achieved through hardware triggers, timestamping against a common clock, or post-processing techniques, depending on the application requirements and system constraints.

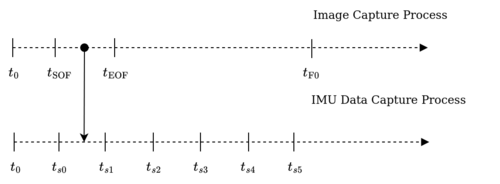

For instance, video stabilization requires the camera sensor to be synchronized with the inertial measurement unit (IMU).

Other critical applications where synchronization is mandatory are:

- Sensor fusion: combining IMUs, LiDARs, radars, and cameras in automotive and robotics applications.

- Video-audio sync: synchronizing the audio with the images is critical in video applications.

- Multi-camera: image stitching, bird's-eye view, and stereo vision require proper timestamping and synchronization to match reality.
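Even when every sensor timestamps its buffers against a common clock, a stitching or fusion stage still has to decide which buffers belong together. The sketch below pairs each buffer of a reference stream with the nearest-in-time buffer of a second stream and drops pairs that are further apart than a tolerance. It is a minimal illustration, assuming sorted capture times in seconds on a shared clock; the function name `pair_by_timestamp` and the half-frame tolerance are hypothetical choices, not part of GStreamer or of the original article.

```python
from bisect import bisect_left


def pair_by_timestamp(ref_ts, other_ts, tolerance):
    """Pair each reference timestamp with the closest timestamp in other_ts.

    Both lists hold capture times (seconds) from a common clock and must be
    sorted in ascending order. Pairs further apart than `tolerance` are dropped.
    """
    pairs = []
    for t in ref_ts:
        # Index of the first element in other_ts that is >= t.
        i = bisect_left(other_ts, t)
        # The closest match is either the element just before t or just after it.
        candidates = [j for j in (i - 1, i) if 0 <= j < len(other_ts)]
        if not candidates:
            continue
        best = min(candidates, key=lambda j: abs(other_ts[j] - t))
        if abs(other_ts[best] - t) <= tolerance:
            pairs.append((t, other_ts[best]))
    return pairs


if __name__ == "__main__":
    fps = 30
    tolerance = 0.5 / fps  # hypothetical choice: half a frame period
    cam_a = [0.000, 0.033, 0.067]
    cam_c = [0.040, 0.073, 0.107]  # hypothetical stream that started ~40 ms late
    print(pair_by_timestamp(cam_a, cam_c, tolerance))
```

With these hypothetical values, the first buffer of `cam_a` has no partner, and its second buffer is paired with the first buffer of `cam_c`. Pairing by index instead would have matched buffer 0 with buffer 0 and silently fused captures taken more than a frame apart.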

What happens when synchronization is absent?

Without synchronization, the sensors of a multi-sensor system may start capturing at different times. As a consequence, the first buffers of the streams do not share the same real-world capture time, so buffers with the same index no longer describe the same instant.

In the picture, frame 0 of each sensor starts at a different time. The situation becomes more critical when the time difference exceeds the frame period (the inverse of the frame rate). The first two streams are close, with a difference of 4 ms, whereas the third stream started much earlier: its offset exceeds 33 ms, which is more than one full frame period in a 30 fps capture.
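The comparison against the frame period can be made concrete with a short calculation. The sketch below expresses each stream's start offset relative to the first stream, both in milliseconds and in frames, and flags offsets larger than one frame period. The start times used in the usage line are hypothetical values chosen to mirror the description (a 4 ms difference and a third stream starting more than 33 ms earlier); they are not read from the picture, and `frame_offsets` is an illustrative name.

```python
def frame_offsets(start_times_ms, fps):
    """Describe each stream's start offset relative to the first stream.

    Returns a list of (offset_ms, offset_in_frames, exceeds_one_frame) tuples.
    """
    frame_period_ms = 1000.0 / fps  # the frame period is the inverse of the frame rate
    ref = start_times_ms[0]
    report = []
    for t in start_times_ms:
        offset = t - ref
        frames = offset / frame_period_ms
        report.append((offset, frames, abs(offset) > frame_period_ms))
    return report


if __name__ == "__main__":
    # Hypothetical start times (ms) for three sensors captured at 30 fps.
    for row in frame_offsets([100.0, 104.0, 60.0], fps=30):
        print(row)
```

At 30 fps the frame period is about 33.3 ms, so the 4 ms offset is roughly 0.12 of a frame, while a 40 ms offset is more than a whole frame and shifts which real-world instant each frame index refers to.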

How to synchronize?

Some options to synchronize sensors are:

- Hardware Triggering: All sensors receive a shared electrical pulse to capture data simultaneously. High precision; needs wiring and hardware support.

- PTP (Precision Time Protocol): Synchronizes clocks over Ethernet with sub-microsecond accuracy. Accurate; requires compatible network hardware.

- NTP (Network Time Protocol): Network-based time sync with millisecond precision. Easy setup; less accurate.

- Shared Clock Timestamping: Sensors use a common system clock for timestamping data. Reliable; needs tight integration.
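To give a sense of how PTP aligns clocks, the sketch below shows the offset and path-delay calculation of the delay request-response exchange defined in IEEE 1588: the master timestamps a Sync message (t1), the slave records its arrival (t2), the slave sends a Delay_Req (t3), and the master records its arrival (t4). NTP uses an analogous four-timestamp calculation. This is a minimal sketch, assuming a symmetric network path and ignoring correction fields, filtering, and hardware timestamping; the function name is illustrative.

```python
def ptp_offset_and_delay(t1, t2, t3, t4):
    """Compute the slave clock offset and mean path delay from one PTP exchange.

    t1: master sends Sync           (master clock)
    t2: slave receives Sync         (slave clock)
    t3: slave sends Delay_Req       (slave clock)
    t4: master receives Delay_Req   (master clock)
    Assumes the path delay is identical in both directions.
    """
    offset = ((t2 - t1) - (t4 - t3)) / 2
    mean_path_delay = ((t2 - t1) + (t4 - t3)) / 2
    return offset, mean_path_delay


if __name__ == "__main__":
    # Hypothetical exchange in nanoseconds: 50 us one-way delay,
    # slave clock running 200 us ahead of the master.
    offset, delay = ptp_offset_and_delay(1_000_000, 1_250_000, 1_300_000, 1_150_000)
    print(offset, delay)
```

The slave then corrects its clock by subtracting the computed offset. If the forward and return delays differ, half of the asymmetry ends up in the offset estimate, which is one reason PTP accuracy depends on compatible network hardware.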

What a pair of synchronized streams looks like

Overall, synchronized streams behave more naturally, just as the scene does in reality. In the stitched video, an object moving from one camera's view into the next transitions smoothly, without jumps.

Below, there is a video example that illustrates this: