DeepStream 7.0 examples


This page collects a set of DeepStream 7.0 pipelines used on a Jetson Orin Nano DevKit with JetPack 6.0. It describes some of the DeepStream features for NVIDIA platforms and summarizes how to run the DeepStream 7.0 samples and example pipelines using the default models.

Initialization

Prepare your Jetson and check that all DeepStream SDK prerequisites are fulfilled before using the reference apps and samples.

Dependencies

Make sure the prerequisite packages are installed:

sudo apt install libssl3 libssl-dev libgstreamer1.0-0 gstreamer1.0-tools gstreamer1.0-plugins-good gstreamer1.0-plugins-bad gstreamer1.0-plugins-ugly gstreamer1.0-libav libgstreamer-plugins-base1.0-dev libgstrtspserver-1.0-0 libjansson4 libyaml-cpp-dev

Glib

Sometimes users encounter the following error:

GLib (gthread-posix.c): Unexpected error from C library during 'pthread_setspecific': Invalid argument. Aborting.

The issue is caused by a bug in glib 2.0-2.72, the version that ships with Ubuntu 22.04 by default. To address it, install glib version 2.76.6. First install the build tools:

sudo apt install meson ninja-build

Download sources and install glib.

git clone https://github.com/GNOME/glib.git
cd glib
git checkout 2.76.6
meson build --prefix=/usr
ninja -C build/
cd build/
ninja install

Check the installed version.

pkg-config --modversion glib-2.0
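The version check can also be scripted. The following is a minimal sketch, assuming a `sort` that supports `-V` (as GNU coreutils on Ubuntu does); `version_ge` is a hypothetical helper name, not part of any SDK tooling:

```shell
# Hypothetical helper: succeeds when version $1 is greater than or equal to $2.
version_ge() {
    # sort -V orders version strings, so the smaller one is printed first.
    [ "$(printf '%s\n%s\n' "$1" "$2" | sort -V | head -n 1)" = "$2" ]
}

# Only query pkg-config when it is installed and knows about glib-2.0.
if command -v pkg-config >/dev/null 2>&1 && pkg-config --exists glib-2.0; then
    installed="$(pkg-config --modversion glib-2.0)"
    if version_ge "$installed" "2.76.6"; then
        echo "glib $installed is at least 2.76.6"
    else
        echo "glib $installed is older than 2.76.6"
    fi
fi
```

The comparison is done on whole version strings, so it does not depend on how many dot-separated fields each version has.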

Boost the clocks

Run the following commands to boost the clocks:

sudo nvpmodel -m 0
sudo jetson_clocks

Troubleshooting

With JetPack 6.0 rev2, building the sample apps may fail with an error about the CUDA crt directory, caused by an incomplete JetPack installation:

cc -c -o deepstream_test1_app.o -DPLATFORM_TEGRA -I../../../includes -I /usr/local/cuda-12.2/include -pthread -I/usr/include/gstreamer-1.0 -I/usr/include/aarch64-linux-gnu -I/usr/include/glib-2.0 -I/usr/lib/aarch64-linux-gnu/glib-2.0/include deepstream_test1_app.c

In file included from deepstream_test1_app.c:16:

/usr/local/cuda-12.2/include/cuda_runtime_api.h:148:10: fatal error: crt/host_defines.h: No such file or directory

148 | #include "crt/host_defines.h"

| ^~~~~~~~~~~~~~~~~~~~

compilation terminated.

make: *** [Makefile:53: deepstream_test1_app.o] Error 1

If this issue appears, reinstall JetPack using the following commands:

sudo apt update
sudo apt install nvidia-jetpack
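Before rebuilding, it can help to confirm whether the header named in the error is actually present. This is a minimal sketch; `cuda_has_crt_header` is a hypothetical helper, and the toolkit path is taken from the build log above and may differ on other installations:

```shell
# Hypothetical helper: succeeds when the CUDA toolkit at $1 ships crt/host_defines.h.
cuda_has_crt_header() {
    [ -f "$1/include/crt/host_defines.h" ]
}

# Toolkit path as it appears in the build log; adjust it if yours differs.
cuda_root="/usr/local/cuda-12.2"
if cuda_has_crt_header "$cuda_root"; then
    echo "crt/host_defines.h found under $cuda_root"
else
    echo "crt/host_defines.h missing under $cuda_root"
fi
```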

DeepStream Sample Apps

The DeepStream SDK includes several precompiled reference applications designed to help users understand and leverage the capabilities of DeepStream. Some of these applications can be found at: /opt/nvidia/deepstream/deepstream/sources/apps/sample_apps/.

Follow these instructions to run the deepstream-test1 app example.

Install the dependency packages:

sudo apt-get install libgstreamer-plugins-base1.0-dev libgstreamer1.0-dev libgstrtspserver-1.0-dev libx11-dev

Go to the app directory:

cd /opt/nvidia/deepstream/deepstream/sources/apps/sample_apps/deepstream-test1

Export the CUDA_VER environment variable and compile the app.

export CUDA_VER=12.2
sudo -E make

Run the app:

./deepstream-test1-app dstest1_config.yml
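The CUDA_VER value used in the build step has to match the installed CUDA toolkit. As a minimal sketch, assuming the toolkit lives in a /usr/local/cuda-<version> directory as in the build log shown earlier, the version can be derived from the directory name instead of being typed by hand. `cuda_ver_from_path` is a hypothetical helper:

```shell
# Hypothetical helper: prints the version suffix of a cuda-<version> directory path.
cuda_ver_from_path() {
    # Strip everything up to and including the last "cuda-".
    printf '%s\n' "${1##*cuda-}"
}

# Use the first real (non-symlink) versioned toolkit directory, if one exists.
# Symlinks are skipped because they may carry only a partial version number.
for dir in /usr/local/cuda-*; do
    if [ -d "$dir" ] && [ ! -L "$dir" ]; then
        CUDA_VER="$(cuda_ver_from_path "$dir")"
        export CUDA_VER
        echo "CUDA_VER=$CUDA_VER"
        break
    fi
done
```

If several toolkit versions are installed side by side, the first match may not be the one you want, so check the printed value before building.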

You can explore the other examples by following the instructions in their respective README files, in each application directory located at /opt/nvidia/deepstream/deepstream/sources/apps/sample_apps.
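To see which sample apps ship a README, a short loop over the directory is enough. This is a sketch only: `list_apps_with_readme` is a hypothetical helper, and the README and README.md file names are assumptions about how the files are named:

```shell
# Hypothetical helper: prints each subdirectory of $1 that contains a README file.
list_apps_with_readme() {
    for app in "$1"/*/; do
        # The glob stays literal when nothing matches, so test the directory first.
        [ -d "$app" ] || continue
        if [ -f "${app}README" ] || [ -f "${app}README.md" ]; then
            basename "$app"
        fi
    done
}

list_apps_with_readme /opt/nvidia/deepstream/deepstream/sources/apps/sample_apps
```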

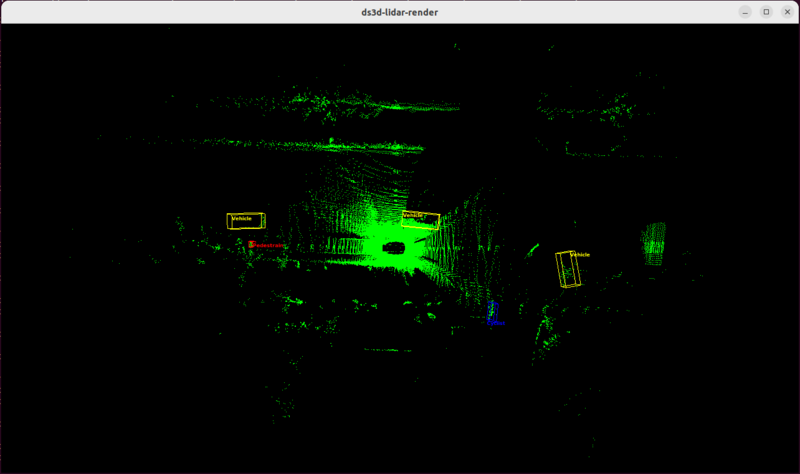

DeepStream Lidar Inference App

DeepStream 7.0 includes a sample application for lidar inference in /opt/nvidia/deepstream/deepstream/sources/apps/sample_apps/deepstream-lidar-inference-app/. The deepstream_lidar_inference_app provides an end-to-end inference sample for lidar point cloud data.

The application reads point cloud data from dataset files, runs inference with the PointPillarNet model, and displays the resulting group of 3D bounding boxes of the detected objects using GLES 3D rendering.

Follow these steps to run the sample app:

- 1. Make sure the prerequisite packages are installed:

sudo apt-get install libgstreamer-plugins-base1.0-dev libgstreamer1.0-dev libgstrtspserver-1.0-dev libx11-dev libyaml-cpp-dev

- 2. Prepare the Triton environment by setting up the Triton backend:

cd /opt/nvidia/deepstream/deepstream/samples
sudo ./triton_backend_setup.sh

This should take a few minutes.

- 3. Generate the engine files:

cd /opt/nvidia/deepstream/deepstream/sources/apps/sample_apps/deepstream-lidar-inference-app/tritonserver/
sudo ./build_engine.sh

cd /opt/nvidia/deepstream/deepstream/sources/apps/sample_apps/deepstream-lidar-inference-app
sudo make
sudo make install

- 4. Run the application:

sudo mkdir data
sudo ./deepstream-lidar-inference-app -c configs/config_lidar_source_triton_render.yaml

Note: config_lidar_triton_infer.yaml is configured to save detection inference results into files.

DeepStream-3D Sensor Fusion Multi-Modal Application and Framework

This sample application showcases multi-modal sensor fusion pipelines for LiDAR and camera data using the DS3D framework. It processes data from up to 4 cameras and their corresponding LiDARs and runs inference with a pre-trained model called V2XFusion, which combines the BEVFusion and BEVHeight models, to show 3D bounding boxes of the detected objects.

The deepstream-3d-lidar-sensor-fusion application demonstrates LiDAR and video data fusion. The pipeline uses DS3D components and follows this process:

Sub-pipeline 1

- 1. LiDAR Data Loading: LiDAR point cloud data is loaded from disk using dataloader, which reads a list of lidar point files into ds3d::datamap.

- 2. LiDAR Data Preprocessing: LiDAR data is preprocessed using lidarpreprocess to generate features, coordinates, and point numbers (feats/coords/N) tensors.

Sub-pipeline 2

- 3.1. Video Source and Preprocessing: The pipeline begins by reading image sequences (for example, JPEG files) and preprocessing them using nvdspreprocess.

- 3.2. Data Bridging: The preprocessed 2D video data is then moved into the common data format ds3d::datamap using ds3d::databridge.

- 4. Data Mixing: Both 2D video and 3D LiDAR data are combined into the same ds3d::datamap using ds3d::datamixer. This mixer ensures the synchronized merging of video and LiDAR data.

- 5. Alignment: The combined data is passed through ds3d::datafilter for alignment, which transforms the 3D LiDAR points into the 2D image coordinate system.

- 6. Inference: Data is then sent for inference through ds3d::datafilter using Triton Inference Server. The inference uses the V2XFusion pre-trained model.

- 7. Rendering: The 2D images are rendered using ds3d::datarender, which also projects the 3D lidar data. The 3D visualization is performed with GLES, displaying multiple renders (texture, lidar, bbox) with different layouts in a single window.

The following image summarizes the above process:

Example

- 1. Install the prerequisite packages:

sudo apt-get install libgstreamer-plugins-base1.0-dev libgstreamer1.0-dev libgstrtspserver-1.0-dev libx11-dev libyaml-cpp-dev

Triton Server

- 2. Prepare the Triton environment:

Set up the Triton backend:

cd /opt/nvidia/deepstream/deepstream/samples
sudo ./triton_backend_setup.sh

Download dataset and model files

- 3. Install pip3:

sudo apt install python3-pip

Install the following Python packages:

pip3 install numpy python-lzf gdown

- 4. Go to the v2xfusion directory and run the prepare script:

cd /opt/nvidia/deepstream/deepstream/sources/apps/sample_apps/deepstream-3d-lidar-sensor-fusion/v2xfusion/scripts
sudo ./prepare.sh

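- 5. Compile the 3D lidar sensor fusion sample app. The -E flag keeps the exported CUDA_VER variable visible to make when it runs under sudo:

cd /opt/nvidia/deepstream/deepstream/sources/apps/sample_apps/deepstream-3d-lidar-sensor-fusion
export CUDA_VER=12.2
sudo -E make
sudo -E make install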

- 6. Run the application:

These examples fuse video and LiDAR data to achieve object detection and 3D tracking.

./deepstream-3d-lidar-sensor-fusion -c ds3d_lidar_video_sensor_v2x_fusion.yml

The command above processes multiple sets of video and LiDAR data concurrently, as it is configured to handle four batches in parallel.

./deepstream-3d-lidar-sensor-fusion -c ds3d_lidar_video_sensor_v2x_fusion_single_batch.yml

The command above is configured for a batch size of one, processing one set of video and LiDAR data at a time.

DeepStream Service Maker APIs

DeepStream Service Maker is a new C++ API that simplifies application development with the DeepStream SDK. It uses CMake to build the application.

To create an application, the following files are needed:

- CMakeLists.txt: For building the application.

- deepstream_app.cpp: C++ file with application AI streaming pipeline and metadata management.

- config.yaml (optional): Pipeline construction.

This API uses GStreamer pipelines to create an AI stream with the nvinfer plugin, which applies an AI model to the multimedia stream. The plugin generates metadata with information about the detected objects.

Fundamental elements for development

Review the following concepts before developing with the DeepStream Service Maker API:

Pipeline API

DeepStream-based AI streaming uses GStreamer pipelines to create the multimedia stream. The pipeline processes buffers and metadata in blocks within the pipeline.

A pipeline is composed of linked elements created from DeepStream plugins. For example:

Pipeline pipeline("sample");
// nvstreammux is the factory name in Deepstream to create a streammuxer Element
// mux is the name of the Element instance
// multiple key value pairs can be appended to configure the added Element
pipeline.add("nvstreammux", "mux", "batch-size", 1, "width", 1280, "height", 720);

To link the elements use the link function:

// Elements with single static input and output
pipeline.link("mux", "infer", "osd", "sink");
// Element with dynamic or multiple input/output
pipeline.link({"src", "mux"}, {"", "sink_%u"});

YAML Configuration

A pipeline can also be defined in a YAML configuration file, which reduces the coding effort. The file should start with the keyword deepstream. For example:

deepstream:
  nodes:
  - type: nvurisrcbin
    name: src
  - type: nvstreammux
    name: mux
    properties:
      batch-size: 1
      width: 1280
      height: 720
  - type: nvinferbin
  ...
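Since the file has to start with the deepstream keyword, a quick sanity check before launching can catch a misplaced or misspelled top-level key. This is a heuristic sketch, not a YAML validator; `starts_with_deepstream_key` is a hypothetical helper and the file name is only an example:

```shell
# Hypothetical helper: succeeds when the first non-blank, non-comment line
# of file $1 is the top-level "deepstream:" key.
starts_with_deepstream_key() {
    first="$(grep -v -e '^[[:space:]]*$' -e '^[[:space:]]*#' "$1" | head -n 1)"
    [ "$first" = "deepstream:" ]
}

# Example file name; point this at your own configuration file.
config="dstest1_config.yaml"
if [ -f "$config" ]; then
    if starts_with_deepstream_key "$config"; then
        echo "$config starts with the deepstream key"
    else
        echo "$config does not start with the deepstream key"
    fi
fi
```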

Buffer

A Buffer works as a wrapper for the data segments flowing through the pipeline. Read-only buffers offer methods for data processing, and read-write buffers offer methods for modifying the data.

Metadata

Pipeline elements generate metadata with the inference results of the AI models. Some of the available metadata classes are: BatchMetaData, FrameMetaData, UserMetaData, ObjectMetadata, ClassifierMetadata, DisplayMetadata and EventMessageUserMetadata. See the DeepStream metadata documentation for more information.

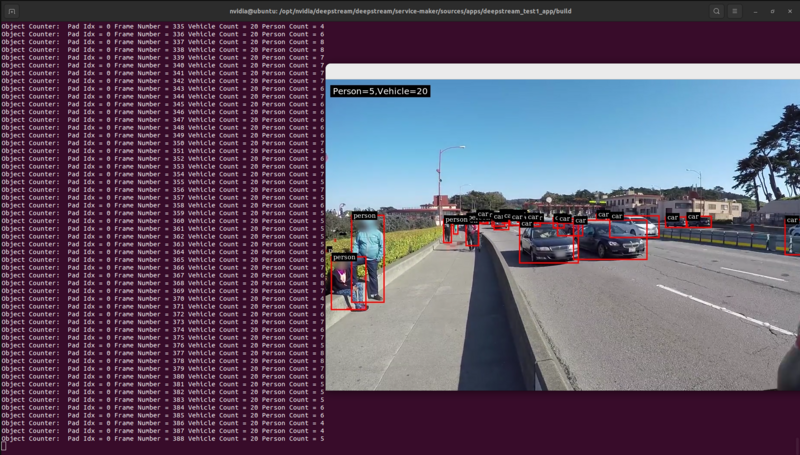

Test example

Sample applications built with the Service Maker API can be found in the directory /opt/nvidia/deepstream/deepstream/service-maker/sources/apps. Use the following instructions to build an example:

- 1. Install cmake:

sudo apt update
sudo apt install cmake

- 2. Go to the example directory

cd /opt/nvidia/deepstream/deepstream/service-maker/sources/apps/deepstream_test1_app

- 3. Create a build directory and compile the application

sudo mkdir build
cd build
sudo cmake ../
sudo make

- 4. To run the application, select an H.264 file as the source:

./deepstream-test1-app /opt/nvidia/deepstream/deepstream/samples/streams/sample_720p.h264

- Or run the application using a YAML file:

./deepstream-test1-app ../dstest1_config.yaml

The example should look like:

Pipelines

DeepStream provides several plugins to construct an inference pipeline. The common steps are the following:

- Capture: The video stream source can be a camera, a video file, or an RTSP stream.

- Decode: Once the frames are captured, they are decoded using the NVDEC accelerator with the nvv4l2decoder element.

- Pre-processing: After decoding, the image can be pre-processed if needed. This step can involve dewarping from a fisheye or 360-degree camera using the nvdewarper plugin, or performing a color space conversion with the nvvideoconvert plugin.

- Batching: The next step batches the frames for optimal inference performance using the nvstreammux plugin.

- Inference: Inference can be done natively with TensorRT using the nvinfer plugin. It can also be done in a native framework such as TensorFlow or PyTorch through Triton Inference Server using the nvinferserver plugin.

- Tracking: An optional step is to track the detected objects with the nvtracker plugin.

- Overlay: To create overlays such as bounding boxes, labels, and segmentation masks, use the nvdsosd plugin.

- Output: Finally, output the inference results using a sink element, send them via an RTSP stream, or save them to a file.
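The stages listed above map directly onto a gst-launch-1.0 description, where each stage is an element and `!` links one element to the next. The following sketch assembles such a description from individual stages and prints it instead of running it; `join_stages` is a hypothetical helper, and the elements and paths are the ones used in the examples later on this page:

```shell
# Hypothetical helper: joins its arguments with " ! ", the gst-launch-1.0 link operator.
join_stages() {
    out=""
    for stage in "$@"; do
        if [ -z "$out" ]; then
            out="$stage"
        else
            out="$out ! $stage"
        fi
    done
    printf '%s\n' "$out"
}

samples=/opt/nvidia/deepstream/deepstream/samples

# Capture and decode, feeding the batching stage through its sink_0 pad.
front="$(join_stages \
    "filesrc location=$samples/streams/sample_720p.mp4" \
    "qtdemux" "h264parse" "nvv4l2decoder" "m.sink_0")"

# Batching, inference, overlay and output.
back="$(join_stages \
    "nvstreammux name=m batch-size=1 width=1280 height=720" \
    "nvinfer config-file-path=$samples/configs/deepstream-app/config_infer_primary.txt" \
    "nvdsosd" "nv3dsink")"

# Print the command rather than executing it, so the sketch is safe on any machine.
echo "gst-launch-1.0 $front $back"
```

Keeping each stage as a separate string makes it easy to insert an optional stage, such as tracking, between inference and overlay.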

Engine files

DeepStream creates TensorRT CUDA engine files for models. When a model with no existing engine file is used, the engine may take several minutes to generate. The engine file is saved and can be reused in later runs to load the model faster.

The first time you run a pipeline with a sample model, use sudo so that the engine file can be saved in the /opt/nvidia/deepstream/deepstream-7.0/samples/models directory for future use.

Note: Engine file creation for the sample models can take up to 25 minutes on Orin NX and Nano platforms.
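To find out whether an engine has already been generated, and so whether the long first run can be skipped, the models directory can be searched. This is a minimal sketch that assumes the generated files use the .engine extension; `list_engine_files` is a hypothetical helper:

```shell
# Hypothetical helper: prints every file ending in .engine below directory $1.
list_engine_files() {
    # Return quietly when the directory does not exist on this machine.
    [ -d "$1" ] || return 0
    find "$1" -type f -name '*.engine'
}

models_dir=/opt/nvidia/deepstream/deepstream-7.0/samples/models
list_engine_files "$models_dir"
```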

Examples

Primary_Detector

Play the sample video with the detector model:

gst-launch-1.0 filesrc location = /opt/nvidia/deepstream/deepstream/samples/streams/sample_720p.mp4 ! qtdemux ! h264parse ! nvv4l2decoder ! m.sink_0 nvstreammux name=m batch-size=1 width=1280 height=720 ! nvinfer config-file-path=/opt/nvidia/deepstream/deepstream/samples/configs/deepstream-app/config_infer_primary.txt ! nvdsosd ! nv3dsink

Save the sample video with the detector model to an MP4 file:

gst-launch-1.0 filesrc location = /opt/nvidia/deepstream/deepstream/samples/streams/sample_720p.mp4 ! qtdemux ! h264parse ! nvv4l2decoder ! m.sink_0 nvstreammux name=m batch-size=1 width=1280 height=720 ! nvinfer config-file-path=/opt/nvidia/deepstream/deepstream/samples/configs/deepstream-app/config_infer_primary.txt ! nvdsosd ! nvv4l2h264enc ! h264parse ! qtmux ! filesink location=primary-detector.mp4

Send stream via RTSP:

gst-launch-1.0 filesrc location = /opt/nvidia/deepstream/deepstream/samples/streams/sample_720p.mp4 ! qtdemux ! h264parse ! nvv4l2decoder ! m.sink_0 nvstreammux name=m batch-size=1 width=1280 height=720 ! nvinfer config-file-path=/opt/nvidia/deepstream/deepstream/samples/configs/deepstream-app/config_infer_primary.txt ! nvdsosd ! nvv4l2h264enc insert-sps-pps=true ! video/x-h264, mapping=/stream1 ! rtspsink service=5000
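To view the stream, a client needs the RTSP URL. The sketch below assumes the stream is served at rtsp://<host>:<service><mapping>, which is an assumption about how rtspsink exposes the service and mapping values used in the pipeline above. `rtsp_url` is a hypothetical helper and 127.0.0.1 is a placeholder for the Jetson's address. The sketch only prints a playbin command rather than running it:

```shell
# Hypothetical helper: composes an RTSP URL from host, port and mapping.
rtsp_url() {
    printf 'rtsp://%s:%s%s\n' "$1" "$2" "$3"
}

# 5000 and /stream1 are the service and mapping values from the pipeline above.
url="$(rtsp_url 127.0.0.1 5000 /stream1)"
echo "gst-launch-1.0 playbin uri=$url"
```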

Primary Detector with Tracking

DeepStream uses the low-level tracker library libnvds_nvmultiobjecttracker.so. The path to this library needs to be specified in the ll-lib-file property.

The NvMultiObjectTracker library supports the multi-object tracking algorithms listed below. The algorithm can be selected with the ll-config-file property, which points to the library configuration; if it is not defined, the library runs with default parameters. The config files are located in the directory /opt/nvidia/deepstream/deepstream/samples/configs/deepstream-app/.

- IOU Tracker

- NvSORT

- NvDeepSORT

- NvDCF

gst-launch-1.0 filesrc location = /opt/nvidia/deepstream/deepstream/samples/streams/sample_720p.mp4 ! qtdemux ! h264parse ! nvv4l2decoder ! m.sink_0 nvstreammux name=m batch-size=1 width=1280 height=720 ! nvinfer config-file-path=/opt/nvidia/deepstream/deepstream/samples/configs/deepstream-app/config_infer_primary.txt ! nvtracker ll-lib-file=/opt/nvidia/deepstream/deepstream/lib/libnvds_nvmultiobjecttracker.so ! nvdsosd ! nv3dsink

Using NvDeepSORT algorithm:

gst-launch-1.0 filesrc location = /opt/nvidia/deepstream/deepstream/samples/streams/sample_720p.mp4 ! qtdemux ! h264parse ! nvv4l2decoder ! m.sink_0 nvstreammux name=m batch-size=1 width=1280 height=720 ! nvinfer config-file-path=/opt/nvidia/deepstream/deepstream/samples/configs/deepstream-app/config_infer_primary.txt ! nvtracker ll-lib-file=/opt/nvidia/deepstream/deepstream/lib/libnvds_nvmultiobjecttracker.so ll-config-file=/opt/nvidia/deepstream/deepstream/samples/configs/deepstream-app/config_tracker_NvDeepSORT.yml ! nvdsosd ! nv3dsink
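To try the other algorithms, only the ll-config-file value changes. The sketch below lists the tracker config files available in the samples directory and prints a matching nvtracker element description for each. It assumes the config files follow the config_tracker_*.yml naming seen in config_tracker_NvDeepSORT.yml; `list_tracker_configs` is a hypothetical helper:

```shell
# Hypothetical helper: prints the tracker config files found in directory $1.
list_tracker_configs() {
    for cfg in "$1"/config_tracker_*.yml; do
        # The glob stays literal when nothing matches, so test the file first.
        [ -f "$cfg" ] || continue
        printf '%s\n' "$cfg"
    done
}

configs=/opt/nvidia/deepstream/deepstream/samples/configs/deepstream-app
lib=/opt/nvidia/deepstream/deepstream/lib/libnvds_nvmultiobjecttracker.so

# Print one nvtracker element description per config file found.
list_tracker_configs "$configs" | while IFS= read -r cfg; do
    echo "nvtracker ll-lib-file=$lib ll-config-file=$cfg"
done
```

Each printed description can replace the nvtracker element in the pipelines above.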
