High performance SD card tuning using the EXT4 file system

Revision as of 19:55, 14 February 2014

While trying to record two 1080P@30fps input channels at the same time, we found that the volume of incoming data overwhelmed the filesystem and eventually stalled the system. The setup used an ext3 filesystem on a Class 10 SD card, which was not fast enough: the system would stall just a few seconds after it started writing the files.

Solution

The solution involves using an ext4 filesystem instead of ext3, and then optimizing this filesystem for better write performance. Additionally, depending on how demanding your GStreamer pipeline is, you may need to tune the kernel's virtual memory (vm) subsystem to avoid peaks in CPU load when the kernel's flusher threads wake up to write, which minimizes the chance of stalling the system.

1. Enable ext4 support in the kernel

Kernel Configuration:

File Systems ->

<*> The Extended 4 (ext4) filesystem

[*] Ext4 extended attributes

[*] Ext4 POSIX Access Control Lists

[*] Ext4 Security Labels

Enable the block layer

[*] Support for large (2TB+) block devices and files
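Once the board boots the rebuilt kernel, ext4 support can be confirmed from userspace. The following is a minimal sketch, not part of the original procedure: the helper name fs_supported is hypothetical, and it assumes the usual /proc/filesystems layout, where the filesystem name is the last field on each line.

```shell
# Hypothetical helper: succeed if a filesystem type appears in a
# /proc/filesystems-style listing (second argument, defaults to the real file).
fs_supported() {
    fstype=$1
    list=${2:-/proc/filesystems}
    # Lines look like "nodev<TAB>sysfs" or "<TAB>ext4"; the name is the last field.
    awk -v want="$fstype" '$NF == want { found = 1 } END { exit !found }' "$list"
}

if fs_supported ext4; then
    echo "ext4 is available in the running kernel"
else
    echo "ext4 is not listed; check the kernel configuration"
fi
```

With ext4 built in (the <*> setting above) the entry should be listed right after boot; if ext4 were built as a module instead, it may only appear once the module is loaded.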

2. Prepare your SD card with an optimized ext4 filesystem

2.1 Create the ext4 partition

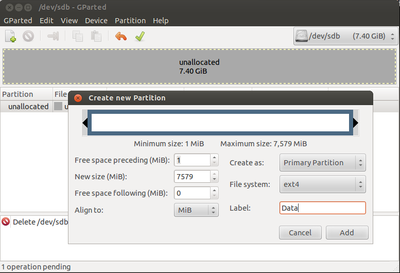

Many tools can prepare an ext4 partition on your SD card. Probably the simplest is gparted.

sudo gparted

See Figure 1 for an example setup of a new ext4 partition on an 8GB SD card using gparted. In this example, the SD card was assigned the device node /dev/sdb, and the new partition will be mapped to /dev/sdb1. The device node may be different on your computer.

Note: Be careful to select the correct device; formatting the wrong one destroys the data on it.

2.2 Optimize the ext4 partition

The optimization consists of selecting data=writeback as the data mode for our filesystem. Of the three available data modes (the other two being data=ordered and data=journal), writeback gives the best write performance out of ext4, because only metadata is journaled and data writes are not ordered with respect to it. The commands below set writeback as the default data mode, remove the journal altogether, and then check the filesystem. For more information, read the "Data Mode" section of the Ext4 Filesystem kernel documentation.

sudo tune2fs -o journal_data_writeback /dev/sdb1

sudo tune2fs -O ^has_journal /dev/sdb1

sudo e2fsck -f /dev/sdb1
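Because tune2fs can only remove the journal while the filesystem is unmounted (or mounted read-only), it may help to check the partition first. The sketch below is an illustration under assumptions: the helper names is_mounted and print_tune_commands are hypothetical, and the check relies on the device node appearing in the first column of /proc/mounts. It only prints the commands; nothing is executed.

```shell
# Hypothetical guard: succeed if the device appears as a mount source in a
# /proc/mounts-style file (second argument, defaults to /proc/mounts).
is_mounted() {
    dev=$1
    mounts=${2:-/proc/mounts}
    awk -v dev="$dev" '$1 == dev { found = 1 } END { exit !found }' "$mounts"
}

# Print the tuning commands for a partition, or refuse if it is mounted.
# Nothing is executed here; review the output before running it as root.
print_tune_commands() {
    dev=$1
    mounts=${2:-/proc/mounts}
    if is_mounted "$dev" "$mounts"; then
        echo "$dev is mounted; unmount it first" >&2
        return 1
    fi
    echo "tune2fs -o journal_data_writeback $dev"
    echo "tune2fs -O ^has_journal $dev"
    echo "e2fsck -f $dev"
}

print_tune_commands /dev/sdb1 || echo "no commands printed"
```

Replace /dev/sdb1 with the device node of your own partition.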

You can check your filesystem setup using:

dumpe2fs /dev/sdb1 | less
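The dumpe2fs output is long, and only two header lines matter for this setup: "Filesystem features" (which should no longer list has_journal) and "Default mount options" (which should list journal_data_writeback). A minimal sketch of a filter for those two lines follows; the helper name check_ext4_tuning is hypothetical, and the sample input is illustrative rather than captured from a real card.

```shell
# Hypothetical helper: read dumpe2fs header output on stdin and summarize
# whether a journal is present and whether writeback is the default data mode.
check_ext4_tuning() {
    awk -F': *' '
        /^Filesystem features:/   { features = " " $2 " " }
        /^Default mount options:/ { defaults = " " $2 " " }
        END {
            journal = index(features, " has_journal ") ? "present" : "absent"
            mode = index(defaults, " journal_data_writeback ") ? "writeback" : "other"
            printf "journal=%s default_data_mode=%s\n", journal, mode
        }'
}

# Sample input resembling the two relevant header lines (illustrative only).
printf '%s\n' \
    'Filesystem features:      ext_attr resize_inode dir_index filetype extent' \
    'Default mount options:    journal_data_writeback user_xattr acl' |
    check_ext4_tuning
```

On a real card, the same filter could be fed with: sudo dumpe2fs -h /dev/sdb1 | check_ext4_tuning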

3. Mount the ext4 partition in your system

Mount the ext4 partition specifying the following flags for optimization:

- noatime,nodiratime - avoid writing access times

- data=writeback - mount with the writeback data mode configured on the filesystem in step 2.2

To mount the ext4 filesystem at /mnt/sd on your board:

mkdir -p /mnt/sd

mount -t ext4 -o noatime,nodiratime,data=writeback /dev/mmcblk0p1 /mnt/sd

Note: These same flags can be specified in the /etc/fstab file.
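As an illustration of the fstab note, the sketch below prints an /etc/fstab line carrying the same flags. The helper name fstab_entry is hypothetical, and the last two fields (dump flag and fsck pass, both 0 here) are an assumption to adjust to your system.

```shell
# Hypothetical helper: print an /etc/fstab line that mounts an ext4 partition
# with the flags used above. Fields: device, mount point, type, options,
# dump flag, fsck pass (0 0 disables both; adjust to your needs).
fstab_entry() {
    printf '%s\t%s\text4\tnoatime,nodiratime,data=writeback\t0\t0\n' "$1" "$2"
}

fstab_entry /dev/mmcblk0p1 /mnt/sd
```

The printed line can be reviewed and then appended to /etc/fstab on the board.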

4. Optimizing the virtual memory subsystem

The kernel's virtual memory subsystem lets you tune how dirty data is written out to disk. In a write-intensive scenario like dual-recording 1080P@30fps, this tuning can be fundamental to avoid crashing the system. Why? In just a fraction of a second we capture a large amount of input data that must eventually be written to disk. If the system waits too long before waking up the kernel's flusher threads, too much data accumulates, and writing it out can consume the CPU completely for several seconds. The consequences include undesired behaviour (e.g. dropped frames while recording), and eventually these CPU load peaks can stall the system. The default setup of the vm subsystem is not optimal for these cases.

Here's the output of the gstperf element running the dual-record pipeline without vm optimizations. The CPU load peaks to 100% when the kernel's flusher threads wake up.

perf0: frames: 1096 current: 29.86 average: 30.00 arm-load: 17

perf1: frames: 1127 current: 30.13 average: 30.00 arm-load: 19

perf0: frames: 1127 current: 29.89 average: 29.99 arm-load: 19

perf1: frames: 1157 current: 29.77 average: 29.99 arm-load: 29

perf0: frames: 1158 current: 30.13 average: 30.00 arm-load: 33

perf1: frames: 1188 current: 29.98 average: 29.99 arm-load: 100 <----- CPU Load peak

perf0: frames: 1188 current: 29.86 average: 29.99 arm-load: 100

perf1: frames: 1219 current: 30.13 average: 30.00 arm-load: 100

perf0: frames: 1219 current: 29.90 average: 29.99 arm-load: 100

perf1: frames: 1249 current: 29.85 average: 29.99 arm-load: 88

perf0: frames: 1250 current: 30.11 average: 29.99 arm-load: 83

perf1: frames: 1280 current: 30.14 average: 30.00 arm-load: 26

perf0: frames: 1280 current: 29.87 average: 29.99 arm-load: 25

perf1: frames: 1310 current: 29.86 average: 29.99 arm-load: 19

perf0: frames: 1311 current: 30.14 average: 29.99 arm-load: 20
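When a recording runs for a long time, spotting the peaks by eye becomes tedious. The sketch below extracts the highest arm-load value from captured gstperf output; the helper name peak_arm_load is hypothetical, and it assumes the log keeps the "arm-load: N" format shown above. The sample lines are taken from that log.

```shell
# Hypothetical helper: read gstperf log text on stdin and print the highest
# arm-load value seen. Works whether entries are one per line or run together.
peak_arm_load() {
    # Put every whitespace-separated token on its own line, then pick the
    # token that follows each "arm-load:" label.
    tr -s ' \t' '\n\n' |
        awk 'prev == "arm-load:" && $0 + 0 > max { max = $0 + 0 }
             { prev = $0 }
             END { print max + 0 }'
}

printf '%s\n' \
    'perf0: frames: 1158 current: 30.13 average: 30.00 arm-load: 33' \
    'perf1: frames: 1188 current: 29.98 average: 29.99 arm-load: 100' \
    'perf0: frames: 1250 current: 30.11 average: 29.99 arm-load: 83' |
    peak_arm_load
```

For these three entries the reported peak is 100.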

There are three parameters of the vm subsystem that are particularly useful for our problem:

- dirty_writeback_centisecs - the interval, in hundredths of a second, at which the kernel flusher threads wake up and write 'old' data out to disk

- dirty_expire_centisecs - the age, in hundredths of a second, at which dirty data becomes eligible for writeout by the kernel flusher threads

- dirty_ratio - the percentage of total available memory (free pages plus reclaimable pages) at which a process that is generating disk writes will itself start writing out dirty data

Using RidgeRun's SDK for the DM8168 Z3 board, these are the default values for these parameters in the system:

/ # cat /proc/sys/vm/dirty_writeback_centisecs

500

/ # cat /proc/sys/vm/dirty_expire_centisecs

3000

/ # cat /proc/sys/vm/dirty_ratio

20
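The three values can also be read in one go, with the centisecond values converted to seconds for readability. This is a minimal sketch; the helper name show_dirty_params is hypothetical, and the optional directory argument exists only so the function can be tried against a copy of the files.

```shell
# Hypothetical helper: print the three writeback parameters from a
# /proc/sys/vm-style directory, converting centiseconds to whole seconds.
show_dirty_params() {
    dir=${1:-/proc/sys/vm}
    for name in dirty_writeback_centisecs dirty_expire_centisecs; do
        value=$(cat "$dir/$name") || return 1
        # 100 centiseconds = 1 second (integer division, fractions are dropped)
        echo "$name=$value ($((value / 100)) s)"
    done
    value=$(cat "$dir/dirty_ratio") || return 1
    echo "dirty_ratio=$value (% of available memory)"
}

show_dirty_params || echo "could not read /proc/sys/vm"
```

With the defaults listed above, the two timing parameters correspond to 5 and 30 seconds respectively.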

There is no fixed recipe for tweaking these parameters; it all depends on your system and your application requirements. Since these parameters affect all processes, you may want to prioritize one parameter over another. For the case presented on this wiki page (dual-recording@30fps), the best tuning we found was to set both dirty_writeback_centisecs and dirty_expire_centisecs to 100. This means that the kernel's flusher threads wake up every second, and that incoming data expires, and becomes eligible to be written by those threads, after one second. These frequent writes (every second) avoided the peaks in CPU load.

echo 100 > /proc/sys/vm/dirty_writeback_centisecs

echo 100 > /proc/sys/vm/dirty_expire_centisecs
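Values written to /proc/sys/vm do not survive a reboot, so it can be convenient to wrap the two writes in a function that also reports the previous values. The following is a sketch under assumptions: the helper name set_flush_interval is hypothetical, and the demonstration runs against a scratch directory seeded with the default values listed earlier rather than the real /proc/sys/vm, which requires root.

```shell
# Hypothetical helper: write the same value to both timing parameters in a
# /proc/sys/vm-style directory and print the previous values so they can be
# restored later. Writing to the real /proc/sys/vm requires root.
set_flush_interval() {
    centisecs=$1
    dir=${2:-/proc/sys/vm}
    for name in dirty_writeback_centisecs dirty_expire_centisecs; do
        old=$(cat "$dir/$name") || return 1
        echo "$centisecs" > "$dir/$name" || return 1
        echo "$name: $old -> $centisecs"
    done
}

# Demonstration against a scratch directory seeded with the default values.
demo=$(mktemp -d)
echo 500 > "$demo/dirty_writeback_centisecs"
echo 3000 > "$demo/dirty_expire_centisecs"
set_flush_interval 100 "$demo"
rm -r "$demo"
```

On the board, calling set_flush_interval 100 from a startup script would reapply the tuning on every boot.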

After setting these parameters, CPU load peaks were no longer detected.

perf0: frames: 1341 current: 29.85 average: 29.99 arm-load: 15

perf1: frames: 1371 current: 30.13 average: 29.99 arm-load: 20 <--- No peaks to 100% anymore

perf0: frames: 1372 current: 29.91 average: 29.99 arm-load: 20

perf1: frames: 1401 current: 29.86 average: 29.99 arm-load: 20

perf0: frames: 1403 current: 30.12 average: 29.99 arm-load: 20

perf1: frames: 1432 current: 30.13 average: 29.99 arm-load: 12

perf0: frames: 1433 current: 29.86 average: 29.99 arm-load: 14

perf1: frames: 1462 current: 29.86 average: 29.99 arm-load: 15

perf0: frames: 1464 current: 30.13 average: 29.99 arm-load: 13

References

http://blogofterje.wordpress.com/2012/01/14/optimizing-fs-on-sd-card/

https://www.kernel.org/doc/Documentation/filesystems/ext4.txt